|

Nondeterministic Turing Machines

In theoretical computer science, a nondeterministic Turing machine (NTM) is a theoretical model of computation whose governing rules specify more than one possible action when in some given situations. That is, an NTM's next state is ''not'' completely determined by its action and the current symbol it sees, unlike a deterministic Turing machine. NTMs are sometimes used in thought experiments to examine the abilities and limits of computers. One of the most important open problems in theoretical computer science is the P versus NP problem, which (among other equivalent formulations) concerns the question of how difficult it is to simulate nondeterministic computation with a deterministic computer. Background In essence, a Turing machine is imagined to be a simple computer that reads and writes symbols one at a time on an endless tape by strictly following a set of rules. It determines what action it should perform next according to its internal ''state'' and ''what symbol it curr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Theoretical Computer Science

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on mathematical aspects of computer science such as the theory of computation, lambda calculus, and type theory. It is difficult to circumscribe the theoretical areas precisely. The Association for Computing Machinery, ACM's ACM SIGACT, Special Interest Group on Algorithms and Computation Theory (SIGACT) provides the following description: History While logical inference and mathematical proof had existed previously, in 1931 Kurt Gödel proved with his incompleteness theorem that there are fundamental limitations on what statements could be proved or disproved. Information theory was added to the field with a 1948 mathematical theory of communication by Claude Shannon. In the same decade, Donald Hebb introduced a mathematical model of Hebbian learning, learning in the brain. With mounting biological data supporting this hypothesis with some modification, the fields of n ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

P = NP Problem

The P versus NP problem is a major unsolved problem in theoretical computer science. In informal terms, it asks whether every problem whose solution can be quickly verified can also be quickly solved. The informal term ''quickly'', used above, means the existence of an algorithm solving the task that runs in polynomial time, such that the time to complete the task varies as a polynomial function on the size of the input to the algorithm (as opposed to, say, exponential time). The general class of questions for which some algorithm can provide an answer in polynomial time is " P" or "class P". For some questions, there is no known way to find an answer quickly, but if one is provided with information showing what the answer is, it is possible to verify the answer quickly. The class of questions for which an answer can be ''verified'' in polynomial time is NP, which stands for "nondeterministic polynomial time".A nondeterministic Turing machine can move to a state that is not d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

NP-complete

In computational complexity theory, a problem is NP-complete when: # it is a problem for which the correctness of each solution can be verified quickly (namely, in polynomial time) and a brute-force search algorithm can find a solution by trying all possible solutions. # the problem can be used to simulate every other problem for which we can verify quickly that a solution is correct. In this sense, NP-complete problems are the hardest of the problems to which solutions can be verified quickly. If we could find solutions of some NP-complete problem quickly, we could quickly find the solutions of every other problem to which a given solution can be easily verified. The name "NP-complete" is short for "nondeterministic polynomial-time complete". In this name, "nondeterministic" refers to nondeterministic Turing machines, a way of mathematically formalizing the idea of a brute-force search algorithm. Polynomial time refers to an amount of time that is considered "quick" for a det ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scott Aaronson

Scott Joel Aaronson (born May 21, 1981) is an American theoretical computer scientist and David J. Bruton Jr. Centennial Professor of Computer Science at the University of Texas at Austin. His primary areas of research are quantum computing and computational complexity theory. Early life and education Aaronson grew up in the United States, though he spent a year in Asia when his father—a science writer turned public-relations executive—was posted to Hong Kong. He enrolled in a school there that permitted him to skip ahead several years in math, but upon returning to the US, he found his education restrictive, getting bad grades and having run-ins with teachers. He enrolled in The Clarkson School, a gifted education program run by Clarkson University, which enabled Aaronson to apply for colleges while only in his freshman year of high school. He was accepted into Cornell University, where he obtained his BSc in computer science in 2000, [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Quantum Superposition

Quantum superposition is a fundamental principle of quantum mechanics. It states that, much like waves in classical physics, any two (or more) quantum states can be added together ("superposed") and the result will be another valid quantum state; and conversely, that every quantum state can be represented as a sum of two or more other distinct states. Mathematically, it refers to a property of solutions to the Schrödinger equation; since the Schrödinger equation is linear, any linear combination of solutions will also be a solution(s) . An example of a physically observable manifestation of the wave nature of quantum systems is the interference peaks from an electron beam in a double-slit experiment. The pattern is very similar to the one obtained by diffraction of classical waves. Another example is a quantum logical qubit state, as used in quantum information processing, which is a quantum superposition of the "basis states" , 0 \rangle and , 1 \rangle . Here , ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Quantum Bit

In quantum computing, a qubit () or quantum bit is a basic unit of quantum information—the quantum version of the classic binary bit physically realized with a two-state device. A qubit is a two-state (or two-level) quantum-mechanical system, one of the simplest quantum systems displaying the peculiarity of quantum mechanics. Examples include the spin of the electron in which the two levels can be taken as spin up and spin down; or the polarization of a single photon in which the two states can be taken to be the vertical polarization and the horizontal polarization. In a classical system, a bit would have to be in one state or the other. However, quantum mechanics allows the qubit to be in a coherent Quantum superposition, superposition of both states simultaneously, a property that is fundamental to quantum mechanics and quantum computing. Etymology The coining of the term ''qubit'' is attributed to Benjamin Schumacher. In the acknowledgments of his 1995 paper, Schumache ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Quantum Computer

Quantum computing is a type of computation whose operations can harness the phenomena of quantum mechanics, such as superposition, interference, and entanglement. Devices that perform quantum computations are known as quantum computers. Though current quantum computers may be too small to outperform usual (classical) computers for practical applications, larger realizations are believed to be capable of solving certain computational problems, such as integer factorization (which underlies RSA encryption), substantially faster than classical computers. The study of quantum computing is a subfield of quantum information science. There are several models of quantum computation with the most widely used being quantum circuits. Other models include the quantum Turing machine, quantum annealing, and adiabatic quantum computation. Most models are based on the quantum bit, or " qubit", which is somewhat analogous to the bit in classical computation. A qubit can be in a 1 or 0 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

BQP Complexity Class Diagram

In computational complexity theory, bounded-error quantum polynomial time (BQP) is the class of decision problems solvable by a quantum computer in polynomial time, with an error probability of at most 1/3 for all instances.Michael Nielsen and Isaac Chuang (2000). ''Quantum Computation and Quantum Information''. Cambridge: Cambridge University Press. . It is the quantum analogue to the complexity class BPP. A decision problem is a member of BQP if there exists a quantum algorithm (an algorithm that runs on a quantum computer) that solves the decision problem with high probability and is guaranteed to run in polynomial time. A run of the algorithm will correctly solve the decision problem with a probability of at least 2/3. Definition BQP can be viewed as the languages associated with certain bounded-error uniform families of quantum circuits. A language ''L'' is in BQP if and only if there exists a polynomial-time uniform family of quantum circuits \, such that * For all ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Breadth-first Search

Breadth-first search (BFS) is an algorithm for searching a tree data structure for a node that satisfies a given property. It starts at the tree root and explores all nodes at the present depth prior to moving on to the nodes at the next depth level. Extra memory, usually a queue, is needed to keep track of the child nodes that were encountered but not yet explored. For example, in a chess endgame a chess engine may build the game tree from the current position by applying all possible moves, and use breadth-first search to find a win position for white. Implicit trees (such as game trees or other problem-solving trees) may be of infinite size; breadth-first search is guaranteed to find a solution node if one exists. In contrast, (plain) depth-first search, which explores the node branch as far as possible before backtracking and expanding other nodes, may get lost in an infinite branch and never make it to the solution node. Iterative deepening depth-first search avoid ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deterministic Turing Machine

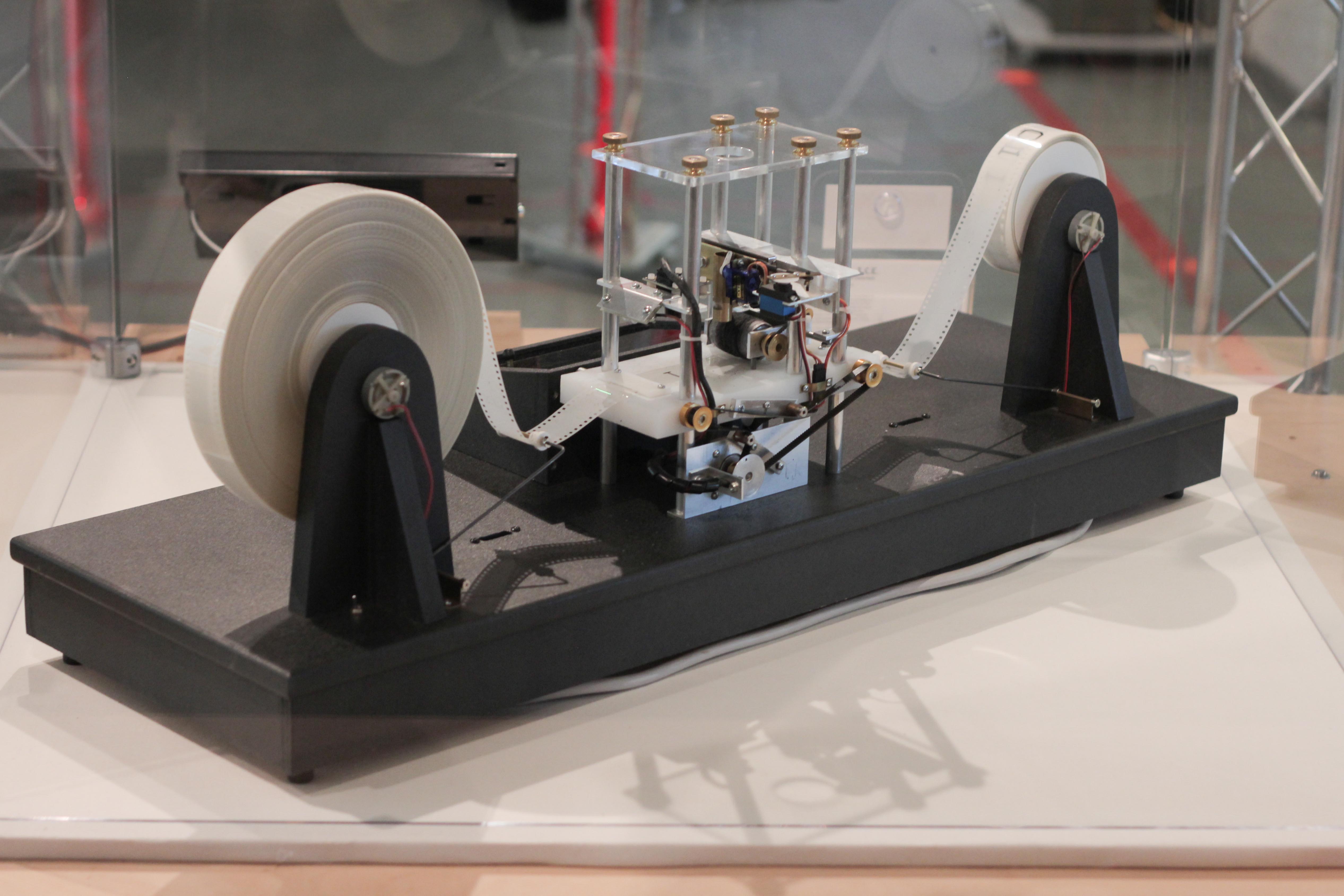

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write and which direction to move is based on a finite table that specifies what to do for each co ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Time Complexity

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally express ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write and which direction to move is based on a finite table that specifies what to do for each co ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

.jpg)