
Natural Exponential Families

In probability and statistics, a natural exponential family (NEF) is a class of probability distributions that is a special case of an exponential family (EF).

The natural exponential families are a subset of the exponential families. In the univariate case, an NEF is an exponential family in which the natural parameter η and the natural statistic T(x) are both the identity, that is, η(θ) = θ and T(x) = x. A distribution in an exponential family with parameter θ can be written with probability density function (PDF)

$$f_X(x\mid\theta) = h(x)\,\exp\big(\eta(\theta)\,T(x) - A(\theta)\big),$$

where h(x) and A(θ) are known functions. A distribution in a natural exponential family with parameter θ can thus be written with PDF

$$f_X(x\mid\theta) = h(x)\,\exp\big(\theta x - A(\theta)\big).$$

Note that slightly different notation is used by the originator of the NEF, Carl Morris; see Morris C. (2006) "Natural exponential families", Encyclopedia of Stat ...
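This is a minimal sketch of the NEF form. It uses the Poisson distribution as the worked case, which the text does not name. Writing λ = e^θ, the Poisson PMF matches h(x) exp(θx − A(θ)) with h(x) = 1/x! and A(θ) = e^θ. The code checks that this form agrees with the textbook Poisson PMF. The function names are hypothetical.

```python
import math

def nef_pmf(x: int, theta: float) -> float:
    """Poisson PMF written in NEF form: h(x) * exp(theta * x - A(theta))."""
    h = 1.0 / math.factorial(x)   # base measure h(x) = 1/x! (Poisson case)
    A = math.exp(theta)           # cumulant function A(theta) = e^theta (Poisson case)
    return h * math.exp(theta * x - A)

def poisson_pmf(x: int, lam: float) -> float:
    """Textbook Poisson PMF lam^x * e^(-lam) / x!, used for comparison."""
    return lam ** x * math.exp(-lam) / math.factorial(x)

lam = 2.0
theta = math.log(lam)  # natural parameter theta = log(lambda)
for x in range(5):
    print(x, nef_pmf(x, theta), poisson_pmf(x, lam))
```

As a side check, the mean of this family equals A′(θ) = e^θ = λ. This is a standard property of NEFs, and the tests verify it numerically.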

Probability

Probability is the branch of mathematics concerning numerical descriptions of how likely an event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and 1, where, roughly speaking, 0 indicates impossibility of the event and 1 indicates certainty ("Kendall's Advanced Theory of Statistics, Volume 1: Distribution Theory", Alan Stuart and Keith Ord, 6th Ed. (2009); William Feller, *An Introduction to Probability Theory and Its Applications* (Vol. 1), 3rd Ed. (1968), Wiley). The higher the probability of an event, the more likely it is that the event will occur. A simple example is the tossing of a fair (unbiased) coin. Since the coin is fair, the two outcomes ("heads" and "tails") are equally probable: the probability of "heads" equals the probability of "tails". Since no other outcomes are possible, the probability of each of "heads" and "tails" is 1/2 (which could also be written ...
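The coin example uses the classical rule for equally likely outcomes. This minimal sketch assumes a finite sample space in which every outcome has the same probability. The function name is hypothetical.

```python
from fractions import Fraction

def classical_probability(event, sample_space):
    """P(event) = (favourable outcomes) / (all outcomes), assuming equally likely outcomes."""
    favourable = [o for o in sample_space if o in event]
    return Fraction(len(favourable), len(sample_space))

coin = ["heads", "tails"]
print(classical_probability({"heads"}, coin))           # 1/2
print(classical_probability({"heads", "tails"}, coin))  # 1 (certain event)
print(classical_probability(set(), coin))               # 0 (impossible event)
```

Exact fractions keep the endpoints 0 and 1 exact, which matches the "impossibility" and "certainty" reading in the text.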

Geometric Distribution

In probability theory and statistics, the geometric distribution is either of two discrete probability distributions:

* The probability distribution of the number *X* of Bernoulli trials needed to get one success, supported on the set {1, 2, 3, ...};
* The probability distribution of the number *Y* = *X* − 1 of failures before the first success, supported on the set {0, 1, 2, ...}.

Which of these is called the geometric distribution is a matter of convention and convenience. These two different geometric distributions should not be confused with each other. Often, the name *shifted* geometric distribution is adopted for the former one (the distribution of the number *X*); however, to avoid ambiguity, it is considered wise to indicate which is intended by mentioning the support explicitly.

The geometric distribution gives the probability that the first occurrence of success requires *k* independent trials, each with success probability *p*. If the probability of succe ...
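This minimal sketch covers both conventions. It assumes the standard PMFs P(X = k) = (1 − p)^(k−1) p and P(Y = k) = (1 − p)^k p, which the excerpt does not spell out. Each function states its support, following the text's advice. The function names are hypothetical.

```python
def pmf_trials(k: int, p: float) -> float:
    """P(X = k): first success on trial k; support {1, 2, 3, ...}."""
    if k < 1:
        return 0.0
    return (1 - p) ** (k - 1) * p

def pmf_failures(k: int, p: float) -> float:
    """P(Y = k): k failures before the first success; support {0, 1, 2, ...}."""
    if k < 0:
        return 0.0
    return (1 - p) ** k * p

p = 0.3
print([round(pmf_trials(k, p), 4) for k in range(1, 5)])
print([round(pmf_failures(k, p), 4) for k in range(0, 4)])
```

Since Y = X − 1, the two lists are the same probabilities shifted by one index. The only difference is the support.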

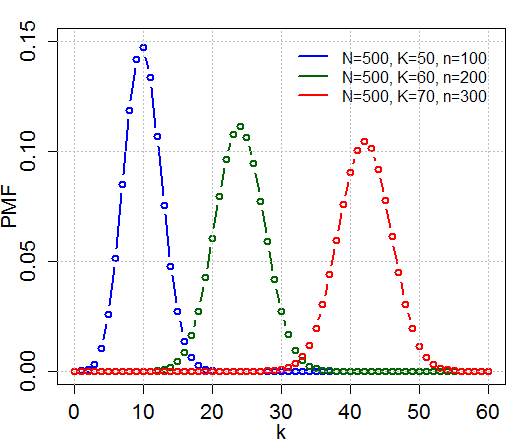

Hypergeometric Distribution

In probability theory and statistics, the hypergeometric distribution is a discrete probability distribution that describes the probability of k successes (random draws for which the object drawn has a specified feature) in n draws, *without* replacement, from a finite population of size N that contains exactly K objects with that feature, wherein each draw is either a success or a failure. In contrast, the binomial distribution describes the probability of k successes in n draws *with* replacement.

The following conditions characterize the hypergeometric distribution:

* The result of each draw (the elements of the population being sampled) can be classified into one of two mutually exclusive categories (e.g. Pass/Fail or Employed/Unemployed).
* The probability of a success changes on each draw, as each draw decreases the population (*sampling without replacement* from a finite population).

A random variable X follows the hyperg ...
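This minimal sketch contrasts drawing without and with replacement. For the hypergeometric case it assumes the standard PMF C(K, k) C(N − K, n − k) / C(N, n), since the excerpt is cut off before stating it. Exact fractions avoid rounding. The function names are hypothetical.

```python
from fractions import Fraction
from math import comb

def hypergeom_pmf(k: int, N: int, K: int, n: int) -> Fraction:
    """P(k successes in n draws WITHOUT replacement; population N with K successes)."""
    if k < 0 or k > n:
        return Fraction(0)
    return Fraction(comb(K, k) * comb(N - K, n - k), comb(N, n))

def binom_pmf(k: int, N: int, K: int, n: int) -> Fraction:
    """Same draws WITH replacement: the success probability stays K/N on every draw."""
    if k < 0 or k > n:
        return Fraction(0)
    p = Fraction(K, N)
    return comb(n, k) * p ** k * (1 - p) ** (n - k)

# Population of 4 items, 2 of them "successes"; draw 2 and ask for exactly 1 success.
print(hypergeom_pmf(1, 4, 2, 2), binom_pmf(1, 4, 2, 2))  # 2/3 1/2
```

The two answers differ because removing the first item changes the odds on the second draw. This is the second condition in the list above.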

Beta Distribution

In probability theory and statistics, the beta distribution is a family of continuous probability distributions defined on the interval [0, 1] in terms of two positive parameters, denoted by *alpha* (*α*) and *beta* (*β*), that appear as exponents of the random variable and control the shape of the distribution. The beta distribution has been applied to model the behavior of random variables limited to intervals of finite length in a wide variety of disciplines. It is a suitable model for the random behavior of percentages and proportions. In Bayesian inference, the beta distribution is the conjugate prior probability distribution for the Bernoulli, binomial, negative binomial and geometric distributions. The formulation of the beta distribution discussed here is also known as the beta distribution of the first kind, whereas *beta distribution of the second kind* is an alternative name for the beta prime distribution. The generalization to mult ...
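This minimal sketch shows the density and the conjugate-prior property for Bernoulli data. It assumes the standard form x^(α−1)(1 − x)^(β−1) / B(α, β) and evaluates the density only on the open interval (0, 1) for simplicity. The function names and the coin-flip data are hypothetical.

```python
import math

def beta_pdf(x: float, a: float, b: float) -> float:
    """Beta(a, b) density on the open interval (0, 1); returns 0 outside it."""
    if not 0.0 < x < 1.0:
        return 0.0
    beta_fn = math.gamma(a) * math.gamma(b) / math.gamma(a + b)  # B(a, b)
    return x ** (a - 1) * (1 - x) ** (b - 1) / beta_fn

def update_beta(a: float, b: float, observations: list[int]) -> tuple[float, float]:
    """Conjugate update for Bernoulli data: each 1 adds to a, each 0 adds to b."""
    successes = sum(observations)
    failures = len(observations) - successes
    return a + successes, b + failures

# Uniform prior Beta(1, 1), then four hypothetical Bernoulli outcomes (1 = success).
a_post, b_post = update_beta(1, 1, [1, 0, 1, 1])
print(a_post, b_post)              # 4 2
print(a_post / (a_post + b_post))  # posterior mean alpha / (alpha + beta)
```

Conjugacy means the posterior is again a beta distribution. Updating therefore reduces to adding counts to α and β.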

Hyperbolic Secant Distribution

In probability theory and statistics, the hyperbolic secant distribution is a continuous probability distribution whose probability density function and characteristic function are proportional to the hyperbolic secant function. The hyperbolic secant function is equivalent to the reciprocal hyperbolic cosine, and thus this distribution is also called the inverse-cosh distribution. Generalisation of the distribution gives rise to the Meixner distribution, also known as the Natural Exponential Family - Generalised Hyperbolic Secant or NEF-GHS distribution.

A random variable follows a hyperbolic secant distribution if its probability density function (pdf) can be related to the following standard form of density function by a location and scale transformation:

$$f(x) = \frac{1}{2}\,\operatorname{sech}\!\left(\frac{\pi}{2}\,x\right),$$

where "sech" denotes the hyperbolic secant function. The cumulative distribution function (cdf) of the standard distribution is a scaled and shifted ve ...
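This minimal sketch gives the standard density, a location-scale member, and a cdf. It assumes the closed-form cdf F(x) = (2/π) arctan(exp(πx/2)). Differentiating F gives back (1/2) sech(πx/2), and the tests check this numerically. The parameter names mu and sigma are hypothetical.

```python
import math

def sech_pdf(x: float) -> float:
    """Standard density f(x) = (1/2) * sech(pi * x / 2), with sech = 1 / cosh."""
    return 0.5 / math.cosh(math.pi * x / 2)

def sech_cdf(x: float) -> float:
    """Assumed closed-form standard cdf F(x) = (2/pi) * arctan(exp(pi * x / 2))."""
    return 2.0 / math.pi * math.atan(math.exp(math.pi * x / 2))

def sech_pdf_loc_scale(x: float, mu: float, sigma: float) -> float:
    """Location-scale transform of the standard density: f((x - mu) / sigma) / sigma."""
    return sech_pdf((x - mu) / sigma) / sigma

print(sech_pdf(0.0), sech_cdf(0.0))
```

The standard density is symmetric about 0, so F(0) = 1/2 and F(x) + F(−x) = 1.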

Carl Morris (statistician)

Carl Neracher Morris is a professor in the Statistics Department of Harvard University and spent several years as a researcher for the RAND Corporation, working on the RAND Health Insurance Experiment.

Carl Morris received his BS in Aeronautical Engineering from the California Institute of Technology in 1960 and then attended Indiana University until 1962. He obtained his Ph.D. in statistics from Stanford University under advisor Charles Stein in 1966. Since 1990, Morris has been at the Harvard Statistics Department and the Harvard Medical School Department of Health Care Policy. He served as chair of the Harvard Statistics Department from 1994 to 2000. Morris has also been a professor at the University of California, Santa Cruz, the Frederick S. Pardee RAND Graduate School, Stanford University, and the University of Texas at Austin, where he served as Director of the Center for Statistical Sciences. Morris is a Fellow of the American Statistical Association, Institute of Ma ...

Hessian Matrix

In mathematics, the Hessian matrix or Hessian is a square matrix of second-order partial derivatives of a scalar-valued function, or scalar field. It describes the local curvature of a function of many variables. The Hessian matrix was developed in the 19th century by the German mathematician Ludwig Otto Hesse and later named after him. Hesse originally used the term "functional determinants".

Suppose f : ℝⁿ → ℝ is a function taking as input a vector **x** ∈ ℝⁿ and outputting a scalar f(**x**) ∈ ℝ. If all second-order partial derivatives of f exist, then the Hessian matrix **H** of f is a square n × n matrix, usually defined and arranged as follows:

$$\mathbf{H}_f = \begin{bmatrix}
\dfrac{\partial^2 f}{\partial x_1^2} & \dfrac{\partial^2 f}{\partial x_1\,\partial x_2} & \cdots & \dfrac{\partial^2 f}{\partial x_1\,\partial x_n} \\
\dfrac{\partial^2 f}{\partial x_2\,\partial x_1} & \dfrac{\partial^2 f}{\partial x_2^2} & \cdots & \dfrac{\partial^2 f}{\partial x_2\,\partial x_n} \\
\vdots & \vdots & \ddots & \vdots \\
\dfrac{\partial^2 f}{\partial x_n\,\partial x_1} & \dfrac{\partial^2 f}{\partial x_n\,\partial x_2} & \cdots & \dfrac{\partial^2 f}{\partial x_n^2}
\end{bmatrix},$$

or, by stating an equation for the coefficients using indices i and j,

$$(\mathbf{H}_f)_{i,j} = \frac{\partial^2 f}{\partial x_i\,\partial x_j}.$$
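This minimal sketch approximates each coefficient (H_f)_{i,j} = ∂²f/∂x_i∂x_j with central finite differences. It is a numerical stand-in that assumes f is smooth; it is not how Hesse defined the matrix. The name hessian_fd and the step h are hypothetical.

```python
def hessian_fd(f, x, h=1e-3):
    """Approximate H[i][j] = d^2 f / (dx_i dx_j) at point x by central differences."""
    n = len(x)
    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            def shifted(si, sj):
                # Evaluate f at x + si*h*e_i + sj*h*e_j (e_i = i-th unit vector).
                y = list(x)
                y[i] += si * h
                y[j] += sj * h
                return f(y)
            H[i][j] = (shifted(1, 1) - shifted(1, -1)
                       - shifted(-1, 1) + shifted(-1, -1)) / (4 * h * h)
    return H

# Example: f(x, y) = x^2 y + y^3, so H = [[2y, 2x], [2x, 6y]]; at (1, 2) that is [[4, 2], [2, 12]].
f = lambda v: v[0] ** 2 * v[1] + v[1] ** 3
print(hessian_fd(f, [1.0, 2.0]))
```

On the diagonal (i = j), the formula reduces to the familiar second difference (f(x + 2h) − 2f(x) + f(x − 2h)) / (4h²).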

Gradient

In vector calculus, the gradient of a scalar-valued differentiable function f of several variables is the vector field (or vector-valued function) ∇f whose value at a point p is the "direction and rate of fastest increase". If the gradient of a function is non-zero at a point p, the direction of the gradient is the direction in which the function increases most quickly from p, and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to maximize a function by gradient ascent. In coordinate-free terms, the gradient of a function f(**r**) may be defined by

$$df = \nabla f \cdot d\mathbf{r},$$

where df is the total infinitesimal change in f for an infinitesimal displacement d**r**, and is seen to be maximal when d**r** is in the direction of the gradi ...
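This minimal sketch shows gradient ascent as described above. It repeatedly steps a small amount in the direction of the gradient. The gradient is estimated by central differences, and the concave objective, step size, and iteration count are hypothetical choices.

```python
def numerical_gradient(f, x, h=1e-6):
    """Central-difference estimate of the gradient of f at point x."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        up[i] += h
        down = list(x)
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad

def gradient_ascent(f, x0, step=0.1, iters=200):
    """Move repeatedly along the gradient, the direction of fastest increase."""
    x = list(x0)
    for _ in range(iters):
        g = numerical_gradient(f, x)
        x = [xi + step * gi for xi, gi in zip(x, g)]
    return x

# Hypothetical concave objective with its maximum (a stationary point) at (1, -2).
def objective(v):
    return -(v[0] - 1) ** 2 - 2 * (v[1] + 2) ** 2

print(numerical_gradient(objective, [0.0, 0.0]))  # approximately [2.0, -8.0]
print(gradient_ascent(objective, [0.0, 0.0]))     # approximately [1.0, -2.0]
```

The iteration stops making progress where the gradient is the zero vector. This is the stationary point mentioned in the text.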

Independent Identically Distributed

In probability theory and statistics, a collection of random variables is independent and identically distributed if each random variable has the same probability distribution as the others and all are mutually independent. This property is usually abbreviated as *i.i.d.*, *iid*, or *IID*. IID was first defined in statistics and finds application in many fields, such as data mining and signal processing.

In statistics, we commonly deal with random samples. A random sample can be thought of as a set of objects that are chosen randomly; more formally, it is "a sequence of independent, identically distributed (IID) random variables". In other words, the terms *random sample* and *IID* mean essentially the same thing. In statistics we usually say "random sample", but in probability it is more common to say "IID".

* Identically distributed means that there are no overall trends: the distribution doesn't fluctuate and all items in the ...
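This minimal sketch draws an i.i.d. sample and contrasts it with a sequence that is not identically distributed. It assumes Python's seeded pseudo-random generator as a stand-in for truly independent draws. The helper names and the Uniform(0, 1) choice are hypothetical.

```python
import random

def iid_sample(n, draw, seed=0):
    """n draws, each from the same distribution `draw`, with no draw depending on another."""
    rng = random.Random(seed)
    return [draw(rng) for _ in range(n)]

def trending_sample(n, seed=0):
    """NOT identically distributed: the mean drifts upward with the index i."""
    rng = random.Random(seed)
    return [rng.random() + i / n for i in range(n)]

sample = iid_sample(10_000, lambda rng: rng.random())  # i.i.d. Uniform(0, 1)
print(sum(sample) / len(sample))  # close to the common mean 0.5

trend = trending_sample(1000)
print(sum(trend[:500]) / 500, sum(trend[500:]) / 500)  # second half has a larger mean
```

The trending sequence shows the kind of "overall trend" that the bullet above rules out.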

Multinomial Distribution

In probability theory, the multinomial distribution is a generalization of the binomial distribution. For example, it models the probability of counts for each side of a *k*-sided die rolled *n* times. For *n* independent trials, each of which leads to a success for exactly one of *k* categories, with each category having a given fixed success probability, the multinomial distribution gives the probability of any particular combination of numbers of successes for the various categories.

When *k* is 2 and *n* is 1, the multinomial distribution is the Bernoulli distribution. When *k* is 2 and *n* is greater than 1, it is the binomial distribution. When *k* is greater than 2 and *n* is 1, it is the categorical distribution. The term "multinoulli" is sometimes used for the categorical distribution to emphasize this four-way relationship (so *n* determines the prefix, and *k* the suffix). The Bernoulli distribution models the outcome of a single Bernoulli trial ...
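This minimal sketch assumes the standard multinomial PMF n! / (x₁! ⋯ x_k!) · p₁^x₁ ⋯ p_k^x_k, which the excerpt does not state. It uses exact fractions, and the function name is hypothetical.

```python
from fractions import Fraction
from math import factorial, prod

def multinomial_pmf(counts, probs):
    """n! / (x_1! ... x_k!) * p_1^x_1 * ... * p_k^x_k, where n = sum(counts)."""
    n = sum(counts)
    coeff = factorial(n)
    for x in counts:
        coeff //= factorial(x)  # stays an integer at every step
    return coeff * prod(Fraction(p) ** x for p, x in zip(probs, counts))

# Fair six-sided die rolled twice: probability of one 1 and one 2.
print(multinomial_pmf([1, 1, 0, 0, 0, 0], [Fraction(1, 6)] * 6))  # 1/18
```

The same function reproduces the special cases in the text. With k = 2 it gives binomial probabilities, and with k = 2 and n = 1 it gives the Bernoulli probability p.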

Posterior Probability Distribution

The posterior probability is a type of conditional probability that results from updating the prior probability with information summarized by the likelihood via an application of Bayes' rule. From an epistemological perspective, the posterior probability contains everything there is to know about an uncertain proposition (such as a scientific hypothesis, or parameter values), given prior knowledge and a mathematical model describing the observations available at a particular time. After the arrival of new information, the current posterior probability may serve as the prior in another round of Bayesian updating. In the context of Bayesian statistics, the posterior probability distribution usually describes the epistemic uncertainty about statistical parameters conditional on a collection of observed data. From a given posterior distribution, various point and interval estimates can be derived, such as the maximum a posteriori (MAP) or the highest posterior density interval (HPDI ...
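This minimal sketch applies Bayes' rule on a finite set of hypotheses, where the posterior is proportional to prior × likelihood. It shows that feeding each posterior back in as the next prior gives the same result as one batch update. It also reads off the MAP hypothesis. The two coin hypotheses and their numbers are hypothetical.

```python
from fractions import Fraction

def posterior(prior, likelihood):
    """Bayes' rule on a finite hypothesis set: posterior proportional to prior * likelihood."""
    unnormalised = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalised.values())
    return {h: w / evidence for h, w in unnormalised.items()}

# Hypothetical hypotheses about a coin's probability of heads, with equal prior weight.
p_heads = {"fair": Fraction(1, 2), "biased": Fraction(4, 5)}
prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}

# Update on three heads at once...
batch = posterior(prior, {h: p ** 3 for h, p in p_heads.items()})

# ...or one head at a time, each posterior serving as the next prior.
sequential = prior
for _ in range(3):
    sequential = posterior(sequential, p_heads)

print(batch["fair"], batch["biased"])      # 125/637 512/637
print(batch == sequential)                 # True
map_estimate = max(batch, key=batch.get)   # maximum a posteriori (MAP) hypothesis
print(map_estimate)                        # biased
```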

Bayesian Inference

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability".

Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derived from a statistical model for the observed data. Bayesian inference computes the posterior probability according to Bayes' theorem: ...
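This minimal sketch applies Bayes' theorem to a single hypothesis H and its complement. It assumes the familiar form P(H | E) = P(E | H) P(H) / P(E), with P(E) obtained from the law of total probability. The screening-test numbers are hypothetical.

```python
from fractions import Fraction

def bayes(prior_h, p_e_given_h, p_e_given_not_h):
    """P(H | E) = P(E | H) P(H) / P(E), with P(E) from the law of total probability."""
    p_e = p_e_given_h * prior_h + p_e_given_not_h * (1 - prior_h)
    return p_e_given_h * prior_h / p_e

# Hypothetical numbers: 1% prior, 90% chance of the evidence under H, 5% under not-H.
post = bayes(Fraction(1, 100), Fraction(9, 10), Fraction(1, 20))
print(post, float(post))  # the exact value is 2/13
```

The posterior stays modest despite the strong likelihood ratio, because the small prior carries through the calculation.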