
Multiple Description Coding

Multiple description coding (MDC) in computing is a coding technique that fragments a single media stream into ''n'' substreams (''n'' ≥ 2) referred to as descriptions. The packets of each description are routed over multiple, (partially) disjoint paths. Any single description is sufficient to decode the media stream, but the quality improves with the number of descriptions received in parallel. The idea of MDC is to provide error resilience to media streams. Since an arbitrary subset of descriptions can be used to decode the original stream, network congestion or packet loss, which are common in best-effort networks such as the Internet, will not interrupt the stream but only cause a (temporary) loss of quality. The quality of a stream can be expected to be roughly proportional to the data rate sustained by the receiver. MDC is a form of data partitioning, thus comparable to layered coding as it i ...
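
As a rough illustration of the idea, the sketch below splits a list of samples into ''n'' descriptions by round-robin subsampling and rebuilds the stream from whichever descriptions arrive. The function names (`make_descriptions`, `reconstruct`, `mean_abs_error`) and the sample-repetition concealment are assumptions made for this example; real MDC schemes are considerably more elaborate.

```python
def make_descriptions(samples, n=2):
    """Split samples into n descriptions by round-robin subsampling (illustrative)."""
    if n < 2:
        raise ValueError("MDC uses at least two descriptions")
    return [samples[k::n] for k in range(n)]


def reconstruct(received, n, length):
    """Rebuild `length` samples from a dict {description index: description}."""
    if not received:
        raise ValueError("at least one description is required")
    out = [None] * length
    for k, desc in received.items():
        for j, value in enumerate(desc):
            out[k + j * n] = value
    # Conceal missing samples by repeating the most recent known sample
    # (or the first known sample if the gap is at the very start).
    last = next(v for v in out if v is not None)
    for i, v in enumerate(out):
        if v is None:
            out[i] = last
        else:
            last = v
    return out


def mean_abs_error(a, b):
    """Average absolute difference between two equally long sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


signal = [0, 1, 2, 3, 4, 5, 6, 7]
descs = make_descriptions(signal, n=2)
both = reconstruct({0: descs[0], 1: descs[1]}, 2, len(signal))
only_even = reconstruct({0: descs[0]}, 2, len(signal))
print(mean_abs_error(signal, both), mean_abs_error(signal, only_even))
```

With both descriptions the stream is recovered exactly; with only one it is still decodable, at reduced quality.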

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and development of both hardware and software. Computing has scientific, engineering, mathematical, technological and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology and software engineering. The term "computing" is also synonymous with counting and calculating. In earlier times, it was used in reference to the action performed by mechanical computing machines, and before that, to human computers. History: The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. Computing is intimately tied to the representation of numbers, though mathematical conc ...

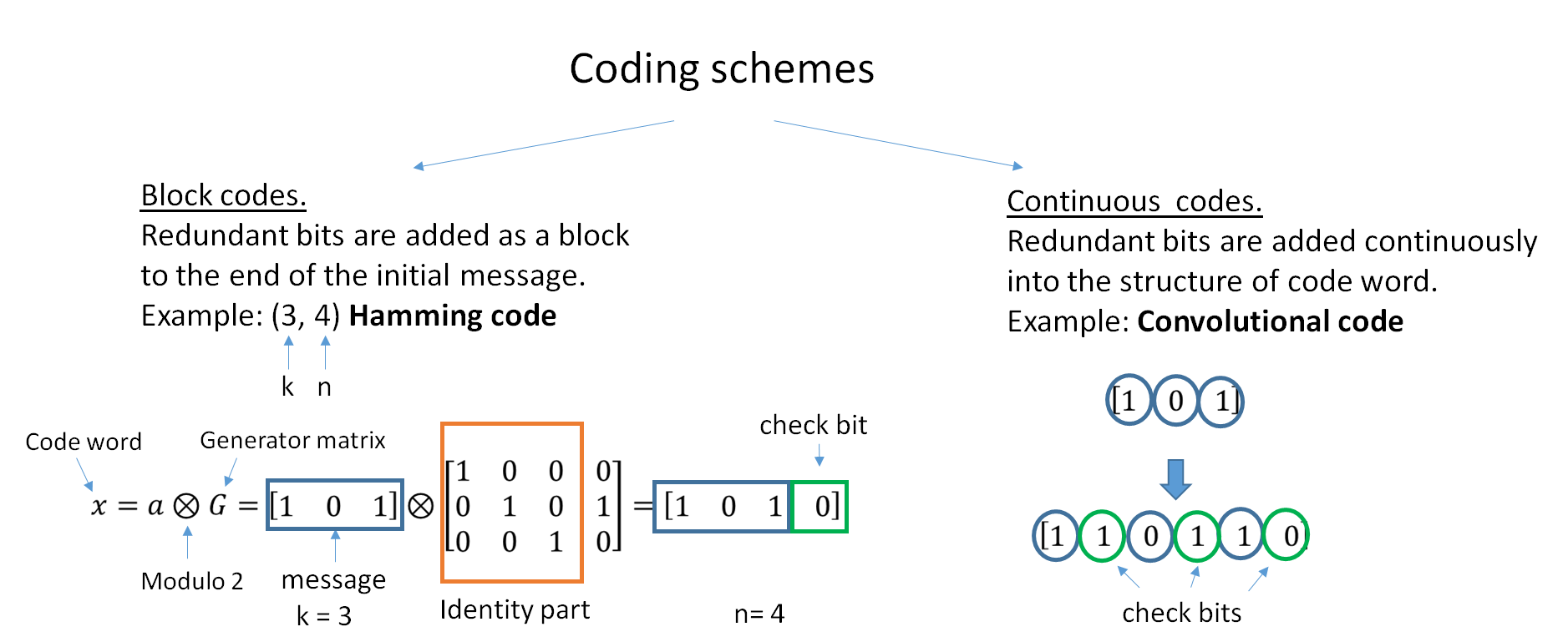

Channel Coding

In computing, telecommunication, information theory, and coding theory, an error correction code (ECC), sometimes called an error-correcting code, is used for controlling errors in data over unreliable or noisy communication channels. The central idea is that the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a h ...
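
The Hamming (7,4) code mentioned above can be sketched in a few lines. The example assumes the common textbook bit layout (parity bits at positions 1, 2 and 4, data bits at positions 3, 5, 6 and 7); the function names are chosen for this illustration only.

```python
def hamming74_encode(data):
    """Encode 4 data bits into a 7-bit codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based position of the error, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


message = [1, 0, 1, 1]
sent = hamming74_encode(message)
corrupted = list(sent)
corrupted[4] ^= 1  # simulate a single bit error on the channel
print(hamming74_decode(corrupted) == message)
```

Three redundant bits per four data bits are the fixed overhead; in exchange, any single bit error is corrected without a retransmission request.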

Streaming Media

Streaming media is multimedia that is delivered and consumed in a continuous manner from a source, with little or no intermediate storage in network elements. ''Streaming'' refers to the delivery method of content, rather than the content itself. Distinguishing delivery method from the media applies specifically to telecommunications networks, as most of the traditional media delivery systems are either inherently ''streaming'' (e.g. radio, television) or inherently ''non-streaming'' (e.g. books, videotape, audio CDs). There are challenges with streaming content on the Internet. For example, users whose Internet connection lacks sufficient bandwidth may experience stops, lags, or poor buffering of the content, and users lacking compatible hardware or software systems may be unable to stream certain content. With the use of buffering of the content for just a few seconds in advance of playback, the quality can be much improved. Livestreaming is the real-time delivery of co ...

Packet (information Technology)

In telecommunications and computer networking, a network packet is a formatted unit of data carried by a packet-switched network. A packet consists of control information and user data; the latter is also known as the ''payload''. Control information provides data for delivering the payload (e.g., source and destination network addresses, error detection codes, or sequencing information). Typically, control information is found in packet headers and trailers. In packet switching, the bandwidth of the transmission medium is shared between multiple communication sessions, in contrast to circuit switching, in which circuits are preallocated for the duration of one session and data is typically transmitted as a continuous bit stream. Terminology: In the seven-layer OSI model of computer networking, ''packet'' strictly refers to a protocol data unit at layer 3, the network layer. A data unit at layer 2, the data link layer, is a ''frame''. In layer 4, the transport layer, the data u ...

Network Congestion

Network congestion in data networking and queueing theory is the reduced quality of service that occurs when a network node or link is carrying more data than it can handle. Typical effects include queueing delay, packet loss or the blocking of new connections. A consequence of congestion is that an incremental increase in offered load leads either only to a small increase or even a decrease in network throughput. Network protocols that use aggressive retransmissions to compensate for packet loss due to congestion can increase congestion, even after the initial load has been reduced to a level that would not normally have induced network congestion. Such networks exhibit two stable states under the same level of load. The stable state with low throughput is known as congestive collapse. Networks use congestion control and congestion avoidance techniques to try to avoid collapse. These include: exponential backoff in protocols such as CSMA/CA in 802.11 and the similar CSMA/CD i ...
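
The exponential backoff mentioned above can be illustrated with a generic truncated binary exponential backoff. This is a minimal sketch: the function names and the `max_exponent` cap are assumptions for the example and are not the parameters of 802.11 or any other specific protocol.

```python
import random


def window_size(attempt, max_exponent=10):
    """Number of candidate wait slots after `attempt` failed transmissions."""
    if attempt < 1:
        raise ValueError("attempt must be at least 1")
    # The window doubles with each failure until it reaches the cap.
    return 2 ** min(attempt, max_exponent)


def backoff_slots(attempt, max_exponent=10, rng=random):
    """Pick a uniformly random wait, in slot times, within the current window."""
    return rng.randrange(window_size(attempt, max_exponent))


for attempt in range(1, 6):
    print(attempt, window_size(attempt), backoff_slots(attempt))
```

Because each sender waits a random time drawn from a window that grows after every failure, repeated collisions spread retransmissions out instead of adding to the congestion.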

Best-effort Network

Best-effort delivery describes a network service in which the network does ''not'' provide any guarantee that data is delivered or that delivery meets any quality of service. In a best-effort network, all users obtain best-effort service. Under best-effort, network performance characteristics such as network delay and packet loss depend on the current network traffic load, and the network hardware capacity. When network load increases, this can lead to packet loss, retransmission, packet delay variation, and further network delay, or even timeout and session disconnect. Best-effort can be contrasted with reliable delivery, which can be built on top of best-effort delivery (possibly without latency and throughput guarantees), or with virtual circuit schemes which can maintain a defined quality of service. Network examples, physical services: The postal service (''snail mail'') physically delivers letters using a best-effort delivery approach. The delivery of a certain lette ...

Internet

The Internet (or internet) is the global system of interconnected computer networks that uses the Internet protocol suite (TCP/IP) to communicate between networks and devices. It is a ''network of networks'' that consists of private, public, academic, business, and government networks of local to global scope, linked by a broad array of electronic, wireless, and optical networking technologies. The Internet carries a vast range of information resources and services, such as the inter-linked hypertext documents and applications of the World Wide Web (WWW), electronic mail, telephony, and file sharing. The origins of the Internet date back to the development of packet switching and research commissioned by the United States Department of Defense in the 1960s to enable time-sharing of computers. The primary precursor network, the ARPANET, initially served as a backbone for interconnection of regional academic and military networks in the 1970s to enable resource shari ...

MPEG-2

MPEG-2 (a.k.a. H.222/H.262 as defined by the ITU) is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard. Main characteristics: MPEG-2 is widely used as the format of digital television signals that are broadcast by terrestrial (over-the-air), cable, and direct broadcast satellite TV systems. It also specifies the format of movies and other programs th ...

MPEG-4

MPEG-4 is a group of international standards for the compression of digital audio and visual data, multimedia systems, and file storage formats. It was originally introduced in late 1998 as a group of audio and video coding formats and related technology agreed upon by the ISO/IEC Moving Picture Experts Group (MPEG) (ISO/IEC JTC 1/SC29/WG11) under the formal standard ISO/IEC 14496 – ''Coding of audio-visual objects''. Uses of MPEG-4 include compression of audiovisual data for Internet video and CD distribution, voice (telephone, videophone) and broadcast television applications. The MPEG-4 standard was developed by a group led by Touradj Ebrahimi (later the JPEG president) and Fernando Pereira. Background: MPEG-4 absorbs many of the features of MPEG-1 and MPEG-2 and other related standards, adding new features such as (extended) VRML support for 3D rendering, object-oriented composite files (including audio, video and VRML objects), support for externally specified Digital ...

Codec

A codec is a device or computer program that encodes or decodes a data stream or signal. ''Codec'' is a portmanteau of coder/decoder. In electronic communications, an endec is a device that acts as both an encoder and a decoder on a signal or data stream, and hence is a type of codec. ''Endec'' is a portmanteau of encoder/decoder. A coder or encoder encodes a data stream or a signal for transmission or storage, possibly in encrypted form, and the decoder function reverses the encoding for playback or editing. Codecs are used in videoconferencing, streaming media, and video editing applications. History: In the mid-20th century, a codec was a device that coded analog signals into digital form using pulse-code modulation (PCM). Later, the name was also applied to software for converting between digital signal formats, including companding functions. Examples: An audio codec converts analog audio signals into digital signals for transmission or encodes them for storage. A receiv ...

Information Theory

Information theory is the scientific study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual informat ...
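
The coin and die comparison can be checked numerically with the standard Shannon entropy formula, H = −Σ p·log₂(p), measured in bits. The helper name `entropy_bits` is an assumption made for this short sketch.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


coin = entropy_bits([0.5, 0.5])   # fair coin: two equally likely outcomes
die = entropy_bits([1 / 6] * 6)   # fair die: six equally likely outcomes
print(coin, die)
```

The fair coin carries 1 bit per flip, while the fair die carries log₂(6), roughly 2.585 bits per roll.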

Layered Coding

Layered coding is a type of data compression for digital video or digital audio where the result of compressing the source video data is not just one compressed data stream, as in other types of compression, but multiple streams, called ''layers'', allowing decompression even if some layers are missing. Overview: With layered coding, multiple data streams or layers are created when compressing the original video stream. This is in contrast to other types of compression, where the result is typically a single data stream. During decompression, all layers can be combined to recreate the original video stream. Additionally, the stream can be decoded even if some layers are missing (though usually a layer hierarchy has to be respected, with a base layer that must be available). If layers are missing, the resulting stream will have reduced visual quality, but will still be usable. Use cases: Layered coding is helpful when the same video stream needs to be available in different qualitie ...
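
The layer hierarchy can be illustrated with a toy two-layer scheme: a coarsely quantized base layer plus an enhancement layer holding the remainder. This is a sketch under stated assumptions (integer samples, a hypothetical quantization step of 4, illustrative function names), not how any particular video codec works.

```python
def encode_layers(samples, step=4):
    """Split integer samples into a coarse base layer and a refinement layer."""
    base = [s // step for s in samples]        # coarse approximation
    enhancement = [s % step for s in samples]  # detail dropped by the base layer
    return base, enhancement


def decode_layers(base, enhancement=None, step=4):
    """Decode from the base layer, refining with the enhancement layer if present."""
    if base is None:
        raise ValueError("the base layer is required")
    if enhancement is None:
        return [b * step for b in base]        # reduced quality, still usable
    return [b * step + e for b, e in zip(base, enhancement)]


samples = [3, 10, 17, 25]
base, enhancement = encode_layers(samples)
full_quality = decode_layers(base, enhancement)
base_only = decode_layers(base)
print(full_quality, base_only)
```

Unlike the descriptions in multiple description coding, the layers are not interchangeable: the enhancement layer is useless without the base layer.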