
Multiple Comparisons Problem

In statistics, the multiple comparisons, multiplicity or multiple testing problem occurs when one considers a set of statistical inferences simultaneously or infers a subset of parameters selected based on the observed values. The more inferences are made, the more likely erroneous inferences become. Several statistical techniques have been developed to address this problem, typically by requiring a stricter significance threshold for individual comparisons, so as to compensate for the number of inferences being made.

History: The problem of multiple comparisons received increased attention in the 1950s with the work of statisticians such as Tukey and Scheffé. Over the ensuing decades, many procedures were developed to address the problem. In 1996, the first international conference on multiple comparison procedures took place in Israel.

Definition: Multiple comparisons arise when a statistical analysis involves multiple simultaneous statistical tests, each of which has a poten ...
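The claim that erroneous inferences become more likely as more inferences are made can be illustrated with a short calculation. The sketch below assumes that all tests are independent and that every null hypothesis is true, which the summary above does not state; the function names are hypothetical, and the Bonferroni rule is used only as one common example of a stricter per-comparison threshold.

```python
def familywise_error_rate(alpha: float, m: int) -> float:
    """Chance of at least one false positive among m tests.

    Assumption (not from the text above): the m tests are independent
    and all null hypotheses are true.
    """
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Per-comparison threshold under the Bonferroni rule (alpha / m)."""
    return alpha / m


if __name__ == "__main__":
    alpha = 0.05
    for m in (1, 5, 20, 100):
        fwer = familywise_error_rate(alpha, m)
        per_test = bonferroni_threshold(alpha, m)
        print(f"m={m:>3}  uncorrected FWER={fwer:.4f}  Bonferroni threshold={per_test:.5f}")
```

With a per-test level of 0.05, the uncorrected familywise error rate already exceeds 0.6 at 20 tests under these assumptions, which is why the per-comparison threshold is tightened.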

Spurious Correlations - Spelling Bee Spiders

Spurious may refer to:

* Spurious relationship in statistics
* Spurious emission or spurious tone in radio engineering
* Spurious key in cryptography
* Spurious interrupt in computing
* Spurious wakeup in computing
* ''Spurious'', a 2011 novel by Lars Iyer

Lars Iyer is a British novelist and philosopher of Indian/Danish parentage. He is best known for a trilogy of short novels: ''Spurious'' (2011), ''Dogma'' (2012), and ''Exodus'' (2013), all published by Melville House. Iyer has been shortlisted f ...

Šidák Correction

In statistics, the Šidák correction, or Dunn–Šidák correction, is a method used to counteract the problem of multiple comparisons. It is a simple method to control the familywise error rate. When all null hypotheses are true, the method provides familywise error control that is exact for tests that are stochastically independent, is conservative for tests that are positively dependent, and is liberal for tests that are negatively dependent. It is credited to a 1967 paper by the statistician and probabilist Zbyněk Šidák.

Usage:

* Given ''m'' different null hypotheses and a familywise alpha level of $\alpha$, each null hypothesis with a p-value lower than $\alpha_{SID} = 1-(1-\alpha)^{\frac{1}{m}}$ is rejected.
* This test produces a familywise Type I error rate of exactly $\alpha$ when the tests are independent of each other and all null hypotheses are true. It is less stringent than the Bonferroni correction, but only slightly. For example, for $\alpha = 0.05$ and ''m'' = ...
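The usage rule above can be sketched in a few lines of Python. The function names and the sample p-values are hypothetical and chosen only for illustration; the threshold is the standard Šidák formula $1-(1-\alpha)^{1/m}$.

```python
def sidak_threshold(alpha: float, m: int) -> float:
    """Per-test significance level 1 - (1 - alpha)**(1/m)."""
    if m < 1:
        raise ValueError("m must be at least 1")
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


def sidak_reject(p_values, alpha: float = 0.05):
    """Return one boolean per p-value: True where the null is rejected."""
    threshold = sidak_threshold(alpha, len(p_values))
    return [p < threshold for p in p_values]


if __name__ == "__main__":
    # Illustrative p-values, not taken from any real study.
    p_values = [0.001, 0.02, 0.2]
    print("threshold:", sidak_threshold(0.05, len(p_values)))
    print("rejected: ", sidak_reject(p_values))
    # The Bonferroni threshold alpha / m is slightly smaller (more stringent).
    print("bonferroni:", 0.05 / len(p_values))
```

Plugging the threshold back into $1-(1-\alpha_{SID})^m$ recovers $\alpha$, which is the sense in which the control is exact for independent tests.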

Journal Of The Royal Statistical Society, Series B

The ''Journal of the Royal Statistical Society'' is a peer-reviewed scientific journal of statistics. It comprises three series and is published by Wiley for the Royal Statistical Society.

History: The Statistical Society of London was founded in 1834, but would not begin producing a journal for four years. From 1834 to 1837, members of the society would read the results of their studies to the other members, and some details were recorded in the proceedings. The first study reported to the society in 1834 was a simple survey of the occupations of people in Manchester, England. Conducted by going door-to-door and inquiring, the study revealed that the most common profession was mill-hands, followed closely by weavers. When founded, the membership of the Statistical Society of London overlapped almost completely with the statistical section of the British Association for the Advancement of Science. In 1837 a volume of ''Transactions of the Statistical Society of London'' were ...

False Positive Rate

In statistics, when performing multiple comparisons, a false positive ratio (also known as fall-out or false alarm ratio) is the probability of falsely rejecting the null hypothesis for a particular test. The false positive rate is calculated as the ratio between the number of negative events wrongly categorized as positive (false positives) and the total number of actual negative events (regardless of classification). The false positive rate (or "false alarm rate") usually refers to the expectancy of the false positive ratio.

Definition: The false positive rate is $FPR=\frac{\mathrm{FP}}{\mathrm{FP}+\mathrm{TN}}$, where $\mathrm{FP}$ is the number of false positives, $\mathrm{TN}$ is the number of true negatives and $N=\mathrm{FP}+\mathrm{TN}$ is the total number of ground truth negatives. The level of significance that is used to test each hypothesis is set based on the form of inference (simultaneous inference vs. selective inference) and its supporting criteria (for example FWER or FDR), that were pre-determined by the researche ...
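As a small illustration of the definition above, the following sketch counts false positives and true negatives from paired ground-truth and predicted labels and then applies FP / (FP + TN). The helper names and the sample labels are hypothetical.

```python
def count_fp_tn(truth, predicted):
    """Count false positives and true negatives among ground-truth negatives."""
    fp = sum(1 for t, p in zip(truth, predicted) if not t and p)
    tn = sum(1 for t, p in zip(truth, predicted) if not t and not p)
    return fp, tn


def false_positive_rate(fp: int, tn: int) -> float:
    """FP / (FP + TN), where FP + TN is the number of actual negatives."""
    negatives = fp + tn
    if negatives == 0:
        raise ValueError("no ground-truth negatives: the rate is undefined")
    return fp / negatives


if __name__ == "__main__":
    # Illustrative labels: True means "positive".
    truth = [False, False, False, False, True]
    predicted = [True, False, False, False, True]
    fp, tn = count_fp_tn(truth, predicted)
    print("FP =", fp, "TN =", tn, "FPR =", false_positive_rate(fp, tn))
```

Ground-truth positives do not enter the calculation, matching the "regardless of classification" wording for the denominator.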

Exploratory Data Analysis

In statistics, exploratory data analysis (EDA) is an approach of analyzing data sets to summarize their main characteristics, often using statistical graphics and other data visualization methods. A statistical model can be used or not, but primarily EDA is for seeing what the data can tell us beyond the formal modeling, and it thereby contrasts with traditional hypothesis testing. Exploratory data analysis has been promoted by John Tukey since 1970 to encourage statisticians to explore the data, and possibly formulate hypotheses that could lead to new data collection and experiments. EDA is different from initial data analysis (IDA), which focuses more narrowly on checking assumptions required for model fitting and hypothesis testing, and handling missing values and making transformations of variables as needed. EDA encompasses IDA.

Overview: Tukey defined data analysis in 1961 as: "Procedures for analyzing data, techniques for interpreting the results of such procedures, ways of plan ...

Information Technology

Information technology (IT) is the use of computers to create, process, store, retrieve, and exchange all kinds of data and information. IT forms part of information and communications technology (ICT). An information technology system (IT system) is generally an information system, a communications system, or, more specifically speaking, a computer system (including all hardware, software, and peripheral equipment) operated by a limited group of IT users. Although humans have been storing, retrieving, manipulating, and communicating information since the earliest writing systems were developed, the term ''information technology'' in its modern sense first appeared in a 1958 article published in the ''Harvard Business Review''; authors Harold J. Leavitt and Thomas L. Whisler commented that "the new technology does not yet have a single established name. We shall call it information technology (IT)." Their ...

Measurement

Measurement is the quantification of attributes of an object or event, which can be used to compare with other objects or events. In other words, measurement is a process of determining how large or small a physical quantity is as compared to a basic reference quantity of the same kind. The scope and application of measurement are dependent on the context and discipline. In natural sciences and engineering, measurements do not apply to nominal properties of objects or events, which is consistent with the guidelines of the ''International vocabulary of metrology'' published by the International Bureau of Weights and Measures. However, in other fields such as statistics as well as the social and behavioural sciences, measurements can have multiple levels, which would include nominal, ordinal, interval and ratio scales. Measurement is a cornerstone of trade, science, technology and quantitative research in many disciplines. Historically, many measurement systems existed f ...

Genetic Association

Genetic association is when one or more genotypes within a population co-occur with a phenotypic trait more often than would be expected by chance occurrence. Studies of genetic association aim to test whether single-locus alleles or genotype frequencies (or more generally, multilocus haplotype frequencies) differ between two groups of individuals (usually diseased subjects and healthy controls). Genetic association studies today are based on the principle that genotypes can be compared "directly", i.e. with the sequences of the actual genomes or exomes via whole genome sequencing or whole exome sequencing. Before 2010, DNA sequencing methods were used.

Description: Genetic association can be between phenotypes (visible characteristics such as flower color or height), between a phenotype and a genetic polymorphism, such as a single nucleotide polymorphism (SNP), or between two genetic polymorphisms. Association between genetic polymorphisms occurs when there is non-random ...

DNA Microarray

A DNA microarray (also commonly known as DNA chip or biochip) is a collection of microscopic DNA spots attached to a solid surface. Scientists use DNA microarrays to measure the expression levels of large numbers of genes simultaneously or to genotype multiple regions of a genome. Each DNA spot contains picomoles ($10^{-12}$ moles) of a specific DNA sequence, known as ''probes'' (or ''reporters'' or ''oligos''). These can be a short section of a gene or other DNA element that is used to hybridize a cDNA or cRNA (also called anti-sense RNA) sample (called ''target'') under high-stringency conditions. Probe-target hybridization is usually detected and quantified by detection of fluorophore-, silver-, or chemiluminescence-labeled targets to determine relative abundance of nucleic acid sequences in the target. The original nucleic acid arrays were macro arrays approximately 9 cm × 12 cm and the first computerized image-based analysis was published in 1981. It was inve ...

Genomics

Genomics is an interdisciplinary field of biology focusing on the structure, function, evolution, mapping, and editing of genomes. A genome is an organism's complete set of DNA, including all of its genes as well as its hierarchical, three-dimensional structural configuration. In contrast to genetics, which refers to the study of ''individual'' genes and their roles in inheritance, genomics aims at the collective characterization and quantification of ''all'' of an organism's genes, their interrelations and influence on the organism. Genes may direct the production of proteins with the assistance of enzymes and messenger molecules. In turn, proteins make up body structures such as organs and tissues as well as control chemical reactions and carry signals between cells. Genomics also involves the sequencing and analysis of genomes through uses of high throughput DNA sequencing and bioinformatics to assemble and analyze the function and structure of entire genomes. Advances in gen ...

Analysis Of Variance

Analysis of variance (ANOVA) is a collection of statistical models and their associated estimation procedures (such as the "variation" among and between groups) used to analyze the differences among means. ANOVA was developed by the statistician Ronald Fisher. ANOVA is based on the law of total variance, where the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether two or more population means are equal, and therefore generalizes the ''t''-test beyond two means. In other words, the ANOVA is used to test the difference between two or more means.

History: While the analysis of variance reached fruition in the 20th century, antecedents extend centuries into the past according to Stigler. These include hypothesis testing, the partitioning of sums of squares, experimental techniques and the additive model. Laplace was performing hypothesis test ...
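The partitioning of variation described above can be made concrete with a minimal one-way ANOVA sketch. It assumes the textbook one-way layout (the summary does not spell out a specific model), the function name is hypothetical, and the data are made up for illustration. The F statistic is the between-group mean square divided by the within-group mean square.

```python
from statistics import fmean


def one_way_anova_f(groups):
    """Return (ss_between, ss_within, f_statistic) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")

    grand_mean = fmean([x for g in groups for x in g])
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation: F is undefined")

    f_statistic = (ss_between / (k - 1)) / (ss_within / (n_total - k))
    return ss_between, ss_within, f_statistic


if __name__ == "__main__":
    # Illustrative data only.
    groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
    ss_b, ss_w, f_stat = one_way_anova_f(groups)
    print("SS between:", ss_b, "SS within:", ss_w, "F:", f_stat)
```

The two sums of squares add up to the total sum of squares around the grand mean, which is the partition the summary refers to. Converting F to a p-value requires the F distribution and is left out of this sketch.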

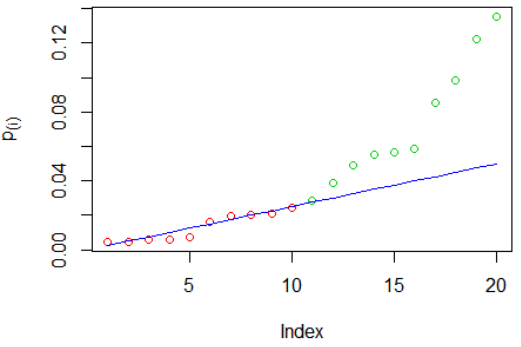

False Discovery Rate

In statistics, the false discovery rate (FDR) is a method of conceptualizing the rate of type I errors in null hypothesis testing when conducting multiple comparisons. FDR-controlling procedures are designed to control the FDR, which is the expected proportion of "discoveries" (rejected null hypotheses) that are false (incorrect rejections of the null). Equivalently, the FDR is the expected ratio of the number of false positive classifications (false discoveries) to the total number of positive classifications (rejections of the null). The total number of rejections of the null includes both the number of false positives (FP) and true positives (TP). Simply put, FDR = FP / (FP + TP). FDR-controlling procedures provide less stringent control of Type I errors compared to family-wise error rate (FWER) controlling procedures (such as the Bonferroni correction), which control the probability of ''at least one'' Type I error. Thus, FDR-controlling procedures have greater power, at the co ...
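The ratio FP / (FP + TP) can be computed for a single experiment as shown in the sketch below. Strictly, the FDR is the expectation of this ratio, so the per-experiment value is usually called the false discovery proportion. The function names are hypothetical, the counts are invented, and treating the ratio as 0 when nothing is rejected is a common convention assumed here rather than something stated above.

```python
from statistics import fmean


def false_discovery_proportion(fp: int, tp: int) -> float:
    """FP / (FP + TP) for one experiment; 0.0 by convention if nothing was rejected."""
    rejections = fp + tp
    return fp / rejections if rejections else 0.0


def estimated_fdr(experiments):
    """Average the false discovery proportion over (fp, tp) pairs."""
    return fmean([false_discovery_proportion(fp, tp) for fp, tp in experiments])


if __name__ == "__main__":
    # Illustrative (FP, TP) counts from three hypothetical experiments.
    experiments = [(5, 15), (0, 10), (0, 0)]
    for fp, tp in experiments:
        print(f"FP={fp} TP={tp} FDP={false_discovery_proportion(fp, tp)}")
    print("estimated FDR:", estimated_fdr(experiments))
```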