
Markov Information Source

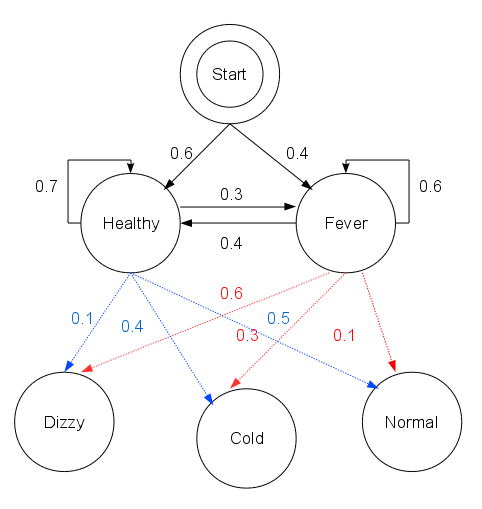

In mathematics, a Markov information source, or simply a Markov source, is an information source whose underlying dynamics are given by a stationary finite Markov chain.

Formal definition: An information source is a sequence of random variables ranging over a finite alphabet Γ and having a stationary distribution. A Markov information source is then a (stationary) Markov chain M, together with a function f: S → Γ that maps the states S of the Markov chain to letters in the alphabet Γ. A unifilar Markov source is a Markov source for which the values f(s_k) are distinct whenever the states s_k are reachable, in one step, from a common prior state. Unifilar sources are notable because many of their properties are far easier to analyze than in the general case.

Applications: Markov sources are commonly used in communication theory as a model of a transmitter. Markov sources also occur in natural language processing, where they are used to ...
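The definition can be made concrete with a small sketch. The three-state chain, the labelling function, and all names below (`TRANSITIONS`, `F`, `emit`, `is_unifilar`) are hypothetical, chosen only for illustration. The code emits letters by walking the chain and applying f to each visited state, and checks the unifilar condition by comparing the labels of the one-step successors of every state.

```python
import random

# Hypothetical chain: TRANSITIONS[s][t] is the probability of moving from s to t.
TRANSITIONS = {
    "s0": {"s1": 0.5, "s2": 0.5},
    "s1": {"s0": 1.0},
    "s2": {"s0": 0.3, "s2": 0.7},
}

# Hypothetical labelling function f: S -> Gamma, with Gamma = {"a", "b"}.
F = {"s0": "a", "s1": "a", "s2": "b"}


def emit(transitions, f, start, n, rng):
    """Walk the chain for n steps and return the emitted letters as a string.

    Starting from a fixed state is a simplification; a stationary source
    would draw the first state from the stationary distribution.
    """
    state = start
    letters = []
    for _ in range(n):
        letters.append(f[state])
        successors = list(transitions[state])
        weights = [transitions[state][s] for s in successors]
        state = rng.choices(successors, weights=weights)[0]
    return "".join(letters)


def is_unifilar(transitions, f):
    """Return True if, for every state, its one-step successors carry distinct letters."""
    for successors in transitions.values():
        labels = [f[s] for s, p in successors.items() if p > 0]
        if len(labels) != len(set(labels)):
            return False
    return True


if __name__ == "__main__":
    print(emit(TRANSITIONS, F, "s0", 20, random.Random(0)))
    print(is_unifilar(TRANSITIONS, F))
```

In this example every state has successors with distinct letters, so the source is unifilar: given the current state and the next letter, the next state is determined.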

Mathematics

Mathematics is an area of knowledge that includes the topics of numbers, formulas and related structures, shapes and the spaces in which they are contained, and quantities and their changes. These topics are represented in modern mathematics with the major subdisciplines of number theory, algebra, geometry, and analysis, respectively. There is no general consensus among mathematicians about a common definition for their academic discipline. Most mathematical activity involves the discovery of properties of abstract objects and the use of pure reason to prove them. These objects consist of either abstractions from nature or, in modern mathematics, entities that are stipulated to have certain properties, called axioms. A proof consists of a succession of applications of deductive rules to already established results. These results include previously proved theorems, axioms, and, in the case of abstraction from nature, some basic properties that are considered true starting points of ...

Information Source (mathematics)

In mathematics, an information source is a sequence of random variables ranging over a finite alphabet Γ, having a stationary distribution. The uncertainty, or entropy rate, of an information source is defined as

$$H = \lim_{n\to\infty} H(X_n \mid X_0, X_1, \dots, X_{n-1})$$

where $X_0, X_1, \dots, X_n$ is the sequence of random variables defining the information source, and $H(X_n \mid X_0, X_1, \dots, X_{n-1})$ is the conditional information entropy of the sequence of random variables. Equivalently, one has

$$H = \lim_{n\to\infty} \frac{H(X_0, X_1, \dots, X_{n-1}, X_n)}{n+1}.$$

See also
* Markov information source
* Asymptotic equipartition property: In information theory, the asymptotic equipartition property (AEP) is a general property of the output samples of a stochastic source. It is fundamental to the concept of typical set used in theories of data compression. Roughly speaking, the th ...

References
* Robert B. Ash, Information Theory, Dover Publications (1965).
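The equivalence of the two limits can be sketched with the chain rule for entropy. This is a brief outline under the stationarity assumption stated in the definition, not a full proof.

```latex
% Chain rule: the joint entropy is a sum of conditional entropies.
H(X_0, X_1, \dots, X_n) = \sum_{k=0}^{n} H(X_k \mid X_0, \dots, X_{k-1})

% Dividing by n+1 turns the right-hand side into a Cesaro mean.
\frac{H(X_0, X_1, \dots, X_n)}{n+1}
  = \frac{1}{n+1} \sum_{k=0}^{n} H(X_k \mid X_0, \dots, X_{k-1})

% For a stationary source the terms H(X_k | X_0, ..., X_{k-1}) are
% non-increasing in k and bounded below by 0, so they converge to a limit H.
% The Cesaro mean of a convergent sequence has the same limit, hence
\lim_{n\to\infty} \frac{H(X_0, X_1, \dots, X_n)}{n+1}
  = \lim_{n\to\infty} H(X_n \mid X_0, \dots, X_{n-1}) = H
```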

Markov Chain

A Markov chain or Markov process is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs ''now''." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). It is named after the Russian mathematician Andrey Markov. Markov chains have many applications as statistical models of real-world processes, such as studying cruise control systems in motor vehicles, queues or lines of customers arriving at an airport, currency exchange rates and animal population dynamics. Markov processes are the basis for general stochastic simulation methods known as Markov chain Monte Carlo, which are used for simulating sampling from complex probability dist ...

Random Variable

A random variable (also called random quantity, aleatory variable, or stochastic variable) is a mathematical formalization of a quantity or object which depends on random events. It is a mapping or a function from possible outcomes (e.g., the possible upper sides of a flipped coin, such as heads H and tails T) in a sample space (e.g., the set \{H, T\}) to a measurable space, often the real numbers (e.g., \{-1, 1\}, in which 1 corresponds to H and -1 corresponds to T). Informally, randomness typically represents some fundamental element of chance, such as in the roll of a die; it may also represent uncertainty, such as measurement error. However, the interpretation of probability is philosophically complicated, and even in specific cases is not always straightforward. The purely mathematical analysis of random variables is independent of such interpretational difficulties, and can be based upon a rigorous axiomatic setup. In the formal mathematical language of measure theory, a random var ...

Stationary Distribution

Stationary distribution may refer to:
* A special distribution for a Markov chain such that if the chain starts with its stationary distribution, the marginal distribution of all states at any time will always be the stationary distribution. Assuming irreducibility, the stationary distribution is always unique if it exists, and its existence can be implied by positive recurrence of all states. The stationary distribution has the interpretation of the limiting distribution when the chain is irreducible and aperiodic.
* The marginal distribution of a stationary process or stationary time series
* The set of joint probability distributions of a stationary process or stationary time series

In some fields of application, the term stable distribution is used for the equivalent of a stationary (marginal) distribution, although in probability and statistics the term has a rather different meaning: see stable distribution. Crudely stated, all of the above are specific cases of a common ge ...
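For the Markov chain sense, the limiting-distribution interpretation suggests a simple numerical sketch: repeatedly multiply a starting distribution by the transition matrix until it stops changing. The function name `stationary_distribution` and the 2×2 matrix `P` are illustrative assumptions, and the approach only applies when the chain is irreducible and aperiodic.

```python
def stationary_distribution(P, iterations=1000):
    """Approximate the stationary distribution of row-stochastic matrix P.

    Uses power iteration (pi <- pi * P), which converges to the stationary
    distribution when the chain is irreducible and aperiodic.
    """
    n = len(P)
    pi = [1.0 / n] * n  # start from the uniform distribution
    for _ in range(iterations):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi


# Hypothetical two-state chain; P[i][j] is the probability of moving from i to j.
P = [
    [0.9, 0.1],
    [0.5, 0.5],
]

if __name__ == "__main__":
    pi = stationary_distribution(P)
    print(pi)  # close to [5/6, 1/6], the solution of pi = pi * P
```

For this matrix the balance equation 0.1·π₀ = 0.5·π₁ together with π₀ + π₁ = 1 gives π = (5/6, 1/6), which the iteration approaches.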

Communication Theory

Communication theory is a proposed description of communication phenomena, the relationships among them, a storyline describing these relationships, and an argument for these three elements. Communication theory provides a way of talking about and analyzing key events, processes, and commitments that together form communication. Theory can be seen as a way to map the world and make it navigable; communication theory gives us tools to answer empirical, conceptual, or practical communication questions. Communication is defined in both commonsense and specialized ways. Communication theory emphasizes its symbolic and social process aspects as seen from two perspectives—as exchange of information (the transmission perspective), and as work done to connect and thus enable that exchange (the ritual perspective). Sociolinguistic research in the 1950s and 1960s demonstrated that the level to which people change their formality of their language depending on the social context that the ...

Transmitter

In electronics and telecommunications, a radio transmitter or just transmitter is an electronic device which produces radio waves with an antenna. The transmitter itself generates a radio frequency alternating current, which is applied to the antenna. When excited by this alternating current, the antenna radiates radio waves. Transmitters are necessary component parts of all electronic devices that communicate by radio, such as radio and television broadcasting stations, cell phones, walkie-talkies, wireless computer networks, Bluetooth enabled devices, garage door openers, two-way radios in aircraft, ships, spacecraft, radar sets and navigational beacons. The term transmitter is usually limited to equipment that generates radio waves for communication purposes; or radiolocation, such as radar and navigational transmitters. Generators of radio waves for heatin ...

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, t ...

Hidden Markov Model

A hidden Markov model (HMM) is a statistical Markov model in which the system being modeled is assumed to be a Markov process (call it X) with unobservable ("hidden") states. As part of the definition, an HMM requires that there be an observable process Y whose outcomes are "influenced" by the outcomes of X in a known way. Since X cannot be observed directly, the goal is to learn about X by observing Y. An HMM has the additional requirement that the outcome of Y at time t = t_0 must be "influenced" exclusively by the outcome of X at t = t_0, and that the outcomes of X and Y at t < t_0 must be conditionally independent of Y at t = t_0 given X at t = t_0. Hidden Markov models are applied in areas such as handwriting recognition, gesture recognition, part-of-speech tagging, musical score following, partial discharges and bioinformatics.

Definition: Let X_n and Y_n be discrete-time stochastic processes and n \geq 1. The pair (X_n, Y_n) is a hidden Markov model if
* X_n is a Markov process whose behavior is not directly observable ("hidden");
* \operatorname\bigl(Y_n \i ...

Viterbi Algorithm

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states—called the Viterbi path—that results in a sequence of observed events, especially in the context of Markov information sources and hidden Markov models (HMM). The algorithm has found universal application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. History The Viterbi a ...
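The dynamic programming idea can be sketched in a few lines. The function below is a minimal illustration for a discrete HMM given as plain dictionaries; the function signature and the two-state "Healthy"/"Fever" model with its probabilities are assumptions made up for the example, not taken from the text above. It works with raw probabilities, so a practical version for long sequences would use log-probabilities to avoid underflow.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (most likely hidden state path, probability of that path)."""
    # best[t][s] = (probability of the best path ending in s at time t, predecessor of s)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        prev = best[-1]
        column = {}
        for s in states:
            # Choose the predecessor r that maximises the path probability into s.
            prob, pred = max(
                (prev[r][0] * trans_p[r][s] * emit_p[s][obs], r) for r in states
            )
            column[s] = (prob, pred)
        best.append(column)

    # Backtrack from the most probable final state.
    last = max(states, key=lambda s: best[-1][s][0])
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return path, best[-1][last][0]


# Hypothetical model used only to exercise the function.
STATES = ["Healthy", "Fever"]
START_P = {"Healthy": 0.6, "Fever": 0.4}
TRANS_P = {
    "Healthy": {"Healthy": 0.7, "Fever": 0.3},
    "Fever": {"Healthy": 0.4, "Fever": 0.6},
}
EMIT_P = {
    "Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
    "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6},
}

if __name__ == "__main__":
    path, prob = viterbi(["normal", "cold", "dizzy"], STATES, START_P, TRANS_P, EMIT_P)
    print(path, prob)  # ['Healthy', 'Healthy', 'Fever'] with probability about 0.01512
```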

Entropy Rate

In the mathematical theory of probability, the entropy rate or source information rate of a stochastic process is, informally, the time density of the average information in a stochastic process. For stochastic processes with a countable index, the entropy rate H(X) is the limit of the joint entropy of n members of the process X_k divided by n, as n tends to infinity:

$$H(X) = \lim_{n\to\infty} \frac{1}{n} H(X_1, X_2, \dots, X_n)$$

when the limit exists. An alternative, related quantity is:

$$H'(X) = \lim_{n\to\infty} H(X_n \mid X_{n-1}, X_{n-2}, \dots, X_1)$$

For strongly stationary stochastic processes, H(X) = H'(X). The entropy rate can be thought of as a general property of stochastic sources; this is the asymptotic equipartition property. The entropy rate may be used to estimate the complexity of stochastic processes. It is used in diverse applications ranging from characterizing the complexity of languages, blind source separation, through to optimizing quantizers and data compression algorithms. For example, a maximum en ...
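For a stationary Markov chain the conditional form reduces to a closed expression: conditioning on the whole past is the same as conditioning on the previous state, so the entropy rate is the stationary average of the row entropies of the transition matrix, H = -Σ_i π_i Σ_j P_ij log₂ P_ij. The sketch below evaluates that expression; the function name, the example matrix, and the stationary distribution supplied by hand are assumptions for illustration.

```python
import math


def markov_entropy_rate(P, pi):
    """Entropy rate in bits per symbol of a stationary Markov chain.

    P is a row-stochastic transition matrix and pi its stationary
    distribution; zero-probability transitions contribute nothing.
    """
    rate = 0.0
    for i, row in enumerate(P):
        row_entropy = -sum(p * math.log2(p) for p in row if p > 0)
        rate += pi[i] * row_entropy
    return rate


if __name__ == "__main__":
    # Hypothetical two-state chain with stationary distribution (5/6, 1/6).
    P = [
        [0.9, 0.1],
        [0.5, 0.5],
    ]
    pi = [5.0 / 6.0, 1.0 / 6.0]
    print(markov_entropy_rate(P, pi))
```

As a sanity check, a chain whose rows are all (0.5, 0.5) is a sequence of independent fair coin flips, and the formula returns exactly 1 bit per symbol.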

Markov Processes

Markov (Bulgarian, Russian: Марков), Markova, and Markoff are common surnames used in Russia and Bulgaria. Notable people with the name include:

Academics
* Ivana Markova (born 1938), Czechoslovak-British emeritus professor of psychology at the University of Stirling
* John Markoff (sociologist) (born 1942), American professor of sociology and history at the University of Pittsburgh
* Konstantin Markov (1905–1980), Soviet geomorphologist and quaternary geologist

Mathematics, science, and technology
* Alexander V. Markov (born 1965), Russian biologist
* Andrey Markov (1856–1922), Russian mathematician
* Vladimir Andreevich Markov (1871–1897), Russian mathematician, brother of Andrey Markov (Sr.)
* Andrey Markov Jr. (1903–1979), Russian mathematician and son of Andrey Markov
* John Markoff (born 1949), American journalist of computer industry and technology
* Moisey Markov (1908–1994), Russian physicist

Performing arts
* Albert Markov, Russian American violinist, composer
* Alexan ...