|

Memoization

In computing, memoization or memoisation is an optimization technique used primarily to speed up computer programs by storing the results of expensive function calls to pure functions and returning the cached result when the same inputs occur again. Memoization has also been used in other contexts (and for purposes other than speed gains), such as in simple mutually recursive descent parsing. It is a type of caching, distinct from other forms of caching such as buffering and page replacement. In the context of some logic programming languages, memoization is also known as tabling. Etymology The term ''memoization'' was coined by Donald Michie in 1968 and is derived from the Latin word ('to be remembered'), usually truncated as ''memo'' in American English, and thus carries the meaning of 'turning he results ofa function into something to be remembered'. While ''memoization'' might be confused with ''memorization'' (because they are etymological cognates), ''memoization'' ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lookup Table

In computer science, a lookup table (LUT) is an array data structure, array that replaces runtime (program lifecycle phase), runtime computation of a mathematical function (mathematics), function with a simpler array indexing operation, in a process termed as ''direct addressing''. The savings in processing time can be significant, because retrieving a value from memory is often faster than carrying out an "expensive" computation or input/output operation. The tables may be precomputation, precalculated and stored in static memory allocation, static program storage, calculated (or prefetcher, "pre-fetched") as part of a program's initialization phase (''memoization''), or even stored in hardware in application-specific platforms. Lookup tables are also used extensively to validate input values by matching against a list of valid (or invalid) items in an array and, in some programming languages, may include pointer functions (or offsets to labels) to process the matching input. Fiel ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Algorithm

In mathematics and computer science, an algorithm () is a finite sequence of Rigour#Mathematics, mathematically rigorous instructions, typically used to solve a class of specific Computational problem, problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use Conditional (computer programming), conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a Heuristic (computer science), heuristic is an approach to solving problems without well-defined correct or optimal results.David A. Grossman, Ophir Frieder, ''Information Retrieval: Algorithms and Heuristics'', 2nd edition, 2004, For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Optimization (computer Science)

In computer science, program optimization, code optimization, or software optimization is the process of modifying a software system to make some aspect of it work more algorithmic efficiency, efficiently or use fewer resources. In general, a computer program may be optimized so that it executes more rapidly, or to make it capable of operating with less Computer data storage, memory storage or other resources, or draw less power. Overview Although the term "optimization" is derived from "optimum", achieving a truly optimal system is rare in practice, which is referred to as superoptimization. Optimization typically focuses on improving a system with respect to a specific quality metric rather than making it universally optimal. This often leads to trade-offs, where enhancing one metric may come at the expense of another. One popular example is space-time tradeoff, reducing a program’s execution time by increasing its memory consumption. Conversely, in scenarios where memory is l ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Factorial

In mathematics, the factorial of a non-negative denoted is the Product (mathematics), product of all positive integers less than or equal The factorial also equals the product of n with the next smaller factorial: \begin n! &= n \times (n-1) \times (n-2) \times (n-3) \times \cdots \times 3 \times 2 \times 1 \\ &= n\times(n-1)!\\ \end For example, 5! = 5\times 4! = 5 \times 4 \times 3 \times 2 \times 1 = 120. The value of 0! is 1, according to the convention for an empty product. Factorials have been discovered in several ancient cultures, notably in Indian mathematics in the canonical works of Jain literature, and by Jewish mystics in the Talmudic book ''Sefer Yetzirah''. The factorial operation is encountered in many areas of mathematics, notably in combinatorics, where its most basic use counts the possible distinct sequences – the permutations – of n distinct objects: there In mathematical analysis, factorials are used in power series for the ex ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pure Function

In computer programming, a pure function is a function that has the following properties: # the function return values are identical for identical arguments (no variation with local static variables, non-local variables, mutable reference arguments or input streams, i.e., referential transparency), and # the function has no side effects (no mutation of non-local variables, mutable reference arguments or input/output streams). Examples Pure functions The following examples of C++ functions are pure: Impure functions The following C++ functions are impure as they lack the above property 1: The following C++ functions are impure as they lack the above property 2: The following C++ functions are impure as they lack both the above properties 1 and 2: I/O in pure functions I/O is inherently impure: input operations undermine referential transparency, and output operations create side effects. Nevertheless, there is a sense in which a function can perform inpu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Referential Transparency

In analytic philosophy and computer science, referential transparency and referential opacity are properties of linguistic constructions, and by extension of languages. A linguistic construction is called ''referentially transparent'' when for any expression built from it, Rewriting, replacing a subexpression with another one that Denotation, denotes the same value does not change the value of the expression. Also: Otherwise, it is called ''referentially opaque''. Each expression built from a referentially opaque linguistic construction states something about a subexpression, whereas each expression built from a referentially transparent linguistic construction states something not about a subexpression, meaning that the subexpressions are ‘transparent’ to the expression, acting merely as ‘references’ to something else. For example, the linguistic construction ‘_ was wise’ is referentially transparent (e.g., ''Socrates was wise'' is equivalent to ''The founder of Weste ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computer, computing machinery. It includes the study and experimentation of algorithmic processes, and the development of both computer hardware, hardware and software. Computing has scientific, engineering, mathematical, technological, and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology, and software engineering. The term ''computing'' is also synonymous with counting and calculation, calculating. In earlier times, it was used in reference to the action performed by Mechanical computer, mechanical computing machines, and before that, to Computer (occupation), human computers. History The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pseudocode

In computer science, pseudocode is a description of the steps in an algorithm using a mix of conventions of programming languages (like assignment operator, conditional operator, loop) with informal, usually self-explanatory, notation of actions and conditions. Although pseudocode shares features with regular programming languages, it is intended for human reading rather than machine control. Pseudocode typically omits details that are essential for machine implementation of the algorithm, meaning that pseudocode can only be verified by hand. The programming language is augmented with natural language description details, where convenient, or with compact mathematical notation. The reasons for using pseudocode are that it is easier for people to understand than conventional programming language code and that it is an efficient and environment-independent description of the key principles of an algorithm. It is commonly used in textbooks and scientific publications to document ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cross-platform

Within computing, cross-platform software (also called multi-platform software, platform-agnostic software, or platform-independent software) is computer software that is designed to work in several Computing platform, computing platforms. Some cross-platform software requires a separate build for each platform, but some can be directly run on any platform without special preparation, being written in an interpreted language or compiled to portable bytecode for which the Interpreter (computing), interpreters or run-time packages are common or standard components of all supported platforms. For example, a cross-platform application software, application may run on Linux, macOS and Microsoft Windows. Cross-platform software may run on many platforms, or as few as two. Some frameworks for cross-platform development are Codename One, ArkUI-X, Kivy (framework), Kivy, Qt (software), Qt, GTK, Flutter (software), Flutter, NativeScript, Xamarin, Apache Cordova, Ionic (mobile app framework ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine-dependent

Machine-dependent software is software that runs only on a specific computer. Applications that run on multiple computer architectures are called machine-independent, or cross-platform. Many organisations opt for such software because they believe that machine-dependent software is an asset and will attract more buyers. Organizations that want application software to work on heterogeneous computers may port that software to the other machines. Deploying machine-dependent applications on such architectures, such applications require porting. This procedure includes composing, or re-composing, the application's code to suit the target platform. Porting Porting is the process of converting an application from one architecture to another. Software languages such as Java are designed so that applications can migrate across architectures without source code modifications. The term is applied when programming/equipment is changed to make it usable in a different architecture. Code that ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compile-time

In computer science, compile time (or compile-time) describes the time window during which a language's statements are converted into binary instructions for the processor to execute. The term is used as an adjective to describe concepts related to the context of program compilation, as opposed to concepts related to the context of program execution ( run time). For example, ''compile-time requirements'' are programming language requirements that must be met by source code before compilation and ''compile-time properties'' are properties of the program that can be reasoned about during compilation. The actual length of time it takes to compile a program is usually referred to as ''compilation time''. Overview Most compilers have at least the following compiler phases (which therefore occur at compile-time): syntax analysis, semantic analysis, and code generation. During optimization phases, constant expressions in the source code can also be evaluated at compile-time usin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Run Time (program Lifecycle Phase)

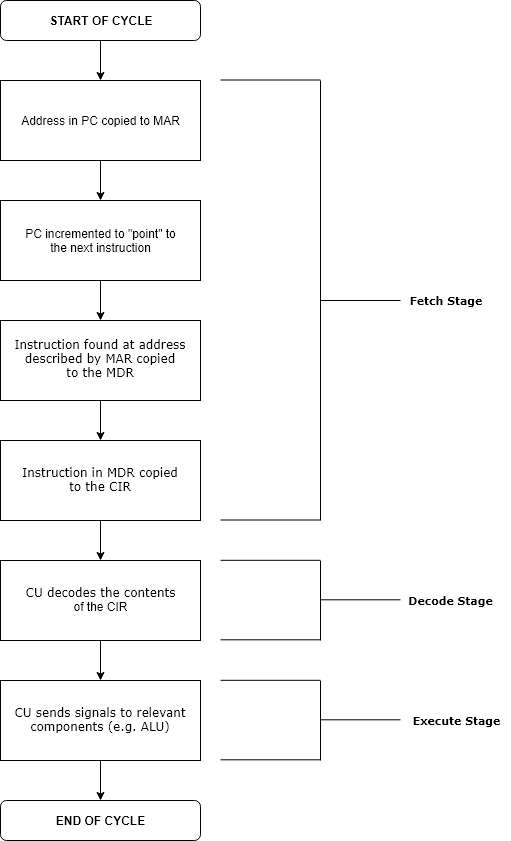

Execution in computer and software engineering is the process by which a computer or virtual machine interprets and acts on the instructions of a computer program. Each instruction of a program is a description of a particular action which must be carried out, in order for a specific problem to be solved. Execution involves repeatedly following a " fetch–decode–execute" cycle for each instruction done by the control unit. As the executing machine follows the instructions, specific effects are produced in accordance with the semantics of those instructions. Programs for a computer may be executed in a batch process without human interaction or a user may type commands in an interactive session of an interpreter. In this case, the "commands" are simply program instructions, whose execution is chained together. The term run is used almost synonymously. A related meaning of both "to run" and "to execute" refers to the specific action of a user starting (or ''launching'' o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |