
Markov Models

In probability theory, a Markov model is a stochastic model used to model pseudo-randomly changing systems. It is assumed that future states depend only on the current state, not on the events that occurred before it (that is, it assumes the Markov property). Generally, this assumption enables reasoning and computation with the model that would otherwise be intractable. For this reason, in the fields of predictive modelling and probabilistic forecasting, it is desirable for a given model to exhibit the Markov property.

Introduction: Andrey Andreyevich Markov (14 June 1856 – 20 July 1922) was a Russian mathematician best known for his work on stochastic processes. A primary subject of his research later became known as the Markov chain. There are four common Markov models used in different situations, depending on whether every sequential state is observable or not, and whether the system is to be adjusted on the basis of observation ...
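The Markov property can be seen directly in a simulation: each next state is drawn using only the current state. The sketch below assumes a discrete state space and a transition table stored as nested dicts; `simulate_markov_chain` and the two-state "weather" chain are hypothetical illustrations, not taken from the text above.

```python
import random

def simulate_markov_chain(transitions, start, steps, seed=None):
    """Sample a path of `steps` transitions from a discrete-time Markov chain.

    `transitions` maps each state to a dict {next_state: probability}.
    The next state depends only on the current state (the Markov property);
    earlier states in `path` are never consulted.
    """
    rng = random.Random(seed)
    path = [start]
    state = start
    for _ in range(steps):
        next_states = list(transitions[state])
        weights = [transitions[state][s] for s in next_states]
        state = rng.choices(next_states, weights=weights, k=1)[0]
        path.append(state)
    return path

# Hypothetical two-state chain, for illustration only.
weather = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}
path = simulate_markov_chain(weather, "sunny", steps=10, seed=42)
```

Passing a fixed `seed` makes a run reproducible, which is convenient when comparing models.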

Probability Theory

Probability theory or probability calculus is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is no ...
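For a finite sample space, the pieces named above (sample space, events as subsets, a probability measure) fit in a few lines. This is a minimal sketch assuming a fair six-sided die as the sample space; exact `Fraction` arithmetic is used so the axiom checks are not disturbed by rounding. The names `sample_space`, `measure` and `probability` are illustrative only.

```python
from fractions import Fraction

# Hypothetical finite probability space: a fair six-sided die.
sample_space = {1, 2, 3, 4, 5, 6}
measure = {outcome: Fraction(1, 6) for outcome in sample_space}

def probability(event):
    """P(event) for an event given as a subset of the sample space."""
    if not event <= sample_space:
        raise ValueError("an event must be a subset of the sample space")
    return sum((measure[o] for o in event), Fraction(0))

# Axiom checks: non-negativity, P(sample space) = 1,
# and additivity for one pair of disjoint events.
assert all(p >= 0 for p in measure.values())
assert probability(sample_space) == 1
even, odd = {2, 4, 6}, {1, 3, 5}
assert probability(even | odd) == probability(even) + probability(odd)
```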

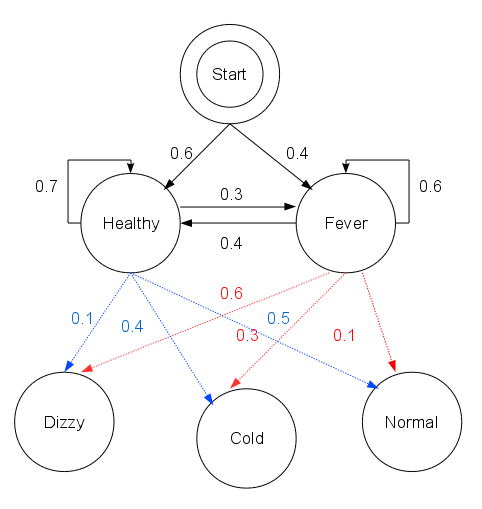

Viterbi Algorithm

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states, called the Viterbi path, that results in a sequence of observed events. This is done especially in the context of Markov information sources and hidden Markov models (HMMs). The algorithm has found widespread application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. His ...
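The dynamic-programming idea can be sketched for a discrete HMM: for every time step and state, keep the score of the best path ending there plus a back-pointer, then trace back from the best final state. This is a minimal sketch assuming start, transition and emission probabilities given as nested dicts; it works in log space to avoid underflow. The function name and the two-state example model below are hypothetical.

```python
import math

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (log_probability, path) of the most likely hidden-state sequence."""
    def log(p):
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log probability of the best path that ends in state s at time t
    best = [{s: log(start_p[s]) + log(emit_p[s][observations[0]]) for s in states}]
    back = [{}]  # back[t][s]: predecessor of s on that best path
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((r, best[t - 1][r] + log(trans_p[r][s])) for r in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + log(emit_p[s][observations[t]])
            back[t][s] = prev
    # Trace back from the highest-scoring final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return best[-1][last], path

# Hypothetical toy HMM, for illustration only.
states = ("Healthy", "Fever")
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {"Healthy": {"Healthy": 0.7, "Fever": 0.3},
           "Fever": {"Healthy": 0.4, "Fever": 0.6}}
emit_p = {"Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
          "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6}}
log_p, path = viterbi(("normal", "cold", "dizzy"), states, start_p, trans_p, emit_p)
```

For this toy model the best path is Healthy, Healthy, Fever. The running time is O(T·N²) for T observations and N states, instead of the Nᵀ paths a brute-force search would enumerate.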

Variable-order Markov Model

In the mathematical theory of stochastic processes, variable-order Markov (VOM) models are an important class of models that extend the well-known Markov chain models. In contrast to Markov chain models, where each random variable in a sequence with the Markov property depends on a fixed number of random variables, in VOM models this number of conditioning random variables may vary based on the specific observed realization. This realization sequence is often called the "context"; therefore VOM models are also called "context trees". VOM models are nicely rendered by colorized probabilistic suffix trees (PSTs). The flexibility in the number of conditioning random variables turns out to be of real advantage for many applications, such as statistical analysis, classification and prediction.

Example: Consider a sequence of random variables, each of which takes a value from the ternary alphabet . Specifically, consider the string constructed from infinite concatena ...
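A simple way to see "a number of conditioning variables that varies with the realization" is a predictor that counts next symbols for every context up to a maximum length and, at prediction time, uses the longest suffix of the history it has actually seen. This is a simplified backoff sketch, not a full VOM learning algorithm such as PST construction (which also prunes contexts); `train_vom`, `predict_next` and `max_order` are hypothetical names.

```python
from collections import Counter, defaultdict

def train_vom(sequence, max_order):
    """Count next-symbol frequencies for every context of length 0..max_order."""
    counts = defaultdict(Counter)
    for i in range(len(sequence)):
        for k in range(max_order + 1):
            if i - k < 0:
                break
            context = sequence[i - k:i]   # the k symbols preceding position i
            counts[context][sequence[i]] += 1
    return counts

def predict_next(counts, history, max_order):
    """Predict with the longest suffix of `history` that occurred in training.

    The context length used therefore depends on the observed history.
    """
    for k in range(min(max_order, len(history)), -1, -1):
        context = history[len(history) - k:]   # k == 0 gives the empty context
        if context in counts:
            return counts[context].most_common(1)[0][0]
    return None
```

For example, after `counts = train_vom("abcabcabc", 2)`, the history `"zzb"` has no seen order-2 context `"zb"`, so the predictor backs off to the order-1 context `"b"` and predicts `"c"`.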

Andrey Markov

Andrey Andreyevich Markov (14 June 1856 – 20 July 1922) was a Russian mathematician best known for his work on stochastic processes. A primary subject of his research later became known as the Markov chain. He was also a strong, close to master-level, chess player. Markov and his younger brother Vladimir Andreyevich Markov (1871–1897) proved the Markov brothers' inequality. His son, another Andrey Andreyevich Markov (1903–1979), was also a notable mathematician, making contributions to constructive mathematics and recursive function theory.

Biography: Andrey Markov was born on 14 June 1856 in Russia. He attended the St. Petersburg Grammar School, where some teachers saw him as a rebellious student. In his academics he performed poorly in most subjects other than mathematics. Later in life he attended Saint Petersburg Imperial University (now Saint Petersburg State Uni ...

Markov Blanket

In statistics and machine learning, a Markov blanket of a random variable is a set of variables that renders the variable conditionally independent of all other variables in the system. This concept is central in probabilistic graphical models and feature selection. If a Markov blanket is minimal, meaning that no variable in it can be removed without losing this conditional independence, it is called a Markov boundary. Identifying a Markov blanket or boundary allows for efficient inference and helps isolate relevant variables for prediction or causal reasoning. The terms Markov blanket and Markov boundary were coined by Judea Pearl in 1988. A Markov blanket may be derived from the structure of a probabilistic graphical model such as a Bayesian network or Markov random field.

Markov blanket: A Markov blanket of a random variable Y in a set of random variables is any subset of that set, conditioned on which the other variables are independent of Y: ...
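In a Bayesian network, a Markov blanket of a node can be read directly off the graph: its parents, its children, and its children's other parents. The sketch below assumes the network structure is given as a dict mapping every node to the set of its parents; `markov_blanket` and the five-node example network are hypothetical.

```python
def markov_blanket(node, parents):
    """Markov blanket of `node` in a Bayesian network.

    `parents` maps every node to the set of its parents (the DAG structure).
    Returns the node's parents, children, and the children's other parents.
    """
    children = {n for n, ps in parents.items() if node in ps}
    co_parents = set().union(*(parents[c] for c in children))
    return (set(parents[node]) | children | co_parents) - {node}

# Hypothetical network: A -> C <- B, C -> D <- E
parents = {
    "A": set(),
    "B": set(),
    "C": {"A", "B"},
    "D": {"C", "E"},
    "E": set(),
}
```

Here `markov_blanket("C", parents)` returns `{"A", "B", "D", "E"}`: the co-parent E is included because, once D is observed, E carries information about C.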

Solar Irradiance

Solar irradiance is the power per unit area (surface power density) received from the Sun in the form of electromagnetic radiation in the wavelength range of the measuring instrument. Solar irradiance is measured in watts per square metre (W/m²) in SI units. Solar irradiance is often integrated over a given time period in order to report the radiant energy emitted into the surrounding environment (joule per square metre, J/m²) during that time period. This integrated solar irradiance is called solar irradiation, solar radiation, solar exposure, solar insolation, or insolation. Irradiance may be measured in space or at the Earth's surface after atmospheric absorption and scattering. Irradiance in space is a function of distance from the Sun, the solar cycle, and cross-cycle changes (Michael Boxwell, Solar Electricity Handbook: A Simple, Practical Guide to Solar Energy (2012), pp. 41–42). Irradiance on the Earth's surface additionally depends on the tilt of the measuri ...
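Turning irradiance (W/m²) into irradiation (J/m²) is a time integral. With sampled measurements, the trapezoidal rule is a simple approximation. This sketch assumes timestamps in seconds and irradiance samples in W/m²; the function name and sample values are hypothetical.

```python
def irradiation(times_s, irradiance_w_m2):
    """Approximate irradiation (J/m^2) from irradiance samples (W/m^2)
    taken at times in seconds, using the trapezoidal rule."""
    if len(times_s) != len(irradiance_w_m2):
        raise ValueError("times and irradiance samples must have the same length")
    total = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        total += 0.5 * (irradiance_w_m2[i - 1] + irradiance_w_m2[i]) * dt
    return total
```

As a sanity check, a constant 1000 W/m² held for one hour gives 1000 × 3600 = 3.6 × 10⁶ J/m², that is 3.6 MJ/m² or 1 kWh/m².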

Hierarchical Hidden Markov Model

The hierarchical hidden Markov model (HHMM) is a statistical model derived from the hidden Markov model (HMM). In an HHMM, each state is considered to be a self-contained probabilistic model. More precisely, each state of the HHMM is itself an HHMM. HHMMs and HMMs are useful in many fields, including pattern recognition.

Background: It is sometimes useful to use HMMs in specific structures in order to facilitate learning and generalization. For example, even though a fully connected HMM could always be used if enough training data is available, it is often useful to constrain the model by not allowing arbitrary state transitions. In the same way, it can be beneficial to embed the HMM into a larger structure which, theoretically, may not be able to solve any problems that the basic HMM cannot, but can solve some problems more efficiently in terms of the amount of training data required.

Description: In the hierarchical hidden Markov model (HHMM), each state is considered to b ...
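The recursive structure ("each state is itself an HHMM") can be illustrated with a small generative sampler: an internal state hands control down to one of its sub-models, which runs until it reaches an end marker and returns control to its parent. This is a simplified sketch under stated assumptions, not a complete HHMM formulation; the `HHMM` class, the `"END"` marker and the nested-dict parameter layout are all hypothetical.

```python
import random

class HHMM:
    """Minimal hierarchical HMM node, for illustration only.

    A production node has `emissions` ({symbol: prob}) and emits one symbol.
    An internal node has `children` ({name: HHMM}), a `start` distribution over
    them, and `trans` ({child: {child or "END": prob}}); "END" returns control
    to the parent.
    """

    def __init__(self, emissions=None, children=None, start=None, trans=None):
        self.emissions = emissions
        self.children = children
        self.start = start
        self.trans = trans

    def generate(self, rng, max_steps=100):
        if self.emissions is not None:
            # Production state: emit a single symbol (returned as a 1-item list).
            return [_draw(rng, self.emissions)]
        output = []
        current = _draw(rng, self.start)          # vertical transition into a sub-model
        for _ in range(max_steps):
            output.extend(self.children[current].generate(rng, max_steps))
            current = _draw(rng, self.trans[current])  # horizontal transition
            if current == "END":
                break
        return output

def _draw(rng, dist):
    """Draw one key from a {key: probability} dict."""
    keys = list(dist)
    return rng.choices(keys, weights=[dist[k] for k in keys], k=1)[0]

# Hypothetical two-level model: a sub-model that always produces "a" then "b".
a = HHMM(emissions={"a": 1.0})
b = HHMM(emissions={"b": 1.0})
word = HHMM(children={"A": a, "B": b}, start={"A": 1.0},
            trans={"A": {"B": 1.0}, "B": {"END": 1.0}})
root = HHMM(children={"W": word}, start={"W": 1.0}, trans={"W": {"END": 1.0}})
```

Because the sub-model `word` is reused as a unit, a root that repeats it (for example `trans={"W": {"W": 0.5, "END": 0.5}}`) always emits whole `"a", "b"` pairs, which is the kind of structural constraint the Background paragraph describes.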

Markov Random Field

In the domain of physics and probability, a Markov random field (MRF), Markov network or undirected graphical model is a set of random variables having a Markov property described by an undirected graph. In other words, a random field is said to be a Markov random field if it satisfies Markov properties. The concept originates from the Sherrington–Kirkpatrick model. A Markov network or MRF is similar to a Bayesian network in its representation of dependencies, the difference being that Bayesian networks are directed and acyclic, whereas Markov networks are undirected and may be cyclic. Thus, a Markov network can represent certain dependencies th ...
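A common concrete MRF is a pairwise model over binary variables, where the joint distribution is a normalised product of edge potentials. For a tiny graph the normalising constant (the partition function) can be computed by brute-force enumeration. This sketch assumes an Ising-style parametrisation with values in {-1, +1}, a single shared `coupling` and an optional uniform `field`; the function name and parameters are hypothetical, and the exponential enumeration only suits toy graphs.

```python
import itertools
import math

def mrf_joint(nodes, edges, coupling, field=0.0):
    """Exact joint distribution of a small pairwise binary MRF (Ising-style).

    Each variable takes values in {-1, +1}. An assignment x gets unnormalised
    weight exp(sum over edges (i, j) of coupling * x_i * x_j + sum over nodes of
    field * x_i), a product of edge and node potentials. Dividing by the
    partition function Z gives probabilities. Cost is 2**len(nodes).
    """
    weights = {}
    for values in itertools.product((-1, 1), repeat=len(nodes)):
        x = dict(zip(nodes, values))
        score = sum(coupling * x[i] * x[j] for i, j in edges)
        score += sum(field * x[i] for i in nodes)
        weights[values] = math.exp(score)
    z = sum(weights.values())  # partition function
    return {assignment: w / z for assignment, w in weights.items()}

# Hypothetical cyclic graph (a triangle), which a Bayesian network could not be.
p = mrf_joint(["A", "B", "C"], [("A", "B"), ("B", "C"), ("C", "A")], coupling=1.0)
```

On a 4-cycle A–B–C–D–A, the same function can be used to check the Markov property numerically: the non-adjacent variables A and C come out conditionally independent given their neighbours B and D.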

NP-Complete

In computational complexity theory, NP-complete problems are the hardest of the problems to which solutions can be verified quickly. Somewhat more precisely, a problem is NP-complete when:

1. It is a decision problem, meaning that for any input to the problem, the output is either "yes" or "no".
2. When the answer is "yes", this can be demonstrated through the existence of a short (polynomial length) solution.
3. The correctness of each solution can be verified quickly (namely, in polynomial time), and a brute-force search algorithm can find a solution by trying all possible solutions.
4. The problem can be used to simulate every other problem for which we can verify quickly that a solution is correct. Hence, if we could find solutions of some NP-complete problem quickly, we could quickly find the solutions of every other problem to which a given solution can be easily verified.

The name "NP-complete" is short for "nondeterministic polynomial-time complete". In this name, ...
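Boolean satisfiability (SAT) is the standard NP-complete example, and it shows points 2 and 3 concretely: a candidate solution (a truth assignment) is short, checking it takes time linear in the formula size, while the naive search tries all 2ⁿ assignments. This sketch assumes a CNF formula encoded as lists of signed integers (the DIMACS literal convention); the function names are hypothetical.

```python
from itertools import product

def verify_cnf(clauses, assignment):
    """Polynomial-time verifier for SAT.

    `clauses` is a CNF formula: a list of clauses, each a list of non-zero ints,
    where k means "variable k is true" and -k means "variable k is false".
    `assignment` maps variable number -> bool and is the certificate.
    The formula is satisfied if every clause contains at least one true literal.
    """
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )

def brute_force_sat(clauses, num_vars):
    """Try all 2**num_vars assignments; exponential time in the worst case."""
    for values in product((False, True), repeat=num_vars):
        assignment = dict(enumerate(values, start=1))
        if verify_cnf(clauses, assignment):
            return assignment
    return None

# Hypothetical formula: (x1 or not x2) and (x2 or x3) and (not x1 or not x3)
formula = [[1, -2], [2, 3], [-1, -3]]
```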

POMDP

A partially observable Markov decision process (POMDP) is a generalization of a Markov decision process (MDP). A POMDP models an agent decision process in which it is assumed that the system dynamics are determined by an MDP, but the agent cannot directly observe the underlying state. Instead, it must maintain a sensor model (the probability distribution of different observations given the underlying state) and the underlying MDP. Unlike the policy function in an MDP, which maps the underlying states to actions, a POMDP's policy is a mapping from the history of observations (or belief states) to actions. The POMDP framework is general enough to model a variety of real-world sequential decision processes. Applications include robot navigation problems, machine maintenance, and planning under uncertainty in general. The general framework of Markov decision processes with imperfect information was described by Karl Johan Åström in 1965 in the case of a discrete state space, and it ...
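The belief state mentioned above is updated with Bayes' rule after each action and observation: b'(s') ∝ O(o | s', a) · Σₛ T(s' | s, a) · b(s). This sketch assumes a discrete POMDP with transition and observation models stored as nested dicts; `update_belief`, the parameter layout and the two-state "listen" example are hypothetical.

```python
def update_belief(belief, action, observation, transition, observation_model):
    """Bayes-filter belief update for a discrete POMDP.

    belief:                             {state: prob}
    transition[s][action]:              {next_state: prob}
    observation_model[s_next][action]:  {observation: prob}
    Returns the posterior belief after taking `action` and seeing `observation`.
    """
    unnormalised = {}
    for s_next in transition:
        # Prediction step: probability of landing in s_next.
        predicted = sum(
            belief[s] * transition[s][action].get(s_next, 0.0) for s in belief
        )
        # Correction step: weight by how likely the observation is from s_next.
        likelihood = observation_model[s_next][action].get(observation, 0.0)
        unnormalised[s_next] = likelihood * predicted
    total = sum(unnormalised.values())
    if total == 0:
        raise ValueError("observation has zero probability under the current belief")
    return {s: p / total for s, p in unnormalised.items()}

# Hypothetical two-state example: listening does not change the state,
# and reports the correct side with probability 0.85.
transition = {"left": {"listen": {"left": 1.0}},
              "right": {"listen": {"right": 1.0}}}
observation_model = {"left": {"listen": {"hear_left": 0.85, "hear_right": 0.15}},
                     "right": {"listen": {"hear_left": 0.15, "hear_right": 0.85}}}
```

Starting from a uniform belief, one "hear_left" observation moves the belief in "left" to 0.85, and a second consistent observation raises it further.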

Waveform

In electronics, acoustics, and related fields, the waveform of a signal is the shape of its graph as a function of time, independent of its time and magnitude scales and of any displacement in time (David Crecraft, David Gorham, Electronics, 2nd ed., CRC Press, 2002, p. 62). Periodic waveforms repeat regularly at a constant period. The term can also be used for non-periodic or aperiodic signals, like chirps and pulses. In electronics, the term is usually applied to time-varying voltages, currents, or electromagnetic fields. In acoustics, it is usually applied to steady periodic sounds, that is, variations of pressure in air or other media. In these cases, the waveform is an attribute that is independent of the frequency, amplitude, or phase shift of the signal. The waveform of an electrical signal can be visualized with an oscilloscope or an ...
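The separation between a waveform's shape and its frequency, amplitude and phase shift can be made explicit in code: define each shape once over a single normalised period, then scale and shift it separately. This is a minimal sketch; the function names and the particular normalisation (unit amplitude, phase measured in cycles) are hypothetical choices.

```python
import math

def waveform(shape, phase):
    """Unit-amplitude periodic waveform evaluated at `phase`, measured in cycles.

    Only the fractional part of `phase` matters, so the result has period 1.
    """
    p = phase % 1.0
    if shape == "sine":
        return math.sin(2 * math.pi * p)
    if shape == "square":
        return 1.0 if p < 0.5 else -1.0
    if shape == "triangle":
        return 1.0 - 4.0 * abs(p - 0.5)   # -1 at p = 0, +1 at p = 0.5
    if shape == "sawtooth":
        return 2.0 * p - 1.0              # ramps from -1 up to +1 each cycle
    raise ValueError(f"unknown waveform shape: {shape!r}")

def sample(shape, amplitude, frequency_hz, phase_shift, t):
    """Apply amplitude, frequency and phase shift to a normalised shape at time t (s)."""
    return amplitude * waveform(shape, frequency_hz * t + phase_shift)
```

Two signals built with the same `shape` but different `amplitude`, `frequency_hz` or `phase_shift` share the same waveform in the sense of the paragraph above.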

Speech Coding

Speech coding is an application of data compression to digital audio signals containing speech. Speech coding combines speech-specific parameter estimation, which uses audio signal processing techniques to model the speech signal, with generic data compression algorithms that represent the resulting model parameters in a compact bitstream. Common applications of speech coding are mobile telephony and voice over IP (VoIP). The most widely used speech coding technique in mobile telephony is linear predictive coding (LPC), while the most widely used in VoIP applications are the LPC and modified discrete cosine transform (MDCT) techniques. The techniques employed in speech coding are similar to those used in audio data compression and audio coding, where knowledge of psychoacoustics is used to transmit only data that is relevant to the human auditory system. For example, in voiceband speech coding, only information in the frequency band 400 to 3500 Hz is transmitted but the re ...
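The core of linear predictive coding is to predict each sample as a weighted sum of the previous ones, x[n] ≈ Σₖ aₖ·x[n−k], and to transmit the coefficients aₖ instead of the raw samples. This sketch estimates the coefficients with the autocorrelation method and the Levinson–Durbin recursion. It is an illustration only: it omits the windowing, pre-emphasis and coefficient quantisation a real codec would add, and the function names are hypothetical.

```python
def autocorrelation(x, max_lag):
    """Biased autocorrelation r[0..max_lag] of a sequence of samples."""
    n = len(x)
    return [sum(x[i] * x[i + lag] for i in range(n - lag)) for lag in range(max_lag + 1)]

def lpc(x, order):
    """LPC coefficients a[1..order] such that x[n] ~ sum_k a[k] * x[n - k].

    Uses the autocorrelation method and the Levinson-Durbin recursion.
    Returns (coefficients, final prediction-error energy).
    """
    r = autocorrelation(x, order)
    if r[0] == 0:
        raise ValueError("signal has zero energy")
    a = [0.0] * (order + 1)   # a[0] is unused
    error = r[0]
    for i in range(1, order + 1):
        # Reflection coefficient for stage i.
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / error
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        error *= 1.0 - k * k   # prediction error shrinks at every stage
    return a[1:], error
```

For a decaying exponential x[n] = 0.9ⁿ, which obeys x[n] = 0.9·x[n−1] exactly, an order-2 fit recovers a₁ ≈ 0.9 and a₂ ≈ 0.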