|

LPBoost

Linear Programming Boosting (LPBoost) is a supervised classifier from the boosting family of classifiers. LPBoost maximizes a margin between training samples of different classes, and thus also belongs to the class of margin classifier algorithms. Consider a classification function f: \mathcal \to \, which classifies samples from a space \mathcal into one of two classes, labelled 1 and -1, respectively. LPBoost is an algorithm for learning such a classification function, given a set of training examples with known class labels. LPBoost is a machine learning technique especially suited for joint classification and feature selection in structured domains. LPBoost overview As in all boosting classifiers, the final classification function is of the form :f(\boldsymbol) = \sum_^ \alpha_j h_j(\boldsymbol), where \alpha_j are non-negative weightings for ''weak'' classifiers h_j: \mathcal \to \. Each individual weak classifier h_j may be just a little bit better than random, bu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

AdaBoost

AdaBoost (short for Adaptive Boosting) is a statistical classification meta-algorithm formulated by Yoav Freund and Robert Schapire in 1995, who won the 2003 Gödel Prize for their work. It can be used in conjunction with many types of learning algorithm to improve performance. The output of multiple ''weak learners'' is combined into a weighted sum that represents the final output of the boosted classifier. Usually, AdaBoost is presented for binary classification, although it can be generalized to multiple classes or bounded intervals of real values. AdaBoost is adaptive in the sense that subsequent weak learners (models) are adjusted in favor of instances misclassified by previous models. In some problems, it can be less susceptible to overfitting than other learning algorithms. The individual learners can be weak, but as long as the performance of each one is slightly better than random guessing, the final model can be proven to converge to a strong learner. Although AdaBo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Boosting (machine Learning)

In machine learning (ML), boosting is an Ensemble learning, ensemble metaheuristic for primarily reducing Bias–variance tradeoff, bias (as opposed to variance). It can also improve the Stability (learning theory), stability and accuracy of ML Statistical classification, classification and Regression analysis, regression algorithms. Hence, it is prevalent in supervised learning for converting weak learners to strong learners. The concept of boosting is based on the question posed by Michael Kearns (computer scientist), Kearns and Leslie Valiant, Valiant (1988, 1989):Michael Kearns(1988)''Thoughts on Hypothesis Boosting'' Unpublished manuscript (Machine Learning class project, December 1988) "Can a set of weak learners create a single strong learner?" A weak learner is defined as a Statistical classification, classifier that is only slightly correlated with the true classification. A strong learner is a classifier that is arbitrarily well-correlated with the true classification. R ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Delayed Column Generation

Column generation or delayed column generation is an efficient algorithm for solving large linear programs. The overarching idea is that many linear programs are too large to consider all the variables explicitly. The idea is thus to start by solving the considered program with only a subset of its variables. Then iteratively, variables that have the potential to improve the objective function are added to the program. Once it is possible to demonstrate that adding new variables would no longer improve the value of the objective function, the procedure stops. The hope when applying a column generation algorithm is that only a very small fraction of the variables will be generated. This hope is supported by the fact that in the optimal solution, most variables will be non-basic and assume a value of zero, so the optimal solution can be found without them. In many cases, this method allows to solve large linear programs that would otherwise be intractable. The classical example of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ensemble Learning

In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. Unlike a statistical ensemble in statistical mechanics, which is usually infinite, a machine learning ensemble consists of only a concrete finite set of alternative models, but typically allows for much more flexible structure to exist among those alternatives. Overview Supervised learning algorithms search through a hypothesis space to find a suitable hypothesis that will make good predictions with a particular problem. Even if this space contains hypotheses that are very well-suited for a particular problem, it may be very difficult to find a good one. Ensembles combine multiple hypotheses to form one which should be theoretically better. ''Ensemble learning'' trains two or more machine learning algorithms on a specific classification or regression task. The algorithms wi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Model Selection

Model selection is the task of selecting a model from among various candidates on the basis of performance criterion to choose the best one. In the context of machine learning and more generally statistical analysis, this may be the selection of a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). state, "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, has said, "How hetranslation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Decision Stump

A decision stump is a machine learning model consisting of a one-level decision tree. That is, it is a decision tree with one internal node (the root) which is immediately connected to the terminal nodes (its leaves). A decision stump makes a prediction based on the value of just a single input feature. Sometimes they are also called 1-rules. Depending on the type of the input feature, several variations are possible. For nominal features, one may build a stump which contains a leaf for each possible feature value or a stump with the two leaves, one of which corresponds to some chosen category, and the other leaf to all the other categories.This is what has been implemented in Weka's DecisionStump classifier. For binary features these two schemes are identical. A missing value may be treated as a yet another category. For continuous features, usually, some threshold feature value is selected, and the stump contains two leaves — for values below and above the threshold. However, r ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Dual Problem

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem (and vice versa). Any feasible solution to the primal (minimization) problem is at least as large as any feasible solution to the dual (maximization) problem. Therefore, the solution to the primal is an upper bound to the solution of the dual, and the solution of the dual is a lower bound to the solution of the primal. This fact is called weak duality. In general, the optimal values of the primal and dual problems need not be equal. Their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition. This fact is called strong duality. Dual problem Usually the term "dual problem" refers to the ''Lagrangian dual problem'' but o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Supervised Learning

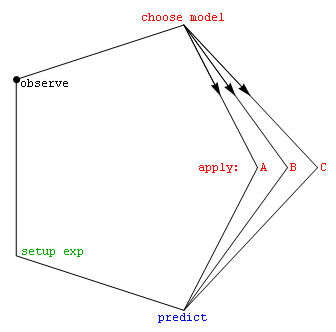

In machine learning, supervised learning (SL) is a paradigm where a Statistical model, model is trained using input objects (e.g. a vector of predictor variables) and desired output values (also known as a ''supervisory signal''), which are often human-made labels. The training process builds a function that maps new data to expected output values. An optimal scenario will allow for the algorithm to accurately determine output values for unseen instances. This requires the learning algorithm to Generalization (learning), generalize from the training data to unseen situations in a reasonable way (see inductive bias). This statistical quality of an algorithm is measured via a ''generalization error''. Steps to follow To solve a given problem of supervised learning, the following steps must be performed: # Determine the type of training samples. Before doing anything else, the user should decide what kind of data is to be used as a Training, validation, and test data sets, trainin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Linear Program

Linear programming (LP), also called linear optimization, is a method to achieve the best outcome (such as maximum profit or lowest cost) in a mathematical model whose requirements and objective are represented by linear relationships. Linear programming is a special case of mathematical programming (also known as mathematical optimization). More formally, linear programming is a technique for the optimization of a linear objective function, subject to linear equality and linear inequality constraints. Its feasible region is a convex polytope, which is a set defined as the intersection of finitely many half spaces, each of which is defined by a linear inequality. Its objective function is a real-valued affine (linear) function defined on this polytope. A linear programming algorithm finds a point in the polytope where this function has the largest (or smallest) value if such a point exists. Linear programs are problems that can be expressed in standard form as: : \beg ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Statistical Classification

When classification is performed by a computer, statistical methods are normally used to develop the algorithm. Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or ''features''. These properties may variously be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in a concrete implementation, is known as a classifier. The term "classifier" sometimes also refers to the mathematical function, implemented by a classification algorithm, that maps input data to a category. Terminology across fields is quite varied. In statistics, where classi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Feature Selection

In machine learning, feature selection is the process of selecting a subset of relevant Feature (machine learning), features (variables, predictors) for use in model construction. Feature selection techniques are used for several reasons: * simplification of models to make them easier to interpret, * shorter training times, * to avoid the curse of dimensionality, * improve the compatibility of the data with a certain learning model class, * to encode inherent Symmetric space, symmetries present in the input space. The central premise when using feature selection is that data sometimes contains features that are ''redundant'' or ''irrelevant'', and can thus be removed without incurring much loss of information. Redundancy and irrelevance are two distinct notions, since one relevant feature may be redundant in the presence of another relevant feature with which it is strongly correlated. Feature extraction creates new features from functions of the original features, whereas feat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |