
Limits of Computation

The limits of computation are governed by a number of different factors. In particular, there are several physical and practical limits to the amount of computation or data storage that can be performed with a given amount of mass, volume, or energy.

Hardware limits or physical limits

Processing and memory density
* The Bekenstein bound limits the amount of information that can be stored within a spherical volume to the entropy of a black hole with the same surface area.
* Thermodynamics limits the data storage of a system based on its energy, number of particles and particle modes. In practice, it is a stronger bound than the Bekenstein bound.

Processing speed
* Bremermann's limit is the maximum computational speed of a self-contained system in the material universe, and is based on mass–energy versus quantum uncertainty constraints.

Communication delays
* The Margolus–Levitin theorem sets a bound on the maximum computational speed per unit of energy: 6 × 1 ...
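The bounds listed above each reduce to a one-line formula. A minimal sketch, assuming the standard textbook forms: Bremermann's limit as mc²/h bits per second, the Margolus–Levitin bound as 2E/(πħ) operations per second, and the Bekenstein bound as 2πRE/(ħc ln 2) bits. The 1 kg, 1 m "computer" is hypothetical, and treating all of its rest-mass energy as usable is an idealization.

```python
import math

# SI constants: h and c are exact in the 2019 SI; hbar is derived from h.
H = 6.62607015e-34        # Planck constant, J*s
HBAR = H / (2 * math.pi)  # reduced Planck constant, J*s
C = 299_792_458.0         # speed of light, m/s


def bremermann_limit(mass_kg: float) -> float:
    """Maximum bits per second for a self-contained system of this mass: m c^2 / h."""
    return mass_kg * C**2 / H


def margolus_levitin_rate(energy_j: float) -> float:
    """Maximum orthogonal state changes per second at energy E: 2E / (pi * hbar)."""
    return 2 * energy_j / (math.pi * HBAR)


def bekenstein_bits(radius_m: float, energy_j: float) -> float:
    """Upper bound on bits storable in a sphere: 2 pi R E / (hbar c ln 2)."""
    return 2 * math.pi * radius_m * energy_j / (HBAR * C * math.log(2))


if __name__ == "__main__":
    m = 1.0        # hypothetical 1 kg computer
    E = m * C**2   # idealization: all rest-mass energy is available
    print(f"Bremermann:         {bremermann_limit(m):.3e} bit/s")
    print(f"Margolus-Levitin:   {margolus_levitin_rate(E):.3e} ops/s")
    print(f"Bekenstein (R=1 m): {bekenstein_bits(1.0, E):.3e} bits")
```

These are ceilings, not engineering targets; no real device converts its entire rest-mass energy into computation.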

Computation

A computation is any type of arithmetic or non-arithmetic calculation that is well-defined. Common examples of computation are mathematical equation solving and the execution of computer algorithms. Mechanical or electronic devices (or, historically, people) that perform computations are known as "computers". Computer science is an academic field that involves the study of computation.

Introduction
The notion that mathematical statements should be "well-defined" had been argued by mathematicians since at least the 1600s, but agreement on a suitable definition proved elusive. A candidate definition was proposed independently by several mathematicians in the 1930s. The best-known variant was formalised by the mathematician Alan Turing, who defined a well-defined statement or calculation as any statement that could be expressed in terms of the initialisation parameters of a Turing machine. Other (mathematically equivalent) definitions include Alonzo Church's lambda-defin ...
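Turing's definition rests on a machine that reads and writes symbols on a tape according to a finite rule table. A minimal simulator sketch; the rule-table encoding, the "start"/"halt" state names and the binary-increment machine are hypothetical illustrations, not Turing's original notation.

```python
from collections import defaultdict

# A rule maps (state, symbol read) -> (symbol to write, head move "L"/"R", next state).
Rules = dict[tuple[str, str], tuple[str, str, str]]

# Hypothetical example machine: add 1 to a binary number written on the tape.
INCREMENT: Rules = {
    ("start", "0"): ("0", "R", "start"),  # scan right over the digits
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),  # past the last digit: turn back
    ("carry", "1"): ("0", "L", "carry"),  # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", "L", "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", "L", "halt"),   # ran off the left end: new leading 1
}


def run_turing_machine(rules: Rules, tape: str, blank: str = "_",
                       max_steps: int = 10_000) -> str:
    """Run a one-tape machine from state 'start' until it reaches 'halt'."""
    cells = defaultdict(lambda: blank, enumerate(tape))  # unbounded tape
    state, head, steps = "start", 0, 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    # Read back the visited part of the tape, trimming blanks at both ends.
    return "".join(cells[i] for i in range(min(cells), max(cells) + 1)).strip(blank)


if __name__ == "__main__":
    print(run_turing_machine(INCREMENT, "1011"))  # 11 + 1 = 12 -> 1100
```

The whole "program" is the rule table; the step limit is only a practical guard against machines that never halt.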

Boltzmann Constant

The Boltzmann constant (k_B or k) is the proportionality factor that relates the average relative thermal energy of particles in a gas with the thermodynamic temperature of the gas. It occurs in the definitions of the kelvin (K) and the molar gas constant, in Planck's law of black-body radiation and Boltzmann's entropy formula, and is used in calculating thermal noise in resistors (Johnson–Nyquist noise). The Boltzmann constant has dimensions of energy divided by temperature, the same as entropy and heat capacity. It is named after the Austrian scientist Ludwig Boltzmann. As part of the 2019 revision of the SI, the Boltzmann constant is one of the seven "defining constants" that have been defined so as to have exact finite decimal values in SI units. They are used in various combinations to define the seven SI base units. The Boltzmann constant is defined to be exactly 1.380649 × 10⁻²³ joules per kelvin, with the effect of defining the SI unit ke ...
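Because k_B converts temperature into energy per particle, two of the uses named above reduce to simple products. A minimal sketch: the molar gas constant as R = N_A k_B, and the mean translational kinetic energy of an ideal monatomic gas particle, (3/2)k_B T, from equipartition. The Avogadro constant N_A is not given in the text; the value used is the exact SI value 6.02214076 × 10²³ mol⁻¹.

```python
# Exact SI defining constants (2019 revision).
K_B = 1.380649e-23    # Boltzmann constant, J/K
N_A = 6.02214076e23   # Avogadro constant, 1/mol (not stated in the text above)

# Molar gas constant: R = N_A * k_B, exact because both factors are exact.
R = N_A * K_B


def mean_translational_energy(temperature_k: float) -> float:
    """Average translational kinetic energy per ideal-gas particle, (3/2) k_B T."""
    return 1.5 * K_B * temperature_k


if __name__ == "__main__":
    print(f"R = {R:.9f} J/(mol K)")
    print(f"<E> at 298.15 K = {mean_translational_energy(298.15):.4e} J")
```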

Seth Lloyd

Seth Lloyd (born August 2, 1960) is a professor of mechanical engineering and physics at the Massachusetts Institute of Technology. His research area is the interplay of information with complex systems, especially quantum systems. He has performed seminal work in the fields of quantum computation, quantum communication and quantum biology, including proposing the first technologically feasible design for a quantum computer, demonstrating the viability of quantum analog computation, proving quantum analogs of Shannon's noisy channel theorem, and designing novel methods for quantum error correction and noise reduction.

Biography
Lloyd was born on August 2, 1960. He graduated from Phillips Academy in 1978 and received a bachelor of arts degree from Harvard College in 1982. He earned a certificate of advanced study in mathematics and a master of philosophy degree from Cambridge University in 1983 and 1984, while on a Marshall Scholarship. Lloyd was awarded a doctorate by Rocke ...

Black Hole Information Paradox

The black hole information paradox is a paradox that appears when the predictions of quantum mechanics and general relativity are combined. The theory of general relativity predicts the existence of black holes that are regions of spacetime from which nothing, not even light, can escape. In the 1970s, Stephen Hawking applied the semiclassical approach of quantum field theory in curved spacetime to such systems and found that an isolated black hole would emit a form of radiation (now called Hawking radiation in his honor). He also argued that the detailed form of the radiation would be independent of the initial state of the black hole, and depend only on its mass, electric charge and angular momentum. The information paradox appears when one considers a process in which a black hole is formed through a physical process and then evaporates away entirely through Hawking radiation. Hawking's calculation suggests that the final state of radiation would retain information only about ...
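The statement that the radiation depends only on mass, charge and angular momentum is easiest to see in the simplest case. A minimal sketch, assuming an uncharged, non-rotating (Schwarzschild) black hole, whose Hawking temperature T = ħc³/(8πGMk_B) depends on mass alone and falls as 1/M. Constants are CODATA values; the solar mass is approximate.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
C = 299_792_458.0        # speed of light, m/s
G = 6.67430e-11          # Newtonian gravitational constant, m^3/(kg s^2)
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.98847e30       # solar mass, kg (approximate)


def hawking_temperature(mass_kg: float) -> float:
    """Temperature of an uncharged, non-rotating black hole: hbar c^3 / (8 pi G M k_B)."""
    return HBAR * C**3 / (8 * math.pi * G * mass_kg * K_B)


if __name__ == "__main__":
    for solar_masses in (1.0, 10.0):
        t = hawking_temperature(solar_masses * M_SUN)
        print(f"{solar_masses:>5} M_sun -> {t:.2e} K")
```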

Stephen Hawking

Stephen William Hawking (8 January 1942 – 14 March 2018) was an English theoretical physicist, cosmologist, and author who was director of research at the Centre for Theoretical Cosmology at the University of Cambridge. Between 1979 and 2009, he was the Lucasian Professor of Mathematics at Cambridge, widely viewed as one of the most prestigious academic posts in the world. Hawking was born in Oxford into a family of physicians. In October 1959, at the age of 17, he began his university education at University College, Oxford, where he received a first-class honours BA degree in physics. In October 1962, he began his graduate work at Trinity Hall, Cambridge, where, in March 1966, he obtained his PhD in applied mathematics and theoretical physics, specialising in general relativity and cosmology. In 1963, at age 21, Hawking was diagnosed with an early-onset slow-progressing form of motor neurone disease that gradually, over decades, pa ...

Nanotechnology

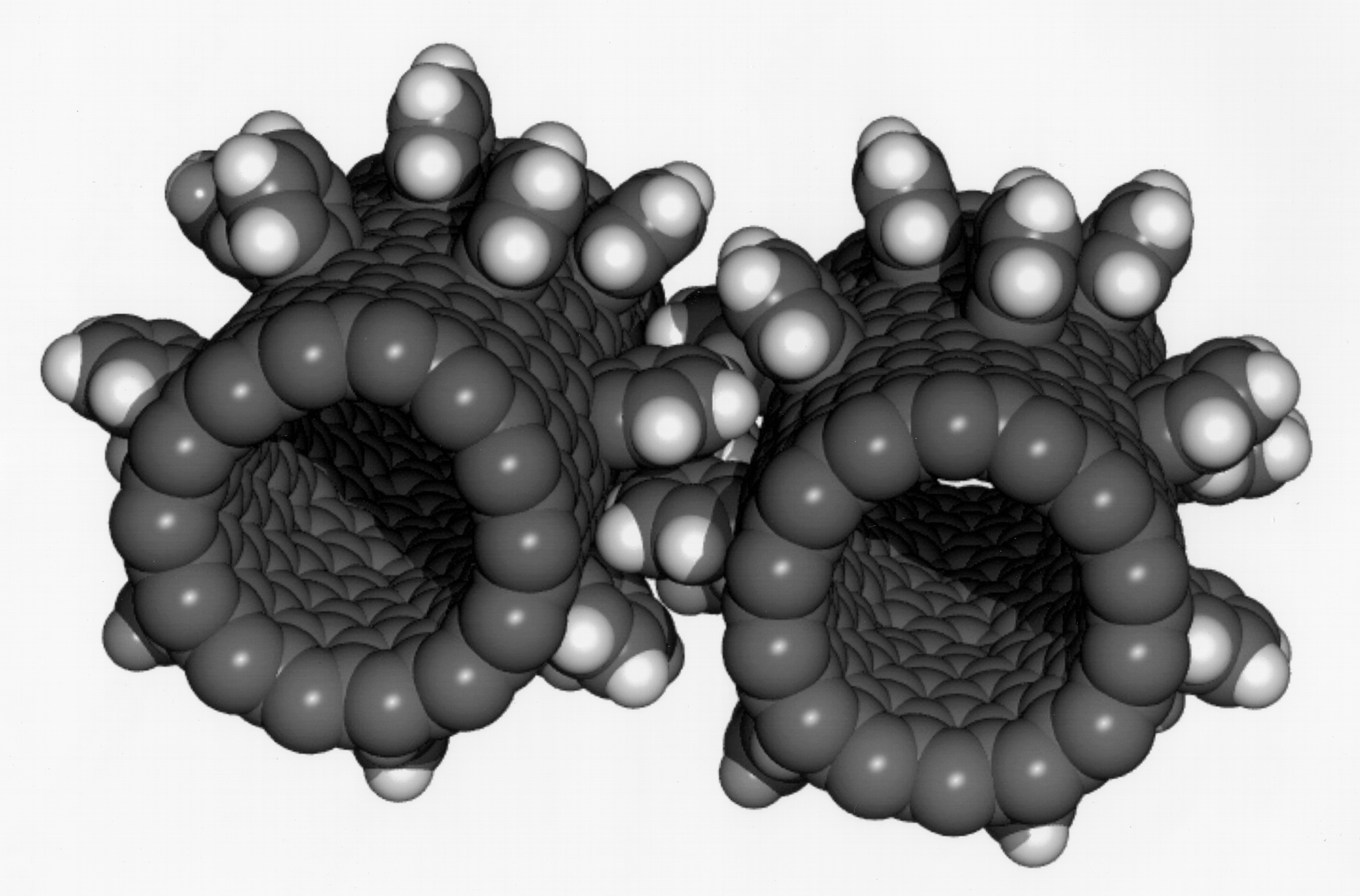

Nanotechnology is the manipulation of matter with at least one dimension sized from 1 to 100 nanometers (nm). At this scale, commonly known as the nanoscale, surface area and quantum mechanical effects become important in describing properties of matter. This definition of nanotechnology includes all types of research and technologies that deal with these special properties. It is common to see the plural form "nanotechnologies" as well as "nanoscale technologies" to refer to research and applications whose common trait is scale. An earlier understanding of nanotechnology referred to the particular technological goal of precisely manipulating atoms and molecules for fabricating macroscale products, now referred to as molecular nanotechnology. Nanotechnology defined by scale includes fields of science such as surface science, organic chemistry, molecular biology, semiconductor physics, energy storage, engineering, microfabrication, and molecular engineering. The associated rese ...
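Why surface area becomes important at the nanoscale can be shown with a simple geometric estimate: the fraction of a sphere's volume that lies within one atomic layer of its surface. This is a rough sketch; the 0.25 nm layer thickness is a hypothetical stand-in for an atomic diameter, and volume fraction is used as a proxy for the fraction of atoms at the surface.

```python
def surface_shell_fraction(radius_m: float, shell_m: float = 0.25e-9) -> float:
    """Fraction of a solid sphere's volume lying within `shell_m` of its surface.

    The 0.25 nm default shell is a hypothetical stand-in for one atomic layer.
    """
    if radius_m <= shell_m:
        return 1.0  # the whole particle counts as "surface"
    return 1.0 - ((radius_m - shell_m) / radius_m) ** 3


if __name__ == "__main__":
    for diameter_nm in (2, 10, 100, 1000):
        r = diameter_nm * 1e-9 / 2
        print(f"{diameter_nm:>5} nm: {surface_shell_fraction(r):.1%} of volume in outer layer")
```

The fraction falls roughly as 1/r, so it is only within the 1 to 100 nm range that a large share of the material sits at the surface.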

Femtotechnology

Femtotechnology is a term used in reference to the hypothetical manipulation of matter on the scale of a femtometer, or 10⁻¹⁵ m. This is three orders of magnitude lower than picotechnology, at the scale of 10⁻¹² m, and six orders of magnitude lower than nanotechnology, at the scale of 10⁻⁹ m.

Theory
Work in the femtometer range involves manipulation of excited energy states within atomic nuclei, specifically nuclear isomers, to produce metastable (or otherwise stabilized) states with unusual properties. In the extreme case, excited states of the individual nucleons that make up the atomic nucleus (protons and neutrons) are considered, ostensibly to tailor the behavioral properties of these particles. The most advanced form of molecular nanotechnology is often imagined to involve self-replicating molecular machines, and there have been some speculations suggesting something similar might be possible with analogues of molecules composed of nucleons rather tha ...
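The scale comparison above is plain arithmetic on powers of ten. A trivial sketch that recovers the stated gaps from the three length scales named in the text; rounding the base-10 logarithm absorbs floating-point error in the ratios.

```python
import math

# Length scales named in the text, in metres.
SCALES_M = {"nano": 1e-9, "pico": 1e-12, "femto": 1e-15}


def orders_of_magnitude(larger: str, smaller: str) -> int:
    """Number of powers of ten separating two named length scales."""
    return round(math.log10(SCALES_M[larger] / SCALES_M[smaller]))


if __name__ == "__main__":
    print(orders_of_magnitude("pico", "femto"))  # 3
    print(orders_of_magnitude("nano", "femto"))  # 6
```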

Computronium

Computronium is a material hypothesized by Norman Margolus and Tommaso Toffoli of MIT in 1991 to be used as "programmable matter", a substrate for computer modeling of virtually any real object. It also refers to an arrangement of matter that is the best possible form of computing device for that amount of matter. In this context, the term can refer both to a theoretically perfect arrangement of hypothetical materials that would have been developed using nanotechnology at the molecular, atomic, or subatomic level (in which case this interpretation of computronium could be unobtainium), and to the best possible achievable form using currently available and used computational materials. According to the Barrow scale, a modified variant of the Kardashev scale created by British physicist John D. Barrow, which is intended to categorize the development stage of extraterrestrial civilizations, it would be conceivable that advanced civilizations do not claim more and more space and res ...

Neutron Star

A neutron star is the gravitationally collapsed core of a massive supergiant star. It results from the supernova explosion of a massive star, combined with gravitational collapse, that compresses the core past white dwarf star density to that of atomic nuclei. Surpassed only by black holes, neutron stars are the second smallest and densest known class of stellar objects. Neutron stars have a radius on the order of 10 kilometres and a mass of about 1.4 solar masses (M☉). Stars that collapse into neutron stars have a total mass of between 10 and 25 solar masses, or possibly more for those that are especially rich in elements heavier than hydrogen and helium. Once formed, neutron stars no longer actively generate heat and cool over time, but they may still evolve further through collisions or accretion. Most of the basic models for these objects imply that they are composed almost entirely o ...
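The claim that the core is compressed to nuclear density can be checked with a uniform-sphere estimate. A minimal sketch, assuming typical values of about 1.4 solar masses and a 10 km radius, and ignoring the strong density gradient inside a real star; the Sun (radius assumed as 6.957 × 10⁸ m) is included for contrast.

```python
import math

M_SUN = 1.98847e30  # solar mass, kg (approximate)


def mean_density(mass_kg: float, radius_m: float) -> float:
    """Mean density of a uniform sphere, kg/m^3."""
    return mass_kg / (4.0 / 3.0 * math.pi * radius_m**3)


if __name__ == "__main__":
    # Assumed typical values: M ~ 1.4 solar masses, R ~ 10 km.
    rho_ns = mean_density(1.4 * M_SUN, 10e3)
    rho_sun = mean_density(M_SUN, 6.957e8)
    print(f"neutron star mean density ~ {rho_ns:.1e} kg/m^3")
    print(f"Sun mean density          ~ {rho_sun:.1e} kg/m^3")
```

The neutron-star figure lands in the 10¹⁷ kg/m³ range, the same order of magnitude as matter inside atomic nuclei, and roughly fourteen orders of magnitude denser than the Sun on average.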

Nucleon

In physics and chemistry, a nucleon is either a proton or a neutron, considered in its role as a component of an atomic nucleus. The number of nucleons in a nucleus defines the atom's mass number. Until the 1960s, nucleons were thought to be elementary particles, not made up of smaller parts. Now they are understood as composite particles, made of three quarks bound together by the strong interaction. The interaction between two or more nucleons is called internucleon interaction or nuclear force, which is also ultimately caused by the strong interaction. (Before the discovery of quarks, the term "strong interaction" referred to just internucleon interactions.) Nucleons sit at the boundary where particle physics and nuclear physics overlap. Particle physics, particularly quantum chromodynamics, provides the fundamental equations that describe the properties of quarks and of the strong interaction. These equations describe quantitatively how quarks can bind together into protons ...
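To make the composite picture concrete, the sketch below sums the quark charges of each nucleon and computes a mass number. It assumes the standard valence quark content (proton uud, neutron udd) and quark charges of +2/3 e and −1/3 e, which the text does not list; exact fractions avoid floating-point rounding.

```python
from fractions import Fraction

# Electric charges of the two lightest quark flavours, in units of the elementary charge e.
QUARK_CHARGE = {"u": Fraction(2, 3), "d": Fraction(-1, 3)}

# Valence quark content of the nucleons.
NUCLEONS = {"proton": "uud", "neutron": "udd"}


def charge(quarks: str) -> Fraction:
    """Total charge of a bound state, summed over its valence quarks."""
    return sum((QUARK_CHARGE[q] for q in quarks), Fraction(0))


def mass_number(protons: int, neutrons: int) -> int:
    """Mass number A = Z + N: the count of nucleons in a nucleus."""
    return protons + neutrons


if __name__ == "__main__":
    for name, quarks in NUCLEONS.items():
        print(name, quarks, charge(quarks))
    print(mass_number(6, 6))  # carbon-12 -> 12
```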

Quantum Well

A quantum well is a potential well with only discrete energy values. The classic model used to demonstrate a quantum well is to confine particles, which were initially free to move in three dimensions, to two dimensions, by forcing them to occupy a planar region. The effects of quantum confinement take place when the quantum well thickness becomes comparable to the de Broglie wavelength of the carriers (generally electrons and holes), leading to energy levels called "energy subbands", i.e., the carriers can only have discrete energy values. The concept of the quantum well was proposed in 1963 independently by Herbert Kroemer and by Zhores Alferov and R.F. Kazarinov (Zh. I. Alferov and R.F. Kazarinov, Authors Certificate 28448 (U.S.S.R.), 1963).

History
The semiconductor quantum well was developed in 1970 by Leo Esaki and Raphael Tsu, who also invented synthetic superlattices. They suggested that a heterostructure made up of alternating thin l ...
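The discrete "energy subbands" can be estimated with the textbook particle-in-a-box model, E_n = n²π²ħ²/(2m*L²). A minimal sketch, assuming an infinitely deep well (real wells have finite barriers, which lowers the levels) and a carrier effective mass m*; the 10 nm width and m* = 0.067 m_e (a value commonly used for electrons in GaAs) are assumed example inputs, not figures from the text.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant, J*s
M_E = 9.1093837015e-31      # electron rest mass, kg
EV = 1.602176634e-19        # joules per electronvolt


def infinite_well_levels(width_m: float, eff_mass_ratio: float,
                         n_levels: int = 3) -> list[float]:
    """Subband edges E_n = n^2 pi^2 hbar^2 / (2 m* L^2), in eV, for an ideal infinite well."""
    m_eff = eff_mass_ratio * M_E
    e1 = (math.pi * HBAR) ** 2 / (2 * m_eff * width_m**2)
    return [n * n * e1 / EV for n in range(1, n_levels + 1)]


if __name__ == "__main__":
    # Assumed example: 10 nm well, effective mass 0.067 m_e.
    for n, e in enumerate(infinite_well_levels(10e-9, 0.067), start=1):
        print(f"E_{n} = {1000 * e:.1f} meV")
```

Because E_n scales as 1/L², halving the well width quadruples every level, which is why confinement effects appear only once the thickness approaches the carrier's de Broglie wavelength.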

Degenerate Star

In astronomy, the term compact object (or compact star) refers collectively to white dwarfs, neutron stars, and black holes. It could also include exotic stars if such hypothetical, dense bodies are confirmed to exist. All compact objects have a high mass relative to their radius, giving them a very high density, compared to ordinary atomic matter. Compact objects are often the endpoints of stellar evolution and, in this respect, are also called stellar remnants. They can also be called dead stars in public communications. The state and type of a stellar remnant depends primarily on the mass of the star that it formed from. The ambiguous term compact object is often used when the exact nature of the star is not known, but evidence suggests that it has a very small radius compared to ordinary stars. A compact object that is not a black hole may be called a degenerate star. In June 2020, astronomers reported narrowing down the source of Fast Radio Bursts (FRBs ...
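"High mass relative to their radius" can be quantified by the dimensionless compactness GM/(Rc²). A rough sketch; the masses and radii below (Sun 1 M☉ at 6.957 × 10⁸ m, white dwarf 0.6 M☉ at 6.4 × 10⁶ m, neutron star 1.4 M☉ at 10 km) are assumed illustrative values, not figures from the text. At the Schwarzschild radius 2GM/c² the compactness is exactly 1/2, which places black holes at the top of the scale.

```python
G = 6.67430e-11        # Newtonian gravitational constant, m^3/(kg s^2)
C = 299_792_458.0      # speed of light, m/s
M_SUN = 1.98847e30     # solar mass, kg (approximate)


def compactness(mass_kg: float, radius_m: float) -> float:
    """Dimensionless compactness GM / (R c^2)."""
    return G * mass_kg / (radius_m * C**2)


def schwarzschild_radius(mass_kg: float) -> float:
    """Event-horizon radius of a non-rotating black hole, 2GM / c^2."""
    return 2 * G * mass_kg / C**2


if __name__ == "__main__":
    # Assumed illustrative values: (mass in solar masses, radius in metres).
    objects = {
        "Sun": (1.0, 6.957e8),
        "white dwarf": (0.6, 6.4e6),
        "neutron star": (1.4, 1.0e4),
    }
    for name, (m, r) in objects.items():
        print(f"{name:>12}: GM/(Rc^2) = {compactness(m * M_SUN, r):.1e}")
    bh_mass = 10 * M_SUN
    print(f"  black hole: GM/(Rc^2) = {compactness(bh_mass, schwarzschild_radius(bh_mass)):.2f}")
```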