Kruskal–Wallis One-way Analysis Of Variance

The Kruskal–Wallis test by ranks, Kruskal–Wallis ''H'' test (named after William Kruskal and W. Allen Wallis; see "Kruskal–Wallis H Test using SPSS Statistics", Laerd Statistics), or one-way ANOVA on ranks is a non-parametric method for testing whether samples originate from the same distribution. It is used for comparing two or more independent samples of equal or different sample sizes. It extends the ...
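As an illustration of the "ANOVA on ranks" idea, the following is a minimal sketch that pools the samples, ranks them, and computes the usual ''H'' statistic from the per-group rank sums. The helper names `average_ranks` and `kruskal_wallis_h` are hypothetical, and the sketch assumes the standard formula without the correction for ties; it is not taken from the sources quoted here.

```python
from itertools import chain


def average_ranks(values):
    """Return 1-based ranks for values, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # mean of the 1-based positions in the run
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def kruskal_wallis_h(*groups):
    """H statistic from pooled ranks (assumption: no tie correction applied)."""
    pooled = list(chain.from_iterable(groups))
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    total = 0.0
    offset = 0
    for group in groups:
        size = len(group)
        rank_sum = sum(ranks[offset:offset + size])  # rank sum of this group
        total += rank_sum ** 2 / size
        offset += size
    return 12.0 * total / (n_total * (n_total + 1)) - 3 * (n_total + 1)


# Three independent samples whose values do not overlap at all.
h = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(round(h, 4))  # 7.2
```

Under the null hypothesis, ''H'' is commonly compared against a chi-squared distribution with (number of groups − 1) degrees of freedom; that step is omitted here to keep the sketch free of external libraries.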

William Kruskal

William Henry Kruskal (October 10, 1919 – April 21, 2005) was an American mathematician and statistician. He is best known for having formulated the Kruskal–Wallis one-way analysis of variance (together with W. Allen Wallis), a widely used nonparametric statistical method.

Biography

Kruskal was born in New York City to a Jewish family, the son of a successful fur wholesaler (University of St Andrews, Scotland, School of Mathematics and Statistics: "William Kruskal" by J.J. O'Connor and E.F. Robertson, November 2006). His mother, Lillian Rose Vorhaus Kr ...

Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if X is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of X would take the value 0.5 (1 in 2 or 1/2) for X = heads, and 0.5 for X = tails (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc.

Introduction

A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by \Omega, is the set of all possible outcomes of a ra ...
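The fair-coin example can be written out as a small sketch. The names `coin_pmf` and `probability` are illustrative, not from the source; the sketch only assumes a finite sample space whose outcome probabilities are stored in a dictionary.

```python
from fractions import Fraction

# Probability of each outcome of a fair coin toss (illustrative names).
coin_pmf = {"heads": Fraction(1, 2), "tails": Fraction(1, 2)}


def probability(event, pmf):
    """Probability of an event, i.e. a subset of the sample space."""
    return sum(pmf[outcome] for outcome in event)


sample_space = set(coin_pmf)
print(probability({"heads"}, coin_pmf))     # 1/2
print(probability(sample_space, coin_pmf))  # 1
print(probability(set(), coin_pmf))         # 0
```

Exact fractions are used instead of floats so that the probabilities over the whole sample space sum to exactly 1.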

Statistical Tests

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters.

History

Early use

While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Modern origins and early controversy

Modern significance testing is largely the product of Karl Pearson (''p''-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted with t ...

Jonckheere's Trend Test

In statistics, the Jonckheere trend test (sometimes called the Jonckheere–Terpstra test) is a test for an ordered alternative hypothesis within an independent samples (between-participants) design. It is similar to the Kruskal–Wallis test in that the null hypothesis is that several independent samples are from the same population. However, with the Kruskal–Wallis test there is no ''a priori'' ordering of the populations from which the samples are drawn. When there is an ''a priori'' ordering, the Jonckheere test has more statistical power than the Kruskal–Wallis test. The test was developed by Aimable Robert Jonckheere, who was a psychologist and statistician at University College London. The null and alternative hypotheses can be conveniently expressed in terms of population medians for ''k'' populations (where ''k'' > 2). Letting \theta_i be the population median for the ''i''th population, the null hypothesis is:

H_0: \theta_1 = \theta_2 = \cdots = \theta_k

The alternati ...

Friedman Test

The Friedman test is a non-parametric statistical test developed by Milton Friedman. Similar to the parametric repeated measures ANOVA, it is used to detect differences in treatments across multiple test attempts. The procedure involves ranking each row (or ''block'') together, then considering the values of ranks by columns. Applicable to complete block designs, it is thus a special case of the Durbin test. Classic examples of use are:
* ''n'' wine judges each rate ''k'' different wines. Are any of the ''k'' wines ranked consistently higher or lower than the others?
* ''n'' welders each use ''k'' welding torches, and the ensuing welds were rated on quality. Do any of the ''k'' torches produce consistently better or worse welds?

The Friedman test is used for one-way repeated measures analysis of variance by ranks. In its use of ranks it is similar to the Kruskal–Wallis one-way analysis of variance by ranks. The Friedman test is widely supported by many statistical softwar ...
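The row-then-column ranking procedure can be sketched as follows. The helpers `rank_row` and `friedman_statistic` are hypothetical names, the wine scores are made-up illustration data, and the statistic is assumed to be the standard Friedman chi-square form without a tie correction.

```python
def rank_row(row):
    """Rank one block's scores from 1 to k, averaging ranks over ties."""
    ranks = []
    for x in row:
        smaller = sum(1 for y in row if y < x)
        equal = sum(1 for y in row if y == x)
        # Tied values occupy positions smaller+1 .. smaller+equal; use their mean.
        ranks.append(smaller + (equal + 1) / 2)
    return ranks


def friedman_statistic(table):
    """Friedman statistic for n rows (blocks) by k columns (treatments)."""
    n = len(table)
    k = len(table[0])
    ranked = [rank_row(row) for row in table]           # rank within each row
    column_sums = [sum(r[j] for r in ranked) for j in range(k)]  # then sum by column
    return 12.0 * sum(s ** 2 for s in column_sums) / (n * k * (k + 1)) - 3 * n * (k + 1)


# Three judges (rows) score three wines (columns); every judge prefers them in the same order.
scores = [[6.0, 7.5, 9.0], [5.0, 6.0, 8.5], [4.0, 7.0, 7.5]]
print(friedman_statistic(scores))  # 6.0
```

With perfect agreement among the judges the column rank sums are as spread out as possible (3, 6, 9), which gives the largest statistic attainable for this table size; if the rank sums were all equal the statistic would be 0.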

Communications In Statistics

''Communications in Statistics'' is a peer-reviewed scientific journal that publishes papers related to statistics. It is published by Taylor & Francis in three series, ''Theory and Methods'', ''Simulation and Computation'', and ''Case Studies, Data Analysis and Applications''.

''Communications in Statistics – Theory and Methods''

This series started publishing in 1970 and publishes papers related to statistical theory and methods. It publishes 20 issues each year. Based on Web of Science, the five most cited papers in the journal are:
* Kulldorff M. A spatial scan statistic, 1997, 982 cites.
* Holland PW, Welsch RE. Robust regression using iteratively reweighted least-squares, 1977, 526 cites.
* Sugiura N. Further analysts of the data by Akaike's information criterion and the finite corrections, 1978, 490 cites.
* Hosmer DW, Lemeshow S. Goodness of fit tests for the multiple logistic regression model, 1980, 401 cites.
* Iman RL, Conover WJ. Small sample sensitivity analysis ...

Multiple Comparisons Problem

In statistics, the multiple comparisons, multiplicity or multiple testing problem occurs when one considers a set of statistical inferences simultaneously or infers a subset of parameters selected based on the observed values. The more inferences are made, the more likely erroneous inferences become. Several statistical techniques have been developed to address that problem, typically by requiring a stricter significance threshold for individual comparisons, so as to compensate for the number of inferences being made.

History

The problem of multiple comparisons received increased attention in the 1950s with the work of statisticians such as Tukey and Scheffé. Over the ensuing decades, many procedures were developed to address the problem. In 1996, the first international conference on multiple comparison procedures took place in Israel.

Definition

Multiple comparisons arise when a statistical analysis involves multiple simultaneous statistical tests, each of which has a potent ...
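To see why more inferences make erroneous inferences more likely, the sketch below computes the chance of at least one false positive across ''m'' tests and one well-known stricter threshold, the Bonferroni correction. The function names are hypothetical, and the first function assumes the tests are independent, which real analyses often are not.

```python
def familywise_error_rate(alpha, m):
    """P(at least one false positive) in m independent tests, each at level alpha."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_threshold(alpha, m):
    """Stricter per-comparison threshold: divide the overall level by the number of tests."""
    return alpha / m


alpha = 0.05
for m in (1, 5, 20):
    # Columns: number of tests, uncorrected family-wise rate, corrected per-test threshold.
    print(m, round(familywise_error_rate(alpha, m), 3), bonferroni_threshold(alpha, m))
```

Applying the corrected threshold to each of the ''m'' tests keeps the family-wise rate at or below the original level, at the cost of reduced power for each individual comparison.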

Statistical Significance

In statistical hypothesis testing, a result has statistical significance when it is very unlikely to have occurred given the null hypothesis (simply by chance alone). More precisely, a study's defined significance level, denoted by \alpha, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the ''p''-value of a result, ''p'', is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is statistically significant, by the standards of the study, when p \le \alpha. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the ''p''-value of an observed effect is less than (or equal to) the significan ...

Degrees Of Freedom (statistics)

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter are equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself. For example, if the variance is to be estimated from a random sample of ''N'' independent scores, then the degrees of freedom is equal to the number of independent scores (''N'') minus the number of parameters estimated as intermediate steps (one, namely, the sample mean) and is therefore equal to ''N'' − 1. Mathematically, degrees of freedom is the number of dimensions of the domain ...
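The variance example can be made concrete with a short sketch. The function `sample_variance` and the score list are illustrative; the only library call used is `statistics.variance` from the Python standard library, which also divides by ''N'' − 1.

```python
import statistics


def sample_variance(scores):
    """Variance estimate: squared deviations divided by N - 1 degrees of freedom."""
    n = len(scores)
    mean = sum(scores) / n        # the one parameter estimated as an intermediate step
    degrees_of_freedom = n - 1    # N independent scores minus that one parameter
    return sum((x - mean) ** 2 for x in scores) / degrees_of_freedom


scores = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
# The mean is 5 and the squared deviations sum to 32, so the estimate is 32 / 7.
print(sample_variance(scores))
print(statistics.variance(scores))  # stdlib sample variance, for comparison
```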

Chi-squared Distribution

In probability theory and statistics, the chi-squared distribution (also chi-square or \chi^2-distribution) with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. The chi-squared distribution is a special case of the gamma distribution and is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing and in construction of confidence intervals. This distribution is sometimes called the central chi-squared distribution, a special case of the more general noncentral chi-squared distribution. The chi-squared distribution is used in the common chi-squared tests for goodness of fit of an observed distribution to a theoretical one, the independence of two criteria of classification of qualitative data, and in confidence interval estimation for a population standard deviation of a normal distribution from a sample standard deviation. Many other statistical tes ...
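The defining construction, a sum of squares of k independent standard normal variables, can be simulated directly. This is a rough sketch with a hypothetical helper `chi_squared_draw` and an arbitrary seed and sample count; it checks only that the sample mean lands near k, which is the mean of the distribution.

```python
import random


def chi_squared_draw(k, rng):
    """One draw: sum of the squares of k independent standard normal variables."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))


rng = random.Random(0)  # fixed seed so the run is repeatable
k = 3
draws = [chi_squared_draw(k, rng) for _ in range(20000)]
sample_mean = sum(draws) / len(draws)
print(sample_mean)      # expected to be close to k
```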

Non-parametric Statistics

Nonparametric statistics is the branch of statistics that is not based solely on parametrized families of probability distributions (common examples of parameters are the mean and variance). Nonparametric statistics is based on either being distribution-free or having a specified distribution but with the distribution's parameters unspecified. Nonparametric statistics includes both descriptive statistics and statistical inference. Nonparametric tests are often used when the assumptions of parametric tests are violated.

Definitions

The term "nonparametric statistics" has been imprecisely defined in the following two ways, among others:

Applications and purpose

Non-parametric methods are widely used for studying populations that take on a ranked order (such as movie reviews receiving one to four stars). The use of non-parametric methods may be necessary when data have a ranking but no clear numerical interpretation, such as when assessing preferences. In terms of levels of me ...

Normal Distribution

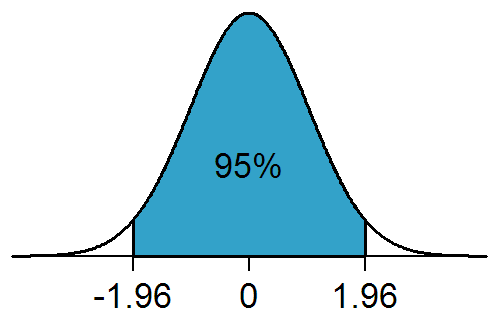

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}

The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma is its standard deviation. The variance of the distribution is \sigma^2. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution converges to a normal dist ...
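A minimal sketch of evaluating the normal density with mean \mu and standard deviation \sigma follows. The function name `normal_pdf` is hypothetical; `statistics.NormalDist` is the Python standard library class, used here only as a cross-check.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma  # standardized distance from the mean
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


print(normal_pdf(0.0))                         # peak of the standard normal, 1 / sqrt(2 * pi)
print(NormalDist(mu=1.0, sigma=2.0).pdf(2.5))  # stdlib reference value
print(normal_pdf(2.5, mu=1.0, sigma=2.0))      # should agree with the line above
```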