
Kosambi–Karhunen–Loève Theorem

In the theory of stochastic processes, the Karhunen–Loève theorem (named after Kari Karhunen and Michel Loève), also known as the Kosambi–Karhunen–Loève theorem, states that a stochastic process can be represented as an infinite linear combination of orthogonal functions, analogous to a Fourier series representation of a function on a bounded interval. The transformation is also known as the Hotelling transform and the eigenvector transform, and is closely related to the principal component analysis (PCA) technique widely used in image processing and in data analysis in many fields. There exist many such expansions of a stochastic process: if the process is indexed over $[a, b]$, any orthonormal basis of $L^2([a, b])$ yields an expansion thereof in that form. The importance of the Karhunen–Loève theorem is that it yields the best such basis in the sense that it minimizes the total mean squared error. In contrast to a Fourier series where the coefficients are fixed numbers and the expansion basis consi ...
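
For concreteness, the expansion can be written out as below. This is a sketch under the usual assumptions, none of which are spelled out in the excerpt: $X_t$ is taken to be a zero-mean, square-integrable process on $[a, b]$ with continuous covariance function $K_X(s, t)$, and $e_k$, $\lambda_k$ denote the orthonormal eigenfunctions and eigenvalues of the associated covariance operator. All of these symbols are introduced here for illustration.

```latex
\begin{align}
X_t &= \sum_{k=1}^{\infty} Z_k \, e_k(t),
&
Z_k &= \int_a^b X_t \, e_k(t) \, dt,
\\
\int_a^b K_X(s, t) \, e_k(s) \, ds &= \lambda_k \, e_k(t),
&
\mathbb{E}[Z_i Z_j] &= \delta_{ij} \, \lambda_j .
\end{align}
```

In this form the basis functions $e_k$ are deterministic, while the coefficients $Z_k$ are zero-mean random variables that are pairwise uncorrelated with variances $\lambda_k$.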

Stochastic Process

In probability theory and related fields, a stochastic or random process is a mathematical object usually defined as a family of random variables. Stochastic processes are widely used as mathematical models of systems and phenomena that appear to vary in a random manner. Examples include the growth of a bacterial population, an electrical current fluctuating due to thermal noise, or the movement of a gas molecule. Stochastic processes have applications in many disciplines such as biology, chemistry, ecology, neuroscience, physics, image processing, signal processing, control theory, information theory, computer science, cryptography and telecommunications. Furthermore, seemingly random changes in financial markets have motivated the extensive use of stochastic processes in finance. Applications and the study of phenomena have in turn inspired the proposal of new stochastic processes. Examples of such stochastic processes include the Wiener process or Brownian motion process, ...

Stochastically Independent

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence, ...
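
The distinction between pairwise and mutual independence can be illustrated with a small finite example. The sketch below assumes two fair coin tosses with equally likely outcomes; the events `A`, `B`, `C` and the helper names `prob` and `independent` are chosen here for illustration and do not come from the text above.

```python
from fractions import Fraction
from itertools import product

# Sample space: two fair coin tosses, all four outcomes equally likely (assumption).
OUTCOMES = list(product("HT", repeat=2))


def prob(event):
    """Probability of an event (a set of outcomes) under the uniform measure."""
    return Fraction(len(event), len(OUTCOMES))


def independent(*events):
    """True if P(intersection of events) equals the product of their probabilities."""
    joint = set(OUTCOMES).intersection(*events)
    expected = Fraction(1)
    for event in events:
        expected *= prob(event)
    return prob(joint) == expected


A = {o for o in OUTCOMES if o[0] == "H"}   # first toss is heads
B = {o for o in OUTCOMES if o[1] == "H"}   # second toss is heads
C = {o for o in OUTCOMES if o[0] == o[1]}  # both tosses show the same face

# Pairwise independence: every pair of events factorizes.
pairwise = independent(A, B) and independent(A, C) and independent(B, C)

# Mutual independence of three events additionally needs the triple to factorize.
mutual = pairwise and independent(A, B, C)

print("pairwise independent:", pairwise)
print("mutually independent:", mutual)
```

Here every pair of events factorizes, but the triple intersection has probability 1/4 rather than 1/8, so the three events are pairwise independent without being mutually independent.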

Convergence Of Random Variables

In probability theory, there exist several different notions of convergence of random variables. The convergence of sequences of random variables to some limit random variable is an important concept in probability theory, and its applications to statistics and stochastic processes. The same concepts are known in more general mathematics as stochastic convergence and they formalize the idea that a sequence of essentially random or unpredictable events can sometimes be expected to settle down into a behavior that is essentially unchanging when items far enough into the sequence are studied. The different possible notions of convergence relate to how such a behavior can be characterized: two readily understood behaviors are that the sequence eventually takes a constant value, and that values in the sequence continue to change but can be described by an unchanging probability distribution. ...

Mercer's Theorem

In mathematics, specifically functional analysis, Mercer's theorem is a representation of a symmetric positive-definite function on a square as a sum of a convergent sequence of product functions. This theorem is one of the most notable results of the work of James Mercer (1883–1932). It is an important theoretical tool in the theory of integral equations; it is used in the Hilbert space theory of stochastic processes, for example the Karhunen–Loève theorem; and it is also used to characterize a symmetric positive semi-definite kernel. To explain Mercer's theorem, we first consider an important special case; see below for a more general formulation. A kernel, in this context, is a symmetric continuous function $K: [a,b] \times [a,b] \rightarrow \mathbb{R}$, where symmetric means that $K(x,y) = K(y,x)$ for all $x, y \in [a,b]$. $K$ is said to be non-negative definite (or positive semidefinite) if and only if $\sum_{i=1}^n \sum_{j=1}^n K(x_i, x_j) c_i$ ...
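
The non-negative definiteness condition can be spot-checked numerically. The sketch below assumes the standard form of the condition, namely that the double sum $\sum_i \sum_j K(x_i, x_j) c_i c_j$ is non-negative for all finite point sets and real coefficients, and uses $K(x, y) = \min(x, y)$ on $[0, 1]$ as an example kernel. Both the kernel choice and the function names are assumptions made here for illustration; a random check of this kind can only fail to find a counterexample, it does not prove the property.

```python
import random


def kernel(x, y):
    """Example kernel K(x, y) = min(x, y) on [0, 1]; symmetric and continuous."""
    return min(x, y)


def quadratic_form(points, coeffs):
    """Evaluate the double sum of K(x_i, x_j) * c_i * c_j over all index pairs."""
    return sum(
        kernel(xi, xj) * ci * cj
        for xi, ci in zip(points, coeffs)
        for xj, cj in zip(points, coeffs)
    )


rng = random.Random(0)  # fixed seed so the run is reproducible
values = []
for _ in range(200):
    n = rng.randint(1, 8)
    points = [rng.random() for _ in range(n)]            # x_i in [0, 1)
    coeffs = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # real c_i
    values.append(quadratic_form(points, coeffs))

# For a non-negative definite kernel this should never be meaningfully below zero.
smallest = min(values)
print("smallest quadratic form found:", smallest)
```

The tolerance needed when interpreting `smallest` is only for floating-point rounding; the exact value of the double sum for this kernel is never negative.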

Integral Equation

In mathematics, integral equations are equations in which an unknown function appears under an integral sign. In mathematical notation, integral equations may thus be expressed as being of the form $f(x_1,x_2,x_3,\ldots,x_n ; u(x_1,x_2,x_3,\ldots,x_n) ; I^1(u), I^2(u), I^3(u), \ldots, I^m(u)) = 0$, where $I^i(u)$ is an integral operator acting on $u$. Hence, integral equations may be viewed as the analog to differential equations where instead of the equation involving derivatives, the equation contains integrals. A direct comparison can be made between the mathematical form of the general integral equation above and the general form of a differential equation, which may be expressed as follows: $f(x_1,x_2,x_3,\ldots,x_n ; u(x_1,x_2,x_3,\ldots,x_n) ; D^1(u), D^2(u), D^3(u), \ldots, D^m(u)) = 0$, where $D^i(u)$ may be viewed as a differential operator of order $i$. Due to this close connection between differential and integral equations, one can often convert between the two. For examp ...

Hilbert–Schmidt Integral Operator

In mathematics, a Hilbert–Schmidt integral operator is a type of integral transform. Specifically, given a domain (an open and connected set) $\Omega$ in $n$-dimensional Euclidean space $\mathbb{R}^n$, a Hilbert–Schmidt kernel is a function $k : \Omega \times \Omega \to \mathbb{C}$ with $\int_{\Omega} \int_{\Omega} |k(x, y)|^{2} \,dx \,dy < \infty$ (that is, the $L^2(\Omega \times \Omega; \mathbb{C})$ norm of $k$ is finite), and the associated Hilbert–Schmidt integral operator is the operator $K : L^2(\Omega; \mathbb{C}) \to L^2(\Omega; \mathbb{C})$ given by $(Ku)(x) = \int_{\Omega} k(x, y) u(y) \,dy$. Then $K$ is a Hilbert–Schmidt operator with Hilbert–Schmidt norm $\|K\|_{\mathrm{HS}} = \|k\|_{L^2}$. Hilbert–Schmidt integral ...

Linear Operator

In mathematics, and more specifically in linear algebra, a linear map (also called a linear mapping, linear transformation, vector space homomorphism, or in some contexts linear function) is a mapping $V \to W$ between two vector spaces that preserves the operations of vector addition and scalar multiplication. The same names and the same definition are also used for the more general case of modules over a ring; see Module homomorphism. If a linear map is a bijection then it is called a linear isomorphism. In the case where $V = W$, a linear map is called a (linear) endomorphism. Sometimes the term linear operator refers to this case, but the term "linear operator" can have different meanings for different conventions: for example, it can be used to emphasize that $V$ and $W$ are real vector spaces (not necessarily with $V = W$), or it can be used to emphasize that $V$ is a function space, which is a common convention in functional analysis. Sometimes the term linear function has the same meaning as linear map ...

Square-integrable Function

In mathematics, a square-integrable function, also called a quadratically integrable function or $L^2$ function or square-summable function, is a real- or complex-valued measurable function for which the integral of the square of the absolute value is finite. Thus, square-integrability on the real line $(-\infty,+\infty)$ is defined as follows: $f$ is square integrable if and only if $\int_{-\infty}^{\infty} |f(x)|^2 \,dx < \infty$. One may also speak of quadratic integrability over bounded intervals such as $[a,b]$ for $a \leq b$. An equivalent definition is to say that the square of the function itself (rather than of its absolute value) is Lebesgue integrable. For this to be true, the integrals of the positive and negative portions of the real part must both be finite, as well as those for the imaginary part. The vector space of square integrable functions (with respect to Lebesgue measure) forms the $L^p$ space with $p=2$. Among the $L^p$ spaces, the class of square integrable functions is unique in being compatible with an inner product, which allows notions lik ...

Probability Space

In probability theory, a probability space or a probability triple $(\Omega, \mathcal{F}, P)$ is a mathematical construct that provides a formal model of a random process or "experiment". For example, one can define a probability space which models the throwing of a die. A probability space consists of three elements (Stroock, D. W. (1999). Probability theory: an analytic view. Cambridge University Press): (1) a sample space, $\Omega$, which is the set of all possible outcomes; (2) an event space, which is a set of events $\mathcal{F}$, an event being a set of outcomes in the sample space; (3) a probability function, which assigns each event in the event space a probability, which is a number between 0 and 1. In order to provide a sensible model of probability, these elements must satisfy a number of axioms, detailed in this article. In the example of the throw of a standard die, we would take the sample space to be $\{1,2,3,4,5,6\}$. For the event space, we could simply use the set of all subsets of the sample ...
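
The die example can be made concrete with a few lines of code. This is a minimal sketch that assumes a fair die, so that every outcome has probability 1/6; the names `sample_space`, `event_space` and `probability` are illustrative choices, not notation from the text.

```python
from fractions import Fraction
from itertools import combinations

# Sample space: the six faces of a standard die.
sample_space = frozenset(range(1, 7))


def power_set(outcomes):
    """Return the set of all subsets of the given outcomes."""
    items = sorted(outcomes)
    return {
        frozenset(subset)
        for size in range(len(items) + 1)
        for subset in combinations(items, size)
    }


# Event space: every subset of the sample space is an event.
event_space = power_set(sample_space)


def probability(event):
    """Probability of an event, assuming a fair die (uniform measure)."""
    if event not in event_space:
        raise ValueError("not an event in this probability space")
    return Fraction(len(event), len(sample_space))


even = frozenset({2, 4, 6})
print("number of events:", len(event_space))
print("P(even) =", probability(even))
print("P(sample space) =", probability(sample_space))
```

With six outcomes the event space has $2^6 = 64$ elements, and the probability function assigns 1 to the whole sample space and 0 to the empty event, as the axioms require.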

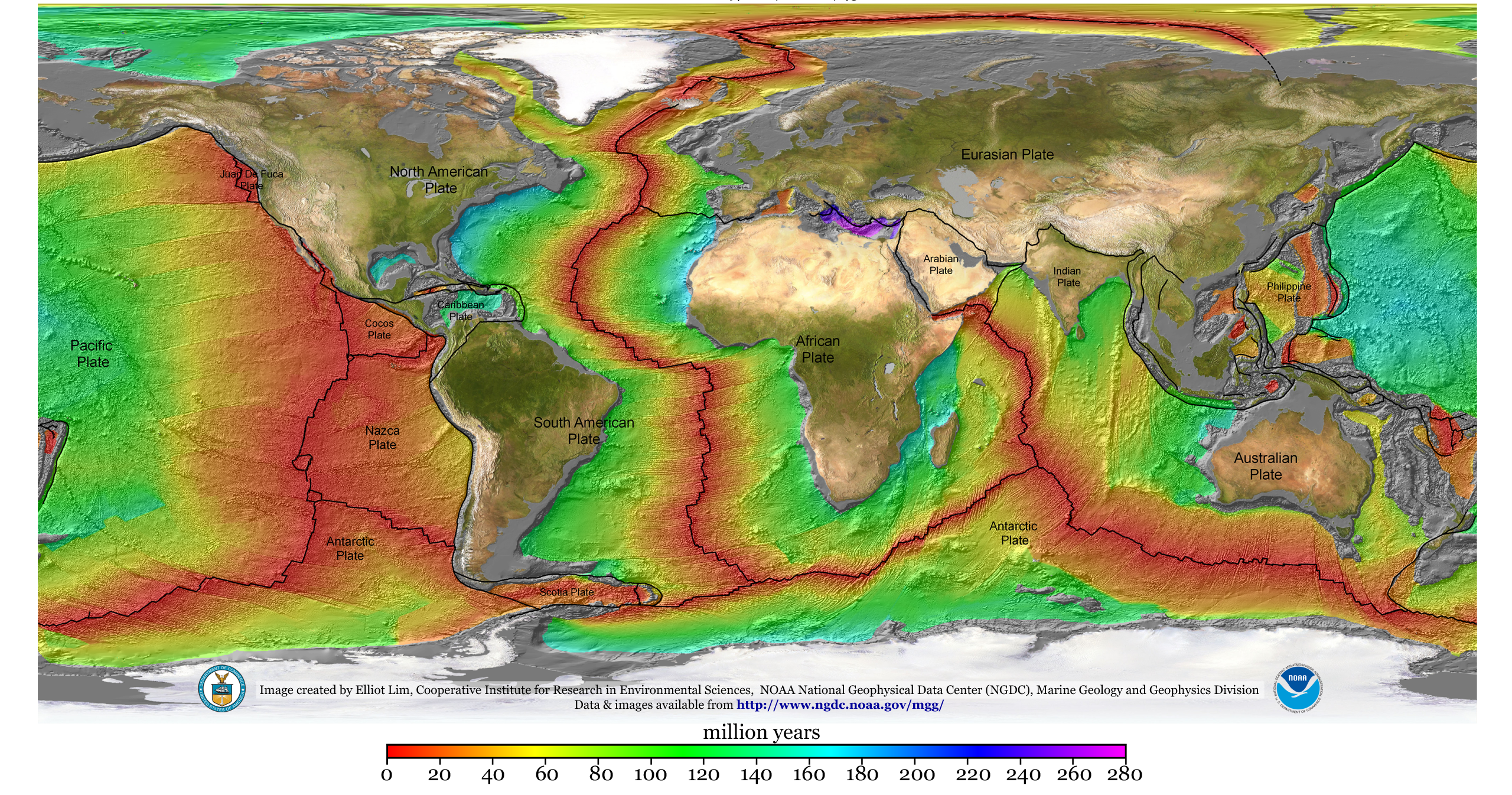

Geophysics

Geophysics is a subject of natural science concerned with the physical processes and physical properties of the Earth and its surrounding space environment, and the use of quantitative methods for their analysis. The term geophysics sometimes refers only to solid earth applications: Earth's shape; its gravitational and magnetic fields; its internal structure and composition; its dynamics and their surface expression in plate tectonics, the generation of magmas, volcanism and rock formation. However, modern geophysics organizations and pure scientists use a broader definition that includes the water cycle including snow and ice; fluid dynamics of the oceans and the atmosphere; electricity and magnetism in the ionosphere and magnetosphere and solar-terrestrial physics; and analogous problems associated with the Moon and other planets (Gutenberg, B., 1929, Lehrbuch der Geophysik, Leipzig, Berlin: Gebrüder Borntraeger; Runcorn, S.K. (editor-in-chief), 1967, International ...).

Meteorology

Meteorology is a branch of the atmospheric sciences (which include atmospheric chemistry and physics) with a major focus on weather forecasting. The study of meteorology dates back millennia, though significant progress in meteorology did not begin until the 18th century. The 19th century saw modest progress in the field after weather observation networks were formed across broad regions. Prior attempts at prediction of weather depended on historical data. It was not until after the elucidation of the laws of physics and, more particularly, the development of the computer in the latter half of the 20th century (allowing for the automated solution of a great many modelling equations) that significant breakthroughs in weather forecasting were achieved. An important branch of weather forecasting is marine weather forecasting as it relates to maritime and coastal safety, in which weather effects also include atmospheric interactions with large bodies of water. Meteorological pheno ...

Empirical Orthogonal Functions

In statistics and signal processing, the method of empirical orthogonal function (EOF) analysis is a decomposition of a signal or data set in terms of orthogonal basis functions which are determined from the data. The term is also interchangeable with the geographically weighted principal components analysis in geophysics. The $i$-th basis function is chosen to be orthogonal to the basis functions from the first through $i - 1$, and to minimize the residual variance. That is, the basis functions are chosen to be different from each other, and to account for as much variance as possible. The method of EOF analysis is similar in spirit to harmonic analysis, but harmonic analysis typically uses predetermined orthogonal functions, for example, sine and cosine functions at fixed frequencies. In some cases the two methods may yield essentially the same results. The basis functions are typically found by computing the eigenvectors of the covariance matrix of the data set. A m ...
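
The eigenvector computation mentioned above can be sketched in a few lines. The example below builds the sample covariance matrix of a tiny made-up data set (rows are time samples, columns are spatial points) and extracts only the leading EOF by power iteration, which is a simple stand-in chosen here for a full eigendecomposition. The data values and the function names are hypothetical.

```python
import math


def covariance_matrix(data):
    """Sample covariance matrix of the columns of data (rows are observations)."""
    n, m = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(m)]
    centered = [[row[j] - means[j] for j in range(m)] for row in data]
    return [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(m)]
        for i in range(m)
    ]


def mat_vec(matrix, vector):
    """Multiply a square matrix by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def leading_eof(cov, iterations=100):
    """Leading eigenpair of cov by power iteration (assumes a unique top eigenvalue)."""
    m = len(cov)
    v = [1.0 / math.sqrt(m)] * m  # unit-norm starting vector
    for _ in range(iterations):
        w = mat_vec(cov, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient gives the variance explained by this pattern.
    eigenvalue = sum(x * y for x, y in zip(v, mat_vec(cov, v)))
    return eigenvalue, v


# Made-up data: 4 time samples at 3 spatial points, dominated by the pattern (1, 2, 2).
data = [
    [5.0, 4.0, 7.0],
    [-1.0, -8.0, -5.0],
    [1.0, 8.0, 5.0],
    [-5.0, -4.0, -7.0],
]

cov = covariance_matrix(data)
variance, eof1 = leading_eof(cov)
total_variance = sum(cov[i][i] for i in range(len(cov)))
print("leading EOF:", eof1)
print("fraction of variance explained:", variance / total_variance)
```

The returned vector is the spatial pattern that accounts for the largest share of the variance; further EOFs would be obtained from the remaining eigenvectors, each orthogonal to the ones before it.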