
Kőnig's Theorem (Graph Theory)

In the mathematical area of graph theory, Kőnig's theorem, proved by Dénes Kőnig (1931), describes an equivalence between the maximum matching problem and the minimum vertex cover problem in bipartite graphs. It was discovered independently, also in 1931, by Jenő Egerváry in the more general case of weighted graphs. Setting. A vertex cover in a graph is a set of vertices that includes at least one endpoint of every edge, and a vertex cover is minimum if no other vertex cover has fewer vertices. A matching in a graph is a set of edges no two of which share an endpoint, and a matching is maximum if no other matching has more edges. It follows from the definitions that any vertex cover must be at least as large as any matching, since the edges of a matching share no endpoints and each of them therefore needs its own vertex in the cover. In particular, a minimum vertex cover is at least as large as a maximum matching. Kőnig's theorem states that, in any bipartite graph, the minimum vertex co ...
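
The equality described above can be checked on a small bipartite graph. The following is a minimal sketch, not taken from the source: it assumes the left and right vertices are numbered from 0 and that `adj[u]` lists the right-side neighbors of left vertex `u`. The function names `max_matching` and `min_vertex_cover` are illustrative. The matching is built with simple augmenting paths, and the cover is derived from it with the standard alternating-path construction.

```python
def max_matching(adj, n_left, n_right):
    """Maximum bipartite matching via repeated augmenting-path search."""
    match_right = [-1] * n_right  # match_right[v] = left partner of v, or -1

    def try_augment(u, seen):
        # Try to match left vertex u, re-routing earlier matches if needed.
        for v in adj[u]:
            if v in seen:
                continue
            seen.add(v)
            if match_right[v] == -1 or try_augment(match_right[v], seen):
                match_right[v] = u
                return True
        return False

    for u in range(n_left):
        try_augment(u, set())
    return match_right


def min_vertex_cover(adj, n_left, n_right):
    """Return (matching, cover_left, cover_right) for a bipartite graph."""
    match_right = max_matching(adj, n_left, n_right)
    match_left = [-1] * n_left
    for v, u in enumerate(match_right):
        if u != -1:
            match_left[u] = v

    # Collect vertices reachable from unmatched left vertices by paths that
    # alternate between non-matching and matching edges.
    visited_left = {u for u in range(n_left) if match_left[u] == -1}
    visited_right = set()
    stack = list(visited_left)
    while stack:
        u = stack.pop()
        for v in adj[u]:
            if v not in visited_right and match_left[u] != v:
                visited_right.add(v)
                w = match_right[v]
                if w != -1 and w not in visited_left:
                    visited_left.add(w)
                    stack.append(w)

    # Cover = unvisited left vertices plus visited right vertices.
    cover_left = set(range(n_left)) - visited_left
    matching = [(u, v) for v, u in enumerate(match_right) if u != -1]
    return matching, cover_left, visited_right


adj = [[0], [0], [0, 1]]  # left vertices 0..2, right vertices 0..1
matching, cover_left, cover_right = min_vertex_cover(adj, 3, 2)
print(len(matching), len(cover_left) + len(cover_right))
```

On this example the matching and the cover have the same number of elements, as the theorem predicts for bipartite graphs.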

Linear Program

Linear programming (LP), also called linear optimization, is a method to achieve the best outcome (such as maximum profit or lowest cost) in a mathematical model whose requirements are represented by linear relationships. Linear programming is a special case of mathematical programming (also known as mathematical optimization). More formally, linear programming is a technique for the optimization of a linear objective function, subject to linear equality and linear inequality constraints. Its feasible region is a convex polytope, which is a set defined as the intersection of finitely many half-spaces, each of which is defined by a linear inequality. Its objective function is a real-valued affine (linear) function defined on this polytope. A linear programming algorithm finds a point in the polytope where this function has the smallest (or largest) value if such a point exists. Linear programs are problems that can be expressed in canonical form as ...

International Congress Of Mathematicians

The International Congress of Mathematicians (ICM) is the largest conference for the topic of mathematics. It meets once every four years, hosted by the International Mathematical Union (IMU). The Fields Medals, the Nevanlinna Prize (to be renamed as the IMU Abacus Medal), the Gauss Prize, and the Chern Medal are awarded during the congress's opening ceremony. Each congress is memorialized by a printed set of Proceedings recording academic papers based on invited talks intended to be relevant to current topics of general interest. Being invited to talk at the ICM has been called "the equivalent ... of an induction to a hall of fame". History. Felix Klein and Georg Cantor are credited with putting forward the idea of an international congress of mathematicians in the 1890s (A. John Coleman, "Mathematics without borders": a book review, CMS Notes, vol. 31, no. 3, April 1999, pp. 3-5). The University of Chicago, which had opened in 1892, organized an International Mathematical Con ...

Perfect Matching

In graph theory, a perfect matching in a graph is a matching that covers every vertex of the graph. More formally, given a graph $G = (V, E)$, a perfect matching in $G$ is a subset $M$ of the edge set $E$, such that every vertex in the vertex set $V$ is incident to exactly one edge in $M$. A perfect matching is also called a 1-factor; see Graph factorization for an explanation of this term. In some literature, the term complete matching is used. Every perfect matching is a maximum-cardinality matching, but the converse is not true. For example, consider the following graphs. In graph (b) there is a perfect matching (of size 3) since all 6 vertices are matched; in graphs (a) and (c) there is a maximum-cardinality matching (of size 2) which is not perfect, since some vertices are unmatched. A perfect matching is also a minimum-size edge cover. If there is a perfect matching, then both the matching number and the edge cover number equal $|V|/2$. A perfect matching can only occur when the graph has an even n ...
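
The definition can be illustrated with a brute-force search. This is a minimal sketch for small graphs only (it takes exponential time), assuming vertices are numbered `0..n-1`, edges are given as pairs, and there are no self-loops. The function name `perfect_matching` is illustrative.

```python
def perfect_matching(n, edges):
    """Return a perfect matching as a list of edges, or None if none exists."""
    if n % 2:  # an odd number of vertices can never be perfectly matched
        return None
    adj = {v: set() for v in range(n)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)

    def solve(unmatched):
        if not unmatched:
            return []
        u = min(unmatched)  # the lowest unmatched vertex must get a partner
        for v in sorted(adj[u] & unmatched):
            rest = solve(unmatched - {u, v})
            if rest is not None:
                return [(u, v)] + rest
        return None

    return solve(frozenset(range(n)))


cycle6 = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0)]
star = [(0, 1), (0, 2), (0, 3)]
print(perfect_matching(6, cycle6))  # a matching with 6 / 2 = 3 edges
print(perfect_matching(4, star))    # None: the center can match only one leaf
```

The star shows that an even vertex count is necessary but not sufficient for a perfect matching.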

Regular Graph

In graph theory, a regular graph is a graph where each vertex has the same number of neighbors; i.e. every vertex has the same degree or valency. A regular directed graph must also satisfy the stronger condition that the indegree and outdegree of each vertex are equal to each other. A regular graph with vertices of degree $k$ is called a $k$-regular graph or regular graph of degree $k$. Also, from the handshaking lemma, a regular graph contains an even number of vertices with odd degree. Regular graphs of degree at most 2 are easy to classify: a 0-regular graph consists of disconnected vertices, a 1-regular graph consists of disconnected edges, and a 2-regular graph consists of a disjoint union of cycles and infinite chains. A 3-regular graph is known as a cubic graph. A strongly regular graph is a regular graph where every adjacent pair of vertices has the same number of neighbors in common, and every non-adjacent pair of vertices has the same number of neighbors in common. The smallest graphs that are regular but not ...
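
A regularity check follows directly from the definition. The sketch below assumes a simple undirected graph on vertices `0..n-1` given as a list of edge pairs. The helper names `degrees` and `regularity` are illustrative.

```python
from collections import Counter
from itertools import combinations


def degrees(n, edges):
    """Degree of every vertex, including isolated ones."""
    deg = Counter({v: 0 for v in range(n)})
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg


def regularity(n, edges):
    """Return k if the graph is k-regular, otherwise None."""
    values = set(degrees(n, edges).values())
    return values.pop() if len(values) == 1 else None


cycle5 = [(i, (i + 1) % 5) for i in range(5)]  # 2-regular
k4 = list(combinations(range(4), 2))           # 3-regular, i.e. cubic
path3 = [(0, 1), (1, 2)]                       # degrees 1, 2, 1: not regular

print(regularity(5, cycle5), regularity(4, k4), regularity(3, path3))
```

The degrees always sum to twice the number of edges, which is the handshaking lemma mentioned above.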

Dénes Kőnig

Dénes Kőnig (September 21, 1884 – October 19, 1944) was a Hungarian mathematician of Jewish heritage who worked in graph theory and wrote the first textbook on the field. Biography. Kőnig was born in Budapest, the son of mathematician Gyula Kőnig. In 1907, he received his doctorate at, and joined the faculty of, the Royal Joseph University in Budapest (today Budapest University of Technology and Economics). His classes were visited by Paul Erdős, who, as a first-year student, solved one of his problems. Kőnig became a full professor there in 1935. To honor his father's death in 1913, Kőnig and his brother György created the Gyula Kőnig prize in 1918. The prize was meant to be an endowment for young mathematicians; it was later devalued, but it remained a medal of high scientific recognition. In 1899, he published his first work while still attending high school in a journal Matematikai ...

Independent Set (Graph Theory)

In graph theory, an independent set, stable set, coclique or anticlique is a set of vertices in a graph, no two of which are adjacent. That is, it is a set $S$ of vertices such that for every two vertices in $S$, there is no edge connecting the two. Equivalently, each edge in the graph has at most one endpoint in $S$. A set is independent if and only if it is a clique in the graph's complement. The size of an independent set is the number of vertices it contains. Independent sets have also been called "internally stable sets", of which "stable set" is a shortening. A maximal independent set is an independent set that is not a proper subset of any other independent set. A maximum independent set is an independent set of largest possible size for a given graph $G$. This size is called the independence number of $G$ and is usually denoted by $\alpha(G)$. The optimization problem of finding such a set is called the maximum independent set problem. It is a strongly NP-hard problem. As suc ...
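
The definitions above translate into a few short checks. This is a brute-force sketch for small graphs only, consistent with the problem being NP-hard. It assumes vertices `0..n-1` and edges given as pairs. All function names are illustrative.

```python
from itertools import combinations


def is_independent(vertices, edges):
    """True if no edge has both endpoints in the given vertex set."""
    s = set(vertices)
    return all(not (u in s and v in s) for u, v in edges)


def complement(n, edges):
    """Edges of the complement graph on vertices 0..n-1."""
    present = {frozenset(e) for e in edges}
    return [(u, v) for u, v in combinations(range(n), 2)
            if frozenset((u, v)) not in present]


def is_clique(vertices, edges):
    """True if every pair of the given vertices is joined by an edge."""
    present = {frozenset(e) for e in edges}
    return all(frozenset(p) in present for p in combinations(vertices, 2))


def maximum_independent_set(n, edges):
    """Try candidate sets from largest to smallest (exponential time)."""
    for size in range(n, -1, -1):
        for cand in combinations(range(n), size):
            if is_independent(cand, edges):
                return set(cand)


cycle5 = [(i, (i + 1) % 5) for i in range(5)]
best = maximum_independent_set(5, cycle5)
print(len(best))                                # independence number of the 5-cycle
print(is_clique(best, complement(5, cycle5)))   # independent set = clique in complement
```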

NP-complete

In computational complexity theory, a problem is NP-complete when: (1) it is a problem for which the correctness of each solution can be verified quickly (namely, in polynomial time) and a brute-force search algorithm can find a solution by trying all possible solutions; and (2) the problem can be used to simulate every other problem for which we can verify quickly that a solution is correct. In this sense, NP-complete problems are the hardest of the problems to which solutions can be verified quickly. If we could find solutions of some NP-complete problem quickly, we could quickly find the solutions of every other problem to which a given solution can be easily verified. The name "NP-complete" is short for "nondeterministic polynomial-time complete". In this name, "nondeterministic" refers to nondeterministic Turing machines, a way of mathematically formalizing the idea of a brute-force search algorithm. Polynomial time refers to an amount of time that is considered "quick" for a det ...

Polynomial Time

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally express ...

Distributed Algorithm

A distributed algorithm is an algorithm designed to run on computer hardware constructed from interconnected processors. Distributed algorithms are used in different application areas of distributed computing, such as telecommunications, scientific computing, distributed information processing, and real-time process control. Standard problems solved by distributed algorithms include leader election, consensus, distributed search, spanning tree generation, mutual exclusion, and resource allocation. Distributed algorithms are a sub-type of parallel algorithm, typically executed concurrently, with separate parts of the algorithm being run simultaneously on independent processors, and having limited information about what the other parts of the algorithm are doing. One of the major challenges in developing and implementing distributed algorithms is successfully coordinating the behavior of the independent parts of the algorithm in the face of processor failures and unreliable communi ...

Approximation Algorithm

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems (in particular NP-hard problems) with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In the overwhelming majority of cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor, i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are ...
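
A multiplicative guarantee of this kind can be seen in a classic example that is not part of the excerpt above: the maximal-matching heuristic for minimum vertex cover. The sketch below greedily picks edges with no endpoint chosen so far and takes both endpoints. Any cover must contain at least one endpoint of each picked edge, so the result has at most twice the optimal size. The function name is illustrative.

```python
def vertex_cover_2approx(edges):
    """Greedy maximal matching; both endpoints of each picked edge join the cover."""
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover


path = [(0, 1), (1, 2), (2, 3)]
cover = vertex_cover_2approx(path)
print(sorted(cover))  # an optimal cover here is {1, 2}, of size 2
```

On this path the heuristic returns all four vertices, which meets the factor-2 bound exactly and shows that the bound can be tight.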

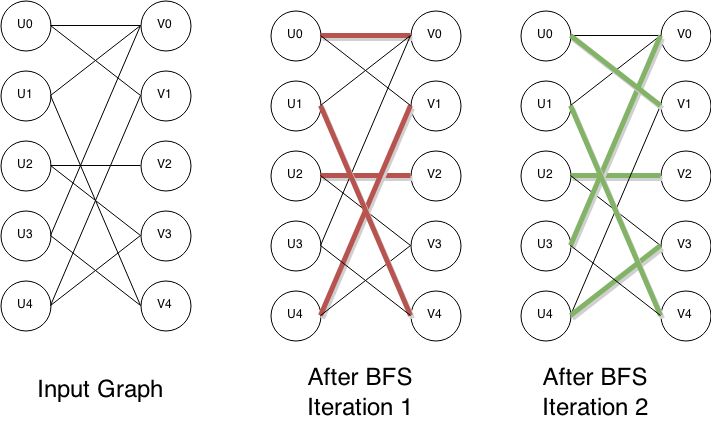

Hopcroft–Karp Algorithm

In computer science, the Hopcroft–Karp algorithm (sometimes more accurately called the Hopcroft–Karp–Karzanov algorithm) is an algorithm that takes a bipartite graph as input and produces a maximum-cardinality matching as output: a set of as many edges as possible with the property that no two edges share an endpoint. It runs in $O(|E|\sqrt{|V|})$ time in the worst case, where $E$ is the set of edges in the graph, $V$ is the set of vertices of the graph, and it is assumed that $|E| = \Omega(|V|)$. In the case of dense graphs the time bound becomes $O(|V|^{2.5})$, and for sparse random graphs it runs in time $O(|E| \log |V|)$ with high probability. The algorithm was discovered by John Hopcroft and Richard Karp and independently by Alexander Karzanov. As in previous methods for matching such as the Hungarian algorithm and the work of Edmonds (1965), the Hopcroft–Karp algorithm repeatedly increases the size of a partial matching by finding augmenting paths. These paths are sequences of edges of the graph, which alternate between edges in the matchi ...
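
The phase structure of the algorithm can be sketched compactly. The following is a common textbook-style variant, not necessarily the authors' original formulation. It assumes left vertices `0..n_left-1`, right vertices `0..n_right-1`, and `adj[u]` listing the right-side neighbors of left vertex `u`. Each phase uses a breadth-first search to layer the left vertices, then depth-first searches that follow the layers to augment the matching. The depth-first search is recursive, so very large inputs would need an iterative rewrite.

```python
from collections import deque

INF = float("inf")


def hopcroft_karp(adj, n_left, n_right):
    """Return (matching size, match_l), where match_l[u] is u's partner or -1."""
    match_l = [-1] * n_left
    match_r = [-1] * n_right
    dist = [0] * n_left

    def bfs():
        # Layer left vertices by alternating-path distance from free ones.
        queue = deque()
        for u in range(n_left):
            if match_l[u] == -1:
                dist[u] = 0
                queue.append(u)
            else:
                dist[u] = INF
        found = False
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                w = match_r[v]
                if w == -1:
                    found = True  # a free right vertex is reachable
                elif dist[w] == INF:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        return found

    def dfs(u):
        # Follow the BFS layers to a free right vertex, flipping edges on return.
        for v in adj[u]:
            w = match_r[v]
            if w == -1 or (dist[w] == dist[u] + 1 and dfs(w)):
                match_l[u] = v
                match_r[v] = u
                return True
        dist[u] = INF  # dead end: skip this vertex for the rest of the phase
        return False

    size = 0
    while bfs():
        for u in range(n_left):
            if match_l[u] == -1 and dfs(u):
                size += 1
    return size, match_l


size, match_l = hopcroft_karp([[0, 1], [0], [1, 2]], 3, 3)
print(size, match_l)
```

In this example the second phase re-routes the partner of left vertex 0 so that left vertex 1 can also be matched, which is the augmenting-path step the description refers to.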