|

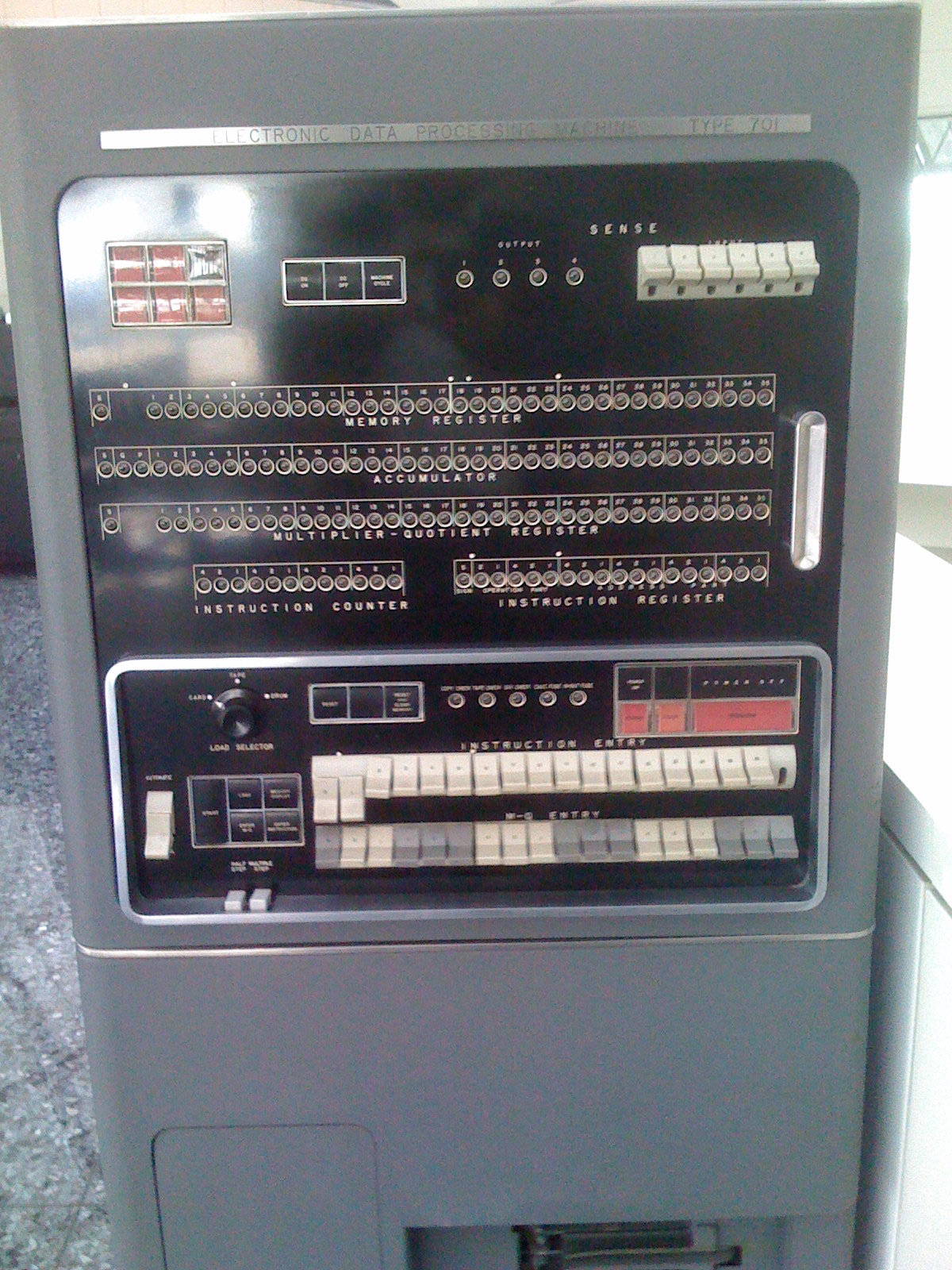

Instruction Address Register

The program counter (PC), commonly called the instruction pointer (IP) in Intel x86 and Itanium microprocessors, and sometimes called the instruction address register (IAR), the instruction counter, or just part of the instruction sequencer, is a processor register that indicates where a computer is in its program sequence. Usually, the PC is incremented after fetching an instruction, and holds the memory address of (" points to") the next instruction that would be executed. Processors usually fetch instructions sequentially from memory, but ''control transfer'' instructions change the sequence by placing a new value in the PC. These include branches (sometimes called jumps), subroutine calls, and returns. A transfer that is conditional on the truth of some assertion lets the computer follow a different sequence under different conditions. A branch provides that the next instruction is fetched from elsewhere in memory. A subroutine call not only branches but saves the precedin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Processor Register

A processor register is a quickly accessible location available to a computer's processor. Registers usually consist of a small amount of fast storage, although some registers have specific hardware functions, and may be read-only or write-only. In computer architecture, registers are typically addressed by mechanisms other than main memory, but may in some cases be assigned a memory address e.g. DEC PDP-10, ICT 1900. Almost all computers, whether load/store architecture or not, load data from a larger memory into registers where it is used for arithmetic operations and is manipulated or tested by machine instructions. Manipulated data is then often stored back to main memory, either by the same instruction or by a subsequent one. Modern processors use either static or dynamic RAM as main memory, with the latter usually accessed via one or more cache levels. Processor registers are normally at the top of the memory hierarchy, and provide the fastest way to access data. The ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Combinatory Logic

Combinatory logic is a notation to eliminate the need for quantified variables in mathematical logic. It was introduced by Moses Schönfinkel and Haskell Curry, and has more recently been used in computer science as a theoretical model of computation and also as a basis for the design of functional programming languages. It is based on combinators, which were introduced by Schönfinkel in 1920 with the idea of providing an analogous way to build up functions—and to remove any mention of variables—particularly in predicate logic. A combinator is a higher-order function that uses only function application and earlier defined combinators to define a result from its arguments. In mathematics Combinatory logic was originally intended as a 'pre-logic' that would clarify the role of quantified variables in logic, essentially by eliminating them. Another way of eliminating quantified variables is Quine's predicate functor logic. While the expressive power of combinatory logic ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Functional Programming

In computer science, functional programming is a programming paradigm where programs are constructed by Function application, applying and Function composition (computer science), composing Function (computer science), functions. It is a declarative programming paradigm in which function definitions are Tree (data structure), trees of Expression (computer science), expressions that map Value (computer science), values to other values, rather than a sequence of Imperative programming, imperative Statement (computer science), statements which update the State (computer science), running state of the program. In functional programming, functions are treated as first-class citizens, meaning that they can be bound to names (including local Identifier (computer languages), identifiers), passed as Parameter (computer programming), arguments, and Return value, returned from other functions, just as any other data type can. This allows programs to be written in a Declarative programming, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Dataflow

In computing, dataflow is a broad concept, which has various meanings depending on the application and context. In the context of software architecture, data flow relates to stream processing or reactive programming. Software architecture Dataflow computing is a software paradigm based on the idea of representing computations as a directed graph, where nodes are computations and data flow along the edges. Dataflow can also be called stream processing or reactive programming. There have been multiple data-flow/stream processing languages of various forms (see Stream processing). Data-flow hardware (see Dataflow architecture) is an alternative to the classic von Neumann architecture. The most obvious example of data-flow programming is the subset known as reactive programming with spreadsheets. As a user enters new values, they are instantly transmitted to the next logical "actor" or formula for calculation. Distributed data flows have also been proposed as a programming abstrac ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Von Neumann Bottleneck

The von Neumann architecture — also known as the von Neumann model or Princeton architecture — is a computer architecture based on a 1945 description by John von Neumann, and by others, in the ''First Draft of a Report on the EDVAC''. The document describes a design architecture for an electronic digital computer with these components: * A processing unit with both an arithmetic logic unit and processor registers * A control unit that includes an instruction register and a program counter * Memory that stores data and instructions * External mass storage * Input and output mechanisms.. The term "von Neumann architecture" has evolved to refer to any stored-program computer in which an instruction fetch and a data operation cannot occur at the same time (since they share a common bus). This is referred to as the von Neumann bottleneck, which often limits the performance of the corresponding system. The design of a von Neumann architecture machine is simpler than in a Harva ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Control Flow

In computer science, control flow (or flow of control) is the order in which individual statements, instructions or function calls of an imperative program are executed or evaluated. The emphasis on explicit control flow distinguishes an ''imperative programming'' language from a '' declarative programming'' language. Within an imperative programming language, a ''control flow statement'' is a statement that results in a choice being made as to which of two or more paths to follow. For non-strict functional languages, functions and language constructs exist to achieve the same result, but they are usually not termed control flow statements. A set of statements is in turn generally structured as a block, which in addition to grouping, also defines a lexical scope. Interrupts and signals are low-level mechanisms that can alter the flow of control in a way similar to a subroutine, but usually occur as a response to some external stimulus or event (that can occur asynchronously), ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Von Neumann Architecture

The von Neumann architecture — also known as the von Neumann model or Princeton architecture — is a computer architecture based on a 1945 description by John von Neumann, and by others, in the ''First Draft of a Report on the EDVAC''. The document describes a design architecture for an electronic digital computer with these components: * A processing unit with both an arithmetic logic unit and processor registers * A control unit that includes an instruction register and a program counter * Memory that stores data and instructions * External mass storage * Input and output mechanisms.. The term "von Neumann architecture" has evolved to refer to any stored-program computer in which an instruction fetch and a data operation cannot occur at the same time (since they share a common bus). This is referred to as the von Neumann bottleneck, which often limits the performance of the corresponding system. The design of a von Neumann architecture machine is simpler than in a Harva ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Page (computer Memory)

A page, memory page, or virtual page is a fixed-length contiguous block of virtual memory, described by a single entry in the page table. It is the smallest unit of data for memory management in a virtual memory operating system. Similarly, a page frame is the smallest fixed-length contiguous block of physical memory into which memory pages are mapped by the operating system. A transfer of pages between main memory and an auxiliary store, such as a hard disk drive, is referred to as paging or swapping. Page size trade-off Page size is usually determined by the processor architecture. Traditionally, pages in a system had uniform size, such as 4,096 bytes. However, processor designs often allow two or more, sometimes simultaneous, page sizes due to its benefits. There are several points that can factor into choosing the best page size. Page table size A system with a smaller page size uses more pages, requiring a page table that occupies more space. For example, if a 232 vi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Segmentation (memory)

Memory segmentation is an operating system memory management technique of division of a computer's primary memory into segments or sections. In a computer system using segmentation, a reference to a memory location includes a value that identifies a segment and an offset (memory location) within that segment. Segments or sections are also used in object files of compiled programs when they are linked together into a program image and when the image is loaded into memory. Segments usually correspond to natural divisions of a program such as individual routines or data tables so segmentation is generally more visible to the programmer than paging alone. Segments may be created for program modules, or for classes of memory usage such as code and data segments. Certain segments may be shared between programs. Segmentation was originally invented as a method by which system software could isolate software processes ( tasks) and data they are using. It was intended to increase reli ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

AVR Controller

AVR is a family of microcontrollers developed since 1996 by Atmel, acquired by Microchip Technology in 2016. These are modified Harvard architecture 8-bit RISC single-chip microcontrollers. AVR was one of the first microcontroller families to use on-chip flash memory for program storage, as opposed to one-time programmable ROM, EPROM, or EEPROM used by other microcontrollers at the time. AVR microcontrollers find many applications as embedded systems. They are especially common in hobbyist and educational embedded applications, popularized by their inclusion in many of the Arduino line of open hardware development boards. History The AVR architecture was conceived by two students at the Norwegian Institute of Technology (NTH), Alf-Egil Bogen and Vegard Wollan.Archived aGhostarchiveand thWayback Machine Atmel says that the name AVR is not an acronym and does not stand for anything in particular. The creators of the AVR give no definitive answer as to what the term "AVR" sta ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Stored-program Computer

A stored-program computer is a computer that stores program instructions in electronically or optically accessible memory. This contrasts with systems that stored the program instructions with plugboards or similar mechanisms. The definition is often extended with the requirement that the treatment of programs and data in memory be interchangeable or uniform. Description In principle, stored-program computers have been designed with various architectural characteristics. A computer with a von Neumann architecture stores program data and instruction data in the same memory, while a computer with a Harvard architecture has separate memories for storing program and data. However, the term ''stored-program computer'' is sometimes used as a synonym for the von Neumann architecture. Jack Copeland considers that it is "historically inappropriate, to refer to electronic stored-program digital computers as 'von Neumann machines'". Hennessy and Patterson wrote that the early Harvard m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |