Hopcroft–Karp Algorithm

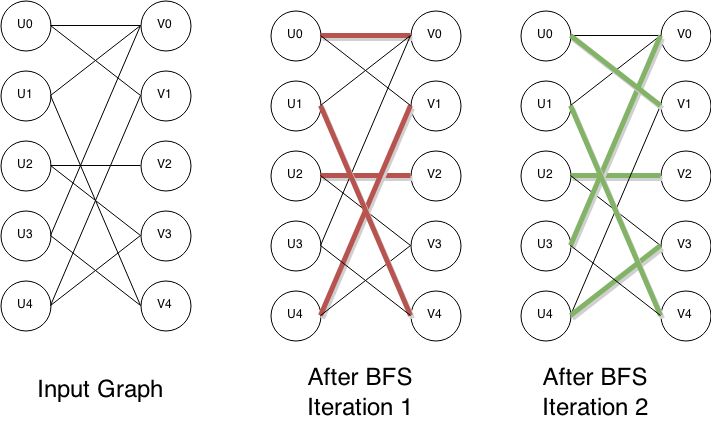

In computer science, the Hopcroft–Karp algorithm (sometimes more accurately called the Hopcroft–Karp–Karzanov algorithm) is an algorithm that takes a bipartite graph as input and produces a maximum-cardinality matching as output: a set of as many edges as possible with the property that no two edges share an endpoint. It runs in $O(|E|\sqrt{|V|})$ time in the worst case, where $E$ is the set of edges in the graph, $V$ is the set of vertices of the graph, and it is assumed that $|E| = \Omega(|V|)$. In the case of dense graphs the time bound becomes $O(|V|^{2.5})$, and for sparse random graphs it runs in time $O(|E|\log|V|)$ with high probability. The algorithm was discovered by John Hopcroft and Richard Karp and independently by Alexander Karzanov. As in previous methods for matching, such as the Hungarian algorithm and other earlier work, the Hopcroft–Karp algorithm repeatedly increases the size of a partial matching by finding ''augmenting paths''. These paths are sequences of edges of the graph, which alternate between edges in the matchi ...
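
The idea of growing a partial matching through augmenting paths can be sketched in Python. This is a compact illustrative variant, not a reference implementation: the function name `hopcroft_karp`, the adjacency-list input `adj` (left vertex to list of right vertices), and the integer vertex labels are all assumptions made for this example. Each phase runs one breadth-first search to layer the left vertices, then depth-first searches that augment along the layers. The recursion makes it suitable only for small graphs.

```python
from collections import deque


def hopcroft_karp(adj, n_left, n_right):
    """Return (matching size, match_left) for a bipartite graph.

    adj[u] lists the right vertices adjacent to left vertex u
    (hypothetical input format chosen for this sketch).
    """
    INF = float("inf")
    match_left = [-1] * n_left    # right partner of each left vertex, or -1
    match_right = [-1] * n_right  # left partner of each right vertex, or -1
    dist = [0] * n_left           # BFS layer of each left vertex

    def bfs():
        # Layer left vertices by alternating-path distance from free ones.
        queue = deque()
        for u in range(n_left):
            if match_left[u] == -1:
                dist[u] = 0
                queue.append(u)
            else:
                dist[u] = INF
        found = False
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                w = match_right[v]
                if w == -1:
                    found = True  # some free right vertex is reachable
                elif dist[w] == INF:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        return found

    def dfs(u):
        # Only follow matched edges that lead one BFS layer deeper.
        for v in adj[u]:
            w = match_right[v]
            if w == -1 or (dist[w] == dist[u] + 1 and dfs(w)):
                match_left[u] = v
                match_right[v] = u
                return True
        dist[u] = INF  # dead end: skip u for the rest of this phase
        return False

    size = 0
    while bfs():  # one phase per successful BFS
        for u in range(n_left):
            if match_left[u] == -1 and dfs(u):
                size += 1
    return size, match_left
```

For instance, `hopcroft_karp([[0, 1], [0], [1, 2]], 3, 3)` matches all three left vertices.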

Graph (data Structure)

In computer science, a graph is an abstract data type that is meant to implement the undirected graph and directed graph concepts from the field of graph theory within mathematics. A graph data structure consists of a finite (and possibly mutable) set of ''vertices'' (also called ''nodes'' or ''points''), together with a set of unordered pairs of these vertices for an undirected graph or a set of ordered pairs for a directed graph. These pairs are known as ''edges'' (also called ''links'' or ''lines''); for a directed graph they are sometimes also called ''arrows'' or ''arcs''. The vertices may be part of the graph structure, or may be external entities represented by integer indices or references. A graph data structure may also associate to each edge some ''edge value'', such as a symbolic label or a numeric attribute (cost, capacity, length, etc.).

Operations. The basic operations provided by a graph data structure ''G'' usually include: ...
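
A minimal sketch of such a data structure, assuming an adjacency-set representation. The class name `Graph` and the method names (`add_vertex`, `add_edge`, `remove_edge`, `adjacent`, `neighbors`, `edge_value`) are illustrative choices for this example, since the source's list of operations is cut off.

```python
class Graph:
    """Directed or undirected graph stored as adjacency sets (illustrative)."""

    def __init__(self, directed=False):
        self.directed = directed
        self._adj = {}     # vertex -> set of adjacent vertices
        self._values = {}  # (x, y) -> optional edge value

    def add_vertex(self, x):
        # Adding an existing vertex is a no-op.
        self._adj.setdefault(x, set())

    def add_edge(self, x, y, value=None):
        self.add_vertex(x)
        self.add_vertex(y)
        self._adj[x].add(y)
        self._values[(x, y)] = value
        if not self.directed:
            # An unordered pair is stored in both directions.
            self._adj[y].add(x)
            self._values[(y, x)] = value

    def remove_edge(self, x, y):
        self._adj.get(x, set()).discard(y)
        self._values.pop((x, y), None)
        if not self.directed:
            self._adj.get(y, set()).discard(x)
            self._values.pop((y, x), None)

    def adjacent(self, x, y):
        """Test whether there is an edge from x to y."""
        return y in self._adj.get(x, set())

    def neighbors(self, x):
        """Return a copy of the set of vertices reachable by one edge from x."""
        return set(self._adj.get(x, set()))

    def edge_value(self, x, y):
        return self._values.get((x, y))
```

With `g = Graph()` and `g.add_edge("a", "b", value=3)`, both `g.adjacent("a", "b")` and `g.adjacent("b", "a")` hold, whereas a `Graph(directed=True)` records only the ordered pair.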

Pseudocode

In computer science, pseudocode is a description of the steps in an algorithm using a mix of conventions of programming languages (like assignment operator, conditional operator, loop) with informal, usually self-explanatory, notation of actions and conditions. Although pseudocode shares features with regular programming languages, it is intended for human reading rather than machine control. Pseudocode typically omits details that are essential for machine implementation of the algorithm, meaning that pseudocode can only be verified by hand. The programming language is augmented with natural language description details, where convenient, or with compact mathematical notation. The reasons for using pseudocode are that it is easier for people to understand than conventional programming language code and that it is an efficient and environment-independent description of the key principles of an algorithm. It is commonly used in textbooks and scientific publications to document ...

Algorithmica

''Algorithmica'' is a monthly peer-reviewed scientific journal focusing on research and the application of computer science algorithms. The journal was established in 1986 and is published by Springer Science+Business Media. The editor in chief is Mohammad Hajiaghayi. Subject coverage includes sorting, searching, data structures, computational geometry, linear programming, VLSI, distributed computing, parallel processing, computer-aided design, robotics, graphics, database design, and software tools.

Edmonds–Karp Algorithm

In computer science, the Edmonds–Karp algorithm is an implementation of the Ford–Fulkerson method for computing the maximum flow in a flow network in $O(|V||E|^2)$ time. The algorithm was first published by Yefim Dinitz in 1970, and independently published by Jack Edmonds and Richard Karp in 1972. Dinitz's algorithm includes additional techniques that reduce the running time to $O(|V|^2|E|)$.

Algorithm. The algorithm is identical to the Ford–Fulkerson algorithm, except that the search order when finding the augmenting path is defined. The path found must be a shortest path that has available capacity. This can be found by a breadth-first search, where we apply a weight of 1 to each edge. The running time of $O(|V||E|^2)$ is found by showing that each augmenting path can be found in $O(|E|)$ time, that every time at least one of the edges becomes saturated (an edge which has the maximum possible flow), that the distance from the saturated edge to the source along t ...
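
The shortest-augmenting-path rule can be sketched as follows. This is an illustrative version under stated assumptions: the function name `edmonds_karp` is chosen for this example, the network is given as a square capacity matrix, and `source` differs from `sink`. Scanning a matrix row makes each breadth-first search cost $O(|V|^2)$ rather than $O(|E|)$, so an adjacency-list version would be needed to match the bound quoted above.

```python
from collections import deque


def edmonds_karp(capacity, source, sink):
    """Maximum flow from source to sink; capacity[u][v] is the edge capacity.

    Assumes source != sink and a square matrix (illustrative input format).
    """
    n = len(capacity)
    residual = [row[:] for row in capacity]  # residual capacities
    max_flow = 0
    while True:
        # Breadth-first search for a shortest path with available capacity.
        parent = [-1] * n
        parent[source] = source
        queue = deque([source])
        while queue and parent[sink] == -1:
            u = queue.popleft()
            for v in range(n):
                if parent[v] == -1 and residual[u][v] > 0:
                    parent[v] = u
                    queue.append(v)
        if parent[sink] == -1:
            return max_flow  # no augmenting path remains

        # Find the bottleneck capacity along the path.
        bottleneck = float("inf")
        v = sink
        while v != source:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]

        # Push flow: reduce forward edges, increase reverse edges.
        v = sink
        while v != source:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        max_flow += bottleneck
```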

Weighted Graphs

A weight function is a mathematical device used when performing a sum, integral, or average to give some elements more "weight" or influence on the result than other elements in the same set. The result of this application of a weight function is a weighted sum or weighted average. Weight functions occur frequently in statistics and analysis, and are closely related to the concept of a measure. Weight functions can be employed in both discrete and continuous settings. They can be used to construct systems of calculus called "weighted calculus" and "meta-calculus" (Jane Grossman, ''Meta-Calculus: Differential and Integral'', 1981).

Discrete weights: general definition. In the discrete setting, a weight function $w \colon A \to \mathbb{R}^+$ is a positive function defined on a discrete set $A$, which is typically finite or countable. The weight function $w(a) := 1$ corresponds to the ''unweighted'' situation in which all elements have equal weight. One can then apply this weight to various conce ...
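
As a small illustration of a discrete weight function, the sketch below computes a weighted sum and a weighted average over a finite list. The function names `weighted_sum` and `weighted_average` are hypothetical, and the weights are assumed to be positive, as in the definition above.

```python
def weighted_sum(values, weights):
    """Sum of w(a) * f(a) over paired values and weights."""
    return sum(w * x for x, w in zip(values, weights))


def weighted_average(values, weights):
    """Weighted sum divided by the total weight."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("total weight must be positive")
    return weighted_sum(values, weights) / total
```

Setting every weight to 1 recovers the ordinary (unweighted) average, for example `weighted_average([1, 2, 3], [1, 1, 1])` gives `2.0`, while `weighted_average([10, 20], [3, 1])` gives `12.5` because the first element counts three times as much.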

Assignment Problem

The assignment problem is a fundamental combinatorial optimization problem. In its most general form, the problem is as follows. The problem instance has a number of ''agents'' and a number of ''tasks''. Any agent can be assigned to perform any task, incurring some ''cost'' that may vary depending on the agent-task assignment. It is required to perform as many tasks as possible by assigning at most one agent to each task and at most one task to each agent, in such a way that the ''total cost'' of the assignment is minimized. Alternatively, describing the problem using graph theory: the assignment problem consists of finding, in a weighted bipartite graph, a matching of maximum size in which the sum of weights of the edges is minimum. If the numbers of agents and tasks are equal, then the problem is called balanced assignment, and the graph-theoretic version is called minimum-cost perfect matching. Otherwise, it is called unbalanced assig ...
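
For a tiny balanced instance, the problem statement can be checked directly by brute force. The sketch below tries every one-to-one assignment of agents to tasks, so it takes factorial time and is meant only to make the definition concrete. It is not the Hungarian algorithm, and the function name `min_cost_assignment` and the square cost-matrix input are assumptions of this example.

```python
from itertools import permutations


def min_cost_assignment(cost):
    """Return (total cost, tasks) where tasks[a] is the task given to agent a.

    cost[a][t] is the cost of agent a performing task t (square matrix).
    """
    n = len(cost)
    best_cost, best_perm = None, None
    for perm in permutations(range(n)):  # every one-to-one assignment
        total = sum(cost[a][perm[a]] for a in range(n))
        if best_cost is None or total < best_cost:
            best_cost, best_perm = total, perm
    return best_cost, list(best_perm)
```

With `cost = [[4, 1, 3], [2, 0, 5], [3, 2, 2]]`, the cheapest assignment gives agent 0 task 1, agent 1 task 0, and agent 2 task 2, for a total cost of 5.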

Maximum Cardinality Matching

Maximum cardinality matching is a fundamental problem in graph theory. We are given a graph, and the goal is to find a matching containing as many edges as possible; that is, a maximum cardinality subset of the edges such that each vertex is incident to at most one edge of the subset. As each edge will cover exactly two vertices, this problem is equivalent to the task of finding a matching that covers as many vertices as possible. An important special case of the maximum cardinality matching problem is when the graph is bipartite: its vertices are partitioned into left vertices and right vertices, and every edge connects a left vertex to a right vertex. In this case, the problem can be efficiently solved with simpler algorithms than in the general case.

Algorithms for bipartite graphs: flow-based algorithm. The simplest way to compute a maximum cardinality matching is to follow the Ford–Fulkerson algorithm. This algorithm solves the more general probl ...
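
In the bipartite case, a flow-style approach with unit capacities reduces to repeatedly searching for one augmenting path per left vertex. The sketch below is one simple way to write that search, assuming left and right vertices are numbered from 0 and `adj[u]` lists the right neighbours of left vertex `u`. The function name `max_bipartite_matching` is hypothetical.

```python
def max_bipartite_matching(adj, n_right):
    """Return (matching size, match_right) using one DFS per left vertex."""
    match_right = [-1] * n_right  # left partner of each right vertex, or -1

    def try_augment(u, visited):
        # Try to match u, re-routing already matched left vertices if needed.
        for v in adj[u]:
            if v in visited:
                continue
            visited.add(v)
            if match_right[v] == -1 or try_augment(match_right[v], visited):
                match_right[v] = u
                return True
        return False

    size = 0
    for u in range(len(adj)):
        if try_augment(u, set()):
            size += 1
    return size, match_right
```

Each successful search increases the matching by one edge, so the loop performs at most one search per left vertex.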

Logarithm

In mathematics, the logarithm of a number is the exponent by which another fixed value, the base, must be raised to produce that number. For example, the logarithm of 1000 to base 10 is 3, because 1000 is 10 to the 3rd power: $1000 = 10^3$. More generally, if $x = b^y$, then $y$ is the logarithm of $x$ to base $b$, written $\log_b x$, so $\log_{10} 1000 = 3$. As a single-variable function, the logarithm to base $b$ is the inverse of exponentiation with base $b$. The logarithm base 10 is called the ''decimal'' or ''common'' logarithm and is commonly used in science and engineering. The ''natural'' logarithm has the number $e$ as its base; its use is widespread in mathematics and physics because of its very simple derivative. The ''binary'' logarithm uses base 2 and is widely used in computer science, information theory, music theory, and photography. When the base is unambiguous from the context or irrelevant it is often omitted, and the logarithm is written $\log x$. Logarithms were introduced by John Napier in 1614 as a means of simplifying calculation ...
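
The inverse relationship between logarithms and exponentiation can be checked numerically with Python's standard `math` module. The helper `log_base` is a hypothetical name introduced for this sketch. It uses the change-of-base identity, so results are floating-point approximations and are compared with a tolerance rather than for exact equality.

```python
import math


def log_base(x, b):
    """Logarithm of x to base b via the change-of-base identity."""
    return math.log(x) / math.log(b)


# Common, binary, and natural logarithms from the standard library.
common = math.log10(1000)   # exponent of 10 that produces 1000
binary = math.log2(8)       # exponent of 2 that produces 8
natural = math.log(math.e)  # exponent of e that produces e

# Exponentiation undoes the logarithm, up to rounding error.
round_trip = 10 ** log_base(1000, 10)
assert math.isclose(round_trip, 1000)
```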

Sparse Graph

In mathematics, a dense graph is a graph in which the number of edges is close to the maximal number of edges (where every pair of vertices is connected by one edge). The opposite, a graph with only a few edges, is a sparse graph. The distinction of what constitutes a dense or sparse graph is ill-defined, and is often represented by 'roughly equal to' statements. Due to this, the way that density is defined often depends on the context of the problem. The graph density of simple graphs is defined to be the ratio of the number of edges with respect to the maximum possible edges. For undirected simple graphs, the graph density is:

$$D = \frac{|E|}{\binom{|V|}{2}} = \frac{2|E|}{|V|(|V|-1)}$$

For directed simple graphs, the maximum possible edges is twice that of undirected graphs (as there are two directions to an edge) so the density is:

$$D = \frac{|E|}{2\binom{|V|}{2}} = \frac{|E|}{|V|(|V|-1)}$$

where $|E|$ is the number of edges and $|V|$ is the number of vertices in the graph. The maximum number of e ...
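
The density ratio described above is straightforward to compute. In this sketch the function name `graph_density` is hypothetical, and returning 0.0 for graphs with fewer than two vertices is a convention chosen here to avoid dividing by zero, not something the source specifies.

```python
def graph_density(num_vertices, num_edges, directed=False):
    """Ratio of the number of edges to the maximum possible in a simple graph."""
    if num_vertices < 2:
        return 0.0  # assumed convention: no edges are possible
    max_edges = num_vertices * (num_vertices - 1)  # ordered pairs
    if not directed:
        max_edges //= 2  # unordered pairs
    return num_edges / max_edges
```

A complete undirected graph on 4 vertices has 6 edges and density 1.0, while the same 6 edges in a directed graph on 4 vertices give density 0.5.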

Average Case Analysis

In computer science, best, worst, and average cases of a given algorithm express what the resource usage is ''at least'', ''at most'' and ''on average'', respectively. Usually the resource being considered is running time, i.e. time complexity, but could also be memory or some other resource. Best case is the function which performs the minimum number of steps on input data of n elements. Worst case is the function which performs the maximum number of steps on input data of size n. Average case is the function which performs an average number of steps on input data of n elements. In real-time computing, the worst-case execution time is often of particular concern since it is important to know how much time might be needed ''in the worst case'' to guarantee that the algorithm will always finish on time. Average performance and worst-case performance are the most used in algorithm analysis. Less widely found is best-case performance, but it does have uses: for example, where the ...
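
Linear search is a convenient way to see the three cases side by side. The sketch below counts comparisons, with the function name `linear_search_steps` and the choice of averaging over targets that are present (each equally likely) being assumptions of this example.

```python
def linear_search_steps(items, target):
    """Number of comparisons a left-to-right search makes before stopping."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


data = list(range(1, 11))  # n = 10 elements

best = linear_search_steps(data, data[0])    # target is first: 1 step
worst = linear_search_steps(data, data[-1])  # target is last: n steps
average = sum(linear_search_steps(data, t) for t in data) / len(data)
```

Here the best case takes 1 comparison, the worst case takes 10, and the average over all present targets is 5.5, that is, (n + 1) / 2.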