Hamming Code (7,4)

In coding theory, Hamming(7,4) is a linear error-correcting code that encodes four bits of data into seven bits by adding three parity bits. It is a member of a larger family of Hamming codes, but the term "Hamming code" often refers to this specific code, which Richard W. Hamming introduced in 1950. At the time, Hamming worked at Bell Telephone Laboratories and was frustrated with the error-prone punched card reader, which is why he started working on error-correcting codes. The Hamming code adds three additional check bits to every four data bits of the message. Hamming's (7,4) algorithm can correct any single-bit error, or detect all single-bit and two-bit errors. In other words, the minimal Hamming distance between any two correct codewords is 3, and received words can be correctly decoded if they are at a distance of at most one from the codeword that was transmitted by the sender. This means that for transmission medium situations where burst errors do not occur, Ham ...
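The single-error correction described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the bit layout `p1 p2 d1 p3 d2 d3 d4` (parity bits at positions 1, 2 and 4) is a common convention assumed here, and the function names `hamming74_encode` and `hamming74_decode` are hypothetical.

```python
def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] into 7 bits.

    Assumed layout (positions 1..7): p1 p2 d1 p3 d2 d3 d4.
    """
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(word):
    """Correct at most one flipped bit and return the 4 data bits."""
    w = list(word)
    s1 = w[0] ^ w[2] ^ w[4] ^ w[6]
    s2 = w[1] ^ w[2] ^ w[5] ^ w[6]
    s3 = w[3] ^ w[4] ^ w[5] ^ w[6]
    # The syndrome, read as a binary number, is the 1-based position
    # of the flipped bit (0 means no error was detected).
    position = s1 + 2 * s2 + 4 * s3
    if position:
        w[position - 1] ^= 1
    return [w[2], w[4], w[5], w[6]]


codeword = hamming74_encode([1, 0, 1, 1])  # [0, 1, 1, 0, 0, 1, 1]
corrupted = list(codeword)
corrupted[4] ^= 1                          # flip the bit at position 5
assert hamming74_decode(corrupted) == [1, 0, 1, 1]
```

Because the minimum distance is 3, a word with two flipped bits has a nonzero syndrome but would be "corrected" to the wrong codeword by this decoder; detecting two-bit errors means reporting the nonzero syndrome instead of correcting.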

Linear Algebra

Linear algebra is the branch of mathematics concerning linear equations such as $a_1x_1+\cdots+a_nx_n=b$, linear maps such as $(x_1, \ldots, x_n) \mapsto a_1x_1+\cdots+a_nx_n$, and their representations in vector spaces and through matrices. Linear algebra is central to almost all areas of mathematics. For instance, linear algebra is fundamental in modern presentations of geometry, including for defining basic objects such as lines, planes and rotations. Also, functional analysis, a branch of mathematical analysis, may be viewed as the application of linear algebra to spaces of functions. Linear algebra is also used in most sciences and fields of engineering, because it allows modeling many natural phenomena, and computing efficiently with such models. For nonlinear systems, which cannot be modeled with linear algebra, it is often used for dealing with first-order approximations, using the fact that the differential of a multivariate function at a point is the line ...

Binary Symmetric Channel

A binary symmetric channel (or $\text{BSC}_p$) is a common communications channel model used in coding theory and information theory. In this model, a transmitter wishes to send a bit (a zero or a one), and the receiver will receive a bit. The bit will be "flipped" with a "crossover probability" of $p$, and otherwise is received correctly. This model can be applied to varied communication channels such as telephone lines or disk drive storage. The noisy-channel coding theorem applies to $\text{BSC}_p$, saying that information can be transmitted at any rate up to the channel capacity with arbitrarily low error. The channel capacity is $1 - \operatorname{H}_\text{b}(p)$ bits, where $\operatorname{H}_\text{b}$ is the binary entropy function. Codes including Forney's code have been designed to transmit information efficiently across the channel.

Definition: A binary symmetric channel with crossover probability $p$, denoted by $\text{BSC}_p$, is a channel with binary input and binary output and probability of error $p$. That ...
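The capacity formula and the bit-flipping model above can be illustrated with a short sketch. The function names `binary_entropy`, `bsc_capacity` and `bsc_transmit` are hypothetical, and the simulation uses Python's standard pseudo-random generator purely for illustration.

```python
import math
import random


def binary_entropy(p):
    """Binary entropy H_b(p) in bits; taken as 0 at p = 0 and p = 1."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p):
    """Capacity of a BSC with crossover probability p: 1 - H_b(p) bits."""
    return 1.0 - binary_entropy(p)


def bsc_transmit(bits, p, rng):
    """Flip each bit independently with probability p."""
    return [b ^ (rng.random() < p) for b in bits]


rng = random.Random(0)  # fixed seed so the run is repeatable
received = bsc_transmit([1, 0, 1, 1, 0, 0, 1], 0.1, rng)
print(bsc_capacity(0.5))  # 0.0: a channel that flips half the bits carries nothing
```

Passing the generator in as an argument keeps the simulation deterministic when a seeded `random.Random` is supplied.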

Channel (communications)

A communication channel refers either to a physical transmission medium such as a wire, or to a logical connection over a multiplexed medium such as a radio channel in telecommunications and computer networking. A channel is used for information transfer of, for example, a digital bit stream, from one or several senders to one or several receivers. A channel has a certain capacity for transmitting information, often measured by its bandwidth in Hz or its data rate in bits per second. Communicating an information signal across distance requires some form of pathway or medium. These pathways, called communication channels, use two types of media: transmission line (e.g. twisted-pair, coaxial, and fiber-optic cable) and broadcast (e.g. microwave, satellite, radio, and infrared). In information theory, a channel refers to a theoretical channel model with certain error characteristics. In this more general view, a storage device is also a communication channel, ...

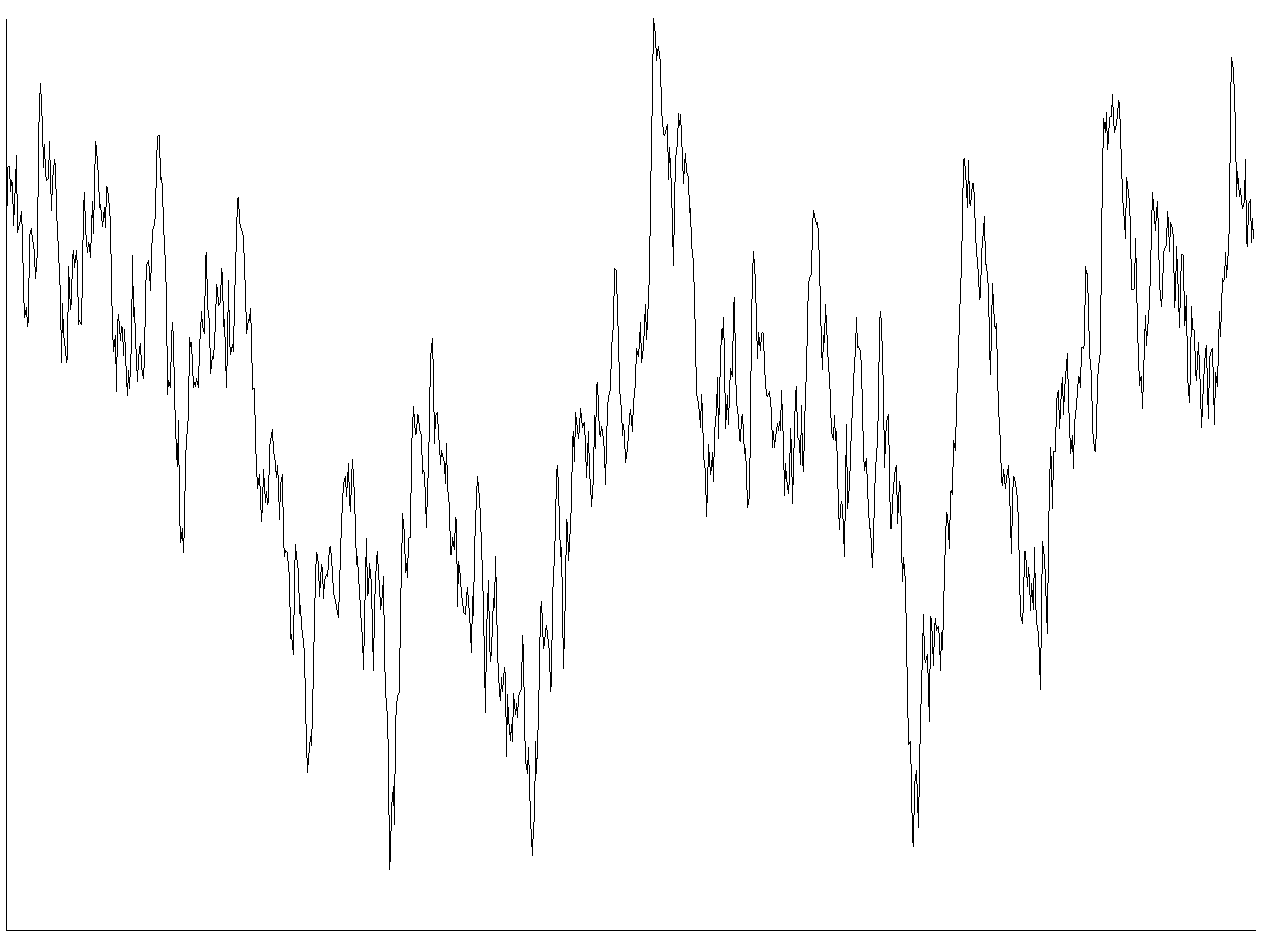

Signal Noise

In electronics, noise is an unwanted disturbance in an electrical signal. Noise generated by electronic devices varies greatly as it is produced by several different effects. In particular, noise is inherent in physics, and central to thermodynamics. Any conductor with electrical resistance will generate thermal noise inherently. The final elimination of thermal noise in electronics can only be achieved cryogenically, and even then quantum noise would remain inherent. Electronic noise is a common component of noise in signal processing. In communication systems, noise is an error or undesired random disturbance of a useful information signal in a communication channel. The noise is a summation of unwanted or disturbing energy from natural and sometimes man-made sources. Noise is, however, typically distinguished from interference, for example in the signal-to-noise ratio (SNR), signal-to-interference ratio (SIR) and signal-to-noise plus interference ratio (SNIR) m ...

Hamming(7,4) Example 1011

Hamming may refer to:
* Richard Hamming (1915–1998), American mathematician
* Hamming(7,4), in coding theory, a linear error-correcting code
* Overacting, or acting in an exaggerated way

See also
* Hamming code, error correction in telecommunication
* Hamming distance, a way of defining how different two sequences are
* Hamming weight, the number of non-zero elements in a sequence
* Hamming window, a mathematical function used in signal processing
* Hammond (disambiguation)
* Ham (disambiguation): Ham is a cut of meat from an edible mammal's rear, usually from a pig. Ham or HAM may also refer to places, including Ham, Belgium, a municipality; Le Ham, Manche, a commune in France; Le Ham, Mayenne, a commune in France; and Ham (Cergy), a village near C ...

Venn Diagram

A Venn diagram is a widely used diagram style that shows the logical relation between sets, popularized by John Venn (1834–1923) in the 1880s. The diagrams are used to teach elementary set theory, and to illustrate simple set relationships in probability, logic, statistics, linguistics and computer science. A Venn diagram uses simple closed curves drawn on a plane to represent sets. Very often, these curves are circles or ellipses. Similar ideas had been proposed before Venn: Christian Weise in 1712 ("Nucleus Logicoe Wiesianoe") and Leonhard Euler ("Letters to a German Princess") in 1768, for instance. The idea was popularised by Venn in "Symbolic Logic", Chapter V "Diagrammatic Representation", 1881.

Details: A Venn diagram may also be called a set diagram or logic diagram. It is a diagram that shows all possible logical relations between a finite collection of different sets. These diagrams depict elements as points in ...

Modulo Operation

In computing, the modulo operation returns the remainder or signed remainder of a division, after one number is divided by another (called the modulus of the operation). Given two positive numbers $a$ and $n$, $a$ modulo $n$ (often abbreviated as $a \bmod n$) is the remainder of the Euclidean division of $a$ by $n$, where $a$ is the dividend and $n$ is the divisor. For example, the expression "5 mod 2" would evaluate to 1, because 5 divided by 2 has a quotient of 2 and a remainder of 1, while "9 mod 3" would evaluate to 0, because 9 divided by 3 has a quotient of 3 and a remainder of 0; there is nothing to subtract from 9 after multiplying 3 times 3. Although typically performed with $a$ and $n$ both being integers, many computing systems now allow other types of numeric operands. The range of values for an integer modulo operation of $n$ is 0 to $n - 1$ inclusive ($a \bmod 1$ is always 0; $a \bmod 0$ is undefined, possibly resulting in a division by zero error in some programming languages). See Modular arithmetic for an older and re ...
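The examples above can be checked directly in Python, whose `%` operator returns a result with the sign of the divisor, while `math.fmod` returns a signed remainder with the sign of the dividend. The helper names `residues` and `safe_mod` are hypothetical and serve only this illustration.

```python
import math


def residues(n):
    """All values that a % n takes for non-negative integers a (n > 0)."""
    return sorted({a % n for a in range(3 * n)})


def safe_mod(a, n):
    """Return a % n, or None when n is 0 (the operation is undefined)."""
    try:
        return a % n
    except ZeroDivisionError:
        return None


print(5 % 2)              # 1
print(9 % 3)              # 0
print(residues(4))        # [0, 1, 2, 3], i.e. 0 to n - 1 inclusive
print(safe_mod(7, 0))     # None: Python raises ZeroDivisionError for a % 0
print(-7 % 3)             # 2 (result takes the sign of the divisor)
print(math.fmod(-7, 3))   # -1.0 (signed remainder, sign of the dividend)
```

Other languages make different sign choices for negative operands, so the last two lines describe Python's behaviour specifically.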

Null Vector (vector Space)

In mathematics, given a vector space $X$ with an associated quadratic form $q$, written $(X, q)$, a null vector or isotropic vector is a non-zero element $x$ of $X$ for which $q(x) = 0$. In the theory of real bilinear forms, definite quadratic forms and isotropic quadratic forms are distinct. They are distinguished in that only for the latter does there exist a nonzero null vector. A quadratic space which has a null vector is called a pseudo-Euclidean space. A pseudo-Euclidean vector space may be decomposed (non-uniquely) into orthogonal subspaces $A$ and $B$, $X = A + B$, where $q$ is positive-definite on $A$ and negative-definite on $B$. The null cone, or isotropic cone, of $X$ consists of the union of balanced spheres: \bigcup_ \. The null cone is also the union of the isotropic lines through the origin.

Examples: The light-like vectors of Minkowski space are null vectors. The four linearly independent biquaternions , , , and are null vectors and can serve as a basis for the sub ...

Identity Matrix

In linear algebra, the identity matrix of size $n$ is the $n\times n$ square matrix with ones on the main diagonal and zeros elsewhere.

Terminology and notation: The identity matrix is often denoted by $I_n$, or simply by $I$ if the size is immaterial or can be trivially determined by the context.

$$I_1 = \begin{bmatrix} 1 \end{bmatrix},\ I_2 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix},\ I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix},\ \dots,\ I_n = \begin{bmatrix} 1 & 0 & 0 & \cdots & 0 \\ 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \end{bmatrix}.$$

The term unit matrix has also been widely used, but the term identity matrix is now standard. The term unit matrix is ambiguous, because it is also used for a matrix of ones and for any unit of the ring of all $n\times n$ matrices. In some fields, such as group theory or quantum mechanics, the identity matrix is sometimes denoted by a boldface one, $\mathbf{1}$, or called "id" (short for identity ...
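As a small illustration of the definition, the sketch below builds $I_n$ as a list of rows and checks that multiplying by it leaves a matrix unchanged. The helpers `identity` and `matmul` are hypothetical names written in plain Python for this example.

```python
def identity(n):
    """n x n matrix with ones on the main diagonal and zeros elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


m = [[2, 3], [5, 7]]
print(identity(3))  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
assert matmul(identity(2), m) == m  # multiplying by I on the left changes nothing
assert matmul(m, identity(2)) == m  # and likewise on the right
```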

Linearly Independent

In the theory of vector spaces, a set of vectors is said to be linearly dependent if there is a nontrivial linear combination of the vectors that equals the zero vector. If no such linear combination exists, then the vectors are said to be linearly independent. These concepts are central to the definition of dimension. A vector space can be of finite dimension or infinite dimension depending on the maximum number of linearly independent vectors. The definition of linear dependence and the ability to determine whether a subset of vectors in a vector space is linearly dependent are central to determining the dimension of a vector space.

Definition: A sequence of vectors $\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_k$ from a vector space is said to be linearly dependent if there exist scalars $a_1, a_2, \dots, a_k$, not all zero, such that $a_1\mathbf{v}_1 + a_2\mathbf{v}_2 + \cdots + a_k\mathbf{v}_k = \mathbf{0}$, where $\mathbf{0}$ denotes the zero vector. This implies that at least one of the scalars is nonzero, say $a_1\ne 0$ ...
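One way to test the definition in practice is to compare the rank of the vectors with their count: the vectors are linearly independent exactly when the rank equals the number of vectors. The sketch below assumes vectors with rational entries and uses exact `Fraction` arithmetic with Gaussian elimination; the names `rank` and `is_linearly_independent` are hypothetical.

```python
from fractions import Fraction


def rank(vectors):
    """Rank of a list of equal-length vectors, by Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0  # number of pivot rows found so far
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def is_linearly_independent(vectors):
    """True when no nontrivial combination of the vectors gives zero."""
    return rank(vectors) == len(vectors)


print(is_linearly_independent([(1, 0), (0, 1)]))  # True
print(is_linearly_independent([(1, 2), (2, 4)]))  # False: 2*(1, 2) - (2, 4) = 0
```

Exact fractions avoid the rounding issues that a floating-point elimination would introduce near a zero pivot.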

Hamming(7,4) As Bits

Hamming may refer to:
* Richard Hamming (1915–1998), American mathematician
* Hamming(7,4), in coding theory, a linear error-correcting code
* Overacting, or acting in an exaggerated way

See also
* Hamming code, error correction in telecommunication
* Hamming distance, a way of defining how different two sequences are
* Hamming weight, the number of non-zero elements in a sequence
* Hamming window, a mathematical function used in signal processing
* Hammond (disambiguation): Hammond may refer to people, including Hammond Innes (1913–1998), English novelist; Hammond (surname); and Justice Hammond (disambiguation); and to places, including Hammond Glacier, Antarctica, and Hammond, South Australia, a small settlement in Sou ...
* Ham (disambiguation)