|

HTTP 2

HTTP/2 (originally named HTTP/2.0) is a major revision of the HTTP network protocol used by the World Wide Web. It was derived from the earlier experimental SPDY protocol, originally developed by Google. HTTP/2 was developed by the HTTP Working Group (also called httpbis, where "" means "twice") of the Internet Engineering Task Force (IETF). HTTP/2 is the first new version of HTTP since HTTP/1.1, which was standardized in in 1997. The Working Group presented HTTP/2 to the Internet Engineering Steering Group (IESG) for consideration as a Proposed Standard in December 2014, and IESG approved it to publish as Proposed Standard on February 17, 2015 (and was updated in February 2020 in regard to TLS 1.3 and again in June 2022). The initial HTTP/2 specification was published as on May 14, 2015. The standardization effort was supported by Chrome, Opera, Firefox, Internet Explorer 11, Safari, Amazon Silk, and Edge browsers. Most major browsers had added HTTP/2 support by the end of 20 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Internet Engineering Task Force

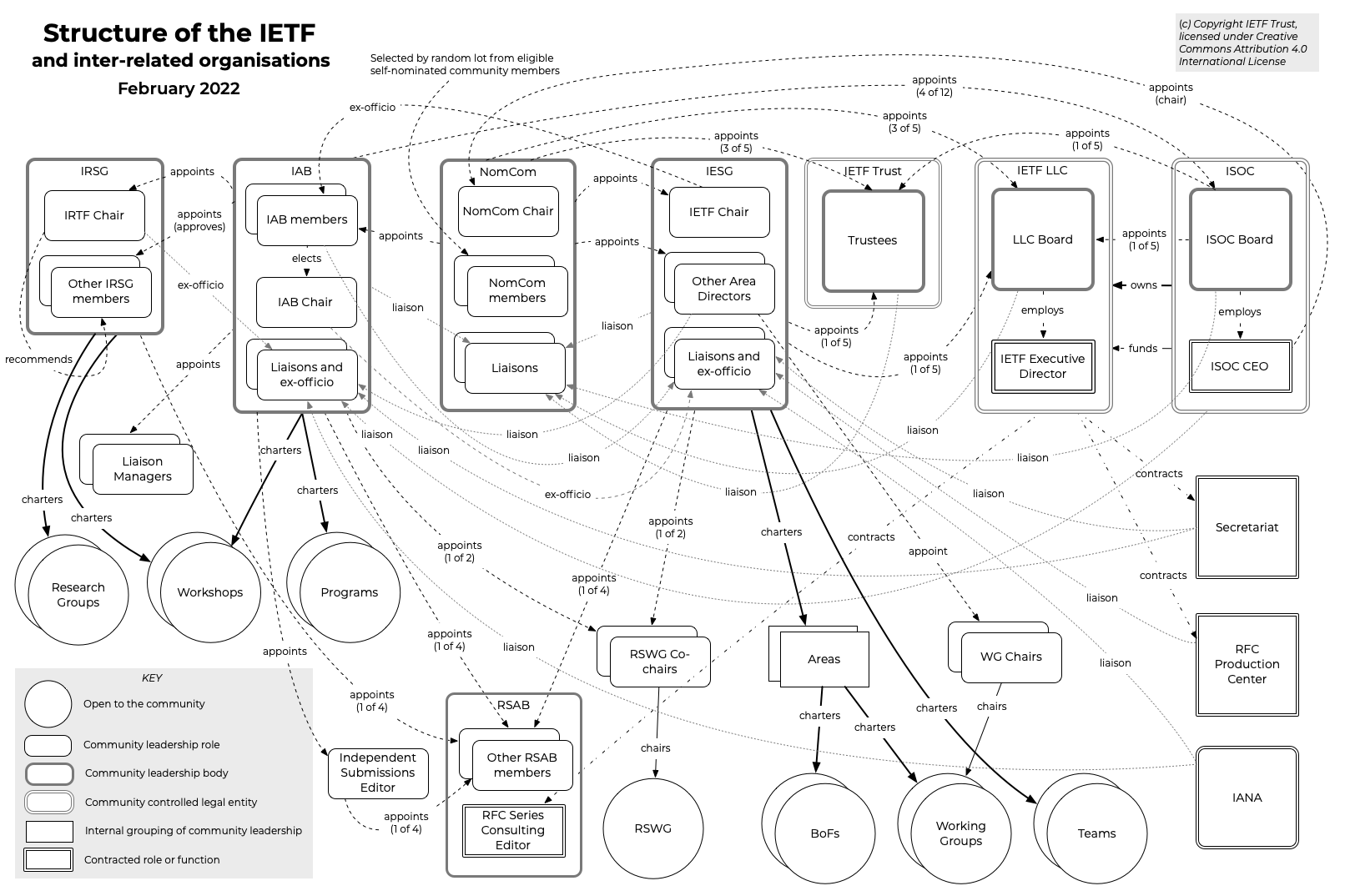

The Internet Engineering Task Force (IETF) is a standards organization for the Internet standard, Internet and is responsible for the technical standards that make up the Internet protocol suite (TCP/IP). It has no formal membership roster or requirements and all its participants are volunteers. Their work is usually funded by employers or other sponsors. The IETF was initially supported by the federal government of the United States but since 1993 has operated under the auspices of the Internet Society, a non-profit organization with local chapters around the world. Organization There is no membership in the IETF. Anyone can participate by signing up to a working group mailing list, or registering for an IETF meeting. The IETF operates in a bottom-up task creation mode, largely driven by working groups. Each working group normally has appointed two co-chairs (occasionally three); a charter that describes its focus; and what it is expected to produce, and when. It is open ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Head-of-line Blocking

Head-of-line blocking (HOL blocking) in computer networking is a performance-limiting phenomenon that occurs when a queue of packets is held up by the first packet in the queue. This occurs, for example, in input-buffered network switches, out-of-order delivery and multiple requests in HTTP pipelining. Network switches A switch may be composed of buffered input ports, a switch fabric, and buffered output ports. If first-in first-out (FIFO) input buffers are used, only the oldest packet is available for forwarding. If the oldest packet cannot be transmitted due to its target output being busy, then more recent arrivals cannot be forwarded. The output may be busy if there is output contention. Without HOL blocking, the new arrivals could potentially be forwarded around the stuck oldest packet to their respective destinations. HOL blocking can produce performance-degrading effects in input-buffered systems. This phenomenon limits the throughput of switches. For FIFO input bu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Compression Oracle Attack

In the field of security engineering, an oracle attack is an attack that exploits the availability of a weakness in a system that can be used as an "oracle" to give a simple go/no go indication to inform attackers how close they are to their goals. The attacker can then combine the oracle with a systematic search of the problem space to complete their attack. The padding oracle attack, and compression oracle attacks such as BREACH, are examples of oracle attacks, as was the practice of "crib-dragging" in the cryptanalysis of the Enigma machine. An oracle need not be 100% accurate: even a small statistical correlation with the correct go/no go result can frequently be enough for a systematic automated attack. In a compression oracle attack the use of adaptive data compression on a mixture of chosen plaintext and unknown plaintext can result in content-sensitive changes in the length of the compressed text that can be detected even though the content of the compressed text itself is ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Huffman Code

In computer science and information theory, a Huffman code is a particular type of optimal prefix code that is commonly used for lossless data compression. The process of finding or using such a code is Huffman coding, an algorithm developed by David A. Huffman while he was a Sc.D. student at MIT, and published in the 1952 paper "A Method for the Construction of Minimum-Redundancy Codes". The output from Huffman's algorithm can be viewed as a variable-length code table for encoding a source symbol (such as a character in a file). The algorithm derives this table from the estimated probability or frequency of occurrence (''weight'') for each possible value of the source symbol. As in other entropy encoding methods, more common symbols are generally represented using fewer bits than less common symbols. Huffman's method can be efficiently implemented, finding a code in time linear to the number of input weights if these weights are sorted. However, although optimal among metho ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Facebook

Facebook is a social media and social networking service owned by the American technology conglomerate Meta Platforms, Meta. Created in 2004 by Mark Zuckerberg with four other Harvard College students and roommates, Eduardo Saverin, Andrew McCollum, Dustin Moskovitz, and Chris Hughes, its name derives from the face book directories often given to American university students. Membership was initially limited to Harvard students, gradually expanding to other North American universities. Since 2006, Facebook allows everyone to register from 13 years old, except in the case of a handful of nations, where the age requirement is 14 years. , Facebook claimed almost 3.07 billion monthly active users worldwide. , Facebook ranked as the List of most-visited websites, third-most-visited website in the world, with 23% of its traffic coming from the United States. It was the most downloaded mobile app of the 2010s. Facebook can be accessed from devices with Internet connectivit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

HTTP Speed+Mobility

HTTP (Hypertext Transfer Protocol) is an application layer protocol in the Internet protocol suite model for distributed, collaborative, hypermedia information systems. HTTP is the foundation of data communication for the World Wide Web, where hypertext documents include hyperlinks to other resources that the user can easily access, for example by a mouse click or by tapping the screen in a web browser. Development of HTTP was initiated by Tim Berners-Lee at CERN in 1989 and summarized in a simple document describing the behavior of a client and a server using the first HTTP version, named 0.9. That version was subsequently developed, eventually becoming the public 1.0. Development of early HTTP Requests for Comments (RFCs) started a few years later in a coordinated effort by the Internet Engineering Task Force (IETF) and the World Wide Web Consortium (W3C), with work later moving to the IETF. HTTP/1 was finalized and fully documented (as version 1.0) in 1996. It evolved ( ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Microsoft

Microsoft Corporation is an American multinational corporation and technology company, technology conglomerate headquartered in Redmond, Washington. Founded in 1975, the company became influential in the History of personal computers#The early 1980s and home computers, rise of personal computers through software like Windows, and the company has since expanded to Internet services, cloud computing, video gaming and other fields. Microsoft is the List of the largest software companies, largest software maker, one of the Trillion-dollar company, most valuable public U.S. companies, and one of the List of most valuable brands, most valuable brands globally. Microsoft was founded by Bill Gates and Paul Allen to develop and sell BASIC interpreters for the Altair 8800. It rose to dominate the personal computer operating system market with MS-DOS in the mid-1980s, followed by Windows. During the 41 years from 1980 to 2021 Microsoft released 9 versions of MS-DOS with a median frequen ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Chunked Transfer Encoding

Chunked transfer encoding is a streaming data transfer mechanism available in Hypertext Transfer Protocol (HTTP) version 1.1, defined in RFC 9112 §7.1. In chunked transfer encoding, the data stream is divided into a series of non-overlapping "chunks". The chunks are sent out and received independently of one another. No knowledge of the data stream outside the currently-being-processed chunk is necessary for both the sender and the receiver at any given time. Each chunk is preceded by its size in bytes. The transmission ends when a zero-length chunk is received. The ''chunked'' keyword in the Transfer-Encoding header is used to indicate chunked transfer. Chunked transfer encoding is not supported in HTTP/2, which provides its own mechanisms for data streaming. Rationale The introduction of chunked encoding provided various benefits: * Chunked transfer encoding allows a server to maintain an HTTP persistent connection for dynamically generated content. In this case, the HTTP ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

HTTP Pipelining

HTTP pipelining is a feature of HTTP/1.1, which allows multiple HTTP requests to be sent over a single TCP connection without waiting for the corresponding responses. HTTP/1.1 requires servers to respond to pipelined requests correctly, with non-pipelined but valid responses even if server does not support HTTP pipelining. Despite this requirement, many legacy HTTP/1.1 servers do not support pipelining correctly, forcing most HTTP clients to not use HTTP pipelining. The technique was superseded by multiplexing via HTTP/2, which is supported by most modern browsers. In HTTP/3, multiplexing is accomplished via QUIC which replaces TCP. This further reduces loading time, as there is no head-of-line blocking even if some packets are lost. Motivation and limitations The pipelining of requests results in a dramatic improvement in the loading times of HTML pages, especially over high latency connections such as satellite Internet connections. The speedup is less apparent on broadban ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Minify

Minification (also minimisation or minimization) is the process of removing all unnecessary characters from the source code of interpreted programming languages or markup languages without changing its functionality. These unnecessary characters usually include whitespace characters, new line characters, comments, and sometimes block delimiters, which are used to add readability to the code but are not required for it to execute. Minification reduces the size of the source code, making its transmission over a network (e.g. the Internet) more efficient. In programmer culture, aiming at extremely minified source code is the purpose of recreational code golf competitions and a part of the demoscene. Minification can be distinguished from the more general concept of data compression in that the minified source can be interpreted immediately without the need for a decompression step: the same interpreter can work with both the original as well as with the minified source. The goa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Content Delivery Network

A content delivery network (CDN) or content distribution network is a geographically distributed network of proxy servers and their data centers. The goal is to provide high availability and performance ("speed") by distributing the service spatially relative to end users. CDNs came into existence in the late 1990s as a means for alleviating the performance bottlenecks of the Internet as the Internet was starting to become a mission-critical medium for people and enterprises. Since then, CDNs have grown to serve a large portion of Internet content, including web objects (text, graphics and scripts), downloadable objects (media files, software, documents), applications ( e-commerce, portals), live streaming media, on-demand streaming media, and social media sites. CDNs are a layer in the internet ecosystem. Content owners such as media companies and e-commerce vendors pay CDN operators to deliver their content to their end users. In turn, a CDN pays Internet service providers ( ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Firewall (computing)

In computing, a firewall is a network security system that monitors and controls incoming and outgoing network traffic based on configurable security rules. A firewall typically establishes a barrier between a trusted network and an untrusted network, such as the Internet or between several VLANs. Firewalls can be categorized as network-based or host-based. History The term '' firewall'' originally referred to a wall to confine a fire within a line of adjacent buildings. Later uses refer to similar structures, such as the metal sheet separating the engine compartment of a vehicle or aircraft from the passenger compartment. The term was applied in the 1980s to network technology that emerged when the Internet was fairly new in terms of its global use and connectivity. The predecessors to firewalls for network security were routers used in the 1980s. Because they already segregated networks, routers could filter packets crossing them. Before it was used in real-life comput ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |