
Grammatical Man

''Grammatical Man: Information, Entropy, Language, and Life'' is a 1982 book written by the Evening Standard's Washington correspondent, Jeremy Campbell. The book touches on probability, information theory, cybernetics, genetics and linguistics. It frames and examines existence, from the Big Bang to DNA to human communication to artificial intelligence, in terms of information processes. The text consists of a foreword, twenty-one chapters, and an afterword. It is divided into four parts: ''Establishing the Theory of Information''; ''Nature as an Information Process''; ''Coding Language, Coding Life''; ''How the Brain Puts It All Together''.

Part 1: Establishing the Theory of Information
*The book's first chapter, ''The Second Law and the Yellow Peril'', introduces the concept of entropy and gives brief outlines of the histories of information theory and cybernetics, examining World War II figures such as Claude Shannon and Norbert Wiener.
*''The Noise of H ...

Information Theory

Information theory is the scientific study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual informat ...
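The coin and die comparison above can be checked numerically. The following is a minimal sketch, assuming the standard Shannon formula H = -Σ p·log2(p) measured in bits; the function name `shannon_entropy` is illustrative and does not come from the summary.

```python
import math

def shannon_entropy(probabilities):
    """Return the Shannon entropy, in bits, of a discrete distribution.

    Assumes the probabilities are non-negative and sum to 1.
    Zero-probability outcomes contribute nothing to the sum.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# Fair coin: two equally likely outcomes.
coin = [1 / 2] * 2
# Fair die: six equally likely outcomes.
die = [1 / 6] * 6

coin_entropy = shannon_entropy(coin)
die_entropy = shannon_entropy(die)

print(f"coin: {coin_entropy:.3f} bits")
print(f"die:  {die_entropy:.3f} bits")
```

For equally likely outcomes the entropy reduces to log2 of the number of outcomes, so the die (log2 6) carries more uncertainty than the coin (log2 2).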

2nd Law Of Thermodynamics

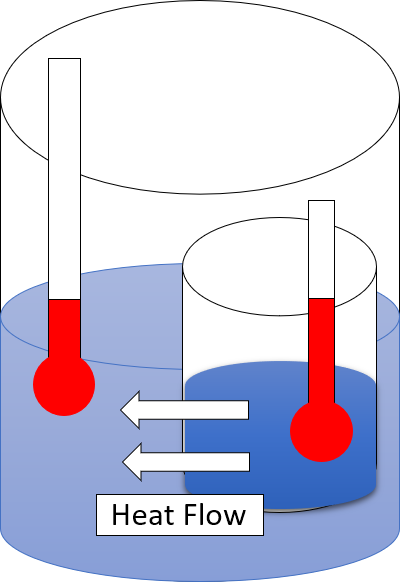

The second law of thermodynamics is a physical law based on universal experience concerning heat and energy interconversions. One simple statement of the law is that heat always moves from hotter objects to colder objects (or "downhill"), unless energy in some form is supplied to reverse the direction of heat flow. Another definition is: "Not all heat energy can be converted into work in a cyclic process." (Young, H. D.; Freedman, R. A. (2004). ''University Physics'', 11th edition. Pearson. p. 764.) The second law of thermodynamics in other versions establishes the concept of entropy as a physical property of a thermodynamic system. It can be used to predict whether processes are forbidden despite obeying the requirement of conservation of energy as expressed in the first law of thermodynamics, and it provides necessary criteria for spontaneous processes. The second law may be formulated by the observation that the entropy of isolated systems ...

Universal Grammar

Universal grammar (UG), in modern linguistics, is the theory of the genetic component of the language faculty, usually credited to Noam Chomsky. The basic postulate of UG is that there are innate constraints on what the grammar of a possible human language could be. When linguistic stimuli are received in the course of language acquisition, children adopt specific syntactic rules that conform to UG. The advocates of this theory emphasize and partially rely on the poverty of the stimulus (POS) argument and the existence of some universal properties of natural human languages. However, the latter has not been firmly established, as some linguists have argued that languages are so diverse that such universality is rare. It is a matter of empirical investigation to determine precisely what properties are universal and what linguistic capacities are innate.

Argument: The theory of universal grammar proposes that if human beings are brought up under normal conditions (not those of ex ...

David Layzer

David Raymond Layzer (December 31, 1925 – August 16, 2019) was an American astrophysicist, cosmologist, and the Donald H. Menzel Professor Emeritus of Astronomy at Harvard University. He is known for his cosmological theory of the expansion of the universe, which postulates that its order and information are increasing despite the second law of thermodynamics. He is also known for being one of the most notable researchers who advocated for a Cold Big Bang theory. When he proposed this theory in 1966, he suggested it would solve Olbers' paradox, which holds that the night sky on Earth should be much brighter than it actually is. He also published several articles critiquing hereditarian views on human intelligence, such as those of Richard Herrnstein and Arthur Jensen. He became a member of the American Academy of Arts and Sciences in 1963, and was also a member of Divisions B and J of the International Astronomical Union. ...

Laplace's Demon

In the history of science, Laplace's demon was a notable published articulation of causal determinism on a scientific basis by Pierre-Simon Laplace in 1814. According to determinism, if someone (the demon) knows the precise location and momentum of every atom in the universe, their past and future values for any given time are entailed; they can be calculated from the laws of classical mechanics. On this view, "free will" is merely an illusion, and every action previously taken, currently being taken, or that will take place was destined to happen from the instant of the Big Bang. Discoveries and theories in the decades following suggest that some elements of Laplace's original writing are wrong or incompatible with our universe. For example, irreversible processes in thermodynamics suggest that Laplace's "demon" could not reconstruct past positions and momenta from the current state. This intellect is often referred to as ''Laplace's d ...

Redundancy (information Theory)

In information theory, redundancy measures the fractional difference between the entropy $H(X)$ of an ensemble $X$, and its maximum possible value $\log(|\mathcal{A}_X|)$. Informally, it is the amount of wasted "space" used to transmit certain data. Data compression is a way to reduce or eliminate unwanted redundancy, while forward error correction is a way of adding desired redundancy for purposes of error detection and correction when communicating over a noisy channel of limited capacity.

Quantitative definition: In describing the redundancy of raw data, the rate of a source of information is the average entropy per symbol. For memoryless sources, this is merely the entropy of each symbol, while, in the most general case of a stochastic process, it is

$$r = \lim_{n \to \infty} \frac{1}{n} H(M_1, M_2, \dots M_n),$$

in the limit, as ''n'' goes to infinity, of the joint entropy of the first ''n'' symbols divided by ''n''. It is common in information theory to speak of the "rate" or "entropy" of a language. Th ...
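For a memoryless source, the "fractional difference" described above can be illustrated with a short calculation. This is a minimal sketch under stated assumptions: the source is memoryless, entropy is measured in bits, and redundancy is taken as 1 − H(X)/log2|A_X|; the function names and the example distributions are hypothetical.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy in bits; zero-probability symbols are skipped."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

def relative_redundancy(probabilities):
    """Fractional gap between the entropy and its maximum log2(alphabet size).

    Assumes a memoryless source whose alphabet has at least two symbols.
    """
    if len(probabilities) < 2:
        raise ValueError("alphabet must contain at least two symbols")
    h = entropy_bits(probabilities)
    h_max = math.log2(len(probabilities))
    return 1 - h / h_max

# A uniform four-symbol source uses its alphabet fully: no redundancy.
uniform = [0.25, 0.25, 0.25, 0.25]
# A skewed four-symbol source has entropy 1.75 bits against a maximum of 2.
skewed = [0.5, 0.25, 0.125, 0.125]

print(relative_redundancy(uniform))
print(relative_redundancy(skewed))
```

The skewed source wastes one eighth of the available capacity, which is the share an ideal compressor could in principle remove.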

Subjective Probability

Bayesian probability is an interpretation of the concept of probability, in which, instead of frequency or propensity of some phenomenon, probability is interpreted as reasonable expectation representing a state of knowledge or as quantification of a personal belief. The Bayesian interpretation of probability can be seen as an extension of propositional logic that enables reasoning with hypotheses; that is, with propositions whose truth or falsity is unknown. In the Bayesian view, a probability is assigned to a hypothesis, whereas under frequentist inference, a hypothesis is typically tested without being assigned a probability. Bayesian probability belongs to the category of evidential probabilities; to evaluate the probability of a hypothesis, the Bayesian probabilist specifies a prior probability. This, in turn, is then updated to a posterior probability in the light of new, relevant data (evidence). The Bayesian interpretation provides a standard set of procedures and formula ...
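The step from prior to posterior described above can be sketched with Bayes' rule over a small set of hypotheses. The example is illustrative only: the hypothesis names, the prior of 0.5 each, and the likelihood values are assumptions chosen for the demonstration, and `bayes_update` is a hypothetical helper.

```python
def bayes_update(prior, likelihood):
    """Return the posterior over hypotheses after observing one piece of evidence.

    prior:      dict mapping hypothesis -> P(hypothesis)
    likelihood: dict mapping hypothesis -> P(evidence | hypothesis)
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(evidence), the normalizing constant
    if evidence == 0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return {h: value / evidence for h, value in unnormalized.items()}

# Assumed setup: a coin is either fair or biased towards heads (P(heads) = 0.8),
# with equal prior belief in each hypothesis.
prior = {"fair": 0.5, "biased": 0.5}
likelihood_of_heads = {"fair": 0.5, "biased": 0.8}

# Observing a single head shifts belief towards the biased hypothesis.
posterior = bayes_update(prior, likelihood_of_heads)
print(posterior)
```

Feeding the posterior back in as the next prior repeats the update for each new observation.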

John Maynard Keynes

John Maynard Keynes, 1st Baron Keynes (5 June 1883 – 21 April 1946), was an English economist whose ideas fundamentally changed the theory and practice of macroeconomics and the economic policies of governments. Originally trained in mathematics, he built on and greatly refined earlier work on the causes of business cycles. One of the most influential economists of the 20th century, he produced writings that are the basis for the school of thought known as Keynesian economics, and its various offshoots. His ideas, reformulated as New Keynesianism, are fundamental to mainstream macroeconomics. Keynes's intellect was evident early in life; in 1902, he gained admittance to the competitive mathematics program at King's College at the University of Cambridge. During the Great Depression of the 1930s, Keynes spearheaded a revolution in economic thinking, challenging the ideas of neoclassical economics that held that free markets would, in the short to medium term, a ...

Richard Von Mises

Richard Edler von Mises (19 April 1883 – 14 July 1953) was an Austrian scientist and mathematician who worked on solid mechanics, fluid mechanics, aerodynamics, aeronautics, statistics and probability theory. He held the position of Gordon McKay Professor of Aerodynamics and Applied Mathematics at Harvard University. Shortly before his death, he described his work in his own words as being on "... practical analysis, integral and differential equations, mechanics, hydrodynamics and aerodynamics, constructive geometry, probability calculus, statistics and philosophy." Although best known for his mathematical work, von Mises also contributed to the philosophy of science as a neo-positivist and empiricist, following the line of Ernst Mach. Historians of the Vienna Circle of logical empiricism recognize a "first phase" from 1907 through 1914 with Philipp Frank, Hans Hahn, and Otto Neurath. His older brother, Ludwig von Mises, held an opposite point of view with respect to po ...

Antoine Gombaud

Antoine Gombaud, ''alias'' Chevalier de Méré (1607 – 29 December 1684), was a French writer, born in Poitou. (E. Feuillâtre (Editor), ''Les Épistoliers Du XVIIe Siècle. Avec des Notices biographiques, des Notices littéraires, des Notes explicatives, des Jugements, un Questionnaire sur les Lettres et des Sujets de devoirs''. Librairie Larousse, 1952.) Although he was not a nobleman, he adopted the title ''chevalier'' (knight) for the character in his dialogues who represented his own views (chevalier de Méré because he was educated at Méré). Later his friends began calling him by that name. (Aaron Brown, ''The Poker Face of Wall Street'', John Wiley & Sons, 2006.)

Life: Gombaud was an important Salon theorist. Like many 17th-century liberal thinkers, he distrusted both hereditary power and democracy, a stance at odds with his self-bestowed noble title. He believed that questions are best resolved in open discussions among witty, fashionable, intelligent people. Gombaud's ...

Gerolamo Cardano

Gerolamo Cardano (also Girolamo or Geronimo; French: Jérôme Cardan; Latin: Hieronymus Cardanus; 24 September 1501 – 21 September 1576) was an Italian polymath, whose interests and proficiencies ranged through those of mathematician, physician, biologist, physicist, chemist, astrologer, astronomer, philosopher, writer, and gambler. He was one of the most influential mathematicians of the Renaissance, and was one of the key figures in the foundation of probability and the earliest introducer of the binomial coefficients and the binomial theorem in the Western world. He wrote more than 200 works on science. Cardano partially invented and described several mechanical devices including the combination lock, the gimbal consisting of three concentric rings allowing a supported compass or gyroscope to rotate freely, and the Cardan shaft with universal joints, which allows the transmission of rotary motion at various angles and is used in vehicles to this day. He made sig ...

History Of Probability

Probability has a dual aspect: on the one hand the likelihood of hypotheses given the evidence for them, and on the other hand the behavior of stochastic processes such as the throwing of dice or coins. The study of the former is historically older in, for example, the law of evidence, while the mathematical treatment of dice began with the work of Cardano, Pascal, Fermat and Christiaan Huygens in the 16th and 17th centuries. Probability is distinguished from statistics; see history of statistics. While statistics deals with data and inferences from it, (stochastic) probability deals with the stochastic (random) processes which lie behind data or outcomes.

Etymology: ''Probable'' and ''probability'' and their cognates in other modern languages derive from medieval learned Latin ''probabilis'', deriving from Cicero and generally applied to an opinion to mean ''plausible'' or ''generally approved''. The form ''probability'' is from Old French (14th c.) and directly from Latin (no ...