Gibbs' Inequality

In information theory, Gibbs' inequality is a statement about the information entropy of a discrete probability distribution. Several other bounds on the entropy of probability distributions are derived from Gibbs' inequality, including Fano's inequality. It was first presented by J. Willard Gibbs in the 19th century.

Gibbs' inequality: Suppose that $P = \{p_1, \ldots, p_n\}$ and $Q = \{q_1, \ldots, q_n\}$ are discrete probability distributions. Then

$$-\sum_{i=1}^n p_i \log p_i \leq -\sum_{i=1}^n p_i \log q_i$$

with equality if and only if $p_i = q_i$ for $i = 1, \dots, n$. Put in words, the information entropy of a distribution $P$ is less than or equal to its cross entropy with any other distribution $Q$. The difference between the two quantities is the Kullback–Leibler divergence or relative entropy, so the inequality can also be written:

$$D_{\mathrm{KL}}(P \parallel Q) \equiv \sum_{i=1}^n p_i \log \frac{p_i}{q_i} \geq 0.$$

Note that the use of base-2 logarithms is optional, and allows one to refer to the quantity on each side o ...
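The inequality can be checked numerically. The following is a minimal sketch, assuming natural logarithms and two small example distributions chosen purely for illustration; the function names `entropy` and `cross_entropy` are hypothetical helpers, not part of any library.

```python
import math

def entropy(p):
    """Shannon entropy -sum p_i log p_i in nats; terms with p_i = 0 contribute 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross entropy -sum p_i log q_i in nats; infinite if q_i = 0 where p_i > 0."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # 0 * log(q_i) is taken to be 0
        if qi == 0:
            return math.inf
        total -= pi * math.log(qi)
    return total

# Illustrative distributions (assumed values, not from the text).
p = [0.5, 0.25, 0.25]
q = [0.4, 0.4, 0.2]

# The gap between cross entropy and entropy is the KL divergence D_KL(P || Q).
gap = cross_entropy(p, q) - entropy(p)
print("entropy(P)          =", entropy(p))
print("cross_entropy(P, Q) =", cross_entropy(p, q))
print("gap is non-negative:", gap >= 0)
```

Setting `q = p` makes the gap vanish up to floating-point error, which matches the equality condition.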

Josiah Willard Gibbs -from MMS-

Josiah or Yoshiyahu was the 16th king of Judah (c. 640–609 BCE). According to the Hebrew Bible, he instituted major religious reforms by removing official worship of gods other than Yahweh. Until the 1990s, the biblical description of Josiah's reforms was usually considered to be more or less accurate, but that is now heavily debated. According to the Bible, Josiah became king of the Kingdom of Judah at the age of eight, after the assassination of his father, King Amon, and reigned for 31 years, from 641/640 to 610/609 BCE. Josiah is known only from biblical texts; no reference to him exists in other surviving texts of the period from ancient Egypt or Babylon, and no clear archaeological evidence, such as inscriptions bearing his name, has ever been found. However, a seal bearing the name "Nathan-melech," the name of an administrative official under King Josiah according to the biblical account, dating to the 7th century BCE, was found in situ in an archeological sit ...

Surprisal

In information theory, the information content, self-information, surprisal, or Shannon information is a basic quantity derived from the probability of a particular event occurring from a random variable. It can be thought of as an alternative way of expressing probability, much like odds or log-odds, but which has particular mathematical advantages in the setting of information theory. The Shannon information can be interpreted as quantifying the level of "surprise" of a particular outcome. As it is such a basic quantity, it also appears in several other settings, such as the length of a message needed to transmit the event given an optimal source coding of the random variable. The Shannon information is closely related to *entropy*, which is the expected value of the self-information of a random variable, quantifying how surprising the random variable is "on average". This is the average amount of self-information an observer would expect to gain about a random variable wh ...
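As a rough illustration, the self-information of an outcome with probability $p$ is conventionally $-\log p$, and entropy is its expected value. The sketch below assumes that standard definition; the helper names `surprisal` and `expected_surprisal` are hypothetical.

```python
import math

def surprisal(prob, base=2.0):
    """Self-information -log_base(prob) of an outcome with probability prob."""
    if not 0 < prob <= 1:
        raise ValueError("prob must lie in (0, 1]")
    return -math.log(prob, base)

def expected_surprisal(probs, base=2.0):
    """Entropy as the probability-weighted average of the surprisal."""
    return sum(p * surprisal(p, base) for p in probs if p > 0)

# Rarer outcomes are more surprising: halving the probability adds one bit.
for prob in (1.0, 0.5, 0.25, 0.125):
    print(prob, surprisal(prob))

# A fair coin averages one bit of surprise per flip.
print(expected_surprisal([0.5, 0.5]))
```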

Coding Theory

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission, and data storage. Codes are studied by various scientific disciplines, such as information theory, electrical engineering, mathematics, linguistics, and computer science, for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data. There are four types of coding:

1. Data compression (or *source coding*)
2. Error control (or *channel coding*)
3. Cryptographic coding
4. Line coding

Data compression attempts to remove unwanted redundancy from the data from a source in order to transmit it more efficiently. For example, DEFLATE data compression makes files small ...
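To make the source-coding idea concrete, here is a small sketch using Python's standard `zlib` module, which implements DEFLATE-based compression. The input string is an assumed, deliberately redundant example; real compression ratios depend heavily on the data.

```python
import zlib

# Highly redundant input (an assumption for illustration): a phrase repeated many times.
data = b"information theory " * 200

# Compress at the highest compression level (9), then restore the original bytes.
compressed = zlib.compress(data, 9)
restored = zlib.decompress(compressed)

print("original bytes:  ", len(data))
print("compressed bytes:", len(compressed))
print("lossless round trip:", restored == data)
```

Because the input repeats, the compressor can replace most of it with short back-references, which is the redundancy removal that source coding aims for.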

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
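The coin-versus-die comparison can be made quantitative. For $n$ equally likely outcomes the entropy in bits is $\log_2 n$; the sketch below assumes that standard formula, and the function name `uniform_entropy_bits` is hypothetical.

```python
import math

def uniform_entropy_bits(n_outcomes):
    """Entropy in bits of a uniform distribution over n_outcomes equally likely outcomes."""
    if n_outcomes < 1:
        raise ValueError("n_outcomes must be at least 1")
    return math.log2(n_outcomes)

coin = uniform_entropy_bits(2)  # fair coin: two outcomes
die = uniform_entropy_bits(6)   # fair die: six outcomes

print("coin:", coin, "bits")
print("die: ", die, "bits")
print("coin carries less information than die:", coin < die)
```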

Log Sum Inequality

The log sum inequality is used for proving theorems in information theory.

Statement: Let $a_1,\ldots,a_n$ and $b_1,\ldots,b_n$ be nonnegative numbers. Denote the sum of all $a_i$ by $a$ and the sum of all $b_i$ by $b$. The log sum inequality states that

$$\sum_{i=1}^n a_i\log\frac{a_i}{b_i}\geq a\log\frac{a}{b},$$

with equality if and only if the ratios $\frac{a_i}{b_i}$ are equal for all $i$, in other words $a_i = c\, b_i$ for all $i$. (Take $a_i\log \frac{a_i}{b_i}$ to be $0$ if $a_i=0$ and $\infty$ if $a_i>0$, $b_i=0$. These are the limiting values obtained as the relevant number tends to $0$.)

Proof: Notice that after setting $f(x)=x\log x$ we have

$$\begin{aligned} \sum_{i=1}^n a_i\log\frac{a_i}{b_i} & = \sum_{i=1}^n b_i f\left(\frac{a_i}{b_i}\right) = b\sum_{i=1}^n \frac{b_i}{b} f\left(\frac{a_i}{b_i}\right) \\ & \geq b f\left(\sum_{i=1}^n \frac{b_i}{b}\frac{a_i}{b_i}\right) = \dots \end{aligned}$$
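A quick numerical check can build intuition for the statement. This is a sketch with assumed example vectors; `log_sum_lhs` and `log_sum_rhs` are hypothetical helper names, and the zero-handling follows the conventions stated above.

```python
import math

def log_sum_lhs(a, b):
    """Left side: sum of a_i * log(a_i / b_i), with the stated limiting conventions."""
    total = 0.0
    for ai, bi in zip(a, b):
        if ai == 0:
            continue          # term is taken to be 0 when a_i = 0
        if bi == 0:
            return math.inf   # term is infinite when a_i > 0 and b_i = 0
        total += ai * math.log(ai / bi)
    return total

def log_sum_rhs(a, b):
    """Right side: a * log(a / b), where a and b are the two sums."""
    sa, sb = sum(a), sum(b)
    if sa == 0:
        return 0.0
    if sb == 0:
        return math.inf
    return sa * math.log(sa / sb)

# Illustrative nonnegative vectors (assumed values).
a = [1.0, 2.0, 3.0]
b = [2.0, 1.0, 1.0]
print(log_sum_lhs(a, b) >= log_sum_rhs(a, b))

# Equality case: a_i = c * b_i for all i (here c = 3).
a_eq = [6.0, 3.0, 3.0]
print(math.isclose(log_sum_lhs(a_eq, b), log_sum_rhs(a_eq, b)))
```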

Information Entropy

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. This measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across all potential states. Given a discrete random variable $X$, which may be any member $x$ within the set $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X}\to[0, 1]$, the entropy is

$$\mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x),$$

where $\Sigma$ denotes the sum over the variable's possible values. The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base *e* gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was ...
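The effect of the logarithm base can be seen by evaluating the same sum in three units. This is a minimal sketch; the distribution is an assumed example, and `entropy` is a hypothetical helper name.

```python
import math

def entropy(probs, base=2.0):
    """H(X) = -sum p(x) log_base p(x); outcomes with p(x) = 0 contribute nothing."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# Illustrative distribution over four outcomes (assumed values).
probs = [0.5, 0.25, 0.125, 0.125]

bits = entropy(probs, 2)          # base 2: bits (shannons)
nats = entropy(probs, math.e)     # base e: nats
hartleys = entropy(probs, 10)     # base 10: hartleys (dits, bans)

print("bits:    ", bits)
print("nats:    ", nats)
print("hartleys:", hartleys)
```

The three results differ only by a constant factor, since changing the base rescales every logarithm in the sum: for instance `nats` equals `bits * ln 2`.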

Bregman Divergence

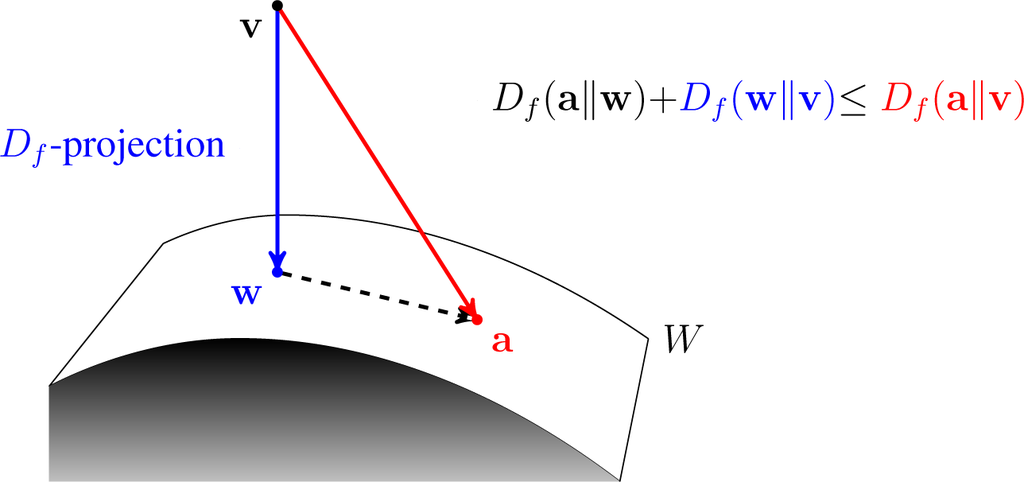

In mathematics, specifically statistics and information geometry, a Bregman divergence or Bregman distance is a measure of difference between two points, defined in terms of a strictly convex function; Bregman divergences form an important class of divergences. When the points are interpreted as probability distributions (notably as either values of the parameter of a parametric model or as a data set of observed values), the resulting distance is a statistical distance. The most basic Bregman divergence is the squared Euclidean distance. Bregman divergences are similar to metrics, but satisfy neither the triangle inequality (ever) nor symmetry (in general). However, they satisfy a generalization of the Pythagorean theorem, and in information geometry the corresponding statistical manifold is interpreted as a (dually) flat manifold. This allows many techniques of optimization theory to be generalized to Bregman divergences, geometrically as generalizations of least squares. Bregman ...
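The excerpt does not state the defining formula, so the sketch below assumes the standard one, $D_F(p, q) = F(p) - F(q) - \langle \nabla F(q),\, p - q \rangle$ for a strictly convex $F$. It illustrates two claims from the text: the squared Euclidean distance arises from $F(x) = \lVert x \rVert^2$, and symmetry can fail (here with the negative-entropy generator). All function names are hypothetical.

```python
import math

def bregman_divergence(F, grad_F, p, q):
    """D_F(p, q) = F(p) - F(q) - <grad F(q), p - q> (standard definition, assumed here)."""
    inner = sum(g * (pi - qi) for g, pi, qi in zip(grad_F(q), p, q))
    return F(p) - F(q) - inner

# Generator 1: squared Euclidean norm, whose gradient is 2x.
def sq_norm(x):
    return sum(xi * xi for xi in x)

def sq_norm_grad(x):
    return [2 * xi for xi in x]

# Generator 2: negative entropy sum x_i log x_i, whose gradient is log x_i + 1.
def neg_entropy(x):
    return sum(xi * math.log(xi) for xi in x)

def neg_entropy_grad(x):
    return [math.log(xi) + 1 for xi in x]

p, q = [1.0, 2.0], [3.0, -1.0]
d_euclid = bregman_divergence(sq_norm, sq_norm_grad, p, q)
print(d_euclid, sum((pi - qi) ** 2 for pi, qi in zip(p, q)))  # both are squared distances

u, v = [0.2, 0.8], [0.6, 0.4]
d_uv = bregman_divergence(neg_entropy, neg_entropy_grad, u, v)
d_vu = bregman_divergence(neg_entropy, neg_entropy_grad, v, u)
print(d_uv, d_vu)  # the two orderings give different values
```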

Jensen's Inequality

In mathematics, Jensen's inequality, named after the Danish mathematician Johan Jensen, relates the value of a convex function of an integral to the integral of the convex function. It was proved by Jensen in 1906, building on an earlier proof of the same inequality for doubly-differentiable functions by Otto Hölder in 1889. Given its generality, the inequality appears in many forms depending on the context, some of which are presented below. In its simplest form the inequality states that the convex transformation of a mean is less than or equal to the mean applied after convex transformation (or equivalently, the opposite inequality for concave transformations). Jensen's inequality generalizes the statement that the secant line of a convex function lies *above* the graph of the function, which is Jensen's inequality for two points: the secant line consists of weighted means of the convex function (for $t \in [0,1]$),

$$t f(x_1) + (1-t) f(x_2),$$

while the g ...
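The finite, weighted form of the inequality is easy to test numerically. The sketch below uses assumed points and weights, with `math.exp` as a convex function and `math.log` as a concave one; `jensen_gap` is a hypothetical helper name.

```python
import math

def jensen_gap(f, xs, weights):
    """Return mean(f(x)) - f(mean(x)) for weights that are nonnegative and sum to 1."""
    mean_x = sum(w * x for w, x in zip(weights, xs))
    mean_fx = sum(w * f(x) for w, x in zip(weights, xs))
    return mean_fx - f(mean_x)

weights = [0.2, 0.5, 0.3]  # illustrative weights (assumed values)

# Convex f: the gap is non-negative.
gap_convex = jensen_gap(math.exp, [-1.0, 0.5, 2.0], weights)

# Concave f: the inequality reverses, so the gap is non-positive.
gap_concave = jensen_gap(math.log, [1.0, 2.0, 4.0], weights)

print("convex gap: ", gap_convex)
print("concave gap:", gap_concave)
```

With only two points and weights `[t, 1 - t]`, the same function measures how far the secant line lies above the graph.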

Logarithm

In mathematics, the logarithm of a number is the exponent by which another fixed value, the base, must be raised to produce that number. For example, the logarithm of 1000 to base 10 is 3, because 1000 is 10 to the 3rd power: $1000 = 10^3$. More generally, if $x = b^y$, then $y$ is the logarithm of $x$ to base $b$, written $\log_b x$, so $\log_{10} 1000 = 3$. As a single-variable function, the logarithm to base $b$ is the inverse of exponentiation with base $b$. The logarithm base 10 is called the *decimal* or *common* logarithm and is commonly used in science and engineering. The *natural* logarithm has the number $e$ as its base; its use is widespread in mathematics and physics because of its very simple derivative. The *binary* logarithm uses base 2 and is widely used in computer science, information theory, music theory, and photography. When the base is unambiguous from the context or irrelevant it is often omitted, and the logarithm is written $\log x$. Logarithms were introduced by John Napier in 1614 as a means of simplifying calculation ...

Kullback–Leibler Divergence

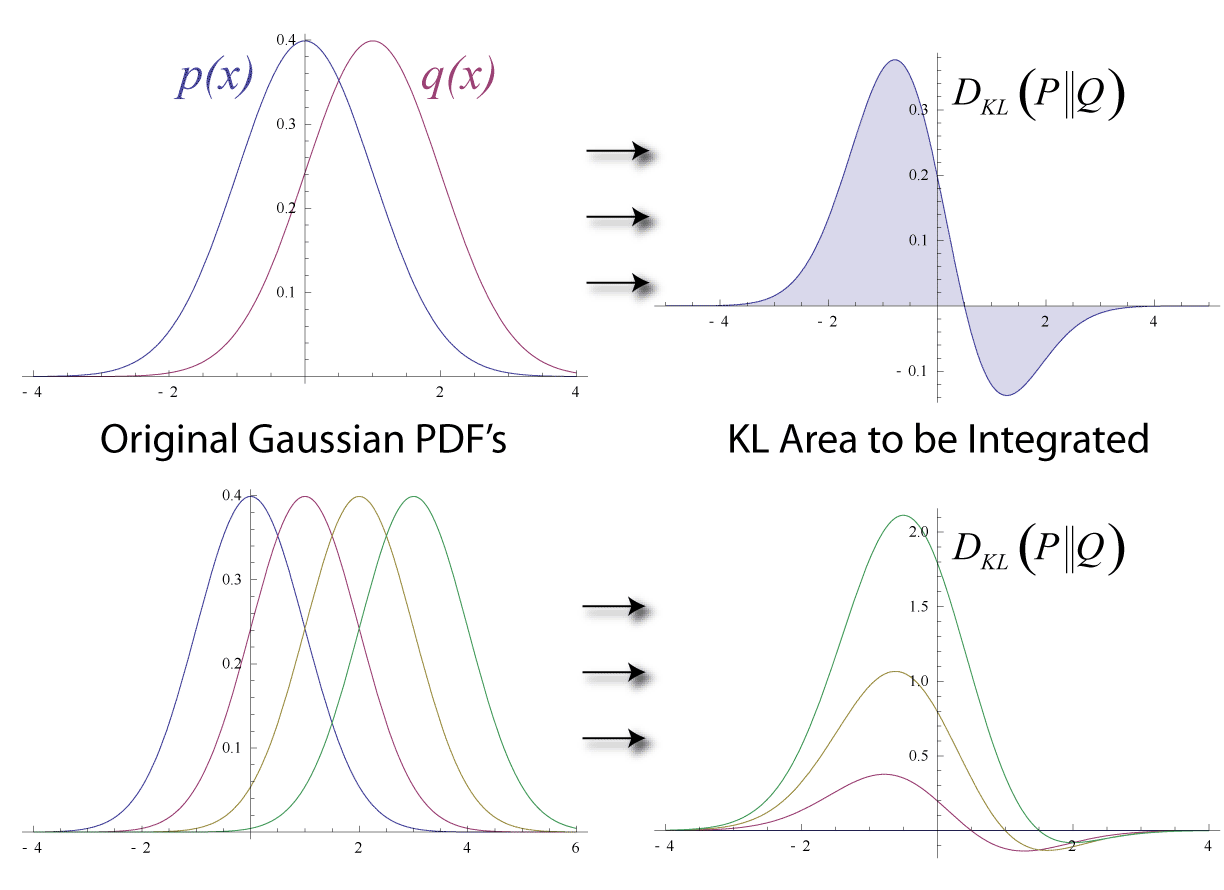

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_\text{KL}(P \parallel Q)$, is a type of statistical distance: a measure of how much a model probability distribution $Q$ is different from a true probability distribution $P$. Mathematically, it is defined as

$$D_\text{KL}(P \parallel Q) = \sum_{x \in \mathcal{X}} P(x) \, \log \frac{P(x)}{Q(x)}.$$

A simple interpretation of the KL divergence of $P$ from $Q$ is the expected excess surprise from using $Q$ as a model instead of $P$ when the actual distribution is $P$. While it is a measure of how different two distributions are and is thus a distance in some sense, it is not actually a metric, which is the most familiar and formal type of distance. In particular, it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for cer ...
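The lack of symmetry is easy to see on a two-outcome example. The sketch below assumes natural logarithms and illustrative distributions; `kl_divergence` is a hypothetical helper, not a library function.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) = sum P(x) log(P(x) / Q(x)) in nats.

    Terms with P(x) = 0 contribute 0; the result is infinite if Q(x) = 0 where P(x) > 0.
    """
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue
        if qx == 0:
            return math.inf
        total += px * math.log(px / qx)
    return total

# Illustrative distributions (assumed values): a fair coin and a heavily biased model.
true_dist = [0.5, 0.5]
model = [0.9, 0.1]

forward = kl_divergence(true_dist, model)  # excess surprise from modelling P with Q
reverse = kl_divergence(model, true_dist)  # roles of the two distributions swapped

print("D_KL(P || Q) =", forward)
print("D_KL(Q || P) =", reverse)
print("symmetric:", math.isclose(forward, reverse))
```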