
G-test

In statistics, ''G''-tests are likelihood-ratio or maximum likelihood statistical significance tests that are increasingly being used in situations where chi-squared tests were previously recommended.

Formulation

The general formula for ''G'' is

: G = 2\sum_i O_i \ln\left(\frac{O_i}{E_i}\right),

where O_i \geq 0 is the observed count in a cell, E_i > 0 is the expected count under the null hypothesis, \ln denotes the natural logarithm, and the sum is taken over all non-empty cells. Under the null hypothesis, G is approximately chi-squared distributed. Furthermore, the total observed count should equal the total expected count:

: \sum_i O_i = \sum_i E_i = N,

where N is the total number of observations.

Derivation

We can derive the value of the ''G''-test from the log-likelihood ratio test where the underlying model is a multinomial model. Suppose we had a sample x = (x_1, \ldots, x_m) where each x_i is the number of times that an object of type i was observed. Furthermore, let n = \sum_{i=1}^m x_i be the total number of objects observed. I ...
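As a minimal sketch of the Formulation paragraph, the statistic can be computed directly from observed and expected counts. The function name `g_statistic` and its argument names are illustrative, not part of the source, and the sketch assumes the standard form of the sum, O_i \ln(O_i / E_i) taken over non-empty cells.

```python
import math


def g_statistic(observed, expected):
    """Return G = 2 * sum(O_i * ln(O_i / E_i)) over non-empty cells.

    `observed` and `expected` are equal-length sequences of counts.
    Hypothetical helper for illustration only.
    """
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    if any(e <= 0 for e in expected):
        raise ValueError("expected counts must be strictly positive")
    # The text requires the observed and expected totals to agree.
    if not math.isclose(sum(observed), sum(expected)):
        raise ValueError("total observed count must equal total expected count")
    # Cells with O_i == 0 contribute nothing and are skipped.
    return 2.0 * sum(
        o * math.log(o / e) for o, e in zip(observed, expected) if o > 0
    )


if __name__ == "__main__":
    # 40 observations split 30/10 against an even 20/20 expectation.
    print(g_statistic([30, 10], [20, 20]))
```

When the observed counts match the expected counts exactly, every logarithm is zero and the statistic is 0; larger values indicate a larger departure from the null hypothesis.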

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Robert R

Robert Lee Rayford (February 3, 1953 – May 15, 1969), sometimes identified as Robert R. due to his age, was an American teenager from Missouri who has been suggested to represent the earliest confirmed case of HIV/AIDS in North America. This is based on evidence published in 1988 in which the authors claimed that medical evidence indicated that he was "infected with a virus closely related or identical to human immunodeficiency virus type 1." Rayford died of pneumonia, but his other symptoms baffled the doctors who treated him. A study published in 1988 reported the detection of antibodies against HIV. Results of testing for HIV genetic material were reported at a scientific conference in Australia in 1999. However, the data has never been published in a peer-reviewed medical or scientific journal. No photos of Rayford are known to exist. Background Robert Rayford was born on February 3, 1953, in St. Louis, Missouri. As a single parent, his mother Constance had to rais ...

Statistical Genetics

Statistical genetics is a scientific field concerned with the development and application of statistical methods for drawing inferences from genetic data. The term is most commonly used in the context of human genetics. Research in statistical genetics generally involves developing theory or methodology to support research in one of three related areas:
* population genetics - Study of evolutionary processes affecting genetic variation between organisms
* genetic epidemiology - Studying effects of genes on diseases
* quantitative genetics - Studying the effects of genes on 'normal' phenotypes

Statistical geneticists tend to collaborate closely with geneticists, molecular biologists, clinicians and bioinformaticians. Statistic ...

McDonald–Kreitman Test

The McDonald–Kreitman test is a statistical test often used by evolutionary and population biologists to detect and measure adaptive evolution within a species: it determines whether adaptive evolution has occurred and estimates the proportion of substitutions that resulted from positive selection (also known as directional selection). To do this, the McDonald–Kreitman test compares the amount of variation within a species (polymorphism) to the divergence between species (substitutions) at two types of sites, neutral and nonneutral. A substitution refers to a nucleotide that is fixed within one species, but a different nucleotide is fixed within a second species at the same base pair of homologous DNA sequences (Futuyma, D. J. 2013. Evolution. Sinauer Associates, Inc.: Sunderland). A site is nonneutral if it is either advantageous or deleterious. The two types of sites can be either synonymous or nonsynonymous within a protein-coding region. In a protein-coding sequence of D ...

Entropy (information Theory)

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. This measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across all potential states. Given a discrete random variable X, which may be any member x within the set \mathcal{X} and is distributed according to p\colon \mathcal{X}\to[0, 1], the entropy is \Eta(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x), where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' (Euler's number) gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a v ...
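The definition above translates almost line for line into code. The following is a minimal sketch assuming the distribution is given as a list of probabilities; the function name `entropy` and the `base` parameter are illustrative, and terms with p(x) = 0 are skipped under the usual convention that 0 log 0 = 0.

```python
import math


def entropy(probs, base=2.0):
    """Return -sum(p * log_base(p)) for a discrete distribution.

    base=2 gives bits, base=math.e gives nats, base=10 gives hartleys.
    Hypothetical helper for illustration only.
    """
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Zero-probability outcomes contribute nothing (0 * log 0 := 0).
    return -sum(p * math.log(p, base) for p in probs if p > 0)


if __name__ == "__main__":
    print(entropy([0.5, 0.5]))          # fair coin, in bits
    print(entropy([0.25] * 4))          # uniform over four outcomes, in bits
    print(entropy([0.5, 0.5], math.e))  # same fair coin, in nats
```

A fair coin carries one bit of entropy, and a uniform distribution over four outcomes carries two; changing `base` only rescales the result.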

Mutual Information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" (in units such as shannons (bits), nats or hartleys) obtained about one random variable by observing the other random variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the expected "amount of information" held in a random variable. Not limited to real-valued random variables and linear dependence like the correlation coefficient, MI is more general and determines how different the joint distribution of the pair (X,Y) is from the product of the marginal distributions of X and ...
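To illustrate the comparison between the joint distribution and the product of the marginals, here is a minimal sketch for two discrete variables. It assumes the standard discrete formula I(X;Y) = \sum_{x,y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)}, which does not appear in the excerpt above; the function name `mutual_information` and the nested-list layout of the joint table are likewise illustrative.

```python
import math


def mutual_information(joint, base=2.0):
    """Return I(X;Y) for a joint probability table.

    `joint[i][j]` is P(X = i, Y = j); rows index X, columns index Y.
    Hypothetical helper for illustration only.
    """
    total = sum(sum(row) for row in joint)
    if not math.isclose(total, 1.0):
        raise ValueError("joint probabilities must sum to 1")
    px = [sum(row) for row in joint]        # marginal distribution of X
    py = [sum(col) for col in zip(*joint)]  # marginal distribution of Y
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:  # zero cells contribute nothing
                mi += pxy * math.log(pxy / (px[i] * py[j]), base)
    return mi


if __name__ == "__main__":
    independent = [[0.25, 0.25], [0.25, 0.25]]
    dependent = [[0.5, 0.0], [0.0, 0.5]]
    print(mutual_information(independent))
    print(mutual_information(dependent))
```

For the independent table the joint distribution equals the product of the marginals, so the result is 0; for the perfectly dependent table, observing one variable reveals the other and the result is one bit.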

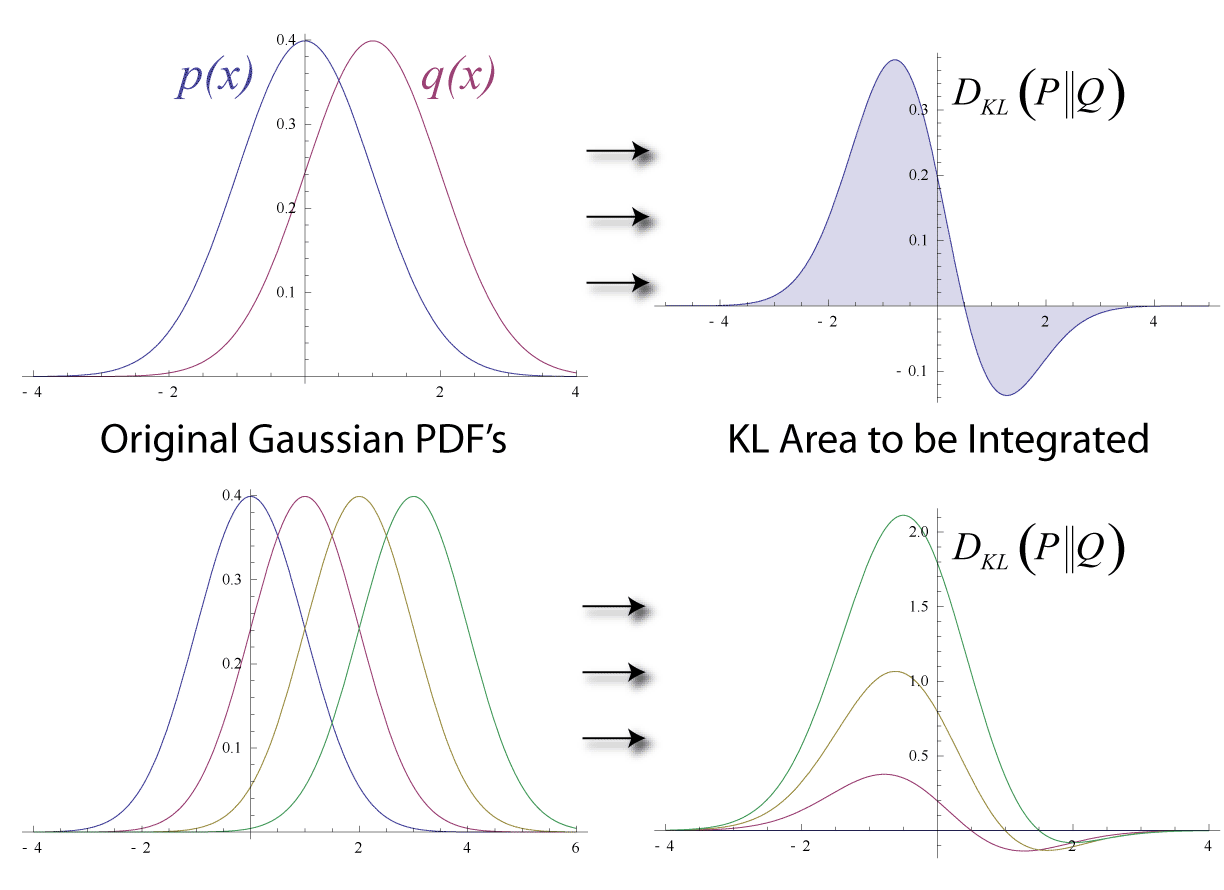

Kullback–Leibler Divergence

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted D_\text{KL}(P \parallel Q), is a type of statistical distance: a measure of how much a model probability distribution Q is different from a true probability distribution P. Mathematically, it is defined as D_\text{KL}(P \parallel Q) = \sum_{x \in \mathcal{X}} P(x) \, \log \frac{P(x)}{Q(x)}\text{.} A simple interpretation of the KL divergence of P from Q is the expected excess surprise from using Q as a model instead of P when the actual distribution is P. While it is a measure of how different two distributions are and is thus a distance in some sense, it is not actually a metric, which is the most familiar and formal type of distance. In particular, it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for cer ...
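The lack of symmetry is easy to see numerically. The sketch below assumes two discrete distributions given as equal-length lists over the same outcomes and uses the natural logarithm, so the result is in nats; the function name `kl_divergence` is illustrative.

```python
import math


def kl_divergence(p, q):
    """Return D_KL(P || Q) = sum(P(x) * ln(P(x) / Q(x))) in nats.

    Hypothetical helper for illustration only. Returns math.inf when Q
    assigns zero probability to an outcome that P allows.
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # 0 * ln(0 / q) is taken to be 0
        if qi == 0:
            return math.inf  # P puts mass where the model Q puts none
        total += pi * math.log(pi / qi)
    return total


if __name__ == "__main__":
    p = [0.5, 0.5]
    q = [0.9, 0.1]
    print(kl_divergence(p, q))  # divergence of P from Q
    print(kl_divergence(q, p))  # a different value: not symmetric
    print(kl_divergence(p, p))  # 0.0 for identical distributions
```

Swapping the arguments changes the result, which is one reason the KL divergence is not a metric.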

IEEE Transactions On Information Theory

''IEEE Transactions on Information Theory'' is a monthly peer-reviewed scientific journal published by the IEEE Information Theory Society. It covers information theory and the mathematics of communications. It was established in 1953 as ''IRE Transactions on Information Theory''. The editor-in-chief is Venugopal V. Veeravalli (University of Illinois Urbana-Champaign). As of 2007, the journal allows the posting of preprints on arXiv. According to Jack van Lint, it is the leading research journal in the whole field of coding theory. A 2006 study using the PageRank network analysis algorithm found that, among hundreds of computer science-related journals, ''IEEE Transactions on Information Theory'' had the highest ranking and was thus deemed the most prestigious. ''ACM Computing Surveys'', with the highest impact factor ...

Annals Of Statistics

The ''Annals of Statistics'' is a peer-reviewed statistics journal published by the Institute of Mathematical Statistics. It was started in 1973 as a continuation in part of the ''Annals of Mathematical Statistics (1930)'', which was split into the ''Annals of Statistics'' and the ''Annals of Probability''. The journal CiteScore is 5.8, and its SCImago Journal Rank is 5.877, both from 2020. Articles older than 3 years are available on JSTOR, and all articles since 2004 are freely available on the arXiv.

Editorial board

The following persons have been editors of the journal:
* Ingram Olkin (1972–1973)
* I. Richard Savage (1974–1976)
* Rupert G. Miller (1977–1979)
* David V. Hinkley (1980–1982)
* Michael D. Perlm ...

Efficiency (statistics)

In statistics, efficiency is a measure of quality of an estimator, of an experimental design, or of a hypothesis testing procedure. Essentially, a more efficient estimator needs fewer input data or observations than a less efficient one to achieve the Cramér–Rao bound. An ''efficient estimator'' is characterized by having the smallest possible variance, indicating that there is a small deviance between the estimated value and the "true" value in the L2 norm sense. The relative efficiency of two procedures is the ratio of their efficiencies, although often this concept is used where the comparison is made between a given procedure and a notional "best possible" procedure. The efficiencies and the relative efficiency of two procedures theoretically depend on the sample size available for the given procedure, but it is often possible to use the asymptotic relative efficiency (defined as the limit of the relative efficiencies as the sample size grows) as the principal comparison ...

Pearson's Chi-squared Test

Pearson's chi-squared test or Pearson's \chi^2 test is a statistical test applied to sets of categorical data to evaluate how likely it is that any observed difference between the sets arose by chance. It is the most widely used of many chi-squared tests (e.g., Yates, likelihood ratio, portmanteau test in time series, etc.) – statistical procedures whose results are evaluated by reference to the chi-squared distribution. Its properties were first investigated by Karl Pearson in 1900. In contexts where it is important to make a distinction between the test statistic and its distribution, names similar to ''Pearson χ-squared'' test or statistic are used. It is a p-value test. The setup is as follows:
* Before the experiment, the experimenter fixes a certain number N of samples to take.
* The observed data is (O_1, O_2, ..., O_n), the count number of samples from a finite set of given categories. They satisfy \sum_i O_i = N.
* The null hypothesis is that the count numbers ...
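The excerpt is cut off before the test statistic is stated. As a hedged illustration, the sketch below assumes the standard Pearson statistic \chi^2 = \sum_i (O_i - E_i)^2 / E_i, with expected counts E_i supplied by the caller; the function name `pearson_chi2` is illustrative and the formula itself is not taken from the text above.

```python
import math


def pearson_chi2(observed, expected):
    """Return sum((O_i - E_i)**2 / E_i) over all categories.

    Hypothetical helper for illustration only; assumes the observed
    counts sum to the same total N as the expected counts.
    """
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    if any(e <= 0 for e in expected):
        raise ValueError("expected counts must be strictly positive")
    if not math.isclose(sum(observed), sum(expected)):
        raise ValueError("observed and expected totals must both equal N")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


if __name__ == "__main__":
    # N = 40 samples in two categories, with 20 expected in each.
    print(pearson_chi2([30, 10], [20, 20]))
```

For these counts each category contributes (10)^2 / 20 = 5, so the statistic is 10.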

Derivation (chi-squared)

Derivation may refer to:

Language
* Morphological derivation, a word-formation process
* Parse tree or concrete syntax tree, representing a string's syntax in formal grammars

Law
* Derivative work, in copyright law
* Derivation proceeding, a proceeding in United States patent law

Music
* The creation of a derived row, in the twelve-tone musical technique

Science and mathematics
* Derivation (differential algebra), a unary function satisfying the Leibniz product law
* Formal proof or derivation, a sequence of sentences each of which is an axiom or follows from the preceding sentences in the sequence by a rule of inference
* An after-the-fact justification for an action, in the work of sociologist Vilfredo Pareto

See also
* Derive (disambiguation), for meanings of "derive" and "derived"
* Derivative ...