
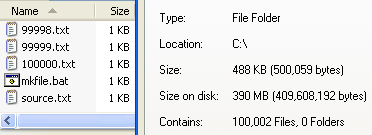

Free-space Bitmap

Free-space bitmaps are one method used by some file systems to track allocated sectors. While the simplest design is highly inefficient, advanced or hybrid implementations of free-space bitmaps are used by some modern file systems. Example: the simplest form of free-space bitmap is a bit array, i.e. a block of bits. In this example, a zero indicates a free sector, while a one indicates a sector in use. Each sector is of fixed size. For explanatory purposes, we will use a 4 GiB hard drive with 4096-byte sectors and assume that the bitmap itself is stored elsewhere. The example disk would require 1,048,576 bits, one for each sector, or 128 KiB (1,048,576 / 8 = 131,072 bytes). Increasing the size of the drive proportionately increases the size of the bitmap, while multiplying the sector size produces a proportionate reduction. When the operating system (OS) needs to write a file, it scans the bitmap until it finds enough free locations to fit the file. If a 12 KiB fi ...
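The sizing arithmetic and the scan described above can be sketched in a few lines of Python. This is a minimal illustration, not the layout of any particular file system: the function names, the least-significant-bit-first ordering within each byte, and the choice to accept non-contiguous free sectors are all assumptions made for the example.

```python
SECTOR_SIZE = 4096          # bytes per sector, as in the example above
DISK_SIZE = 4 * 1024**3     # 4 GiB


def bitmap_size_bytes(disk_size: int, sector_size: int) -> int:
    """One bit per sector, rounded up to a whole number of bytes."""
    sectors = disk_size // sector_size
    return (sectors + 7) // 8


def allocate(bitmap: bytearray, sectors_needed: int) -> list[int]:
    """Scan for free sectors (bit 0), mark them in use (bit 1), return their indices.

    Assumption: bit i of the bitmap lives in byte i // 8 at bit position i % 8.
    """
    found: list[int] = []
    for index in range(len(bitmap) * 8):
        if len(found) == sectors_needed:
            break
        byte, bit = divmod(index, 8)
        if not (bitmap[byte] >> bit) & 1:
            found.append(index)
    if len(found) < sectors_needed:
        raise OSError("not enough free sectors")
    # Only mark sectors once we know the whole request can be satisfied.
    for index in found:
        byte, bit = divmod(index, 8)
        bitmap[byte] |= 1 << bit
    return found
```

For the 4 GiB disk, `bitmap_size_bytes(DISK_SIZE, SECTOR_SIZE)` gives 131,072 bytes, i.e. 128 KiB. A linear scan like this touches every bit in the worst case, which is one reason the simplest design is considered inefficient.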

Disk Sector

In computer disk storage, a sector is a subdivision of a track on a magnetic disk or optical disc. Each sector stores a fixed amount of user-accessible data, traditionally 512 bytes for hard disk drives (HDDs) and 2048 bytes for CD-ROMs and DVD-ROMs. Newer HDDs use 4096-byte (4 KiB) sectors, which are known as the Advanced Format (AF). The sector is the minimum storage unit of a hard drive. Most disk partitioning schemes are designed to have files occupy an integral number of sectors regardless of the file's actual size. Files that do not fill a whole sector will have the remainder of their last sector filled with zeroes. In practice, operating systems typically operate on blocks of data, which may span multiple sectors. Geometrically, the word sector means a portion of a disk between a center, two radii and a corresponding arc (see Figure 1, item B), which is shaped like a slice of a pie. Thus, the disk sector (Figure 1, item C) refers to the intersection o ...
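Because files occupy a whole number of sectors, the space a file consumes is its size rounded up to the next sector boundary. The short Python sketch below works through that arithmetic; the function names are hypothetical and the 512-byte default is simply the traditional HDD sector size mentioned above.

```python
def sectors_needed(file_size: int, sector_size: int = 512) -> int:
    """Number of whole sectors required to hold file_size bytes (ceiling division)."""
    return -(-file_size // sector_size)


def padding_bytes(file_size: int, sector_size: int = 512) -> int:
    """Unused bytes in the file's last sector."""
    return sectors_needed(file_size, sector_size) * sector_size - file_size
```

For instance, a 1000-byte file on 512-byte sectors needs 2 sectors and leaves 24 bytes of its last sector unused, while a 4096-byte file on a 4096-byte-sector drive fills exactly one sector.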

File System Fragmentation

In computing, file system fragmentation, sometimes called file system aging, is the tendency of a file system to lay out the contents of files non-contiguously to allow in-place modification of their contents. It is a special case of data fragmentation. File system fragmentation increases seek time on spinning storage media, which in turn reduces throughput. Fragmentation can be remedied by re-organizing files and free space back into contiguous areas, a process called defragmentation. Solid-state drives do not physically seek, so their non-sequential data access is hundreds of times faster than that of drives with moving parts, making fragmentation a non-issue. Defragmenting solid-state storage is not recommended, because it can prematurely wear drives via unnecessary write–erase operations. Causes: when a file system is first initialized on a partition, it contains only a few small internal structures and is otherwise one contiguous block of empty space. This means that th ...

B-tree

In computer science, a B-tree is a self-balancing tree data structure that maintains sorted data and allows searches, sequential access, insertions, and deletions in logarithmic time. The B-tree generalizes the binary search tree, allowing for nodes with more than two children. Unlike other self-balancing binary search trees, the B-tree is well suited for storage systems that read and write relatively large blocks of data, such as databases and file systems. Origin: B-trees were invented by Rudolf Bayer and Edward M. McCreight while working at Boeing Research Labs, for the purpose of efficiently managing index pages for large random-access files. The basic assumption was that indices would be so voluminous that only small chunks of the tree could fit in main memory. Bayer and McCreight's paper, "Organization and maintenance of large ordered indices", was first circulated in July 1970 and later published in Acta Informatica. Bayer and McCreight never explained what, ...

ExFAT

exFAT (Extensible File Allocation Table) is a file system introduced by Microsoft in 2006 and optimized for flash memory such as USB flash drives and SD cards. exFAT was proprietary until 28 August 2019, when Microsoft published its specification. Microsoft owns patents on several elements of its design. exFAT can be used where NTFS is not a feasible solution (due to data-structure overhead), but where a greater file-size limit than that of the standard FAT32 file system (i.e. 4 GB) is required. exFAT has been adopted by the SD Association as the default file system for SDXC cards larger than 32 GB. Windows 8 and later versions natively support exFAT boot, and support the installation of the system in a special way to run in the exFAT volume. History: exFAT was introduced in late 2006 as part of Windows CE 6.0, an embedded Windows operating system. Most of the vendors signing on for licenses are manufacturers of embedded systems or device manufacturers that pr ...

High Performance File System

HPFS (High Performance File System) is a file system created specifically for the OS/2 operating system to improve upon the limitations of the FAT file system. It was written by Gordon Letwin and others at Microsoft and added to OS/2 version 1.2, at that time still a joint undertaking of Microsoft and IBM, and released in 1988. Overview: compared with FAT, HPFS provided a number of additional capabilities:
* Support for mixed case file names, in different code pages
* Support for long file names (255 characters as opposed to FAT's 8.3 naming scheme)
* More efficient use of disk space (files are not stored using multiple-sector clusters but on a per-sector basis)
* An internal architecture that keeps related items close to each other on the disk volume
* Less fragmentation of data
* Extent-based space allocation
* Separate datestamps for last modification, last access, and creation (as opposed to the last-modification-only datestamp in FAT implementations of the time)
* B+ tree stru ...

Block Availability Map

In computer file systems, a block availability map (BAM) is a data structure used to track disk blocks that are considered free (available for new data). It is used along with a directory to manage files on a disk (originally only a floppy disk, and later also a hard disk). In terms of Commodore DOS (CBM DOS) compatible disk drives, the BAM was a data structure stored in a reserved area of the disk (its size and location varied based on the physical characteristics of the disk). For each track, the BAM consisted of a bitmap of available blocks and (usually) a count of the available blocks. The count was held in a single byte, as all formats had 256 or fewer blocks per track. The count byte was simply the sum of all 1-bits in the bitmap of bytes for the current track. The following table illustrates the layout of Commodore 1541 BAM. The table would be larger for higher-capacity disks (described below). The bitmap was contained in 3 bytes for Commodore 1541 format (single ...
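The relationship between the count byte and the per-track bitmap can be illustrated with a short Python sketch. It assumes only what the text states, namely that a 1-bit marks an available block and that the count is the number of 1-bits; the function names and the sample bitmap bytes are hypothetical, not taken from a real disk image.

```python
def free_block_count(track_bitmap: bytes) -> int:
    """Sum of all 1-bits in a track's bitmap bytes (1 = block available)."""
    return sum(bin(byte).count("1") for byte in track_bitmap)


def entry_is_consistent(count_byte: int, track_bitmap: bytes) -> bool:
    """Check that a stored count byte matches the bitmap it summarizes."""
    return count_byte == free_block_count(track_bitmap)


# Hypothetical 3-byte bitmap for one track: 8 + 8 + 5 bits set.
sample_bitmap = bytes([0xFF, 0xFF, 0x1F])
```

With this sample, `free_block_count(sample_bitmap)` is 21, so a count byte of 21 would be consistent. Storing the count alongside the bitmap lets a drive report free space without recounting bits each time.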

Flash Memory

Flash memory is an electronic non-volatile computer memory storage medium that can be electrically erased and reprogrammed. The two main types of flash memory, NOR flash and NAND flash, are named for the NOR and NAND logic gates. Both use the same cell design, consisting of floating gate MOSFETs. They differ at the circuit level depending on whether the state of the bit line or word lines is pulled high or low: in NAND flash, the relationship between the bit line and the word lines resembles a NAND gate; in NOR flash, it resembles a NOR gate. Flash memory, a type of floating-gate memory, was invented at Toshiba in 1980 and is based on EEPROM technology. Toshiba began marketing flash memory in 1987. EPROMs had to be erased completely before they could be rewritten. NAND flash memory, however, may be erased, written, and read in blocks (or pages), which generally are much smaller than the entire device. NOR flash memory allows a single machine word to be written to an ...

Latency (engineering)

Latency, from a general point of view, is a time delay between the cause and the effect of some physical change in the system being observed. Lag, as it is known in gaming circles, refers to the latency between the input to a simulation and the visual or auditory response, often occurring because of network delay in online games. Latency is physically a consequence of the limited velocity at which any physical interaction can propagate. The magnitude of this velocity is always less than or equal to the speed of light. Therefore, every physical system with any physical separation (distance) between cause and effect will experience some sort of latency, regardless of the nature of the stimulation to which it has been exposed. The precise definition of latency depends on the system being observed or the nature of the simulation. In communications, the lower limit of latency is determined by the medium being used to transfer information. In reliable two-way communication ...

Random-access Memory

Random-access memory (RAM) is a form of computer memory that can be read and changed in any order, typically used to store working data and machine code. A random-access memory device allows data items to be read or written in almost the same amount of time irrespective of the physical location of data inside the memory, in contrast with other direct-access data storage media (such as hard disks, CD-RWs, DVD-RWs and the older magnetic tapes and drum memory), where the time required to read and write data items varies significantly depending on their physical locations on the recording medium, due to mechanical limitations such as media rotation speeds and arm movement. RAM contains multiplexing and demultiplexing circuitry, to connect the data lines to the addressed storage for reading or writing the entry. Usually more than one bit of storage is accessed by the same address, and RAM devices often have multiple data lines and are said to be "8-bit" or "16-bit", etc ...

File System

In computing, a file system or filesystem (often abbreviated to fs) is a method and data structure that the operating system uses to control how data is stored and retrieved. Without a file system, data placed in a storage medium would be one large body of data with no way to tell where one piece of data stopped and the next began, or where any piece of data was located when it was time to retrieve it. By separating the data into pieces and giving each piece a name, the data are easily isolated and identified. Taking its name from the way a paper-based data management system is named, each group of data is called a "file". The structure and logic rules used to manage the groups of data and their names are called a "file system". There are many kinds of file systems, each with unique structure and logic, properties of speed, flexibility, security, size and more. Some file systems have been designed to be used for specific applications. For example, the ISO 9660 file system is desi ...

Megabyte

The megabyte is a multiple of the unit byte for digital information. Its recommended unit symbol is MB. The unit prefix mega is a multiplier of 1,000,000 (10⁶) in the International System of Units (SI). Therefore, one megabyte is one million bytes of information. This definition has been incorporated into the International System of Quantities. In the computer and information technology fields, other definitions have been used that arose for historical reasons of convenience. A common usage has been to designate one megabyte as 1,048,576 bytes (2²⁰ B), a quantity that conveniently expresses the binary architecture of digital computer memory. The standards bodies have deprecated this usage of the megabyte in favor of a new set of binary prefixes, in which this quantity is designated by the unit mebibyte (MiB). Definitions: the unit megabyte is commonly used for 1000² (one million) bytes or 1024² bytes. The interpretation of using base 1024 originated as technical jargon for the byte multiples that ...
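The gap between the two definitions is easy to quantify. The Python sketch below compares the decimal megabyte with the binary mebibyte; the constant and function names are chosen for this illustration only.

```python
MB = 1000**2    # SI megabyte: 1,000,000 bytes
MIB = 1024**2   # mebibyte: 1,048,576 bytes (2**20)


def bytes_to_mb(n_bytes: int) -> float:
    """Express a byte count in decimal megabytes."""
    return n_bytes / MB


def bytes_to_mib(n_bytes: int) -> float:
    """Express a byte count in binary mebibytes."""
    return n_bytes / MIB


difference = MIB - MB   # bytes by which a mebibyte exceeds a megabyte
```

One mebibyte exceeds one megabyte by 48,576 bytes, a little under 5%, which is why the same capacity can be quoted with noticeably different figures depending on the convention used.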