
Evolutionary Neural Network

Neuroevolution, or neuro-evolution, is a form of artificial intelligence that uses evolutionary algorithms to generate artificial neural networks (ANNs), parameters, and rules. It is most commonly applied in artificial life, general game playing and evolutionary robotics. The main benefit is that neuroevolution can be applied more widely than supervised learning algorithms, which require a syllabus of correct input-output pairs. In contrast, neuroevolution requires only a measure of a network's performance at a task. For example, the outcome of a game (i.e., whether one player won or lost) can be easily measured without providing labeled examples of desired strategies. Neuroevolution is commonly used as part of the reinforcement learning paradigm, and it can be contrasted with conventional deep learning techniques that use backpropagation (gradient descent on a neural network) with a fixed topology. Features: Many neuroevolution algorithms have been defined. One common distinction ...

Artificial Intelligence

Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., ...

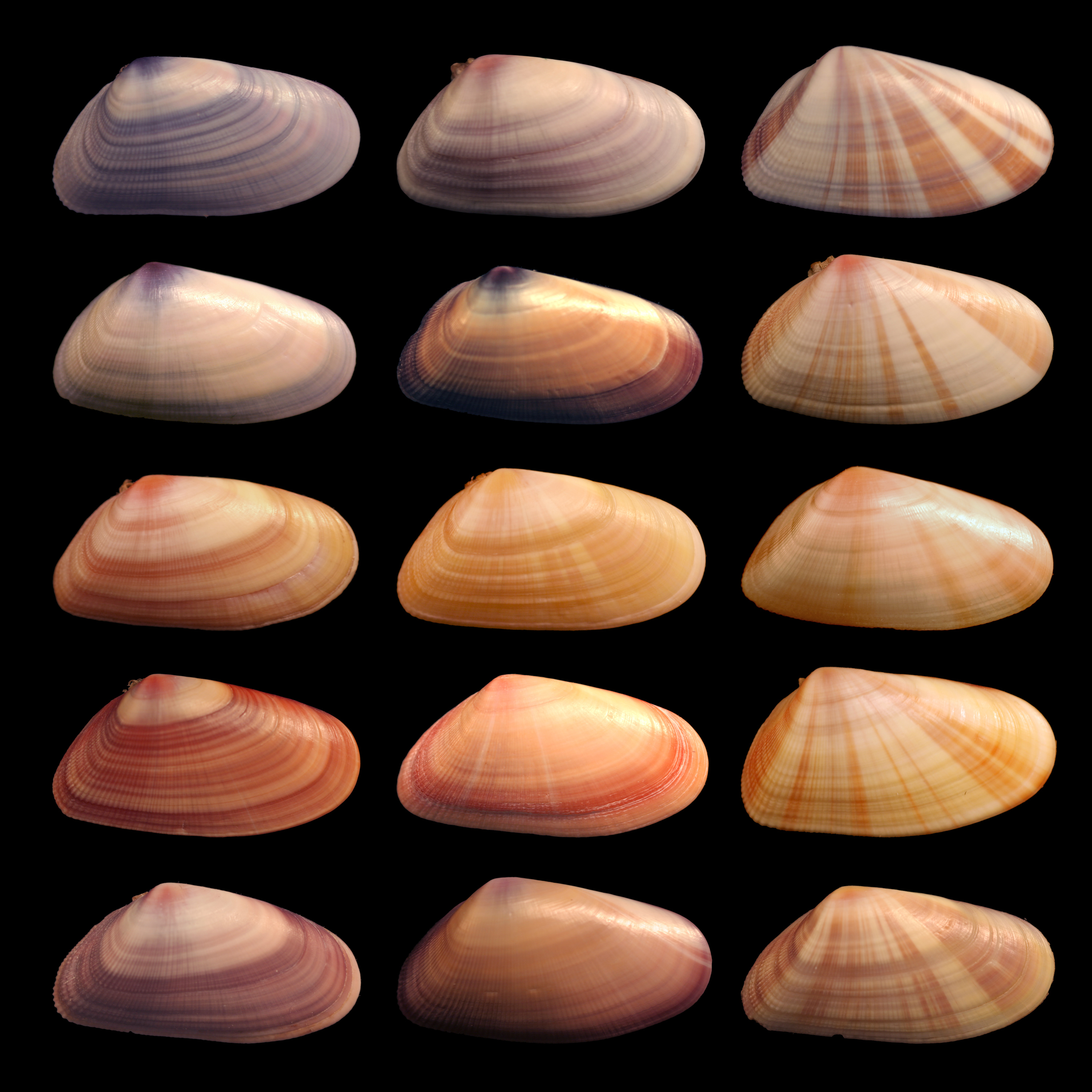

Phenotype

In genetics, the phenotype is the set of observable characteristics or traits of an organism. The term covers the organism's morphology (physical form and structure), its developmental processes, its biochemical and physiological properties, and its behavior. An organism's phenotype results from two basic factors: the expression of an organism's genetic code (its genotype) and the influence of environmental factors. Both factors may interact, further affecting the phenotype. When two or more clearly different phenotypes exist in the same population of a species, the species is called polymorphic. A well-documented example of polymorphism is Labrador Retriever coloring; while the coat color depends on many genes, it is clearly seen in the environment as yellow, black, and brown. Richard Dawkins in 1978 and again in his 1982 book ''The Extended Phenotype'' suggested that one can regard bird nests and other built structures such as caddisfly larva cases and beaver dams ...

Hypercube

In geometry, a hypercube is an ''n''-dimensional analogue of a square (''n'' = 2) and a cube (''n'' = 3); the special case for ''n'' = 4 is known as a ''tesseract''. It is a closed, compact, convex figure whose 1-skeleton consists of groups of opposite parallel line segments aligned in each of the space's dimensions, perpendicular to each other and of the same length. A unit hypercube's longest diagonal in ''n'' dimensions is equal to \sqrt{n}. An ''n''-dimensional hypercube is more commonly referred to as an ''n''-cube or sometimes as an ''n''-dimensional cube. The term measure polytope (originally from Elte, 1912) is also used, notably in the work of H. S. M. Coxeter, who also labels the hypercubes the γn polytopes. The hypercube is the special case of a hyperrectangle (also called an ''n-orthotope''). A ''unit hypercube'' is a hypercube whose side has length one unit. Often, the hypercube whose corners (or ''vertices'') are the 2^n points in R^n with each coordinate equal to 0 or 1 i ...
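The vertex count and longest diagonal of a unit hypercube can be checked numerically. The following is a minimal sketch; the function names `unit_hypercube_vertices` and `longest_diagonal` are illustrative choices and do not come from the source.

```python
import itertools
import math

def unit_hypercube_vertices(n):
    """Return the 2**n corners of the unit n-cube as tuples of 0s and 1s."""
    return list(itertools.product((0, 1), repeat=n))

def longest_diagonal(n):
    """Distance between the opposite corners (0, ..., 0) and (1, ..., 1)."""
    return math.dist((0,) * n, (1,) * n)

vertices = unit_hypercube_vertices(4)  # n = 4 is the tesseract
print(len(vertices))        # 16 corners, i.e. 2**4
print(longest_diagonal(4))  # 2.0, i.e. sqrt(4)
```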

Compositional Pattern-producing Network

Compositional pattern-producing networks (CPPNs) are a variation of artificial neural networks (ANNs) that have an architecture whose evolution is guided by genetic algorithms (Stanley, Kenneth O. "Compositional pattern producing networks: A novel abstraction of development." Genetic Programming and Evolvable Machines 8.2 (2007): 131-162). While ANNs often contain only sigmoid functions and sometimes Gaussian functions, CPPNs can include both types of functions and many others. The choice of functions for the canonical set can be biased toward specific types of patterns and regularities. For example, periodic functions such as sine produce segmented patterns with repetitions, while symmetric functions such as Gaussian produce symmetric patterns. Linear functions can be employed to produce linear or fractal-like patterns. Thus, the architect of a CPPN-based genetic art system can bias the types of patterns it generates by deciding the set of canonical functions to include. Furthermore, ...
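To illustrate how the choice of functions biases the resulting pattern, here is a minimal sketch of a hand-wired, two-node composition. It is not an evolved CPPN: the function `cppn`, its fixed structure, and the frequency constant 4.0 are assumptions made for illustration only.

```python
import math

def gaussian(x):
    """Symmetric activation: gaussian(-x) == gaussian(x)."""
    return math.exp(-x * x)

def cppn(x, y):
    """Hypothetical fixed composition, not an evolved network.

    The Gaussian of x makes the pattern mirror-symmetric about x = 0;
    the sine of y makes it repeat along y with period pi / 2.
    """
    return gaussian(x) * math.sin(4.0 * y)

# Sample the pattern on a coarse grid and print it as rows of numbers.
for row in range(5):
    y = row / 4.0
    print(" ".join(f"{cppn(col / 2.0 - 1.0, y):+.2f}" for col in range(5)))
```

Each printed row reads the same left to right as right to left, which is the symmetry contributed by the Gaussian node.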

HyperNEAT

Hypercube-based NEAT, or HyperNEAT, is a generative encoding that evolves artificial neural networks (ANNs) with the principles of the widely used NeuroEvolution of Augmenting Topologies (NEAT) algorithm developed by Kenneth Stanley. It is a novel technique for evolving large-scale neural networks using the geometric regularities of the task domain. It uses Compositional Pattern Producing Networks (CPPNs), which are used to generate the images for Picbreeder.org and shapes for EndlessForms.com. HyperNEAT has recently been extended to also evolve plastic ANNs and to evolve the location of every neuron in the network. Applications to date: multi-agent learning; checkers board evaluation; controlling legged robots; Comparing G ...

NeuroEvolution Of Augmenting Topologies

NeuroEvolution of Augmenting Topologies (NEAT) is a genetic algorithm (GA) for the generation of evolving artificial neural networks (a neuroevolution technique) developed by Kenneth Stanley and Risto Miikkulainen in 2002 while at The University of Texas at Austin. It alters both the weighting parameters and structures of networks, attempting to find a balance between the fitness of evolved solutions and their diversity. It is based on applying three key techniques: tracking genes with history markers to allow crossover among topologies, applying speciation (the evolution of species) to preserve innovations, and developing topologies incrementally from simple initial structures ("complexifying"). Performance: On simple control tasks, the NEAT algorithm often arrives at effective networks more quickly than other contemporary neuro-evolutionary techniques and reinforcement learning methods, as of 2006. Kenneth O. Stanley and Risto Miikkulainen (2002). "Evolving Neural Networks Through Augm ...
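The first of the three techniques, aligning genes by their history markers so that networks with different topologies can be crossed over, can be sketched as follows. This is a simplified illustration and not the reference implementation. The genome layout (a dict from marker number to a `(source, target, weight)` tuple) and the name `crossover` are assumptions, and speciation and structural mutation are omitted.

```python
import random

def crossover(fitter, other, rng):
    """Combine two genomes keyed by history marker (innovation number).

    Genes whose marker appears in both parents are inherited from either
    parent at random; genes found only in the fitter parent are kept as-is.
    """
    child = {}
    for marker, gene in fitter.items():
        if marker in other and rng.random() < 0.5:
            child[marker] = other[marker]
        else:
            child[marker] = gene
    return child

# Hypothetical parents: markers 1 and 2 match, 3 and 4 do not.
parent_a = {1: ("in0", "out", 0.5), 2: ("in1", "out", -0.3), 4: ("in0", "hid", 0.9)}
parent_b = {1: ("in0", "out", 0.1), 2: ("in1", "out", 0.7), 3: ("in1", "hid", -0.2)}
child = crossover(parent_a, parent_b, random.Random(0))
print(sorted(child))  # [1, 2, 4]: the child has the fitter parent's topology
```

Because the markers identify which connection genes correspond, no structural analysis of the two networks is needed to line them up.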

Simulated Annealing

Simulated annealing (SA) is a probabilistic technique for approximating the global optimum of a given function. Specifically, it is a metaheuristic to approximate global optimization in a large search space for an optimization problem. For large numbers of local optima, SA can find the global optimum. It is often used when the search space is discrete (for example the traveling salesman problem, the boolean satisfiability problem, protein structure prediction, and job-shop scheduling). For problems where finding an approximate global optimum is more important than finding a precise local optimum in a fixed amount of time, simulated annealing may be preferable to exact algorithms such as gradient descent or branch and bound. The name of the algorithm comes from annealing in metallurgy, a technique involving heating and controlled cooling of a material to alter its physical properties. Both are attributes of the material that depend on their thermodynamic free energy ...
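A minimal sketch of the technique on a one-dimensional function with several local minima is shown below. The objective `bumpy`, the geometric cooling schedule, and all parameter values are assumptions chosen for illustration; practical uses tune these per problem.

```python
import math
import random

def bumpy(x):
    """Illustrative objective with several local minima."""
    return x * x + 10.0 * math.sin(3.0 * x)

def simulated_annealing(f, x0, rng, steps=5000, t0=1.0, cooling=0.999, step_size=0.5):
    """Minimize f from x0; return the best point seen and its value."""
    x, fx = x0, f(x0)
    best_x, best_fx = x, fx
    t = t0
    for _ in range(steps):
        cand = x + rng.uniform(-step_size, step_size)
        fc = f(cand)
        # Always accept improvements; accept a worse candidate with
        # probability exp(-(fc - fx) / t), which shrinks as t cools.
        if fc <= fx or rng.random() < math.exp((fx - fc) / t):
            x, fx = cand, fc
            if fx < best_fx:
                best_x, best_fx = x, fx
        t *= cooling  # geometric cooling (an assumed schedule)
    return best_x, best_fx

best_x, best_fx = simulated_annealing(bumpy, 4.0, random.Random(0))
print(best_x, best_fx)
```

Accepting some worse moves while the temperature is high is what lets the search leave a local minimum. As the temperature falls, the procedure behaves more and more like plain local descent.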

Evolutionary Programming

Evolutionary programming is an evolutionary algorithm in which a share of the new population is created by mutating the previous population, without crossover. Evolutionary programming differs from evolution strategy ES(\mu+\lambda) in one detail: all individuals are selected for the new population, while in ES(\mu+\lambda), every individual has the same probability to be selected. It is one of the four major evolutionary algorithm paradigms. History: It was first used by Lawrence J. Fogel in the US in 1960 in order to use simulated evolution as a learning process aiming to generate artificial intelligence. It was used to evolve finite-state machines as predictors. See also: Artificial intelligence; Genetic algorithm; Genetic opera ...

Genetic Programming

Genetic programming (GP) is an evolutionary algorithm, an artificial intelligence technique mimicking natural evolution, which operates on a population of programs. It applies the genetic operators selection according to a predefined fitness measure, mutation and crossover. The crossover operation involves swapping specified parts of selected pairs (parents) to produce new and different offspring that become part of the new generation of programs. Some programs not selected for reproduction are copied from the current generation to the new generation. Mutation involves substitution of some random part of a program with some other random part of a program. Then the selection and other operations are recursively applied to the new generation of programs. Typically, members of each new generation are on average more fit than the members of the previous gene ...

S-expression

In computer programming, an S-expression (or symbolic expression, abbreviated as sexpr or sexp) is an expression in a like-named notation for nested list (tree-structured) data. S-expressions were invented for, and popularized by, the programming language Lisp, which uses them for source code as well as data. Characteristics: In the usual parenthesized syntax of Lisp, an S-expression is classically defined (John McCarthy (1960/2006), Recursive functions of symbolic expressions. Originally published in Communications of the ACM) as (1) an atom of the form ''x'', or (2) an expression of the form (''x'' . ''y'') where ''x'' and ''y'' are S-expressions. This definition reflects LISP's representation of a list as a series of "cells", each one an ordered pair. In plain lists, ''y'' points to the next cell (if any), thus forming a list. The Recursi ...
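The cell-based definition can be sketched with Python tuples standing in for ordered pairs. This is an illustrative model only: the names `cons`, `from_list` and `to_text`, and the use of `None` for the empty list, are assumptions and do not reflect how any particular Lisp stores its data.

```python
NIL = None  # stand-in for the empty list

def cons(x, y):
    """One cell: an ordered pair (x . y)."""
    return (x, y)

def from_list(items):
    """Chain cells so that each second slot points to the next cell."""
    cell = NIL
    for item in reversed(items):
        cell = cons(item, cell)
    return cell

def to_text(sexp):
    """Render an atom, a plain list, or a dotted pair in Lisp notation."""
    if not isinstance(sexp, tuple):
        return "nil" if sexp is NIL else str(sexp)
    parts = []
    while isinstance(sexp, tuple):
        parts.append(to_text(sexp[0]))
        sexp = sexp[1]
    if sexp is not NIL:  # the chain did not end in NIL: dotted pair
        parts += [".", to_text(sexp)]
    return "(" + " ".join(parts) + ")"

print(to_text(from_list(["a", "b", "c"])))             # (a b c)
print(to_text(cons("x", "y")))                         # (x . y)
print(to_text(from_list(["a", from_list(["b", "c"])])))  # (a (b c))
```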

Genetic Algorithm

In computer science and operations research, a genetic algorithm (GA) is a metaheuristic inspired by the process of natural selection that belongs to the larger class of evolutionary algorithms (EA). Genetic algorithms are commonly used to generate high-quality solutions to optimization and search problems via biologically inspired operators such as selection, crossover, and mutation. Some examples of GA applications include optimizing decision trees for better performance, solving sudoku puzzles, hyperparameter optimization, and causal inference. Methodology (optimization problems): In a genetic algorithm, a population of candidate solutions (called individuals, creatures, organisms, or phenotypes) to an optimization problem is evolved toward better solutions. Each candidate solution has a set of properties (its chromosomes or genotype) which can be mutated and altered; traditionally, solutions are represented in binary as strings of 0s and 1s, but other encod ...
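The selection, crossover and mutation operators can be sketched on the traditional binary encoding. The example below maximizes the number of 1s in a bit string (a toy objective often called OneMax). The tournament size, elitism, and all parameter values are assumptions for illustration and are not prescribed by the text above.

```python
import random

def fitness(bits):
    """Toy objective: count of 1s in the bit string."""
    return sum(bits)

def evolve(rng, n_bits=20, pop_size=30, generations=40, mutation_rate=0.02):
    """Return the best individual and the best fitness per generation."""
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        best = max(pop, key=fitness)
        history.append(fitness(best))
        next_pop = [best[:]]  # elitism: carry the best over unchanged
        while len(next_pop) < pop_size:
            # Selection: the fitter of three random individuals, twice.
            p1 = max(rng.sample(pop, 3), key=fitness)
            p2 = max(rng.sample(pop, 3), key=fitness)
            # Crossover: one cut point, head of p1 joined to tail of p2.
            cut = rng.randrange(1, n_bits)
            child = p1[:cut] + p2[cut:]
            # Mutation: flip each bit with a small probability.
            child = [b ^ 1 if rng.random() < mutation_rate else b for b in child]
            next_pop.append(child)
        pop = next_pop
    best = max(pop, key=fitness)
    history.append(fitness(best))
    return best, history

best, history = evolve(random.Random(0))
print(fitness(best), history[0], history[-1])
```

Because the best individual is copied into each new generation, the recorded best fitness never decreases from one generation to the next.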