
Defragmenter

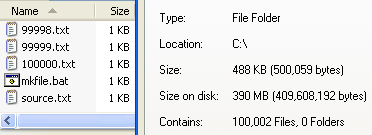

In the maintenance of file systems, defragmentation is a process that reduces the degree of fragmentation. It does this by physically organizing the contents of the mass storage device used to store files into the smallest number of contiguous regions (fragments, extents). It also attempts to create larger regions of free space using compaction to impede the return of fragmentation. Some defragmentation utilities try to keep smaller files within a single directory together, as they are often accessed in sequence. Defragmentation is advantageous and relevant to file systems on electromechanical disk drives (hard disk drives, floppy disk drives and optical disk media). The movement of the hard drive's read/write heads over different areas of the disk when accessing fragmented files is slower than accessing the ent ...

Boot-time Defragmentation

In the maintenance of file systems, defragmentation is a process that reduces the degree of fragmentation. It does this by physically organizing the contents of the mass storage device used to store files into the smallest number of contiguous regions (fragments, extents). It also attempts to create larger regions of free space using compaction to impede the return of fragmentation. Some defragmentation utilities try to keep smaller files within a single directory together, as they are often accessed in sequence. Defragmentation is advantageous and relevant to file systems on electromechanical disk drives (hard disk drives, floppy disk drives and optical disk media). Moving the hard drive's read/write heads over different areas of the disk to access a fragmented file is slower than reading the entire contents of a non-fragmented file sequentially, without moving the heads to seek other fragments.

Causes of fragmentation

Fragmentatio ...
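
The notions of fragments and head movement described in this entry can be made concrete with a small model. The following is a minimal sketch, not how any real defragmenter works: it assumes a file is just an ordered list of block numbers, and the helper names (`count_fragments`, `head_travel`, `defragment`) and the sample block numbers are hypothetical.

```python
def count_fragments(blocks):
    """Count contiguous runs (extents) in a file's ordered list of block numbers."""
    if not blocks:
        return 0
    fragments = 1
    for prev, cur in zip(blocks, blocks[1:]):
        if cur != prev + 1:  # a jump means a new fragment starts here
            fragments += 1
    return fragments


def head_travel(blocks):
    """Sum the distances of all jumps between non-adjacent blocks (a crude seek proxy)."""
    return sum(
        abs(cur - prev)
        for prev, cur in zip(blocks, blocks[1:])
        if cur != prev + 1
    )


def defragment(blocks, start):
    """Model relocating the file into one contiguous extent beginning at `start`."""
    return list(range(start, start + len(blocks)))


# Illustrative data: a 7-block file scattered over three regions of the disk.
fragmented = [10, 11, 40, 41, 42, 7, 8]
compact = defragment(fragmented, start=100)

print(count_fragments(fragmented), head_travel(fragmented))
print(count_fragments(compact), head_travel(compact))
```

In this toy model the relocated file occupies a single extent and needs no jumps between fragments, which is the effect the entry attributes to defragmentation on drives with moving heads.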

Microsoft Drive Optimizer

Microsoft Drive Optimizer (formerly Disk Defragmenter) is a utility in Microsoft Windows designed to increase data access speed by rearranging files stored on a disk to occupy contiguous storage locations, a technique called defragmentation. Defragmenting a disk minimizes head travel, which reduces the time it takes to read files from and write files to the disk. As a result of the decreased read and write times, Microsoft Drive Optimizer decreases system startup times for systems starting from magnetic storage devices such as a hard drive. However, defragmentation is not helpful on flash-memory storage devices such as solid state drives, USB drives or SD cards, as these drives do not use a head. Defragmentation may decrease lifespan for certain technologies, e.g. solid state drives. Microsoft Drive Optimizer was first officially shipped with Windows XP. From Windows ...

Hard Disk Drive

A hard disk drive (HDD), hard disk, hard drive, or fixed disk is an electro-mechanical data storage device that stores and retrieves digital data using magnetic storage with one or more rigid rapidly rotating platters coated with magnetic material. The platters are paired with magnetic heads, usually arranged on a moving actuator arm, which read and write data to the platter surfaces. Data is accessed in a random-access manner, meaning that individual blocks of data can be stored and retrieved in any order. HDDs are a type of non-volatile storage, retaining stored data when powered off. Modern HDDs are typically in the form of a small rectangular box. Introduced by IBM in 1956, HDDs were the dominant secondary storage device for general-purpose computers beginning in the early 1960s. HDDs maintained this position into the modern era of servers and personal computers, though personal computing devices produced in large volume, like cell phones and tablets, rely on ...

Comparison Of Defragmentation Software ...

The following is a comparison of notable file system defragmentation software. In the maintenance of file systems, defragmentation is a process that reduces the degree of fragmentation. It does this by physically organizing the contents of the mass storage device used to store files into the smallest number of contiguo ...

Seek Time

Higher performance in hard disk drives comes from devices which have better performance characteristics. These performance characteristics can be grouped into two categories: access time and data transfer time (or rate).

Access time

The access time or response time of a rotating drive is a measure of the time it takes before the drive can actually transfer data. The factors that control this time on a rotating drive are mostly related to the mechanical nature of the rotating disks and moving heads. It is composed of a few independently measurable elements that are added together to get a single value when evaluating the performance of a storage device. The access time can vary significantly, so it is typically provided by manufacturers or measured in benchmarks as an average. The key components that are typically added together to obtain the access time are:

* Seek time
* Rotational latency
* Command processing time
* Settle time

Seek time

With rotating drives, the ...
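
The additive model of access time listed in this entry can be sketched as a short calculation. This is an illustration under stated assumptions rather than a manufacturer's formula: the function names are hypothetical, the sample figures (9.0 ms seek, 7200 rpm, 0.5 ms command processing, 0.1 ms settle) are made up for the example, and average rotational latency is taken as the time for half a revolution.

```python
def average_rotational_latency_ms(rpm):
    """Average rotational latency: on average the platter turns half a revolution."""
    ms_per_revolution = 60_000.0 / rpm
    return 0.5 * ms_per_revolution


def access_time_ms(seek_ms, rpm, command_ms=0.0, settle_ms=0.0):
    """Add the four components named in the entry to get one access-time figure."""
    return seek_ms + average_rotational_latency_ms(rpm) + command_ms + settle_ms


# Illustrative values only; real drives publish their own averages.
estimate = access_time_ms(seek_ms=9.0, rpm=7200, command_ms=0.5, settle_ms=0.1)
print(f"estimated average access time: {estimate:.2f} ms")
```

Because the components are simply summed, a reduction in any one of them (for example, fewer seeks on a less fragmented disk) lowers the total by the same amount.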

Filesystem

In computing, file system or filesystem (often abbreviated to fs) is a method and data structure that the operating system uses to control how data is stored and retrieved. Without a file system, data placed in a storage medium would be one large body of data with no way to tell where one piece of data stopped and the next began, or where any piece of data was located when it was time to retrieve it. By separating the data into pieces and giving each piece a name, the data are easily isolated and identified. Taking its name from the way a paper-based data management system is named, each group of data is called a "file". The structure and logic rules used to manage the groups of data and their names is called a "file system." There are many kinds of file systems, each with unique structure and logic, properties of speed, flexibility, security, size and more. Some file systems have been designed to be used for specific applications. For example, the ISO 9660 file system is designe ...

Filesystem Hierarchy Standard

The Filesystem Hierarchy Standard (FHS) is a reference describing the conventions used for the layout of a UNIX system. It has been made popular by its use in Linux distributions, but it is used by other UNIX variants as well. It is maintained by the Linux Foundation. The latest version is 3.0, released on 3 June 2015.

Directory structure

In the FHS, all files and directories appear under the root directory /, even if they are stored on different physical or virtual devices. Some of these directories only exist on a particular system if certain subsystems, such as the X Window System, are installed. Most of these directories exist in all Unix-like operating systems and are generally used in much the same way; however, the descriptions here are those used specifically for the FHS and are not considered authoritative for platforms other than Linux.

FHS compliance

Most Linux distributions follow the Filesystem Hierarchy Standard and declare it their own policy to maintain FHS ...

UNIX

Unix (trademarked as UNIX) is a family of multitasking, multiuser computer operating systems that derive from the original AT&T Unix, whose development started in 1969 at the Bell Labs research center by Ken Thompson, Dennis Ritchie, and others. Initially intended for use inside the Bell System, AT&T licensed Unix to outside parties in the late 1970s, leading to a variety of both academic and commercial Unix variants from vendors including University of California, Berkeley (BSD), Microsoft (Xenix), Sun Microsystems (SunOS/Solaris), HP/HPE (HP-UX), and IBM (AIX). In the early 1990s, AT&T sold its rights in Unix to Novell, which then sold the UNIX trademark to The Open Group, an industry consortium founded in 1996. The Open Group allows the use of the mark for certified operating systems that comply with the Single UNIX Specification (SUS). Unix systems are chara ...

Disk Partitioning

Disk partitioning or disk slicing is the creation of one or more regions on secondary storage, so that each region can be managed separately. These regions are called partitions. It is typically the first step of preparing a newly installed disk, before any file system is created. The disk stores the information about the partitions' locations and sizes in an area known as the partition table that the operating system reads before any other part of the disk. Each partition then appears to the operating system as a distinct "logical" disk that uses part of the actual disk. System administrators use a program called a partition editor to create, resize, delete, and manipulate the partitions. Partitioning allows different filesystems to be installed for different kinds of files. Separating user data from system data can prevent the system partition from becoming full and rendering the system unusable. Partitioning can also make backing up easier. A disadvantage is that ...

Latency (engineering)

Latency, from a general point of view, is a time delay between the cause and the effect of some physical change in the system being observed. Lag, as it is known in gaming circles, refers to the latency between the input to a simulation and the visual or auditory response, often occurring because of network delay in online games. Latency is physically a consequence of the limited velocity at which any physical interaction can propagate. The magnitude of this velocity is always less than or equal to the speed of light. Therefore, every physical system with any physical separation (distance) between cause and effect will experience some sort of latency, regardless of the nature of the stimulation to which it has been exposed. The precise definition of latency depends on the system being observed or the nature of the simulation. In communications, the lower limit of latency is determined by the medium being used to transfer information. In reliable two-way communication syst ...

Native Command Queuing

In computing, Native Command Queuing (NCQ) is an extension of the Serial ATA protocol allowing hard disk drives to internally optimize the order in which received read and write commands are executed. This can reduce the amount of unnecessary drive head movement, resulting in increased performance (and slightly decreased wear of the drive) for workloads where multiple simultaneous read/write requests are outstanding, most often occurring in server-type applications.

History

Native Command Queuing was preceded by Parallel ATA's version of Tagged Command Queuing (TCQ). ATA's attempt at integrating TCQ was constrained by the requirement that ATA host bus adapters use ISA bus device protocols to interact with the operating system. The resulting high CPU overhead and negligible performance gain contributed to a lack of market acceptance for TCQ. NCQ differs from TCQ in that, with NCQ, each command is of equal importance, but NCQ's host bus adapter also programs its own first party ...
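
The benefit of letting a drive reorder outstanding commands can be illustrated with a toy model. The sketch below is not the NCQ protocol or any drive's firmware algorithm: it assumes head movement is proportional to the difference between block addresses, ignores rotational position, and uses a simple nearest-request-first policy. The function names and the sample queue are hypothetical.

```python
def total_travel(start, order):
    """Total head movement when requests are served in the given order."""
    position, travel = start, 0
    for address in order:
        travel += abs(address - position)
        position = address
    return travel


def reorder_nearest_first(start, queue):
    """Greedy reordering: always serve the pending request closest to the head."""
    pending = list(queue)
    position, order = start, []
    while pending:
        nearest = min(pending, key=lambda address: abs(address - position))
        pending.remove(nearest)
        order.append(nearest)
        position = nearest
    return order


# Illustrative queue of outstanding requests, in arrival order.
queue = [900, 100, 850, 120, 500]
reordered = reorder_nearest_first(0, queue)

print("arrival order travel:  ", total_travel(0, queue))
print("reordered:             ", reordered)
print("reordered order travel:", total_travel(0, reordered))
```

With a single outstanding request there is nothing to reorder, which is consistent with the entry's remark that the gains appear when multiple simultaneous requests are queued.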