Data Warehouse Automation

Data warehouse automation (DWA) refers to the process of accelerating and automating data warehouse development cycles while assuring quality and consistency. DWA is generally described as automating the entire lifecycle of a data warehouse, from source system analysis to testing and documentation. It helps improve productivity, reduce cost, and improve overall quality. In general, data warehouse automation focuses on automating each step in the lifecycle of a data warehouse, thereby reducing the effort required to manage it. Data warehouse automation is based on design patterns: it comprises a central repository of design patterns that encapsulate architectural standards as well as best practices for data design, data management, data integration, and data usage. In November 2015, an analyst firm published a guide, ''Which Data Warehouse Automation Tool is Right for You?'', covering four of the leading products in the DWA space. ...
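
To make the idea of a pattern repository concrete, here is a minimal Python sketch under a much-simplified, assumed metadata model: each pattern is a function that turns table metadata into SQL DDL, and "deploying" means running the generated DDL (SQLite stands in for the target warehouse). The names `SourceTable`, `PATTERN_REPOSITORY`, `generate`, and `deploy`, and the `stg_`/`dim_` prefixes, are hypothetical and do not reflect any particular DWA product.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class SourceTable:
    """Hypothetical metadata describing one source table."""
    name: str
    columns: dict  # column name -> SQL type, e.g. {"customer_id": "INTEGER"}
    business_key: str


def _column_list(t: SourceTable) -> str:
    return ",\n  ".join(f"{col} {sql_type}" for col, sql_type in t.columns.items())


def staging_pattern(t: SourceTable) -> str:
    # Staging layer: a raw copy of the source columns.
    return f"CREATE TABLE stg_{t.name} (\n  {_column_list(t)}\n)"


def dimension_pattern(t: SourceTable) -> str:
    # Dimension: surrogate key, source columns, and a uniqueness rule on the business key.
    return (
        f"CREATE TABLE dim_{t.name} (\n"
        f"  {t.name}_key INTEGER PRIMARY KEY,\n"
        f"  {_column_list(t)},\n"
        f"  UNIQUE ({t.business_key})\n)"
    )


# The "repository": every table is generated from the same set of patterns.
PATTERN_REPOSITORY = {"staging": staging_pattern, "dimension": dimension_pattern}


def generate(t: SourceTable) -> dict:
    """Apply every pattern in the repository to one table's metadata."""
    return {kind: pattern(t) for kind, pattern in PATTERN_REPOSITORY.items()}


def deploy(conn: sqlite3.Connection, t: SourceTable) -> None:
    """Run the generated DDL against a target database."""
    for ddl in generate(t).values():
        conn.execute(ddl)
```

For example, `deploy(sqlite3.connect(":memory:"), SourceTable("customer", {"customer_id": "INTEGER", "name": "TEXT"}, "customer_id"))` creates both `stg_customer` and `dim_customer`. Because every table is built from the same patterns, a change to a standard (such as a naming convention) is made once in the repository rather than in each table definition.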

Data Warehouse

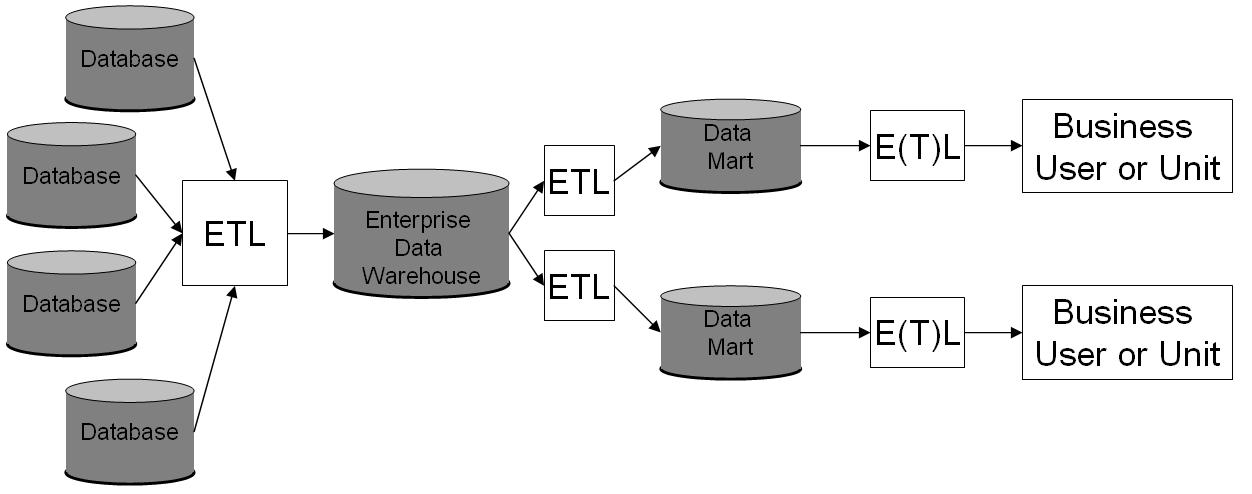

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in a single place, where it is used to create analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from operational systems (such as marketing or sales). It may pass through an operational data store and may require additional data-cleansing operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system. The typical ETL-based data warehouse uses staging, data integration, and access lay ...
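
The ELT approach can be illustrated with a short Python sketch that uses the standard-library `sqlite3` module as a stand-in warehouse: raw records are loaded unchanged into a staging table, and the transformation then runs as SQL inside the database to produce a reporting (access-layer) table. The table names, the sample rows, and the `elt` function are illustrative assumptions.

```python
import sqlite3

# Hypothetical raw records as they arrive from an operational system (e.g. sales).
RAW_SALES = [
    ("2024-01-05", "north", "120.50"),
    ("2024-01-05", "south", "80.00"),
    ("2024-01-06", "north", " 99.50 "),
]


def elt(conn: sqlite3.Connection, rows) -> None:
    # Extract + Load: land the data unchanged (all text) in a staging table.
    conn.execute("CREATE TABLE staging_sales (sale_date TEXT, region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO staging_sales VALUES (?, ?, ?)", rows)
    # Transform: cleanse and aggregate inside the database with SQL,
    # producing a table intended for reporting.
    conn.execute(
        """
        CREATE TABLE sales_by_region AS
        SELECT region, SUM(CAST(TRIM(amount) AS REAL)) AS total_amount
        FROM staging_sales
        GROUP BY region
        """
    )
```

In an ETL design, the trimming, type conversion, and aggregation would instead happen before the load, outside the database.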

Extract, Transform, Load

In computing, extract, transform, load (ETL) is a three-phase process where data is extracted, transformed (cleaned, sanitized, scrubbed), and loaded into an output data container. The data can be collated from one or more sources and can be output to one or more destinations. ETL processing is typically executed using software applications, but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules, either as single jobs or aggregated into a batch of jobs. A properly designed ETL system extracts data from source systems, enforces data type and data validity standards, and ensures that the data conforms structurally to the requirements of the output. Some ETL systems can also deliver data in a presentation-ready format so that application developers can build applications and end users can make decisions. The ETL process became a popular concept in the 1970s and is often used in d ...
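
The three phases can be sketched in Python with only the standard library. This is an illustrative example under assumed inputs: the CSV sample, the `orders` table, and the validity rules (numeric types, non-negative amounts, unique `order_id`) are hypothetical, and rejected rows are simply collected in a list rather than routed to an error table.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this could come from a file, API, or database.
SOURCE_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,not-a-number
3,carol,5.00
3,carol,5.00
"""


def extract(text: str) -> list:
    """Extract: parse the raw CSV into one dict per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: enforce types and simple validity rules; return (clean, rejected)."""
    clean, rejected, seen = [], [], set()
    for row in rows:
        try:
            order_id = int(row["order_id"])
            amount = float(row["amount"])
        except ValueError:
            rejected.append(row)  # fails data-type rules
            continue
        if amount < 0 or order_id in seen:
            rejected.append(row)  # fails validity rules (negative amount or duplicate key)
            continue
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip().title(), round(amount, 2)))
    return clean, rejected


def load(conn: sqlite3.Connection, rows) -> None:
    """Load: write the cleaned rows into the output table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
```

Calling `load(conn, transform(extract(SOURCE_CSV))[0])` loads the two valid orders; the unparseable amount and the duplicate key end up in the rejected list.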

WhereScape

WhereScape is a privately held international data warehouse automation and big data software company. WhereScape was acquired in 2019 by Idera Software. The company was formerly named Profit Management Systems, but was officially renamed WhereScape in 2001 when it expanded its business operations into the USA. WhereScape was founded as a data warehouse consulting company in Auckland, New Zealand in 2002 by co-founders Michael Whitehead (President) and Wayne Richmond. WhereScape operates out of regional headquarters in Houston, Texas, USA; Reading, United Kingdom; and Singapore. As of 2015, it had over 720 customers in more than 15 countries and revenue of around NZ$20 million. Sales channels vary by country and can be either direct sales or local partners. WhereScape also has partnerships with third-party companies that embed WhereScape software in their solutions. Idera, Inc. is the parent company of a por ...

Data Integration

Data integration involves combining data residing in different sources and providing users with a unified view of them. This process becomes significant in a variety of situations, in both commercial domains (such as when two similar companies need to merge their databases) and scientific ones (combining research results from different bioinformatics repositories, for example). Data integration appears with increasing frequency as the volume of data (that is, big data) and the need to share existing data explode. It has become the focus of extensive theoretical work, and numerous open problems remain unsolved. Data integration encourages collaboration between internal as well as external users. The data being integrated must be received from heterogeneous database systems and transformed into a single coherent data store that provides synchronous data across a network of files for clients. A common use of data integration is in data mining when analyzing and extracting informat ...
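
A minimal Python sketch of providing a unified view: two hypothetical sources with different schemas (a CRM feed of dicts and a billing feed of tuples) are mapped onto one assumed global schema, `Customer`, and merged on a normalized key. Real integration systems treat schema mapping, entity resolution, and conflicting values far more carefully; the merge rule used here (the first non-empty value wins) is just one simple choice.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Customer:
    """Assumed unified (global) schema shared by all sources."""
    customer_id: str
    name: str
    email: Optional[str]


def from_crm(rec: dict) -> Customer:
    # Hypothetical CRM source: {"id": ..., "full_name": ..., "mail": ...}
    return Customer(rec["id"].upper(), rec["full_name"], rec["mail"].lower())


def from_billing(rec: tuple) -> Customer:
    # Hypothetical billing source: (customer_id, "Last, First"), no email column.
    cust_id, name = rec
    last, first = [part.strip() for part in name.split(",", 1)]
    return Customer(cust_id.upper(), f"{first} {last}", None)


def integrate(crm_rows, billing_rows) -> dict:
    """Map both sources onto Customer and merge records that share a key."""
    unified = {}
    mapped = [from_crm(r) for r in crm_rows] + [from_billing(r) for r in billing_rows]
    for c in mapped:
        prev = unified.get(c.customer_id)
        if prev is None:
            unified[c.customer_id] = c
        else:
            # Keep values already seen; fill gaps from the later source.
            unified[c.customer_id] = Customer(
                c.customer_id, prev.name or c.name, prev.email or c.email
            )
    return unified
```

With a CRM record for `"c1"` and billing records for `"C1"` and `"c2"`, `integrate` returns two customers: `C1` combines both sources, and `C2` comes from billing only, with no email.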

Data Warehouse Appliance

In computing, the term data warehouse appliance (DWA) was coined by Foster Hinshaw for a computer architecture for data warehouses (DW) that is specifically marketed for big data analysis and discovery, is simple to use (not a pre-configuration), and offers high performance for the workload. A DWA includes an integrated set of servers, storage, operating systems, and databases. In marketing, the term evolved to include pre-installed and pre-optimized hardware and software, as well as similar software-only systems promoted as easy to install on specific recommended hardware configurations or preconfigured as a complete system. These are marketing uses of the term and do not reflect the technical definition. A DWA is designed specifically for high-performance big data analytics and is delivered as an easy-to-use packaged system. DW appliances are marketed for data volumes in the terabyte to petabyte range. The data warehouse appliance (DWA) has several characteristics which d ...

Data Mart

A data mart is a structure/access pattern specific to ''data warehouse'' environments, used to retrieve client-facing data. The data mart is a subset of the data warehouse and is usually oriented to a specific business line or team. Whereas data warehouses have enterprise-wide depth, the information in data marts pertains to a single department. In some deployments, each department or business unit is considered the ''owner'' of its data mart, including all the ''hardware'', ''software'' and ''data''. This enables each department to isolate the use, manipulation and development of its data. In other deployments where conformed dimensions are used, this business-unit ownership does not hold for shared dimensions such as customer and product. Warehouses and data marts are built because the information in the database is not organized in a way that makes it readily accessible; such an organization requires queries that are too complicated, difficult to access, or resource-intensive. ...
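
One common way to expose a department-specific subset of a warehouse is a database view. The sketch below uses Python's `sqlite3` module and assumes a tiny star schema (`fact_sales`, `dim_product`) plus a hypothetical `create_department_mart` helper; a physical data mart might instead copy the data into separate tables or a separate database.

```python
import sqlite3


def build_warehouse(conn: sqlite3.Connection) -> None:
    """Create a toy enterprise-wide star schema with sample rows (illustrative data)."""
    conn.executescript(
        """
        CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, department TEXT);
        CREATE TABLE fact_sales (product_key INTEGER REFERENCES dim_product,
                                 quantity INTEGER, revenue REAL);
        INSERT INTO dim_product VALUES (1, 'Laptop', 'electronics'), (2, 'Desk', 'furniture');
        INSERT INTO fact_sales VALUES (1, 2, 2000.0), (2, 1, 300.0), (1, 1, 1000.0);
        """
    )


def create_department_mart(conn: sqlite3.Connection, department: str) -> str:
    """Create a view exposing only one department's slice of the warehouse."""
    if not department.isidentifier():
        raise ValueError("department must be a simple identifier")
    view = f"mart_{department}_sales"
    # SQLite does not accept bound parameters inside CREATE VIEW, so the
    # department is validated above and embedded as a literal.
    conn.execute(
        f"""
        CREATE VIEW {view} AS
        SELECT p.name AS product, SUM(f.quantity) AS units, SUM(f.revenue) AS revenue
        FROM fact_sales f JOIN dim_product p ON p.product_key = f.product_key
        WHERE p.department = '{department}'
        GROUP BY p.name
        """
    )
    return view
```

Querying the view returned by `create_department_mart(conn, "electronics")` shows only the laptop sales; the furniture rows stay in the warehouse but are outside that department's mart.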

Deployment Management

Deployment is the realisation of an application, or the execution of a plan, idea, model, design, specification, standard, algorithm, or policy. In computer science, a deployment is a realisation of a technical specification or algorithm as a program, software component, or other computer system through computer programming and deployment. Many implementations may exist for a given specification or standard. For example, web browsers contain implementations of World Wide Web Consortium-recommended specifications, and software development tools contain implementations of programming languages. A special case occurs in object-oriented programming, when a concrete class implements an interface; in this case the concrete class is an ''implementation'' of the interface, and it includes methods that are ''implementations'' of the methods specified by the interface. In the IT industry, deployment refer ...
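
The object-oriented case can be sketched with Python's standard `abc` module: an abstract base class plays the role of the interface, and a concrete class supplies the methods. `Storage` and `InMemoryStorage` are illustrative names only.

```python
from abc import ABC, abstractmethod


class Storage(ABC):
    """Interface (specification): declares what any storage must provide."""

    @abstractmethod
    def put(self, key: str, value: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStorage(Storage):
    """One concrete class that implements the Storage interface."""

    def __init__(self) -> None:
        self._data = {}

    def put(self, key: str, value: bytes) -> None:
        self._data[key] = value

    def get(self, key: str) -> bytes:
        return self._data[key]
```

Instantiating `Storage` directly raises `TypeError` because its abstract methods have no implementation. Other concrete classes (for example, one backed by files) could implement the same interface, which mirrors the point that many implementations may exist for one specification.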

Metadata Management

Metadata management involves managing metadata about other data, where this "other data" is generally referred to as content data. The term is used most often in relation to digital media, but older forms of metadata include catalogs, dictionaries, and taxonomies. For example, the Dewey Decimal Classification is a metadata management system developed in 1876 for libraries. Metadata management is the end-to-end process and governance framework for creating, controlling, enhancing, attributing, defining, and managing a metadata schema, model, or other structured aggregation system, either independently or within a repository, together with the associated supporting processes (often to enable the management of content). For web-based systems, URLs, images, video, etc. may be referenced from a triples table of object, attribute, and value. Within specific knowledge domains, the boundaries of the metadata for each must be managed, since a general ontolo ...
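
A triples table of object, attribute, and value can be modelled very simply. The following Python sketch is a hypothetical in-memory version (`TripleStore` is not a real library class); a persistent, indexed store would be needed in practice.

```python
from collections import defaultdict


class TripleStore:
    """Minimal in-memory table of (object, attribute, value) metadata triples."""

    def __init__(self) -> None:
        self._triples = set()

    def add(self, obj: str, attr: str, value: str) -> None:
        self._triples.add((obj, attr, value))

    def describe(self, obj: str) -> dict:
        """All attributes recorded for one object, e.g. a URL."""
        out = defaultdict(list)
        for o, a, v in sorted(self._triples):
            if o == obj:
                out[a].append(v)
        return dict(out)

    def find(self, attr: str, value: str) -> list:
        """All objects that have the given attribute value."""
        return sorted(o for o, a, v in self._triples if a == attr and v == value)
```

For example, after adding `("https://example.org/img/1.png", "type", "image")`, `find("type", "image")` returns that URL, and `describe` on the URL lists every attribute stored for it.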

Test Automation

In software testing, test automation is the use of software separate from the software being tested to control the execution of tests and the comparison of actual outcomes with predicted outcomes. Test automation can automate some repetitive but necessary tasks in a formalized testing process already in place, or perform additional testing that would be difficult to do manually. Test automation is critical for continuous delivery and continuous testing. There are many approaches to test automation; the following general approaches are widely used:

* Graphical user interface testing. A testing framework that generates user interface events such as keystrokes and mouse clicks, and observes the resulting changes in the user interface, to validate that the observable behavior of the program is correct.
* API driven testing. A testing framework that uses a programming interface to the application to validate the behavior under test. Typically API driven testing bypasses ap ...
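
An API-driven test can be sketched with Python's built-in `unittest` framework: the test calls the code directly through its programming interface and compares actual outcomes with predicted ones. `apply_discount` is a hypothetical function standing in for the application under test.

```python
import io
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical application function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """API-driven tests: call the function directly, compare actual vs. expected."""

    def test_expected_outcomes(self):
        cases = [(100.0, 10, 90.0), (19.99, 0, 19.99), (50.0, 100, 0.0)]
        for price, pct, expected in cases:
            with self.subTest(price=price, pct=pct):
                self.assertEqual(apply_discount(price, pct), expected)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)


def run_suite() -> unittest.TestResult:
    """Run the tests programmatically, e.g. from a CI job, capturing the report."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
```

Running the suite from code rather than by hand is what lets it execute on every change in a continuous-delivery pipeline; `run_suite().wasSuccessful()` gives the pass/fail signal.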

Data Exploration

Data exploration is an approach similar to initial data analysis, whereby a data analyst uses visual exploration, rather than traditional data management systems, to understand what is in a dataset and the characteristics of the data (FOSTER Open Science; Overview of Data Exploration Techniques: Stratos Idreos, Olga Papaemmanouil, Surajit Chaudhuri). These characteristics can include the size or amount of data, the completeness and correctness of the data, and possible relationships among data elements or files/tables in the data. Data exploration is typically conducted using a combination of automated and manual activities (Stanford.edu). ...
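
Part of the automated side of exploration can be sketched as a simple column profile covering size, completeness, and correctness. The `profile` function and the validator rule in the example below are illustrative assumptions; dedicated profiling tools report many more statistics.

```python
from typing import Callable, Optional


def profile(rows: list, validators: Optional[dict] = None) -> dict:
    """Summarize size, completeness, distinct values, and rule violations per column.

    validators maps a column name to a Callable[[value], bool] that returns
    True when a (non-missing) value is considered correct.
    """
    validators = validators or {}
    columns = sorted({key for row in rows for key in row})
    report = {"row_count": len(rows), "columns": {}}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in (None, "")]  # treat None/"" as missing
        check: Optional[Callable] = validators.get(col)
        invalid = sum(1 for v in present if check is not None and not check(v))
        report["columns"][col] = {
            "completeness": len(present) / len(rows) if rows else 0.0,
            "distinct": len(set(present)),
            "invalid": invalid,
        }
    return report
```

For instance, profiling rows whose `age` values are `34`, `None`, `-5`, and `40` with the rule `lambda v: 0 <= v <= 130` reports 75% completeness and one invalid value; a `distinct` count lower than the row count on an ID column hints at duplicate keys worth a closer manual look.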

Automation

Automation describes a wide range of technologies that reduce human intervention in processes, namely by predetermining decision criteria, subprocess relationships, and related actions, as well as embodying those predeterminations in machines. Automation has been achieved by various means, including mechanical, hydraulic, pneumatic, electrical, and electronic devices, and computers, usually in combination. Complicated systems, such as modern factories, airplanes, and ships, typically use combinations of all of these techniques. The benefits of automation include labor savings, reduced waste, savings in electricity and material costs, and improvements to quality, accuracy, and precision. Automation includes the use of various equipment and control systems such as machinery, processes in factories, boilers, and heat-treating ovens, switching on telephone networks, steering, and stabilization of ships, aircraft, and other applications and vehicles with reduced hu ...
