|

Damerau–Levenshtein Distance

In information theory and computer science, the Damerau–Levenshtein distance (named after Frederick J. Damerau and Vladimir I. Levenshtein.) is a string metric for measuring the edit distance between two sequences. Informally, the Damerau–Levenshtein distance between two words is the minimum number of operations (consisting of insertions, deletions or substitutions of a single character, or transposition of two adjacent characters) required to change one word into the other. The Damerau–Levenshtein distance differs from the classical Levenshtein distance by including transpositions among its allowable operations in addition to the three classical single-character edit operations (insertions, deletions and substitutions). In his seminal paper, Damerau stated that in an investigation of spelling errors for an information-retrieval system, more than 80% were a result of a single error of one of the four types. Damerau's paper considered only misspellings that could be correct ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Information Theory

Information theory is the scientific study of the quantification (science), quantification, computer data storage, storage, and telecommunication, communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley, in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering (field), information engineering, and electrical engineering. A key measure in information theory is information entropy, entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a dice, die (with six equally likely outcomes). Some other important measures in information theory are mutual informat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Dynamic Programming

Dynamic programming is both a mathematical optimization method and a computer programming method. The method was developed by Richard Bellman in the 1950s and has found applications in numerous fields, from aerospace engineering to economics. In both contexts it refers to simplifying a complicated problem by breaking it down into simpler sub-problems in a recursive manner. While some decision problems cannot be taken apart this way, decisions that span several points in time do often break apart recursively. Likewise, in computer science, if a problem can be solved optimally by breaking it into sub-problems and then recursively finding the optimal solutions to the sub-problems, then it is said to have ''optimal substructure''. If sub-problems can be nested recursively inside larger problems, so that dynamic programming methods are applicable, then there is a relation between the value of the larger problem and the values of the sub-problems.Cormen, T. H.; Leiserson, C. E.; Rives ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

String Metrics

In mathematics and computer science, a string metric (also known as a string similarity metric or string distance function) is a metric that measures distance ("inverse similarity") between two text strings for approximate string matching or comparison and in fuzzy string searching. A requirement for a string ''metric'' (e.g. in contrast to string matching) is fulfillment of the triangle inequality. For example, the strings "Sam" and "Samuel" can be considered to be close. A string metric provides a number indicating an algorithm-specific indication of distance. The most widely known string metric is a rudimentary one called the Levenshtein distance (also known as edit distance). It operates between two input strings, returning a number equivalent to the number of substitutions and deletions needed in order to transform one input string into another. Simplistic string metrics such as Levenshtein distance have expanded to include phonetic, token, grammatical and character-based m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Typosquatting

Typosquatting, also called URL hijacking, a sting site, or a fake URL, is a form of cybersquatting, and possibly brandjacking which relies on mistakes such as typos made by Internet users when inputting a website address into a web browser. Should a user accidentally enter an incorrect website address, they may be led to any URL (including an alternative website owned by a cybersquatter). The typosquatter's URL will usually be one of five kinds, all ''similar to'' the victim site address: *A common misspelling, or foreign language spelling, of the intended site *A misspelling based on a typographical error *A plural of a singular domain name *A different top-level domain: (i.e. .com instead of .org) *An abuse of the Country Code Top-Level Domain (ccTLD) (.cm, .co, or .om instead of .com) Similar abuses: *Combosquatting - no misspelling, but appending an arbitrary word that appears legitimate, but that anyone could register. *Doppelganger domain - omitting a period or inserting ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ispell

Ispell is a spelling checker for Unix that supports most Western languages. It offers several interfaces, including a programmatic interface for use by editors such as Emacs. Unlike GNU Aspell, ispell will only suggest corrections that are based on a Damerau–Levenshtein distance of 1; it will not attempt to guess more distant corrections based on English pronunciation rules. Ispell has a very long history that can be traced back to a program that was originally written in 1971 in PDP-10 Assembly language by R. E. Gorin, and later ported to the C programming language and expanded by many others. It is currently maintained by Geoff Kuenning. The generalized affix description system introduced by ispell has since been imitated by other spelling checkers such as MySpell. Like most computerized spelling checkers, ispell works by reading an input file word by word, stopping when a word is not found in its dictionary. Ispell then attempts to generate a list of possible corrections a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Smith–Waterman Algorithm

The Smith–Waterman algorithm performs local sequence alignment; that is, for determining similar regions between two strings of nucleic acid sequences or protein sequences. Instead of looking at the entire sequence, the Smith–Waterman algorithm compares segments of all possible lengths and optimizes the similarity measure. The algorithm was first proposed by Temple F. Smith and Michael S. Waterman in 1981. Like the Needleman–Wunsch algorithm, of which it is a variation, Smith–Waterman is a dynamic programming algorithm. As such, it has the desirable property that it is guaranteed to find the optimal local alignment with respect to the scoring system being used (which includes the substitution matrix and the gap-scoring scheme). The main difference to the Needleman–Wunsch algorithm is that negative scoring matrix cells are set to zero, which renders the (thus positively scoring) local alignments visible. Traceback procedure starts at the highest scoring matrix cell and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Needleman–Wunsch Algorithm

The Needleman–Wunsch algorithm is an algorithm used in bioinformatics to align protein or nucleotide sequences. It was one of the first applications of dynamic programming to compare biological sequences. The algorithm was developed by Saul B. Needleman and Christian D. Wunsch and published in 1970. The algorithm essentially divides a large problem (e.g. the full sequence) into a series of smaller problems, and it uses the solutions to the smaller problems to find an optimal solution to the larger problem. It is also sometimes referred to as the optimal matching algorithm and the global alignment technique. The Needleman–Wunsch algorithm is still widely used for optimal global alignment, particularly when the quality of the global alignment is of the utmost importance. The algorithm assigns a score to every possible alignment, and the purpose of the algorithm is to find all possible alignments having the highest score. Introduction This algorithm can be used for any two st ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Metric Tree

A metric tree is any tree data structure specialized to index data in metric spaces. Metric trees exploit properties of metric spaces such as the triangle inequality to make accesses to the data more efficient. Examples include the M-tree, vp-trees, cover trees, MVP trees, and BK-trees. Multidimensional search Most algorithms and data structures for searching a dataset are based on the classical binary search algorithm, and generalizations such as the k-d tree or range tree work by interleaving the binary search algorithm over the separate coordinates and treating each spatial coordinate as an independent search constraint. These data structures are well-suited for range query problems asking for every point (x,y) that satisfies \mbox_x \leq x \leq \mbox_x and \mbox_y \leq y \leq \mbox_y. A limitation of these multidimensional search structures is that they are only defined for searching over objects that can be treated as vectors. They aren't applicable for the more ge ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation. History Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bitap Algorithm

The bitap algorithm (also known as the shift-or, shift-and or Baeza-Yates–Gonnet algorithm) is an approximate string matching algorithm. The algorithm tells whether a given text contains a substring which is "approximately equal" to a given pattern, where approximate equality is defined in terms of Levenshtein distance if the substring and pattern are within a given distance ''k'' of each other, then the algorithm considers them equal. The algorithm begins by precomputing a set of bitmasks containing one bit for each element of the pattern. Then it is able to do most of the work with bitwise operations, which are extremely fast. The bitap algorithm is perhaps best known as one of the underlying algorithms of the Unix utility agrep, written by Udi Manber, Sun Wu, and Burra Gopal. Manber and Wu's original paper gives extensions of the algorithm to deal with fuzzy matching of general regular expressions. Due to the data structures required by the algorithm, it performs best on pa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pseudocode

In computer science, pseudocode is a plain language description of the steps in an algorithm or another system. Pseudocode often uses structural conventions of a normal programming language, but is intended for human reading rather than machine reading. It typically omits details that are essential for machine understanding of the algorithm, such as variable declarations and language-specific code. The programming language is augmented with natural language description details, where convenient, or with compact mathematical notation. The purpose of using pseudocode is that it is easier for people to understand than conventional programming language code, and that it is an efficient and environment-independent description of the key principles of an algorithm. It is commonly used in textbooks and scientific publications to document algorithms and in planning of software and other algorithms. No broad standard for pseudocode syntax exists, as a program in pseudocode is not an executa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Wagner–Fischer Algorithm

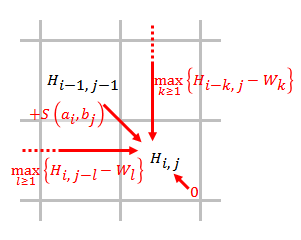

In computer science, the Wagner–Fischer algorithm is a dynamic programming algorithm that computes the edit distance between two strings of characters. History The Wagner–Fischer algorithm has a history of multiple invention. Navarro lists the following inventors of it, with date of publication, and acknowledges that the list is incomplete: * Vintsyuk, 1968 * Needleman and Wunsch, 1970 * Sankoff, 1972 * Sellers, 1974 * Wagner and Fischer, 1974 * Lowrance and Wagner, 1975 Calculating distance The Wagner–Fischer algorithm computes edit distance based on the observation that if we reserve a matrix to hold the edit distances between all prefixes of the first string and all prefixes of the second, then we can compute the values in the matrix by flood filling the matrix, and thus find the distance between the two full strings as the last value computed. A straightforward implementation, as pseudocode for a function ''Distance'' that takes two strings, ''s'' of length ''m'', and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |