
Controlling For A Variable

In causal models, controlling for a variable means binning data according to measured values of the variable. This is typically done so that the variable can no longer act as a confounder in, for example, an observational study or experiment. When estimating the effect of explanatory variables on an outcome by regression, controlled-for variables are included as inputs in order to separate their effects from those of the explanatory variables. A limitation of controlling for variables is that a causal model is needed to identify important confounders (the "backdoor criterion" is used for this identification). Without one, a possible confounder might go unnoticed. A related problem is that controlling for a variable that is not a real confounder may turn other variables (possibly not taken into account) into confounders when they were not confounders before. In other cases, controlling for a non-confounding variable may cause underestimation of the ...
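
The binning idea described above can be illustrated with a minimal sketch. The records below are hypothetical toy data (not from any study), built so that the outcome is `y = 2*x + 10*z`; the function names are likewise illustrative assumptions. The confounder `z` raises both the chance of treatment and the outcome, so the unadjusted comparison overstates the effect of `x`, while comparing within bins of `z` recovers it.

```python
from collections import defaultdict

# Hypothetical toy records: (confounder z, treatment x, outcome y).
# The outcome is constructed as y = 2*x + 10*z, so the true effect of x is 2.
records = (
    [(0, 0, 0.0)] * 8 + [(0, 1, 2.0)] * 2      # z = 0: mostly untreated
    + [(1, 0, 10.0)] * 2 + [(1, 1, 12.0)] * 8  # z = 1: mostly treated
)


def mean(values):
    return sum(values) / len(values)


def naive_effect(rows):
    """Difference in mean outcome between treated and untreated rows, ignoring z."""
    treated = [y for _, x, y in rows if x == 1]
    untreated = [y for _, x, y in rows if x == 0]
    return mean(treated) - mean(untreated)


def stratified_effect(rows):
    """Bin rows by z, compute the treated-minus-untreated difference in each bin,
    then average those differences weighted by bin size."""
    bins = defaultdict(list)
    for row in rows:
        bins[row[0]].append(row)
    total = len(rows)
    return sum(len(b) / total * naive_effect(b) for b in bins.values())


naive = naive_effect(records)          # confounded comparison
adjusted = stratified_effect(records)  # comparison after controlling for z
print(naive, adjusted)
```

On this constructed data the naive difference is 8 while the stratified difference is 2, the effect that was built in. The sketch assumes every bin contains both treated and untreated rows; real data would need a check for empty cells.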

Causal Model

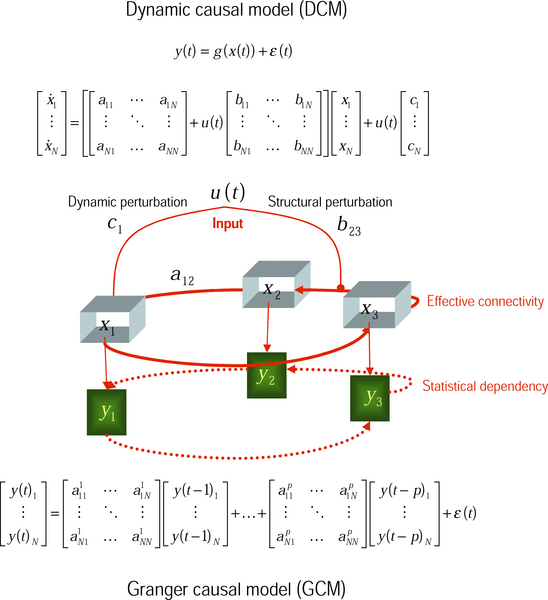

In the philosophy of science, a causal model (or structural causal model) is a conceptual model that describes the causal mechanisms of a system. Causal models can improve study designs by providing clear rules for deciding which independent variables need to be included or controlled for. They can allow some questions to be answered from existing observational data without the need for an interventional study such as a randomized controlled trial. Some interventional studies are inappropriate for ethical or practical reasons, meaning that without a causal model, some hypotheses cannot be tested. Causal models can help with the question of external validity (whether results from one study apply to unstudied populations). Causal models can allow data from multiple studies to be merged (in certain circumstances) to answer questions that cannot be answered by any individual data set. Causal models have found applications in signal processing, epidemiology and machine learning. ...

Independent Variable

Dependent and independent variables are variables in mathematical modeling, statistical modeling and experimental sciences. Dependent variables receive this name because, in an experiment, their values are studied under the supposition or demand that they depend, by some law or rule (e.g., by a mathematical function), on the values of other variables. Independent variables, in turn, are not seen as depending on any other variable in the scope of the experiment in question. In this sense, some common independent variables are time, space, density, mass, fluid flow rate, and previous values of some observed value of interest (e.g. human population size) to predict future values (the dependent variable). Of the two, it is always the dependent variable whose variation is being studied, by altering inputs, also known as regressors in a statistical context. In an experiment, any variable that can be attributed a value without attributing a value to any other variable is called an in ...

Age Adjustment

In epidemiology and demography, age adjustment, also called age standardization, is a technique used to allow statistical populations to be compared when the age profiles of the populations are quite different. For example, in 2004/5, two Australian health surveys investigated rates of long-term circulatory system health problems (e.g. heart disease) in the general Australian population, and specifically in the Indigenous Australian population. In each age category over age 24, Indigenous Australians had markedly higher rates of circulatory disease than the general population: 5% vs 2% in age group 25–34, 12% vs 4% in age group 35–44, 22% vs 14% in age group 45–54, and 42% vs 33% in age group 55+. However, overall, these surveys estimated that 12% of all Indigenous Australians had long-term circulatory problems compared to 18% of the overall Australian population. To understand this "apparent contradiction", we note that this only includes age groups over 24 and igno ...
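
One way to see how such a reversal can arise is direct standardization, sketched below. The age-specific rates are the ones quoted above; the age distributions (`indigenous_weights`, `general_weights`) are purely hypothetical assumptions chosen for illustration, not survey figures, and the sketch covers only the four age groups listed. Its crude rates therefore do not reproduce the 12% and 18% totals.

```python
# Age groups: 25-34, 35-44, 45-54, 55+ (rates quoted in the text).
indigenous_rates = [0.05, 0.12, 0.22, 0.42]
general_rates = [0.02, 0.04, 0.14, 0.33]

# HYPOTHETICAL age distributions (each sums to 1): a younger Indigenous
# population and an older general population. These are not survey data.
indigenous_weights = [0.40, 0.30, 0.20, 0.10]
general_weights = [0.20, 0.20, 0.20, 0.40]


def weighted_rate(rates, weights):
    """Overall rate for a population with the given age-specific rates and age mix."""
    return sum(r * w for r, w in zip(rates, weights))


# Crude rates: each population weighted by its own age distribution.
crude_indigenous = weighted_rate(indigenous_rates, indigenous_weights)
crude_general = weighted_rate(general_rates, general_weights)

# Directly standardized rate: Indigenous age-specific rates applied to the
# general population's age distribution, so both use the same age mix.
adjusted_indigenous = weighted_rate(indigenous_rates, general_weights)

print(crude_indigenous, crude_general, adjusted_indigenous)
```

With these assumed weights the crude Indigenous rate falls below the crude general rate even though every age-specific rate is higher, and the ordering reverses once both populations are given the same age mix.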

Mixed Model

A mixed model, mixed-effects model or mixed error-component model is a statistical model containing both fixed effects and random effects. These models are useful in a wide variety of disciplines in the physical, biological and social sciences. They are particularly useful in settings where repeated measurements are made on the same statistical units (longitudinal study), or where measurements are made on clusters of related statistical units. Because of their advantage in dealing with missing values, mixed effects models are often preferred over more traditional approaches such as repeated measures analysis of variance. This page will discuss mainly linear mixed-effects models (LMEM) rather than generalized linear mixed models or nonlinear mixed-effects models.

History and current status: Ronald Fisher introduced random effects models to study the correlations of trait values between relatives. In the 1950s, Charles Roy Henderson provided best linear unbiased estimates ...

Directed Acyclic Graph

In mathematics, particularly graph theory, and computer science, a directed acyclic graph (DAG) is a directed graph with no directed cycles. That is, it consists of vertices and edges (also called arcs), with each edge directed from one vertex to another, such that following those directions will never form a closed loop. A directed graph is a DAG if and only if it can be topologically ordered, by arranging the vertices as a linear ordering that is consistent with all edge directions. DAGs have numerous scientific and computational applications, ranging from biology (evolution, family trees, epidemiology) to information science (citation networks) to computation (scheduling). Directed acyclic graphs are sometimes instead called acyclic directed graphs or acyclic digraphs.

Definitions: A graph is formed by vertices and by edges connecting pairs of vertices, where the vertices can be any kind of object that is connected in pairs by edges. In the case of a directed graph ...
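
The equivalence between being a DAG and having a topological ordering can be checked in code. The following is a minimal sketch using Kahn's algorithm, one common way to compute such an ordering; the function name `topological_order` and the example graphs are illustrative assumptions.

```python
from collections import deque


def topological_order(vertices, edges):
    """Return a list of vertices in topological order, or None if the
    directed graph contains a directed cycle (i.e. is not a DAG)."""
    successors = {v: [] for v in vertices}
    indegree = {v: 0 for v in vertices}
    for u, v in edges:
        successors[u].append(v)
        indegree[v] += 1

    # Start from vertices with no incoming edges.
    ready = deque(v for v in vertices if indegree[v] == 0)
    order = []
    while ready:
        u = ready.popleft()
        order.append(u)
        # Removing u may free up its successors.
        for v in successors[u]:
            indegree[v] -= 1
            if indegree[v] == 0:
                ready.append(v)

    # If some vertex was never freed, it lies on or behind a cycle.
    return order if len(order) == len(vertices) else None


dag_order = topological_order(
    ["a", "b", "c", "d"],
    [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")],
)
cyclic_order = topological_order(
    ["a", "b", "c"],
    [("a", "b"), ("b", "c"), ("c", "a")],
)
print(dag_order, cyclic_order)
```

The first graph yields an ordering in which every edge points forward; the second, a three-vertex cycle, yields `None`.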

Life Satisfaction

Life satisfaction is a measure of a person's well-being, assessed in terms of mood, relationship satisfaction, achieved goals, self-concepts, and self-perceived ability to cope with life. Life satisfaction involves a favorable attitude towards one's life rather than an assessment of current feelings. Life satisfaction has been measured in relation to economic standing, degree of education, experiences, residence, and other factors. Life satisfaction is a key part of subjective well-being. Many factors influence subjective well-being and life satisfaction. Socio-demographic factors include gender, age, marital status, income, and education. Psychosocial factors include health and illness, functional ability, activity level, and social relationships. People tend to gain life satisfaction as they get older.

Factors affecting life satisfaction: Personality. A meta-analysis using the Big Five personality model found that, among the Big Five, low neuroticism was the strongest pred ...

Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^{2}}$$

The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation. The variance of the distribution is $\sigma^2$. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution converges to a normal dist ...
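
As a quick illustration, the density can be evaluated directly. This is a minimal sketch assuming the standard form of the normal density with mean `mu` and standard deviation `sigma`; the function name `normal_pdf` and the integration grid are illustrative choices.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and standard deviation
    sigma: exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * sqrt(2 * pi))."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


# The density peaks at the mean and is symmetric around it.
peak = normal_pdf(0.0)
left, right = normal_pdf(-1.5), normal_pdf(1.5)

# A crude Riemann sum over [-8, 8] should be close to 1 for the standard normal.
step = 0.001
area = sum(normal_pdf(-8.0 + i * step) * step for i in range(16000))
print(peak, left, right, area)
```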

Autocorrelation

Autocorrelation, sometimes known as serial correlation in the discrete time case, is the correlation of a signal with a delayed copy of itself as a function of delay. Informally, it is the similarity between observations of a random variable as a function of the time lag between them. The analysis of autocorrelation is a mathematical tool for finding repeating patterns, such as the presence of a periodic signal obscured by noise, or identifying the missing fundamental frequency in a signal implied by its harmonic frequencies. It is often used in signal processing for analyzing functions or series of values, such as time domain signals. Different fields of study define autocorrelation differently, and not all of these definitions are equivalent. In some fields, the term is used interchangeably with autocovariance. Unit root processes, trend-stationary processes, autoregressive processes, and moving average processes are specific forms of processes with autocorrelatio ...
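
Since definitions vary between fields, the sketch below fixes one common convention as an assumption: the sample autocorrelation at a given lag, computed as the lagged autocovariance divided by the lag-0 autocovariance. The function name and the test signal are illustrative.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation at the given lag, normalized so that lag 0 gives 1.
    Uses the mean-centered series and divides by the lag-0 sum of squares."""
    n = len(series)
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < len(series)")
    mean = sum(series) / n
    denom = sum((v - mean) ** 2 for v in series)
    if denom == 0:
        raise ValueError("series is constant; autocorrelation is undefined")
    numer = sum((series[t] - mean) * (series[t + lag] - mean) for t in range(n - lag))
    return numer / denom


# A noiseless signal with period 4, repeated 10 times.
signal = [0.0, 1.0, 0.0, -1.0] * 10

r0 = autocorrelation(signal, 0)  # a series compared with itself
r2 = autocorrelation(signal, 2)  # half a period: values are mirrored
r4 = autocorrelation(signal, 4)  # a full period: values line up again
print(r0, r2, r4)
```

The correlation is strongly negative at half the period and strongly positive at the full period, which is how a repeating pattern shows up in an autocorrelation plot. Under this convention the values shrink slightly with lag because fewer terms enter the numerator.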

Homogeneity And Heterogeneity (statistics)

In statistics, homogeneity and its opposite, heterogeneity, arise in describing the properties of a dataset, or several datasets. They relate to the validity of the often convenient assumption that the statistical properties of any one part of an overall dataset are the same as any other part. In meta-analysis, which combines the data from several studies, homogeneity measures the differences or similarities between the several studies (see also Study heterogeneity). Homogeneity can be studied to several degrees of complexity. For example, considerations of homoscedasticity examine how much the variability of data-values changes throughout a dataset. However, questions of homogeneity apply to all aspects of the statistical distributions, including the location parameter. Thus, a more detailed study would examine changes to the whole of the marginal distribution. An intermediate-level study might move from looking at the variability to studying changes in the skewness. In additio ...

Ordinary Least Squares

In statistics, ordinary least squares (OLS) is a type of linear least squares method for choosing the unknown parameters in a linear regression model (with fixed level-one effects of a linear function of a set of explanatory variables) by the principle of least squares: minimizing the sum of the squares of the differences between the observed dependent variable (values of the variable being observed) in the input dataset and the output of the (linear) function of the independent variable. Geometrically, this is seen as the sum of the squared distances, parallel to the axis of the dependent variable, between each data point in the set and the corresponding point on the regression surface; the smaller the differences, the better the model fits the data. The resulting estimator can be expressed by a simple formula, especially in the case of a simple linear regression, in which there is a single regressor on the right side of the regression equation. The OLS estimator is con ...
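
For the single-regressor case, the simple formula mentioned above can be sketched as follows. The data points are made up for illustration, and the function names are assumptions; the slope is the ratio of the centered cross-product sum to the centered sum of squares of the regressor.

```python
def ols_simple(xs, ys):
    """Closed-form OLS for y = intercept + slope * x with a single regressor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x has no variation; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sum_squared_residuals(xs, ys, intercept, slope):
    """The quantity that OLS minimizes."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Made-up illustrative data lying roughly along y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]

intercept, slope = ols_simple(xs, ys)
best = sum_squared_residuals(xs, ys, intercept, slope)
# Nudging the fitted slope in either direction should not reduce the sum.
worse = sum_squared_residuals(xs, ys, intercept, slope + 0.1)
print(intercept, slope, best, worse)
```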

Omitted Variable Bias

In statistics, omitted-variable bias (OVB) occurs when a statistical model leaves out one or more relevant variables. The bias results in the model attributing the effect of the missing variables to those that were included. More specifically, OVB is the bias that appears in the estimates of parameters in a regression analysis, when the assumed specification is incorrect in that it omits an independent variable that is a determinant of the dependent variable and correlated with one or more of the included independent variables.

In linear regression (intuition): Suppose the true cause-and-effect relationship is given by

$$y = a + bx + cz + u$$

with parameters $a$, $b$, $c$, dependent variable $y$, independent variables $x$ and $z$, and error term $u$. We wish to know the effect of $x$ itself upon $y$ (that is, we wish to obtain an estimate of $b$). Two conditions must hold true for omitted-variable bias to exist in linear regression:
* the omitted variable must be a determi ...
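
The effect of omitting z from the model y = a + bx + cz + u can be seen numerically. The sketch below uses made-up values with the error term set to zero (an assumption made so the arithmetic is exact) and relies on the standard result that the slope from regressing y on x alone equals b plus c times the slope from regressing z on x.

```python
def slope_on(xs, ys):
    """OLS slope from a simple regression of ys on xs (with intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Illustrative parameters and data (not from the source); u is set to 0.
a, b, c = 1.0, 2.0, 3.0
x = [0.0, 1.0, 2.0, 3.0, 4.0]
z = [0.0, 0.0, 1.0, 1.0, 2.0]  # correlated with x
y = [a + b * xi + c * zi for xi, zi in zip(x, z)]

short_slope = slope_on(x, y)         # estimate of b when z is omitted
delta = slope_on(x, z)               # how strongly z moves with x
predicted_slope = b + c * delta      # true effect plus the bias term
bias = short_slope - b
print(short_slope, predicted_slope, bias)
```

Here the regression that omits z reports a slope of 3.5 instead of the true 2, and the excess equals c times the slope of z on x. If z were uncorrelated with x, or if c were zero, the bias term would vanish.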

Multiple Regression

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given set of ...