
Catastrophic Cancellation

In numerical analysis, catastrophic cancellation is the phenomenon that subtracting good approximations to two nearby numbers may yield a very bad approximation to the difference of the original numbers. For example, suppose there are two studs, one $L_1 = 254.5\,\text{cm}$ long and the other $L_2 = 253.5\,\text{cm}$ long, and they are measured with a ruler that is good only to the centimeter. The approximations could then come out to be $\tilde L_1 = 255\,\text{cm}$ and $\tilde L_2 = 253\,\text{cm}$. These may be good approximations, in relative error, to the true lengths: the approximations are in error by less than 2% of the true lengths, $|L_1 - \tilde L_1| / |L_1| < 2\%$. However, if the *approximate* lengths are subtracted, the difference will be $\tilde L_1 - \tilde L_2 = 255\,\text{cm} - 253\,\text{cm} = 2\,\text{cm}$, even though the true difference between the lengths is $L_1 - L_2 = 254.5\,\text{cm} - 253.5\,\text{cm} = 1\,\text{cm}$. The difference of the approximations, ...
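The stud example can be checked numerically. The following is a minimal sketch in Python; the variable names are illustrative and not part of the original text. It computes the relative error of each measurement and of the subtracted result, using only the four lengths given above.

```python
# True lengths and ruler readings from the stud example (in cm).
L1, L2 = 254.5, 253.5
L1_approx, L2_approx = 255.0, 253.0

# Relative error of each individual measurement: both are small.
rel_err_L1 = abs(L1 - L1_approx) / abs(L1)
rel_err_L2 = abs(L2 - L2_approx) / abs(L2)

# Subtracting the approximations amplifies the relative error.
true_diff = L1 - L2
approx_diff = L1_approx - L2_approx
rel_err_diff = abs(true_diff - approx_diff) / abs(true_diff)

print(f"relative error in L1:         {rel_err_L1:.4%}")
print(f"relative error in L2:         {rel_err_L2:.4%}")
print(f"relative error in difference: {rel_err_diff:.4%}")
```

All four lengths are exactly representable in binary floating point, so the amplification seen here comes entirely from the measurement error in the inputs, not from rounding in the subtraction itself.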

Numerical Analysis

Numerical analysis is the study of algorithms that use numerical approximation (as opposed to symbolic manipulations) for the problems of mathematical analysis (as distinguished from discrete mathematics). It is the study of numerical methods that attempt to find approximate solutions of problems rather than exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also in the life and social sciences, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics (predicting the motions of planets, stars and galaxies), numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living ce ...

Relative Error

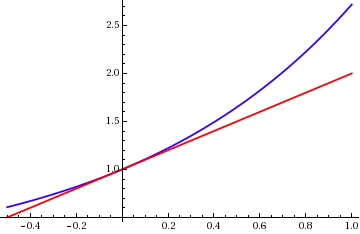

The approximation error in a data value is the discrepancy between an exact value and some *approximation* to it. This error can be expressed as an absolute error (the numerical amount of the discrepancy) or as a relative error (the absolute error divided by the data value). An approximation error can occur because of computing machine precision or measurement error (e.g. the length of a piece of paper is 4.53 cm but the ruler only allows you to estimate it to the nearest 0.1 cm, so you measure it as 4.5 cm). In the mathematical field of numerical analysis, the numerical stability of an algorithm indicates how the error is propagated by the algorithm.

Formal definition: One commonly distinguishes between the relative error and the absolute error. Given some value $v$ and its approximation $v_\text{approx}$, the absolute error is $\epsilon = |v - v_\text{approx}|$, where the vertical bars denote the absolute value. If $v \ne 0$, the relative error is $\eta = \frac{\epsilon}{|v|} = \left| \frac{v - v_\text{approx}}{v} \right|$ ...
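As a small illustration of these definitions, the sketch below applies them to the paper-length example (4.53 cm measured as 4.5 cm). The function names `absolute_error` and `relative_error` are hypothetical helpers introduced here, not part of any library.

```python
import math


def absolute_error(v: float, v_approx: float) -> float:
    """Absolute error: the magnitude of the discrepancy |v - v_approx|."""
    return abs(v - v_approx)


def relative_error(v: float, v_approx: float) -> float:
    """Relative error: absolute error divided by |v|; undefined for v == 0."""
    if v == 0:
        raise ValueError("relative error is undefined when the exact value is 0")
    return absolute_error(v, v_approx) / abs(v)


# Paper length from the text: exact 4.53 cm, measured as 4.5 cm.
eps = absolute_error(4.53, 4.5)
eta = relative_error(4.53, 4.5)
print(f"absolute error: {eps:.3f} cm")
print(f"relative error: {eta:.2%}")
```

The guard for `v == 0` mirrors the condition in the definition: the relative error is only defined for a nonzero exact value.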

Floating-point Arithmetic

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number: $12.345 = \underbrace{12345}_{\text{significand}} \times \underbrace{10}_{\text{base}}{}^{\overbrace{-3}^{\text{exponent}}}$. In practice, most floating-point systems use base two, though base ten (decimal floating point) is also common. The term *floating point* refers to the fact that the number's radix point can "float" anywhere to the left, right, or between the significant digits of the number. This position is indicated by the exponent, so floating point can be considered a form of scientific notation. A floating-point system can be used to represent, with a fixed number of digits, numbers of very different orders of magnitude, such as the number of meters between galaxies or between protons in an atom. For this reason, floating-poin ...
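The significand/exponent decomposition can be inspected directly. The sketch below uses Python's standard `decimal` module for the base-ten view of 12.345 and `math.frexp` for the base-two view of the nearest double; the variable names are illustrative only.

```python
import math
from decimal import Decimal

# Base ten: Decimal stores a tuple of digits and an integer exponent.
sign, digits, exponent = Decimal("12.345").as_tuple()
significand = int("".join(str(d) for d in digits))
print(significand, exponent)  # significand 12345, exponent -3

# Rebuilding the value from significand * 10**exponent gives 12.345 back.
rebuilt = Decimal(significand).scaleb(exponent)

# Base two: frexp splits a float into a fraction in [0.5, 1) and a
# power-of-two exponent such that x == mantissa * 2**binary_exponent.
mantissa, binary_exponent = math.frexp(12.345)
print(mantissa, binary_exponent)
```

Note that the base-two decomposition describes the double closest to 12.345, which is not exactly 12.345, whereas the decimal representation is exact.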

Sterbenz Lemma

In floating-point arithmetic, the Sterbenz lemma or Sterbenz's lemma is a theorem giving conditions under which floating-point differences are computed exactly. It is named after Pat H. Sterbenz, who published a variant of it in 1974. The Sterbenz lemma applies to IEEE 754, the most widely used floating-point number system in computers.

Proof: Let $\beta$ be the radix of the floating-point system and $p$ the precision. Consider several easy cases first:

* If $x$ is zero then $x - y = -y$, and if $y$ is zero then $x - y = x$, so the result is trivial because floating-point negation is always exact.
* If $x = y$ the result is zero and thus exact.
* If $x < 0$ then we must also have so . In this case, , so the result follows from the theorem restricted to .
* If , we can write with , so the result follow ...
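The excerpt above does not spell out the lemma's condition. In its usual formulation (an assumption here, taken from the standard statement rather than from this text), the lemma says that if $x$ and $y$ are floating-point numbers with $y/2 \le x \le 2y$, then $x - y$ is computed exactly. The following sketch checks this empirically for Python floats, using exact rational arithmetic as the reference; `difference_is_exact` is a hypothetical helper name.

```python
import random
from fractions import Fraction


def difference_is_exact(x: float, y: float) -> bool:
    """True if the floating-point x - y equals the real-number difference."""
    return Fraction(x - y) == Fraction(x) - Fraction(y)


rng = random.Random(0)
all_exact = True
for _ in range(10_000):
    y = rng.uniform(1e-3, 1e3)
    x = rng.uniform(y / 2, 2 * y)
    # Guard against uniform() rounding slightly outside the interval.
    if not (y / 2 <= x <= 2 * y):
        continue
    all_exact = all_exact and difference_is_exact(x, y)

# Every sampled pair inside the Sterbenz range subtracts without rounding.
print("all differences exact:", all_exact)

# Outside the range the difference may be rounded: 1.0 - 1e-17 rounds to 1.0.
print("exact outside range:", difference_is_exact(1.0, 1e-17))
```

A random test like this is only a sanity check, not a proof; it assumes Python floats are IEEE 754 doubles, which holds on common platforms.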

Ill-conditioned

In numerical analysis, the condition number of a function measures how much the output value of the function can change for a small change in the input argument. This is used to measure how sensitive a function is to changes or errors in the input, and how much error in the output results from an error in the input. Very frequently, one is solving the inverse problem: given $f(x) = y$, one is solving for $x$, and thus the condition number of the (local) inverse must be used. In linear regression the condition number of the moment matrix can be used as a diagnostic for multicollinearity. The condition number is an application of the derivative, and is formally defined as the value of the asymptotic worst-case relative change in output for a relative change in input. The "function" is the solution of a problem and the "arguments" are the data in the problem. The condition number is frequently applied to questions in linear algebra, in which case the derivative is straightforward but ...
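For a differentiable function of one variable, the relative condition number is commonly written as $\kappa(x) = |x f'(x) / f(x)|$. That formula is not given in the excerpt above and is assumed here from the standard definition. The sketch below evaluates it for $f(x) = x - 1$, which is well-conditioned far from $x = 1$ and ill-conditioned near it; the helper name `relative_condition_number` is hypothetical.

```python
def relative_condition_number(f, df, x: float) -> float:
    """Relative condition number |x * f'(x) / f(x)| of f at x (f(x) != 0)."""
    return abs(x * df(x) / f(x))


def f(x: float) -> float:
    return x - 1.0


def df(x: float) -> float:
    return 1.0


# Far from the root of f the problem is well-conditioned.
kappa_far = relative_condition_number(f, df, 3.0)

# Close to x = 1 a tiny relative change in x causes a huge relative
# change in x - 1, so the problem is ill-conditioned.
kappa_near = relative_condition_number(f, df, 1.0 + 2.0**-20)

print(kappa_far, kappa_near)
```

The large value near $x = 1$ is the conditioning view of cancellation: subtracting nearby numbers magnifies whatever relative error the inputs already carry.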

Double-precision Floating-point Format

Double-precision floating-point format (sometimes called FP64 or float64) is a floating-point number format, usually occupying 64 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point. Floating point is used to represent fractional values, or when a wider range is needed than is provided by fixed point (of the same bit width), even if at the cost of precision. Double precision may be chosen when the range or precision of single precision would be insufficient. In the IEEE 754-2008 standard, the 64-bit base-2 format is officially referred to as binary64; it was called double in IEEE 754-1985. IEEE 754 specifies additional floating-point formats, including 32-bit base-2 *single precision* and, more recently, base-10 representations. One of the first programming languages to provide single- and double-precision floating-point data types was Fortran. Before the widespread adoption of IEEE 754-1985, the representation and ...
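To make the 64-bit layout concrete, the sketch below splits a Python float into the three binary64 fields. The field widths (1 sign bit, 11 exponent bits, 52 fraction bits) come from the IEEE 754 standard rather than from the excerpt above, and the code assumes Python's `float` is a binary64 value, which is the case on common platforms. `double_fields` is a hypothetical helper name.

```python
import struct
import sys


def double_fields(x: float):
    """Return (sign, biased_exponent, fraction) of x viewed as binary64."""
    # Reinterpret the 8 bytes of the double as an unsigned 64-bit integer.
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63
    biased_exponent = (bits >> 52) & 0x7FF
    fraction = bits & ((1 << 52) - 1)
    return sign, biased_exponent, fraction


print(double_fields(1.0))   # sign 0, biased exponent 1023, fraction 0
print(double_fields(-2.0))  # sign 1, biased exponent 1024, fraction 0

# 53 significant bits: 52 stored fraction bits plus one implicit leading bit.
print(sys.float_info.mant_dig)
```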

Inverse Trigonometric Functions

In mathematics, the inverse trigonometric functions (occasionally also called arcus functions, antitrigonometric functions or cyclometric functions) are the inverse functions of the trigonometric functions (with suitably restricted domains). Specifically, they are the inverses of the sine, cosine, tangent, cotangent, secant, and cosecant functions, and are used to obtain an angle from any of the angle's trigonometric ratios. Inverse trigonometric functions are widely used in engineering, navigation, physics, and geometry.

Notation: Several notations for the inverse trigonometric functions exist. The most common convention is to name inverse trigonometric functions using an arc- prefix: $\arcsin(x)$, $\arccos(x)$, $\arctan(x)$, etc. (This convention is used throughout this article.) This notation arises from the following geometric relationships: when measuring in radians, an angle of $\theta$ radians will correspond to an arc whose length is $r\theta$ ...

2Sum

2Sum is a floating-point algorithm for computing the exact round-off error in a floating-point addition operation. 2Sum and its variant Fast2Sum were first published by Møller in 1965. Fast2Sum is often used implicitly in other algorithms such as compensated summation algorithms; Kahan's summation algorithm was published first in 1965, and Fast2Sum was later factored out of it by Dekker in 1971 for double-double arithmetic algorithms. The names *2Sum* and *Fast2Sum* appear to have been applied retroactively by Shewchuk in 1997.

Algorithm: Given two floating-point numbers $a$ and $b$, 2Sum computes the floating-point sum $s := \operatorname{fl}(a + b)$ and the floating-point error $t := a + b - \operatorname{fl}(a + b)$ so that $s + t = a + b$. The error $t$ is itself a floating-point number.

Inputs: floating-point numbers $a, b$

Outputs: sum $s = \operatorname{fl}(a + b)$ and error $t = a + b - \operatorname{fl}(a + b)$

1. $s := a \oplus b$
2. $a' := s \ominus b$
3. $b' := s \ominus a'$
4. $\delta_a := a \ominus$ ...
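The listing above is cut off after its fourth step. The sketch below follows the widely published form of 2Sum, so the steps after $\delta_a$ are an assumption taken from that standard formulation rather than from this excerpt. It also assumes round-to-nearest IEEE 754 doubles and no overflow, and verifies $s + t = a + b$ with exact rational arithmetic.

```python
from fractions import Fraction


def two_sum(a: float, b: float):
    """Return (s, t) with s = fl(a + b) and t the exact rounding error."""
    s = a + b
    a_prime = s - b           # the part of s that came from a
    b_prime = s - a_prime     # the part of s that came from b
    delta_a = a - a_prime     # what was lost from a
    delta_b = b - b_prime     # what was lost from b
    t = delta_a + delta_b     # total round-off error of the addition
    return s, t


# 1e-17 is too small to change 1.0 when added, so it reappears in t.
s, t = two_sum(1.0, 1e-17)
print(s, t)

# The defining property s + t == a + b holds exactly over the rationals.
s2, t2 = two_sum(0.1, 0.2)
print(Fraction(s2) + Fraction(t2) == Fraction(0.1) + Fraction(0.2))
```

Unlike Fast2Sum, this form needs no assumption about which of the two inputs is larger in magnitude, at the cost of a few extra operations.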

Well-conditioned

In numerical analysis, the condition number of a function measures how much the output value of the function can change for a small change in the input argument. This is used to measure how sensitive a function is to changes or errors in the input, and how much error in the output results from an error in the input. Very frequently, one is solving the inverse problem: given $f(x) = y$, one is solving for $x$, and thus the condition number of the (local) inverse must be used. In linear regression the condition number of the moment matrix can be used as a diagnostic for multicollinearity. The condition number is an application of the derivative, and is formally defined as the value of the asymptotic worst-case relative change in output for a relative change in input. The "function" is the solution of a problem and the "arguments" are the data in the problem. The condition number is frequently applied to questions in linear algebra, in which case the derivative is straightforward but ...