
2Sum

2Sum is a floating-point algorithm for computing the exact round-off error in a floating-point addition operation. 2Sum and its variant Fast2Sum were first published by Ole Møller in 1965. Fast2Sum is often used implicitly in other algorithms such as compensated summation algorithms; Kahan's summation algorithm was published first in 1965, and Fast2Sum was later factored out of it by Dekker in 1971 for double-double arithmetic algorithms. The names *2Sum* and *Fast2Sum* appear to have been applied retroactively by Shewchuk in 1997.

Algorithm. Given two floating-point numbers $a$ and $b$, 2Sum computes the floating-point sum $s := a \oplus b$ rounded to nearest and the floating-point error $t := a + b - (a \oplus b)$, so that $s + t = a + b$, where $\oplus$ and $\ominus$ denote addition and subtraction rounded to nearest, respectively. The error $t$ is itself a floating-point number.

Inputs: floating-point numbers $a$, $b$
Outputs: rounded sum $s = a \oplus b$ and exact error t = a + b - (a ...
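
Both transformations can be sketched in Python as follows. This assumes Python's `float` is IEEE 754 binary64 with round-to-nearest-even (true on mainstream platforms) and that no overflow occurs. The function names `two_sum` and `fast_two_sum` are illustrative, and the six-operation sequence is the commonly cited form of 2Sum rather than a transcription of Møller's paper. Fast2Sum saves three operations but is only guaranteed exact when $|a| \ge |b|$.

```python
from fractions import Fraction
from typing import Tuple


def two_sum(a: float, b: float) -> Tuple[float, float]:
    """2Sum: return (s, t) with s = fl(a + b) and s + t == a + b exactly."""
    s = a + b
    a_approx = s - b          # the part of s attributable to a
    b_approx = s - a_approx   # the part of s attributable to b
    a_err = a - a_approx      # what was lost from a
    b_err = b - b_approx      # what was lost from b
    t = a_err + b_err         # exact round-off error of the addition
    return s, t


def fast_two_sum(a: float, b: float) -> Tuple[float, float]:
    """Fast2Sum: same result as two_sum, but requires |a| >= |b|."""
    s = a + b
    z = s - a                 # the part of b that made it into s
    t = b - z                 # the part of b that was rounded away
    return s, t


if __name__ == "__main__":
    a, b = 1.0, 1e-16
    s, t = two_sum(a, b)
    print(s, t)
    # Check the defining property with exact rational arithmetic.
    assert Fraction(s) + Fraction(t) == Fraction(a) + Fraction(b)
    assert fast_two_sum(a, b) == (s, t)
```

For `a = 1.0` and `b = 1e-16`, the rounded sum `s` is `1.0`: `b` is smaller than half an ulp of 1.0 and disappears entirely, and 2Sum returns it as the error term `t = 1e-16`.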

Kahan Summation Algorithm

In numerical analysis, the Kahan summation algorithm, also known as compensated summation, significantly reduces the numerical error in the total obtained by adding a sequence of finite-precision floating-point numbers, compared to the naive approach. This is done by keeping a separate *running compensation* (a variable to accumulate small errors), in effect extending the precision of the sum by the precision of the compensation variable. In particular, simply summing $n$ numbers in sequence has a worst-case error that grows proportional to $n$, and a root mean square error that grows as $\sqrt{n}$ for random inputs (the roundoff errors form a random walk). With compensated summation, using a compensation variable with sufficiently high precision, the worst-case error bound is effectively independent of $n$, so a large number of values can be summed with an error that depends only on the floating-point precision of the result. The algorithm is attributed to William Kahan; Ivo Babuš ...
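
A minimal Python sketch of compensated summation, following the running-compensation description above. It assumes binary64 floats and that the arithmetic is evaluated exactly as written (CPython does not reassociate floating-point expressions). `kahan_sum` and `naive_sum` are illustrative names; `math.fsum`, which returns a correctly rounded sum, serves only as a reference.

```python
import math
from typing import Iterable


def naive_sum(values: Iterable[float]) -> float:
    """Plain left-to-right summation; each += rounds and the error is lost."""
    total = 0.0
    for x in values:
        total += x
    return total


def kahan_sum(values: Iterable[float]) -> float:
    """Compensated (Kahan) summation."""
    total = 0.0
    compensation = 0.0  # running compensation: negated low-order bits lost so far
    for x in values:
        y = x - compensation            # re-inject the previously lost bits
        t = total + y                   # low-order bits of y may be lost here
        compensation = (t - total) - y  # (what was actually added) - y = -(lost)
        total = t
    return total


if __name__ == "__main__":
    # One large value followed by many values each below half an ulp of it.
    values = [1.0] + [1e-16] * 10
    print("naive:", naive_sum(values))
    print("kahan:", kahan_sum(values))
    print("fsum: ", math.fsum(values))
```

In this example every `1e-16` is below half an ulp of 1.0, so the naive loop returns exactly `1.0`, while the compensated sum agrees with `math.fsum` to within one ulp. The naive loop is written out explicitly because recent CPython versions (3.12 and later) apply compensated summation to floats inside the built-in `sum()`.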

IEEE 754

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a technical standard for floating-point arithmetic originally established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard.

The standard defines:

* *arithmetic formats:* sets of binary and decimal floating-point data, which consist of finite numbers (including signed zeros and subnormal numbers), infinities, and special "not a number" values (NaNs)
* *interchange formats:* encodings (bit strings) that may be used to exchange floating-point data in an efficient and compact form
* *rounding rules:* properties to be satisfied when rounding numbers during arithmetic and conversions
* *operations:* arithmetic and other operatio ...
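
To make the interchange-format idea concrete, the sketch below unpacks the bit string of a binary64 value into its sign, biased exponent and fraction fields and maps them onto the categories of data listed above. It assumes Python's `float` is IEEE 754 binary64 (true on mainstream platforms) and uses only the standard `struct` module; `binary64_fields` and `classify` are illustrative helpers.

```python
import struct
from typing import Tuple


def binary64_fields(x: float) -> Tuple[int, int, int]:
    """Split a binary64 value into (sign, biased exponent, fraction) fields."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                    # 1 bit
    exponent = (bits >> 52) & 0x7FF      # 11 bits, bias 1023
    fraction = bits & ((1 << 52) - 1)    # 52 bits
    return sign, exponent, fraction


def classify(x: float) -> str:
    """Map the encoded fields onto finite, infinite and NaN categories."""
    sign, exponent, fraction = binary64_fields(x)
    if exponent == 0x7FF:                # all-ones exponent: infinity or NaN
        return "nan" if fraction else ("-inf" if sign else "+inf")
    if exponent == 0:                    # all-zeros exponent: zero or subnormal
        if fraction == 0:
            return "-zero" if sign else "+zero"
        return "subnormal"
    return "normal"


if __name__ == "__main__":
    for value in [1.0, -0.0, 5e-324, float("inf"), float("nan")]:
        print(repr(value), binary64_fields(value), classify(value))
```

Signed zeros, subnormals, infinities and NaNs each occupy distinct bit patterns, which is what allows them to be exchanged between systems in the interchange format.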

Floating-point Arithmetic

In computing, floating-point arithmetic (FP) is arithmetic on subsets of real numbers formed by a *significand* (a signed sequence of a fixed number of digits in some base) multiplied by an integer power of that base. Numbers of this form are called floating-point numbers. For example, the number 2469/200 is a floating-point number in base ten with five digits:

$$\frac{2469}{200} = 12.345 = \underbrace{12345}_{\text{significand}} \times {\underbrace{10}_{\text{base}}}^{\overbrace{-3}^{\text{exponent}}}$$

However, 7716/625 = 12.3456 is not a floating-point number in base ten with five digits; it needs six digits. The nearest floating-point number with only five digits is 12.346. And 1/3 = 0.3333… is not a floating-point number in base ten with any finite number of digits. In practice, most floating-point systems use base two, though base ten (decimal floating point) is also common. Floating-point arithmetic operations, such as addition and division, approximate the corresponding real number arithmetic operations ...
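
The five-digit decimal examples above can be reproduced with Python's standard `decimal` module by fixing the context precision at five significant digits (round-half-even, the module's default, is set explicitly). The last lines use `fractions.Fraction` to show that in base two even 0.1 has no exact representation. The context name `five_digits` is illustrative.

```python
from decimal import ROUND_HALF_EVEN, Context, Decimal
from fractions import Fraction

# A decimal floating-point "system" with a five-digit significand.
five_digits = Context(prec=5, rounding=ROUND_HALF_EVEN)

# 2469/200 fits exactly: significand 12345, base 10, exponent -3.
x = five_digits.divide(Decimal(2469), Decimal(200))
print(x, x.as_tuple())

# 7716/625 = 12.3456 needs six digits, so it is rounded to the
# nearest five-digit value.
y = five_digits.divide(Decimal(7716), Decimal(625))
print(y)

# 1/3 has no finite decimal expansion; only a five-digit approximation fits.
third = five_digits.divide(Decimal(1), Decimal(3))
print(third)

# In base two, 1/10 has no finite expansion either, so the float 0.1
# is only the nearest binary64 value to 1/10.
print(Fraction(0.1) == Fraction(1, 10))
```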

Round-off Error

In computing, a roundoff error, also called rounding error, is the difference between the result produced by a given algorithm using exact arithmetic and the result produced by the same algorithm using finite-precision, rounded arithmetic. Rounding errors are due to inexactness in the representation of real numbers and the arithmetic operations done with them. This is a form of quantization error. When using approximation equations or algorithms, especially when using finitely many digits to represent real numbers (which in theory have infinitely many digits), one of the goals of numerical analysis is to estimate computation errors. Computation errors, also called numerical errors, include both truncation errors and roundoff errors. When a sequence of calculations is performed on an input that involves any roundoff error, errors may accumulate, sometimes dominating the calculation. In ill-conditioned problems, significant error may accumulate. In short, there are two major facets ...
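
A small illustration of that definition, assuming binary64 floats: the same algorithm (repeatedly adding a step value) is run once in exact rational arithmetic with `fractions.Fraction` and once in rounded floating-point arithmetic, and the difference is the accumulated roundoff error. That error includes both the representation error of the input and the rounding of every addition. The helper name `roundoff_error` is illustrative.

```python
from fractions import Fraction


def roundoff_error(step: str, count: int) -> Fraction:
    """Exact result minus floating-point result of `count` additions of `step`.

    `step` is a decimal string so the exact computation sees the intended
    real number rather than its nearest binary64 approximation.
    """
    exact = Fraction(0)
    rounded = 0.0
    for _ in range(count):
        exact += Fraction(step)   # exact rational arithmetic
        rounded += float(step)    # each addition is rounded to binary64
    return exact - Fraction(rounded)


if __name__ == "__main__":
    # Errors typically grow as more rounded operations are chained.
    for n in (10, 1_000, 10_000):
        print(n, float(roundoff_error("0.1", n)))
```

A step such as `"0.5"` is exactly representable in base two and its partial sums stay exact, so its roundoff error is zero; `"0.1"` is not, and the error is nonzero after only ten additions.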

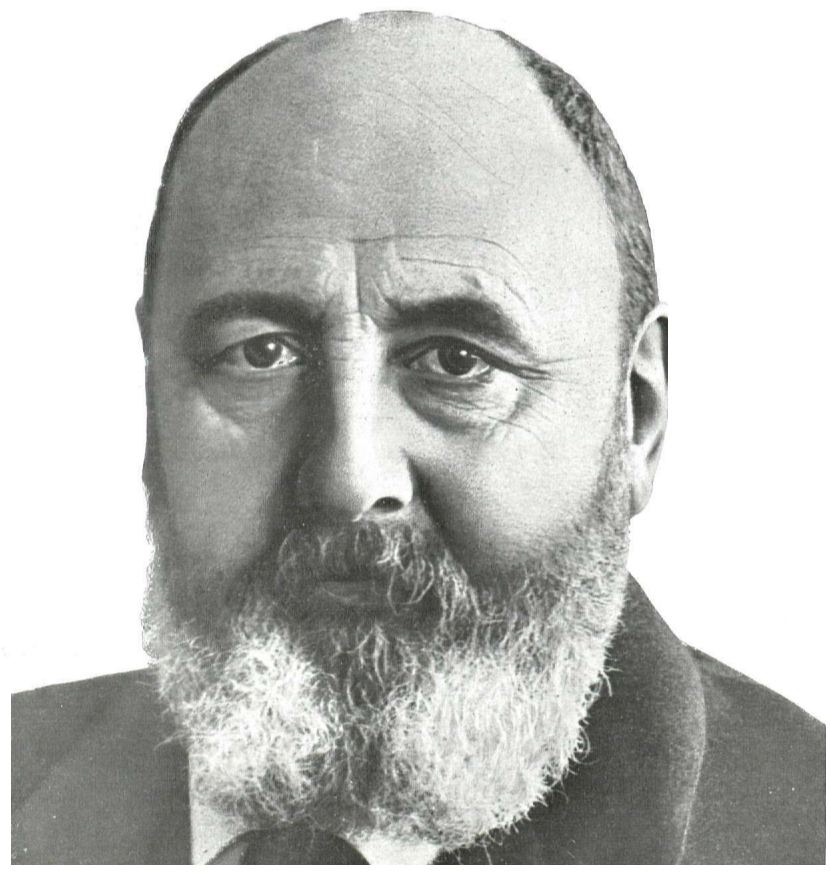

Theodorus Dekker

Theodorus Jozef Dekker (Dirk Dekker, 1 March 1927 – 25 November 2021) was a Dutch mathematician. Dekker received his Ph.D. from the University of Amsterdam in 1958; his thesis was titled "Paradoxical Decompositions of Sets and Spaces". Dekker invented an algorithm that allows two processes to share a single-use resource without conflict, using only shared memory for communication; it is now known as Dekker's algorithm.

Double-double Arithmetic

In computing, quadruple precision (or quad precision) is a binary floating-point–based computer number format that occupies 16 bytes (128 bits) with precision at least twice the 53-bit double precision. This 128-bit quadruple precision is designed not only for applications requiring results in higher than double precision, but also, as a primary function, to allow the computation of double precision results more reliably and accurately by minimising overflow and round-off errors in intermediate calculations and scratch variables. William Kahan, primary architect of the original IEEE 754 floating-point standard, noted, "For now the 10-byte Extended format is a tolerable compromise between the value of extra-precise arithmetic and the price of implementing it to run fast; very soon two more bytes of precision will become tolerable, and ultimately a 16-byte format ... That kind of gradual evolution towards wider precision was already in view when IEEE Standard 754 for Floating-Po ...
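
In double-double arithmetic, the technique named in this entry's heading, a value is carried as an unevaluated sum of two binary64 numbers `(hi, lo)`, giving roughly 106 significand bits; this is the setting for which Dekker factored out Fast2Sum. Below is a minimal Python sketch of double-double addition built from 2Sum and Fast2Sum, assuming binary64 floats with round-to-nearest. It is the simple variant, which can lose accuracy under heavy cancellation; `dd_add` and the other names are illustrative, not a library API.

```python
from fractions import Fraction
from typing import Tuple

DoubleDouble = Tuple[float, float]  # (hi, lo) representing hi + lo


def two_sum(a: float, b: float) -> Tuple[float, float]:
    """Exact sum as (rounded sum, error), for any a and b."""
    s = a + b
    a_approx = s - b
    b_approx = s - a_approx
    return s, (a - a_approx) + (b - b_approx)


def fast_two_sum(a: float, b: float) -> Tuple[float, float]:
    """Exact sum as (rounded sum, error), assuming |a| >= |b|."""
    s = a + b
    return s, b - (s - a)


def dd_add(x: DoubleDouble, y: DoubleDouble) -> DoubleDouble:
    """Simple double-double addition (not the most accurate variant)."""
    s, e = two_sum(x[0], y[0])  # exact sum of the high parts
    e += x[1] + y[1]            # fold in the low parts (rounded)
    return fast_two_sum(s, e)   # renormalise so |lo| <= half an ulp of hi


if __name__ == "__main__":
    # 1 + 2**-60 needs more than 53 bits, so it is stored as a pair.
    one_plus_tiny = two_sum(1.0, 2.0 ** -60)
    hi, lo = dd_add(one_plus_tiny, one_plus_tiny)
    print(hi, lo)
    print(Fraction(hi) + Fraction(lo) == 2 + Fraction(1, 2 ** 59))
```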

Discrete & Computational Geometry

*Discrete & Computational Geometry* is a peer-reviewed mathematics journal published quarterly by Springer. Founded in 1986 by Jacob E. Goodman and Richard M. Pollack, the journal publishes articles on discrete geometry and computational geometry.

Abstracting and indexing. The journal is indexed in:

* *Mathematical Reviews*
* *Zentralblatt MATH*
* *Science Citation Index*
* *Current Contents*

Notable articles. Two articles published in *Discrete & Computational Geometry*, one by Gil Kalai in 1992 with a proof of a subexponential upper bound on the diameter of a polytope and another by Samuel Ferguson in 2006 on the Kepler conjecture on optimal three-dimensional sphere packing, earned their authors the Fulk ...

Gradual Underflow

In computer science, subnormal numbers are the subset of denormalized numbers (sometimes called denormals) that fill the underflow gap around zero in floating-point arithmetic. Any non-zero number with magnitude smaller than the smallest positive normal number is *subnormal*, while *denormal* can also refer to numbers outside that range.

Terminology. In some older documents (especially standards documents such as the initial releases of IEEE 754 and the C language), "denormal" is used to refer exclusively to subnormal numbers. This usage persists in various standards documents, especially when discussing hardware that is incapable of representing any other denormalized numbers, but the discussion here uses the term "subnormal" in line with the 2008 revision of IEEE 754. In casual discussions the terms *subnormal* and *denormal* are often used interchangeably, in part because there are *no* denormalized IEEE binary numbers outside the subnormal range. The term "numbe ...
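
A short Python sketch of the underflow gap and how subnormals fill it, assuming binary64 floats with subnormal support (the usual configuration for CPython). `sys.float_info.min` is the smallest positive normal number, and the difference of two distinct tiny normal numbers can land below it without becoming zero. `is_subnormal` is an illustrative helper.

```python
import math
import sys

MIN_NORMAL = sys.float_info.min  # smallest positive normal binary64 number, 2**-1022


def is_subnormal(x: float) -> bool:
    """True for nonzero finite values smaller in magnitude than MIN_NORMAL."""
    return x != 0.0 and math.isfinite(x) and abs(x) < MIN_NORMAL


if __name__ == "__main__":
    x = 1.5 * MIN_NORMAL
    y = MIN_NORMAL
    diff = x - y  # exactly MIN_NORMAL / 2, which lies in the underflow gap
    # Without subnormals this difference would be flushed to zero
    # even though x != y.
    print(x != y, diff, is_subnormal(diff))

    smallest = 5e-324  # smallest positive subnormal, 2**-1074
    print(is_subnormal(smallest), smallest / 2)  # halving it rounds to 0.0
```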

Computer Arithmetic

Computer arithmetic is the scientific field that deals with representation of numbers on computers and corresponding implementations of the arithmetic operations. It includes:

* Fixed-point arithmetic
* Floating-point arithmetic
* Interval arithmetic
* Arbitrary-precision arithmetic
* Modular arithmetic
  * Multi-modular arithmetic
  * *p*-adic arithmetic, consisting of computing modulo a single prime number and retrieving the integer or rational result by using Hensel lifting
  * Finite field arithmetic
* Matrix arithmetic

In the cases where the size of the representation of a number is fixed (fixed-point, floating-point and interval arithmetic), the main concern is to control the computational error as far as possible; see, for example, IEEE 754. In the other cases, where an exact result should be provided, the main concern is the practical efficiency, which is optimized by combining improvements of computational complexity ...
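
As a small example of the exact, multi-modular branch listed above, the sketch below computes an integer product modulo several small primes and reconstructs the exact result with the Chinese remainder theorem. It assumes the moduli are pairwise coprime and that their product exceeds the (non-negative) result; `crt_reconstruct` and `multimodular_product` are illustrative names, and `pow(x, -1, m)` for modular inverses requires Python 3.8 or later.

```python
from math import prod
from typing import List, Sequence


def crt_reconstruct(residues: Sequence[int], moduli: Sequence[int]) -> int:
    """Return the unique x in [0, prod(moduli)) with x % m == r for each pair."""
    big_m = prod(moduli)
    x = 0
    for r, m in zip(residues, moduli):
        partial = big_m // m
        # partial * inverse(partial mod m) is 1 modulo m and 0 modulo the others.
        x += r * partial * pow(partial, -1, m)
    return x % big_m


def multimodular_product(a: int, b: int, moduli: Sequence[int]) -> int:
    """Multiply using only small modular products, then reconstruct."""
    residues: List[int] = [(a % m) * (b % m) % m for m in moduli]
    return crt_reconstruct(residues, moduli)


if __name__ == "__main__":
    primes = [101, 103, 107, 109]  # product 121,330,189
    print(multimodular_product(123456, 789, primes), 123456 * 789)
```

Each per-modulus product stays below $m^2$, so the independent modular computations need only small integers; big-integer work is confined to the final reconstruction, which is exact as long as the product of the moduli exceeds the true result.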