Sterbenz Lemma

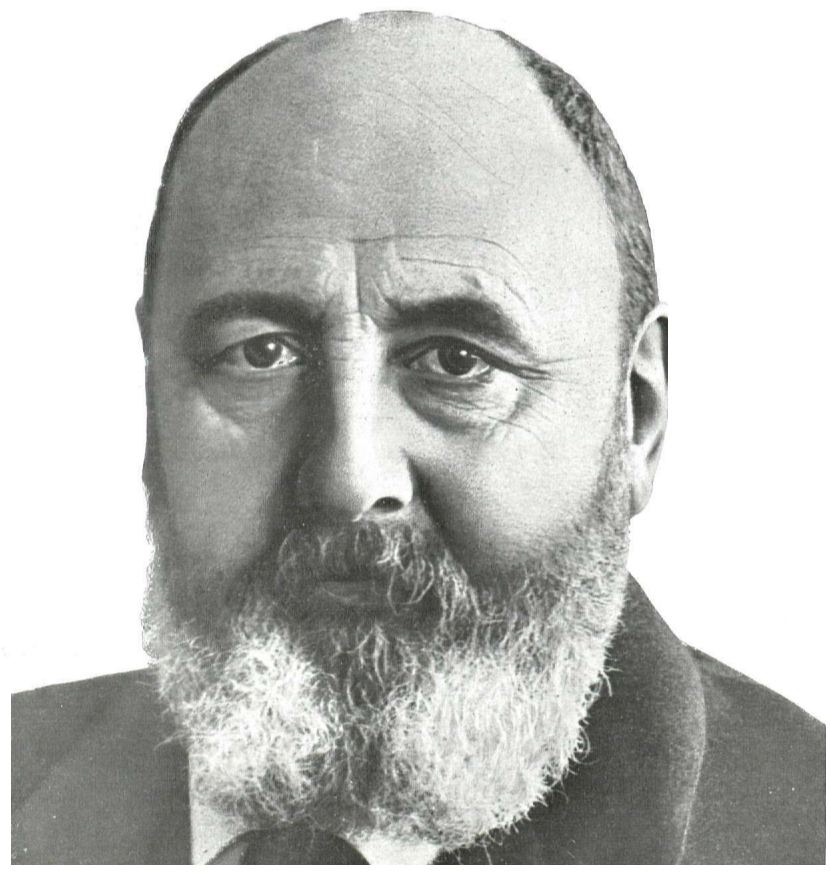

In floating-point arithmetic, the Sterbenz lemma or Sterbenz's lemma is a theorem giving conditions under which floating-point differences are computed exactly: if x and y are floating-point numbers with y/2 \le x \le 2y, then x - y is itself a floating-point number, so a correctly rounded subtraction returns it with no rounding error. It is named after Pat H. Sterbenz, who published a variant of it in 1974. The Sterbenz lemma applies to IEEE 754, the most widely used floating-point number system in computers, whose formats include subnormal numbers; without subnormals (gradual underflow), the lemma can fail when x - y falls below the smallest normal number.

Proof

Let \beta be the radix of the floating-point system and p the precision. Consider several easy cases first:

* If x is zero then x - y = -y, and if y is zero then x - y = x, so the result is trivial because floating-point negation is always exact.
* If x = y the result is zero and thus exact.
* If x < 0 then we must also have y/2 \le x < 0, so y < 0. In this case, x - y = -((-x) - (-y)), so the result follows from the theorem restricted to x, y \ge 0.
* If x \le y, we can write x - y = -(y - x) with x/2 \le y \le 2x, so the result follows ...
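The lemma can be spot-checked empirically. The sketch below assumes Python's float is IEEE 754 binary64 (true on mainstream platforms) and uses `fractions.Fraction`, which converts a float exactly, to compare the rounded hardware difference with the exact real difference. The helper names `subtraction_is_exact` and `check_sterbenz` are hypothetical, chosen for illustration; random sampling is a sanity check, not a proof.

```python
import random
from fractions import Fraction


def subtraction_is_exact(x: float, y: float) -> bool:
    """True if the rounded float result x - y equals the exact real difference."""
    # Fraction(float) converts a binary64 value with no rounding, so the
    # right-hand side is the mathematically exact difference of the inputs.
    return Fraction(x - y) == Fraction(x) - Fraction(y)


def check_sterbenz(trials: int = 10_000, seed: int = 0) -> int:
    """Count sampled pairs with y/2 <= x <= 2y whose difference was NOT exact."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        y = rng.uniform(1e-3, 1e3)
        x = rng.uniform(y / 2, 2 * y)
        if not (y / 2 <= x <= 2 * y):
            continue  # uniform() may round just past an endpoint; skip such samples
        if not subtraction_is_exact(x, y):
            failures += 1
    return failures


print(check_sterbenz())                       # 0, as the lemma predicts
print(subtraction_is_exact(1.0, 0.9))         # True: y/2 <= x <= 2y holds
print(subtraction_is_exact(1.0, 2.0 ** -60))  # False: hypothesis violated
```

Note that `1.0 - 0.9` prints as `0.09999999999999998`: the subtraction itself is exact, and the discrepancy from 0.1 comes from 0.9 having no exact binary representation.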

Floating-point Arithmetic

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number:

:12.345 = \underbrace{12345}_{\text{significand}} \times \underbrace{10}_{\text{base}}{}^{\overbrace{-3}^{\text{exponent}}}

In practice, most floating-point systems use base two, though base ten (decimal floating point) is also common. The term ''floating point'' refers to the fact that the number's radix point can "float" anywhere to the left, right, or between the significant digits of the number. This position is indicated by the exponent, so floating point can be considered a form of scientific notation. A floating-point system can be used to represent, with a fixed number of digits, numbers of very different orders of magnitude, such as the number of meters between galaxies or between protons in an atom. For this reason, floating-point ...
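The significand/base/exponent decomposition can be inspected directly. This sketch, using only the Python standard library, shows the base-ten form of 12.345 via `decimal.Decimal.as_tuple()` and the base-two form that a binary64 float actually stores via `math.frexp`; the 53-bit integer significand assumes the IEEE 754 binary64 format.

```python
import math
from decimal import Decimal

# Base ten: Decimal stores the digits and exponent exactly as written.
sign, digits, exponent = Decimal("12.345").as_tuple()
significand = int("".join(str(d) for d in digits))
print(significand, exponent)  # 12345 -3, i.e. 12.345 = 12345 * 10**-3

# Base two: a binary64 float stores the nearest value of the form m * 2**e.
m, e = math.frexp(12.345)          # 0.5 <= m < 1
int_significand = int(m * 2**53)   # exact: m carries at most 53 significant bits
bin_exponent = e - 53
assert int_significand * 2.0**bin_exponent == 12.345
print(int_significand, bin_exponent)
```

The binary value is only the closest double to 12.345, which is why the decimal and binary significands are unrelated integers.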

Subnormal Numbers

In computer science, subnormal numbers are the subset of denormalized numbers (sometimes called denormals) that fill the underflow gap around zero in floating-point arithmetic. Any non-zero number with magnitude smaller than the smallest normal number is ''subnormal''.

:: ''Usage note: in some older documents (especially standards documents such as the initial releases of IEEE 754 and the C language), "denormal" is used to refer exclusively to subnormal numbers. This usage persists in various standards documents, especially when discussing hardware that is incapable of representing any other denormalized numbers, but the discussion here uses the term subnormal in line with the 2008 revision of IEEE 754.''

In a normal floating-point value, there are no leading zeros in the significand (mantissa); rather, leading zeros are removed by adjusting the exponent (for example, the number 0.0123 would be written as 1.23 \times 10^{-2}). Conversely, a denormalized floating point value has a significand with ...
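For IEEE 754 binary64 (Python's float on mainstream platforms), the boundary between normal and subnormal numbers can be shown concretely. This sketch assumes the platform does not flush subnormals to zero, which holds for CPython on common hardware; `is_subnormal` is a hypothetical helper.

```python
import math
import sys

smallest_normal = sys.float_info.min         # 2**-1022 for binary64
smallest_subnormal = math.ldexp(1.0, -1074)  # 2**-1074, the least positive double


def is_subnormal(x: float) -> bool:
    """True for non-zero values whose magnitude is below the smallest normal."""
    return x != 0.0 and abs(x) < smallest_normal


print(smallest_normal)     # 2.2250738585072014e-308
print(smallest_subnormal)  # 5e-324

# Gradual underflow: the gap between two distinct tiny normal numbers is
# itself representable as a subnormal instead of collapsing to zero.
a = 1.5 * smallest_normal
b = smallest_normal
print(is_subnormal(a - b), a - b == math.ldexp(1.0, -1023))  # True True
```

This is exactly the property the Sterbenz lemma relies on: without subnormals, `a - b` here would have to be flushed to zero even though `a != b`.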

IEEE 754

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a technical standard for floating-point arithmetic established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard. The standard defines:

* ''arithmetic formats:'' sets of binary and decimal floating-point data, which consist of finite numbers (including signed zeros and subnormal numbers), infinities, and special "not a number" values (NaNs)
* ''interchange formats:'' encodings (bit strings) that may be used to exchange floating-point data in an efficient and compact form
* ''rounding rules:'' properties to be satisfied when rounding numbers during arithmetic and conversions
* ''operations:'' arithmetic and other operations (such as trigonometric functions) on arithmetic formats
* ''excepti ...
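As an illustration of an interchange format, the sketch below reinterprets a Python float's 64 bits (assumed to be IEEE 754 binary64) using the standard `struct` module and splits them into the sign, 11-bit biased exponent and 52-bit fraction fields. `binary64_fields` is a hypothetical helper name.

```python
import math
import struct


def binary64_fields(x: float) -> tuple[int, int, int]:
    """Split a double into its binary64 sign bit, biased exponent and fraction."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))  # reinterpret the 64 bits
    sign = bits >> 63
    biased_exponent = (bits >> 52) & 0x7FF  # 11-bit field, bias 1023
    fraction = bits & ((1 << 52) - 1)       # 52-bit trailing significand
    return sign, biased_exponent, fraction


print(binary64_fields(1.0))       # (0, 1023, 0)
print(binary64_fields(-0.0))      # (1, 0, 0): signed zero
print(binary64_fields(5e-324))    # (0, 0, 1): smallest subnormal
print(binary64_fields(math.inf))  # (0, 2047, 0): infinity
```

An all-ones exponent field with a non-zero fraction encodes a NaN; an all-zeros exponent field encodes zeros and subnormal numbers.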

Catastrophic Cancellation

In numerical analysis, catastrophic cancellation is the phenomenon that subtracting good approximations to two nearby numbers may yield a very bad approximation to the difference of the original numbers. For example, if there are two studs, one L_1 = 254.5\,\text{cm} long and the other L_2 = 253.5\,\text{cm} long, and they are measured with a ruler that is good only to the centimeter, then the approximations could come out to be \tilde L_1 = 255\,\text{cm} and \tilde L_2 = 253\,\text{cm}. These may be good approximations, in relative error, to the true lengths: the approximations are in error by less than 0.2% of the true lengths, |L_1 - \tilde L_1| / |L_1| < 0.2\%. However, if the ''approximate'' lengths are subtracted, the difference will be \tilde L_1 - \tilde L_2 = 255\,\text{cm} - 253\,\text{cm} = 2\,\text{cm}, even though the true difference between the lengths is L_1 - L_2 = 254.5\,\text{cm} - 253.5\,\text{cm} = 1\,\text{cm}. The difference of the approximations, ...
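The stud example can be checked with exact rational arithmetic. This sketch uses Python's `fractions.Fraction` so that no floating-point rounding muddies the comparison; the variable names are illustrative only.

```python
from fractions import Fraction

L1, L2 = Fraction("254.5"), Fraction("253.5")   # true lengths, cm
L1_meas, L2_meas = Fraction(255), Fraction(253)  # ruler readings, cm


def relative_error(approx: Fraction, true: Fraction) -> Fraction:
    """Exact relative error |true - approx| / |true|."""
    return abs(true - approx) / abs(true)


print(float(relative_error(L1_meas, L1)))                 # about 0.00196
print(float(relative_error(L2_meas, L2)))                 # about 0.00197
print(float(relative_error(L1_meas - L2_meas, L1 - L2)))  # 1.0, i.e. 100%
```

Each input is off by under 0.2%, yet the subtraction itself introduces no new error: it simply exposes the absolute error of the inputs relative to a much smaller result.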

Heron's Formula

In geometry, Heron's formula (or Hero's formula) gives the area of a triangle in terms of the three side lengths a, b, c. If s = \tfrac12(a + b + c) is the semiperimeter of the triangle, the area is

:A = \sqrt{s(s-a)(s-b)(s-c)}.

It is named after first-century engineer Heron of Alexandria (or Hero), who proved it in his work ''Metrica'', though it was probably known centuries earlier.

Example

Let \triangle ABC be the triangle with sides a = 4, b = 13 and c = 15. This triangle’s semiperimeter is

:s = \frac{a+b+c}{2} = \frac{4+13+15}{2} = 16

and so the area is

:\begin{aligned} A &= \sqrt{s(s-a)(s-b)(s-c)} = \sqrt{16\cdot(16-4)\cdot(16-13)\cdot(16-15)} \\ &= \sqrt{16\cdot 12\cdot 3\cdot 1} = \sqrt{576} = 24. \end{aligned}

In this example, the side lengths and area are integers, making it a Heronian triangle. However, Heron's formula works equally well in cases where one or more of the side lengths are not integers.

Alternate expressions

Heron's formula can also be written in terms of just the side lengths instead of using the semiperimeter, in several ways:

:\begin{aligned} A &= \tfrac14\sqrt{(a+b+c)(-a+b+c)(a-b+c)(a+b-c)} \\[3mu] &= \tfrac14\sqrt{2(a^2b^2+a^2c^2+b^2c^2)-(a^4+b^4+c^4)} \\[3mu] &= \tfrac14\sqrt{(a^2+b^2+c^2)^2-2(a^4+b^4+c^4)} \\[3mu] &= \tfrac14\sqrt{4(a^2b^2+a^2c^2+b^2c^2)-(a^2+b^2+c^2)^2} \\[3mu] &= \dots \end{aligned}
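A direct translation of Heron's formula is short. Because the factors s - a, s - b, s - c are subtractions of nearby quantities for needle-like triangles, the sketch also includes, as a clearly separate function, the well-known rearrangement published by W. Kahan, which sorts the sides and fixes the order of operations. Both function names are hypothetical; this is an illustrative sketch, not a vetted library routine.

```python
import math


def heron_area(a: float, b: float, c: float) -> float:
    """Area from side lengths via Heron's formula A = sqrt(s(s-a)(s-b)(s-c))."""
    s = (a + b + c) / 2
    return math.sqrt(s * (s - a) * (s - b) * (s - c))


def heron_area_kahan(a: float, b: float, c: float) -> float:
    """Kahan's rearrangement of Heron's formula, evaluated with a >= b >= c.

    The parentheses must be kept exactly as written; Kahan's analysis relies
    on this evaluation order to stay accurate for needle-like triangles.
    """
    a, b, c = sorted((a, b, c), reverse=True)
    return 0.25 * math.sqrt((a + (b + c)) * (c - (a - b)) * (c + (a - b)) * (a + (b - c)))


print(heron_area(4, 13, 15), heron_area_kahan(4, 13, 15))  # 24.0 24.0
```

Both functions raise `ValueError` (a math domain error) when the side lengths violate the triangle inequality, since the radicand becomes negative.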

Error Analysis (mathematics)

In mathematics, error analysis is the study of the kind and quantity of error, or uncertainty, that may be present in the solution to a problem. This issue is particularly prominent in applied areas such as numerical analysis and statistics.

Error analysis in numerical modeling

In numerical simulation or modeling of real systems, error analysis is concerned with the changes in the output of the model as the parameters to the model vary about a mean. For instance, consider a system modeled as a function of two variables, z \,=\, f(x,y). Error analysis deals with the propagation of the numerical errors in x and y (around mean values \bar{x} and \bar{y}) to error in z (around a mean \bar{z}).

In numerical analysis, error analysis comprises both forward error analysis and backward error analysis.

Forward error analysis

Forward error analysis involves the analysis of a function z' = f'(a_0,\,a_1,\,\dots,\,a_n) which is an approximation (usually a finite polynomial) to a function z \,=\, f(a_0,a_1,\do ...
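As a small, assumed example of forward error analysis, take z = exp(x) and its approximation z' given by the degree-n Taylor polynomial about 0 (a finite polynomial, as in the text). The sketch compares the observed forward error |z - z'| with the Lagrange remainder bound e · x^(n+1)/(n+1)!, which is valid for 0 ≤ x ≤ 1. The choice of function and the helper names are illustrative only.

```python
import math


def taylor_exp(x: float, n: int) -> float:
    """Degree-n Taylor polynomial of exp about 0: the approximation z'."""
    term, total = 1.0, 1.0
    for k in range(1, n + 1):
        term *= x / k  # term is now x**k / k!
        total += term
    return total


def lagrange_bound(x: float, n: int) -> float:
    """Bound on |exp(x) - taylor_exp(x, n)| for 0 <= x <= 1, using exp(xi) <= e."""
    return math.e * x ** (n + 1) / math.factorial(n + 1)


x = 0.5
for n in (2, 4, 8):
    forward_error = abs(math.exp(x) - taylor_exp(x, n))
    print(n, forward_error, lagrange_bound(x, n))
```

The bound shrinks factorially with n, and the observed error stays below it; at much higher degrees the floating-point rounding in `taylor_exp` itself would become the dominant error term.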

Computer Arithmetic

In computing, an arithmetic logic unit (ALU) is a combinational digital circuit that performs arithmetic and bitwise operations on integer binary numbers. This is in contrast to a floating-point unit (FPU), which operates on floating point numbers. It is a fundamental building block of many types of computing circuits, including the central processing unit (CPU) of computers, FPUs, and graphics processing units (GPUs). The inputs to an ALU are the data to be operated on, called operands, and a code indicating the operation to be performed; the ALU's output is the result of the performed operation. In many designs, the ALU also has status inputs or outputs, or both, which convey information about a previous operation or the current operation, respectively, between the ALU and external status registers.

Signals

An ALU has a variety of input and output nets, which are the electrical conductors used to convey digital signals between the ALU and external circuitry. When an ALU is o ...
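The input/output contract described above (operands plus an operation code in; a result plus status flags out) can be modeled in software. This is a hypothetical 8-bit ALU sketch: the opcode set, the 8-bit width and the flag names are assumptions for illustration, and treating the carry flag as a borrow on subtraction is only one of several conventions real processors use.

```python
from enum import Enum


class Op(Enum):  # hypothetical opcode set
    ADD = 0
    SUB = 1
    AND = 2
    OR = 3
    XOR = 4


WIDTH = 8
MASK = (1 << WIDTH) - 1


def alu(op: Op, a: int, b: int) -> tuple[int, dict[str, bool]]:
    """Combinational model: operands and opcode in, result and status flags out."""
    a &= MASK
    b &= MASK
    if op is Op.ADD:
        raw = a + b
        carry = raw > MASK  # carry out of the top bit
    elif op is Op.SUB:
        raw = a - b
        carry = raw < 0     # here: borrow (convention varies by architecture)
    elif op is Op.AND:
        raw, carry = a & b, False
    elif op is Op.OR:
        raw, carry = a | b, False
    elif op is Op.XOR:
        raw, carry = a ^ b, False
    else:
        raise ValueError(f"unsupported opcode {op}")
    result = raw & MASK  # keep only WIDTH bits, as the hardware would
    flags = {
        "zero": result == 0,
        "carry": carry,
        "negative": bool(result >> (WIDTH - 1)),  # top bit as two's-complement sign
    }
    return result, flags


print(alu(Op.ADD, 200, 100))  # result 44 with the carry flag set
```

The flags dictionary stands in for the status outputs that, in hardware, would be latched into an external status register.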
