
Content Discovery

A content discovery platform is a software recommendation platform built on recommender system tools. It uses user metadata to discover and recommend appropriate content, whilst reducing ongoing maintenance and development costs. A content discovery platform delivers personalized content to websites, mobile devices and set-top boxes. A wide range of content discovery platforms currently exists for various forms of content, ranging from news articles and academic journal articles to television. As operators compete to be the gateway to home entertainment, personalized television is a key service differentiator. Academic content discovery has recently become another area of interest, with several companies being established to help academic researchers keep up to date with relevant academic content and serendipitously discover new content. Methodology: To provide and recommend content, a search algorithm is used within a content discovery platform to prov ...

Software

Software is a set of computer programs and associated documentation and data. This is in contrast to hardware, from which the system is built and which actually performs the work. At the lowest programming level, executable code consists of machine language instructions supported by an individual processor, typically a central processing unit (CPU) or a graphics processing unit (GPU). Machine language consists of groups of binary values signifying processor instructions that change the state of the computer from its preceding state. For example, an instruction may change the value stored in a particular storage location in the computer, an effect that is not directly observable to the user. An instruction may also invoke one of many input or output operations, for example displaying some text on ...

Pattern Recognition

Pattern recognition is the automated recognition of patterns and regularities in data. It has applications in statistical data analysis, signal processing, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Pattern recognition has its origins in statistics and engineering; some modern approaches to pattern recognition include the use of machine learning, due to the increased availability of big data and a new abundance of processing power. These activities can be viewed as two facets of the same field of application, and they have undergone substantial development over the past few decades. Pattern recognition systems are commonly trained from labeled "training" data. When no labeled data are available, other algorithms can be used to discover previously unknown patterns. KDD and data mining have a larger focus on unsupervised methods and stronger connection to business use. Pattern recognition focuses more on the ...

Search Engine Software

Presented below is a list of search engine software.

Free:

* Apache Lucene
* Apache Nutch
* Apache Solr
* Datafari Community Edition
* DocFetcher
* Gigablast
* Grub
* ht://Dig
* Isearch
* Meilisearch
* MnoGoSearch
* OpenSearch
* OpenSearchServer
* PHP-Crawler
* Sphinx
* StrangeSearch
* SWISH-E
* Terrier Search Engine
* Vespa
* Xapian
* YaCy
* Zettair

See also:

* Search appliance
* Bilingual search engine
* Content discovery platform
* Document retrieval
* Incremental search
* Web crawler

Information Explosion

The information explosion is the rapid increase in the amount of published information or data and the effects of this abundance. As the amount of available data grows, the problem of managing the information becomes more difficult, which can lead to information overload. The Online Oxford English Dictionary indicates use of the phrase in a March 1964 New Statesman article. The New York Times first used the phrase in its editorial content in an article by Walter Sullivan on June 7, 1964, in which he described the phrase as "much discussed" (p. 11). The earliest known use of the phrase was in a speech about television by NBC president Pat Weaver at the Institute of Practitioners of Advertising in London on September 27, 1955. The speech was rebroadcast on radio station WSUI in Iowa and excerpted in the Daily Iowan newspaper two months later. Many sectors are seeing this rapid increase in the amount of information available, such as healthcare, supermarkets, and even gove ...

Information Filtering System

An information filtering system is a system that removes redundant or unwanted information from an information stream using (semi)automated or computerized methods prior to presentation to a human user. Its main goal is to manage information overload and to increase the semantic signal-to-noise ratio. To do this, the user's profile is compared to some reference characteristics. These characteristics may originate from the information item (the content-based approach) or the user's social environment (the collaborative filtering approach). Whereas in information transmission signal processing filters are used against syntax-disrupting noise on the bit level, the methods employed in information filtering act on the semantic level. The range of machine methods employed builds on the same principles as those for information extraction. A notable application can be found in the field of email spam filters. Thus, it is not only the information expl ...
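The content-based approach described above, in which a user's profile is compared to characteristics of each information item, can be sketched roughly as follows. This is a minimal illustration, not a description of any particular system: the term-weight dictionaries, the cosine similarity measure, the `filter_stream` helper and the `0.3` threshold are all assumptions chosen for the example.

```python
from math import sqrt


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse term-weight vectors."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = sqrt(sum(w * w for w in a.values()))
    norm_b = sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an empty vector matches nothing
    return dot / (norm_a * norm_b)


def filter_stream(profile, items, threshold=0.3):
    """Keep items whose features are close enough to the user profile.

    `threshold` is an arbitrary, hypothetical cut-off for this sketch.
    Returns (title, score) pairs, best match first.
    """
    kept = []
    for title, features in items:
        score = cosine_similarity(profile, features)
        if score >= threshold:
            kept.append((title, score))
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Hypothetical profile and item stream, invented for illustration only.
profile = {"python": 1.0, "search": 0.5}
items = [
    ("Indexing with Python", {"python": 1.0, "indexing": 1.0}),
    ("Garden tips", {"garden": 1.0, "soil": 0.5}),
    ("Search ranking basics", {"search": 1.0, "ranking": 1.0}),
]
results = filter_stream(profile, items)
```

In this sketch the unrelated item is removed from the stream before presentation, while the two items sharing terms with the profile are kept and ranked. A collaborative filtering variant would instead derive the reference characteristics from other users' behaviour.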

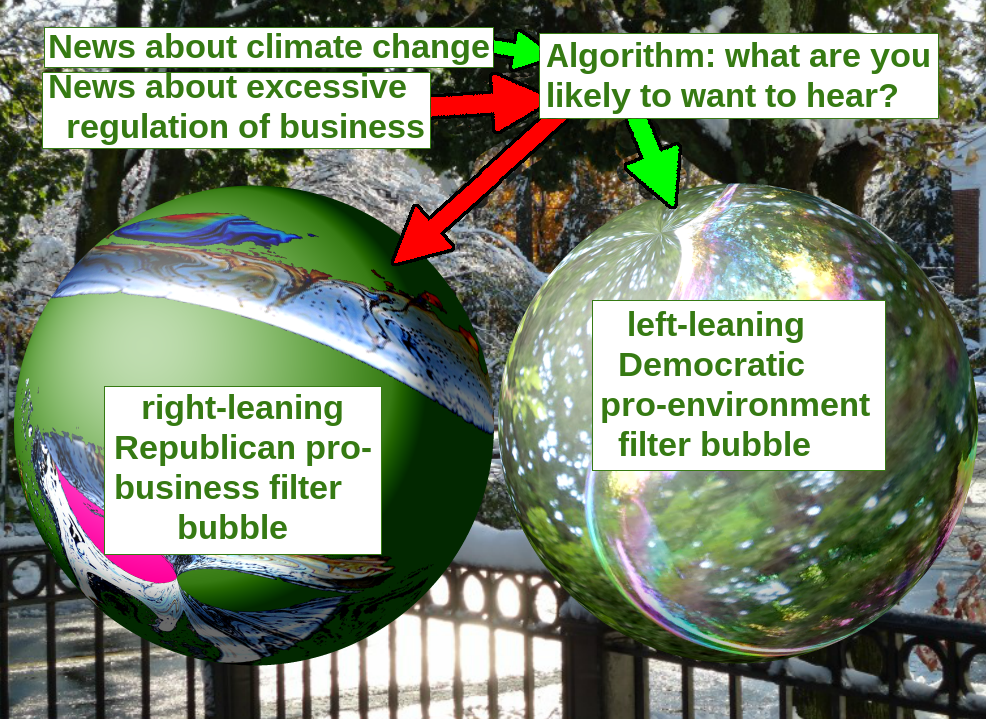

Filter Bubble

A filter bubble or ideological frame is a state of intellectual isolation that can result from personalized searches when a website algorithm selectively guesses what information a user would like to see based on information about the user, such as location, past click-behavior, and search history. (Technopedia, "Definition – What does Filter Bubble mean?", retrieved October 10, 2017: "... A filter bubble is the intellectual isolation, that can occur when websites make use of algorithms to selectively assume the information a user would want to see, and then give information to the user according to this assumption ... A filter bubble, therefore, can cause users to get significantly less contact with contradicting viewpoints, causing the user to become intellectually isolated ...") As a result, users become separated from information that disagrees with their viewpoints, effectively isolating them in their own cultural or ideological bubbles. The choices made by ...

Internet Television

Streaming television is the digital distribution of television content, such as TV shows, as streaming media delivered over the Internet. Streaming television stands in contrast to dedicated terrestrial television delivered by over-the-air aerial systems, cable television, and/or satellite television systems. History: Up until the 1990s, it was not thought possible that a television programme could be squeezed into the limited telecommunication bandwidth of a copper telephone cable to provide a streaming service of acceptable quality, as the required bandwidth of a digital television signal was around 200 Mbit/s, which was 2,000 times greater than the bandwidth of a speech signal over a copper telephone wire. Streaming services were only made possible as a result of two major technological developments: MPEG (motion-compensated DCT) video compression and asymmetric digital subscriber line (ADSL) data transmission. The first worldwide live-streaming event was ...

Broadband

In telecommunications, broadband is wide-bandwidth data transmission that transports multiple signals at a wide range of frequencies and Internet traffic types. It enables messages to be sent simultaneously and is used in fast Internet connections. The medium can be coaxial cable, optical fiber, wireless Internet (radio), twisted pair or satellite. In the context of Internet access, broadband is used to mean any high-speed Internet access that is always on and faster than dial-up access over traditional analog or ISDN PSTN services. Overview: Different criteria for "broad" have been applied in different contexts and at different times. Its origin is in physics, acoustics, and radio systems engineering, where it had been used with a meaning similar to "wideband", or in the context of audio noise reduction systems, where it indicated a single-band rather than a multiple-audio-band system design of the compander. Later, with the advent of digital telecommunications, the term was ...

Statistical Model

A statistical model is a mathematical model that embodies a set of statistical assumptions concerning the generation of sample data (and similar data from a larger population). A statistical model represents, often in considerably idealized form, the data-generating process. A statistical model is usually specified as a mathematical relationship between one or more random variables and other non-random variables. As such, a statistical model is "a formal representation of a theory" (Herman Adèr quoting Kenneth Bollen). All statistical hypothesis tests and all statistical estimators are derived via statistical models. More generally, statistical models are part of the foundation of statistical inference. Introduction: Informally, a statistical model can be thought of as a statistical assumption (or set of statistical assumptions) with a certain property: that the assumption allows us to calculate the probability of any event. As an example, consider a pair of ordinary six ...

PubMed

PubMed is a free search engine accessing primarily the MEDLINE database of references and abstracts on life sciences and biomedical topics. The United States National Library of Medicine (NLM) at the National Institutes of Health maintains the database as part of the Entrez system of information retrieval. From 1971 to 1997, online access to the MEDLINE database had been primarily through institutional facilities, such as university libraries. PubMed, first released in January 1996, ushered in the era of private, free, home- and office-based MEDLINE searching. The PubMed system was offered free to the public starting in June 1997. Content: In addition to MEDLINE, PubMed provides access to:

* older references from the print version of Index Medicus, back to 1951 and earlier
* references to some journals before they were indexed in Index Medicus and MEDLINE, for instance Science, BMJ, and Annals of Surgery
* very recent entries to records for an article ...

Google Scholar

Google Scholar is a freely accessible web search engine that indexes the full text or metadata of scholarly literature across an array of publishing formats and disciplines. Released in beta in November 2004, the Google Scholar index includes peer-reviewed online academic journals and books, conference papers, theses and dissertations, preprints, abstracts, technical reports, and other scholarly literature, including court opinions and patents. Google Scholar uses a web crawler, or web robot, to identify files for inclusion in the search results. For content to be indexed in Google Scholar, it must meet certain specified criteria. An earlier statistical estimate published in PLOS One using a mark and recapture method estimated approximately 80–90% coverage of all articles published in English, with an estimate of 100 million. (Trend Watch (2014), Nature 509(7501), 405, discussing Madian Khabsa and C Lee Giles (2014), The Number of Scholarly Documents on the Public W ...
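The excerpt does not say how the cited study applied the mark and recapture method. As a rough illustration of the general idea only, the textbook two-sample (Lincoln-Petersen) form estimates a total population from the overlap between two independent samples. The function name and all numbers below are hypothetical and are not taken from the study.

```python
def lincoln_petersen(first_sample: int, second_sample: int, overlap: int) -> float:
    """Two-sample mark and recapture estimate of total population size.

    first_sample:  items found ("marked") by the first source
    second_sample: items found by the second source
    overlap:       items found by both sources ("recaptured")
    """
    if overlap <= 0:
        raise ValueError("overlap must be positive to form an estimate")
    return first_sample * second_sample / overlap


# Made-up counts for two hypothetical document indexes.
estimated_total = lincoln_petersen(first_sample=1000, second_sample=800, overlap=200)

# Share of the estimated total that the first index covers.
coverage_of_first = 1000 / estimated_total
```

The intuition is that if the second source rediscovers a given fraction of the first source's items, the first source probably holds about that same fraction of the whole population.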

Content Analysis

Content analysis is the study of documents and communication artifacts, which might be texts of various formats, pictures, audio or video. Social scientists use content analysis to examine patterns in communication in a replicable and systematic manner. One of the key advantages of using content analysis to analyse social phenomena is its non-invasive nature, in contrast to simulating social experiences or collecting survey answers. Practices and philosophies of content analysis vary between academic disciplines. They all involve systematic reading or observation of texts or artifacts which are assigned labels (sometimes called codes) to indicate the presence of interesting, meaningful pieces of content. By systematically labeling the content of a set of texts, researchers can analyse patterns of content quantitatively using statistical methods, or use qualitative methods to analyse meanings of content within texts. Computers are increasingly used in content analysis to autom ...