|

Conditional Move

In computer architecture, predication is a feature that provides an alternative to Conditional (computer programming), conditional transfer of control flow, control, as implemented by conditional branch (computer science), branch machine instruction (computer science), instructions. Predication works by having conditional (''predicated'') non-branch instructions associated with a ''predicate'', a Boolean data type, Boolean value used by the instruction to control whether the instruction is allowed to modify the architectural state or not. If the predicate specified in the instruction is true, the instruction modifies the architectural state; otherwise, the architectural state is unchanged. For example, a predicated move instruction (a conditional move) will only modify the destination if the predicate is true. Thus, instead of using a conditional branch to select an instruction or a sequence of instructions to Execution (computing), execute based on the predicate that controls ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Branch Predictor

In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch (e.g., an if–then–else structure) will go before this is known definitively. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors play a critical role in achieving high performance in many modern pipelined microprocessor architectures. Two-way branching is usually implemented with a conditional jump instruction. A conditional jump can either be "taken" and jump to a different place in program memory, or it can be "not taken" and continue execution immediately after the conditional jump. It is not known for certain whether a conditional jump will be taken or not taken until the condition has been calculated and the conditional jump has passed the execution stage in the instruction pipeline (see fig. 1). Without branch prediction, the processor would have to wait until the conditional jump instruction has passed the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Datapath

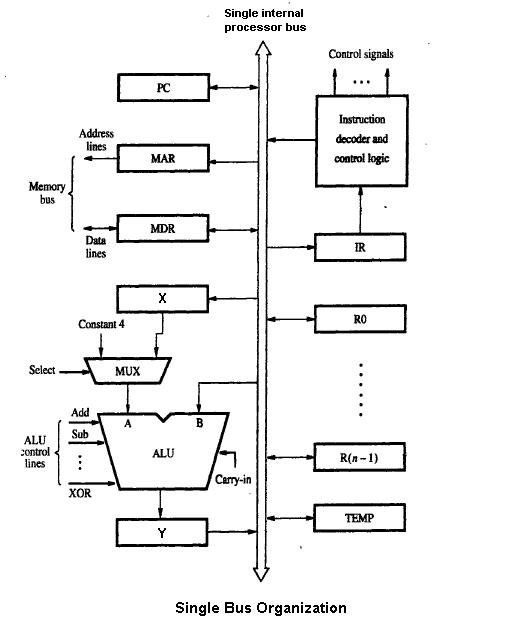

A data path is a collection of functional units such as arithmetic logic units (ALUs) or multipliers that perform data processing operations, registers, and buses. Along with the control unit it composes the central processing unit (CPU). A larger data path can be made by joining more than one data paths using multiplexers. A data path is the ALU, the set of registers, and the CPU's internal bus(es) that allow data to flow between them. The simplest design for a CPU uses one common internal bus. Efficient addition requires a slightly more complicated three-internal-bus structure. Many relatively simple CPUs have a 2-read, 1-write register file connected to the 2 inputs and 1 output of the ALU. During the late 1990s, there was growing research in the area of reconfigurable data paths—data paths that may be re-purposed at run-time using programmable fabric—as such designs may allow for more efficient processing as well as substantial power savings. Finite-state machi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Control Unit

The control unit (CU) is a component of a computer's central processing unit (CPU) that directs the operation of the processor. A CU typically uses a binary decoder to convert coded instructions into timing and control signals that direct the operation of the other units (memory, arithmetic logic unit and input and output devices, etc.). Most computer resources are managed by the CU. It directs the flow of data between the CPU and the other devices. John von Neumann included the control unit as part of the von Neumann architecture. In modern computer designs, the control unit is typically an internal part of the CPU with its overall role and operation unchanged since its introduction. Multicycle control units The simplest computers use a multicycle microarchitecture. These were the earliest designs. They are still popular in the very smallest computers, such as the embedded systems that operate machinery. In a computer, the control unit often steps through the instructio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Thumb-2

ARM (stylised in lowercase as arm, formerly an acronym for Advanced RISC Machines and originally Acorn RISC Machine) is a family of reduced instruction set computer, RISC instruction set architectures (ISAs) for central processing unit, computer processors. Arm Holdings develops the ISAs and licenses them to other companies, who build the physical devices that use the instruction set. It also designs and licenses semiconductor intellectual property core, cores that implement these ISAs. Due to their low costs, low power consumption, and low heat generation, ARM processors are useful for light, portable, battery-powered devices, including smartphones, laptops, and tablet computers, as well as embedded systems. However, ARM processors are also used for desktop computer, desktops and server (computing), servers, including Fugaku (supercomputer), Fugaku, the world's fastest supercomputer from 2020 to 2022. With over 230 billion ARM chips produced, , ARM is the most widely used ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Embedded System

An embedded system is a specialized computer system—a combination of a computer processor, computer memory, and input/output peripheral devices—that has a dedicated function within a larger mechanical or electronic system. It is embedded as part of a complete device often including electrical or electronic hardware and mechanical parts. Because an embedded system typically controls physical operations of the machine that it is embedded within, it often has real-time computing constraints. Embedded systems control many devices in common use. , it was estimated that ninety-eight percent of all microprocessors manufactured were used in embedded systems. Modern embedded systems are often based on microcontrollers (i.e. microprocessors with integrated memory and peripheral interfaces), but ordinary microprocessors (using external chips for memory and peripheral interface circuits) are also common, especially in more complex systems. In either case, the processor(s) us ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Condition Code Register

A status register, flag register, or condition code register (CCR) is a collection of status flag bits for a processor. Examples of such registers include FLAGS register in the x86 architecture, flags in the program status word (PSW) register in the IBM System/360 architecture through z/Architecture, and the application program status register (APSR) in the ARM Cortex-A architecture. The status register is a hardware register that contains information about the state of the processor. Individual bits are implicitly or explicitly read and/or written by the machine code instructions executing on the processor. The status register lets an instruction take action contingent on the outcome of a previous instruction. Typically, flags in the status register are modified as effects of arithmetic and bit manipulation operations. For example, a Z bit may be set if the result of the operation is zero and cleared if it is nonzero. Other classes of instructions may also modify the flags ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Branch Prediction

In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch (e.g., an if–then–else structure) will go before this is known definitively. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors play a critical role in achieving high performance in many modern pipelined microprocessor architectures. Two-way branching is usually implemented with a conditional jump instruction. A conditional jump can either be "taken" and jump to a different place in program memory, or it can be "not taken" and continue execution immediately after the conditional jump. It is not known for certain whether a conditional jump will be taken or not taken until the condition has been calculated and the conditional jump has passed the execution stage in the instruction pipeline (see fig. 1). Without branch prediction, the processor would have to wait until the conditional jump instruction has passed the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Instruction Scheduling

In computer science, instruction scheduling is a compiler optimization used to improve instruction-level parallelism, which improves performance on machines with instruction pipelines. Put more simply, it tries to do the following without changing the meaning of the code: * Avoid pipeline stalls by rearranging the order of instructions. * Avoid illegal or semantically ambiguous operations (typically involving subtle instruction pipeline timing issues or non-interlocked resources). The pipeline stalls can be caused by structural hazards (processor resource limit), data hazards (output of one instruction needed by another instruction) and control hazards (branching). Data hazards Instruction scheduling is typically done on a single basic block. In order to determine whether rearranging the block's instructions in a certain way preserves the behavior of that block, we need the concept of a ''data dependency''. There are three types of dependencies, which also happen to be the thr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bitwise Operation

In computer programming, a bitwise operation operates on a bit string, a bit array or a binary numeral (considered as a bit string) at the level of its individual bits. It is a fast and simple action, basic to the higher-level arithmetic operations and directly supported by the central processing unit, processor. Most bitwise operations are presented as two-operand instructions where the result replaces one of the input operands. On simple low-cost processors, typically, bitwise operations are substantially faster than division, several times faster than multiplication, and sometimes significantly faster than addition. While modern processors usually perform addition and multiplication just as fast as bitwise operations due to their longer instruction pipelines and other computer architecture, architectural design choices, bitwise operations do commonly use less power because of the reduced use of resources. Bitwise operators In the explanations below, any indication of a bit's p ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), in modern CPUs by far the largest part of them by chip area, but SRAM is not always used for all levels (of I- or D-cache), or even any level, sometimes some latter or all levels are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (M ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pipeline (computing)

In computing, a pipeline, also known as a data pipeline, is a set of data processing elements connected in series, where the output of one element is the input of the next one. The elements of a pipeline are often executed in parallel or in time-sliced fashion. Some amount of buffer storage is often inserted between elements. Concept and motivation Pipelining is a commonly used concept in everyday life. For example, in the assembly line of a car factory, each specific task—such as installing the engine, installing the hood, and installing the wheels—is often done by a separate work station. The stations carry out their tasks in parallel, each on a different car. Once a car has had one task performed, it moves to the next station. Variations in the time needed to complete the tasks can be accommodated by "buffering" (holding one or more cars in a space between the stations) and/or by "stalling" (temporarily halting the upstream stations), until the next station becomes avai ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |