
Center-of-gravity Method

The center-of-gravity method is a theoretical algorithm for convex optimization. It can be seen as a generalization of the bisection method from one-dimensional functions to multi-dimensional functions. It is theoretically important because it attains the optimal convergence rate. However, it has little practical value, as each step is computationally very expensive.

Input. Our goal is to solve a convex optimization problem of the form: minimize ''f''(''x'') s.t. ''x'' in ''G'', where ''f'' is a convex function and ''G'' is a convex subset of a Euclidean space $\mathbb{R}^n$. We assume that we have a "subgradient oracle": a routine that can compute a subgradient of ''f'' at any given point (if ''f'' is differentiable, then the only subgradient is the gradient $\nabla f$; but we do not assume that ''f'' is differentiable).

Method. The method is iterative. At each iteration ''t'', we keep a convex region $G_t$ that is guaranteed to contain the desired minimum. Initially we have $G_0 = G$. Then, ...
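The one-dimensional case makes the link to bisection concrete. The following is a minimal sketch, not the method as the excerpt states it: the excerpt is cut off before the update rule, so the cut used here (discard the side of the region on which the subgradient says ''f'' cannot decrease) is an assumption based on the usual textbook description. In one dimension the region is an interval and its center of gravity is the midpoint. The names `center_of_gravity_1d` and `subgrad` are hypothetical.

```python
from typing import Callable


def center_of_gravity_1d(subgrad: Callable[[float], float],
                         lo: float, hi: float, iters: int = 60) -> float:
    """Shrink the interval [lo, hi] around a minimizer of a convex function.

    `subgrad(x)` plays the role of the subgradient oracle: it returns any
    subgradient of the objective at x. The cut rule below is an assumption
    (the source excerpt is truncated before it describes the update).
    """
    for _ in range(iters):
        x = 0.5 * (lo + hi)      # center of gravity of an interval
        g = subgrad(x)
        if g > 0:                # f does not decrease to the right of x
            hi = x
        elif g < 0:              # f does not decrease to the left of x
            lo = x
        else:                    # 0 is a subgradient, so x is a minimizer
            return x
    return 0.5 * (lo + hi)


def subgrad_abs_shifted(x: float) -> float:
    """A subgradient of f(x) = |x - 1| (not differentiable at x = 1)."""
    if x > 1:
        return 1.0
    if x < 1:
        return -1.0
    return 0.0


x_min = center_of_gravity_1d(subgrad_abs_shifted, -3.0, 4.0)
```

Each step halves the interval, which mirrors bisection. In higher dimensions the expensive part is computing the center of gravity of a general convex region, which this sketch does not attempt.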

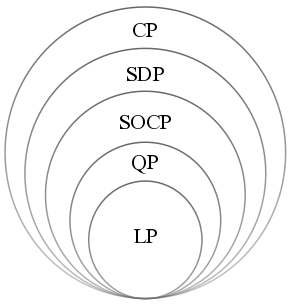

Convex Optimization

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

Definition (abstract form). A convex optimization problem is defined by two ingredients:

* The ''objective function'', which is a real-valued convex function of ''n'' variables, $f : \mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}$;
* The ''feasible set'', which is a convex subset $C \subseteq \mathbb{R}^n$.

The goal of the problem is to find some $\mathbf{x}^* \in C$ attaining $\inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}$. In general, there are three options regarding the existence of a solution:

* If such a point ''x''* exists, it is referred to as an ''optimal point'' or ''solution''; the set of all optimal points is called the ''optimal set''; and the problem is called ''solvable''.
* If f is unbou ...

Bisection Method

In mathematics, the bisection method is a root-finding method that applies to any continuous function for which one knows two values with opposite signs. The method consists of repeatedly bisecting the interval defined by these values and then selecting the subinterval in which the function changes sign, and which therefore must contain a root. It is a very simple and robust method, but it is also relatively slow. Because of this, it is often used to obtain a rough approximation to a solution, which is then used as a starting point for more rapidly converging methods. The method is also called the interval halving method, the binary search method, or the dichotomy method. For polynomials, more elaborate methods exist for testing the existence of a root in an interval (Descartes' rule of signs, Sturm's theorem, Budan's theorem). They allow extending the bisection method into efficient algorithms for finding all real roots of a polynomial; see Real-root isolation. The method ...
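The interval-halving loop described above can be sketched in a few lines. This is an illustrative implementation only; the function name `bisect_root` and the parameters `tol` and `max_iter` are assumptions, not part of the source text.

```python
from typing import Callable


def bisect_root(f: Callable[[float], float], a: float, b: float,
                tol: float = 1e-10, max_iter: int = 200) -> float:
    """Approximate a root of a continuous f on [a, b] by bisection.

    Requires f(a) and f(b) to have opposite signs (or one of them to be 0).
    """
    fa, fb = f(a), f(b)
    if fa == 0.0:
        return a
    if fb == 0.0:
        return b
    if fa * fb > 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    for _ in range(max_iter):
        mid = 0.5 * (a + b)
        fm = f(mid)
        # Stop on an exact root or once the half-width is below tol.
        if fm == 0.0 or 0.5 * (b - a) < tol:
            return mid
        # Keep the subinterval on which the sign change occurs.
        if fa * fm < 0:
            b, fb = mid, fm
        else:
            a, fa = mid, fm
    return 0.5 * (a + b)


# Example: the positive root of x^2 - 2 lies in [0, 2].
root = bisect_root(lambda x: x * x - 2.0, 0.0, 2.0)
```

Because the interval width halves at every step, reaching a tolerance of `tol` from an interval of width `w` takes roughly `log2(w / tol)` iterations, which is the "robust but relatively slow" behaviour noted above.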

Convex Function

In mathematics, a real-valued function is called convex if the line segment between any two distinct points on the graph of the function lies above or on the graph between the two points. Equivalently, a function is convex if its ''epigraph'' (the set of points on or above the graph of the function) is a convex set. In simple terms, a convex function's graph is shaped like a cup $\cup$ (or a straight line, like a linear function), while a concave function's graph is shaped like a cap $\cap$. A twice-differentiable function of a single variable is convex if and only if its second derivative is nonnegative on its entire domain. Well-known examples of convex functions of a single variable include a linear function $f(x) = cx$ (where $c$ is a real number), a quadratic function $cx^2$ ($c$ a nonnegative real number), and an exponential function $ce^x$ ($c$ a nonnegative real number). Convex functions pl ...
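The line-segment definition can be spot-checked numerically. The sketch below tests the inequality f(t·x + (1−t)·y) ≤ t·f(x) + (1−t)·f(y) on a finite set of sample points. Passing the check does not prove convexity; failing it does disprove it. The name `looks_convex`, the sample weights, and the tolerance are assumptions made for illustration.

```python
from typing import Callable, Sequence


def looks_convex(f: Callable[[float], float],
                 points: Sequence[float],
                 weights: Sequence[float] = (0.25, 0.5, 0.75),
                 tol: float = 1e-9) -> bool:
    """Return False if some sampled chord lies below the graph of f."""
    for x in points:
        for y in points:
            for t in weights:
                on_graph = f(t * x + (1 - t) * y)
                on_chord = t * f(x) + (1 - t) * f(y)
                if on_graph > on_chord + tol:
                    return False   # chord dips below the graph
    return True


samples = [-2.0, -1.0, 0.0, 0.5, 1.0, 3.0]
quadratic_ok = looks_convex(lambda x: x * x, samples)    # c*x^2 with c = 1
concave_ok = looks_convex(lambda x: -x * x, samples)     # a concave function
```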

Convex Set

In geometry, a set of points is convex if it contains every line segment between two points in the set. For example, a solid cube is a convex set, but anything that is hollow or has an indent, for example a crescent shape, is not convex. The boundary of a convex set in the plane is always a convex curve. The intersection of all the convex sets that contain a given subset ''A'' of Euclidean space is called the convex hull of ''A''. It is the smallest convex set containing ''A''. A convex function is a real-valued function defined on an interval with the property that its epigraph (the set of points on or above the graph of the function) is a convex set. Convex minimization is a subfield of optimization that studies the problem of minimizing convex functions over convex sets. The branch of mathematics devoted to the study of properties of convex sets and convex f ...

Subgradient

In mathematics, the subderivative (or subgradient) generalizes the derivative to convex functions which are not necessarily differentiable. The set of subderivatives at a point is called the subdifferential at that point. Subderivatives arise in convex analysis, the study of convex functions, often in connection to convex optimization. Let $f : I \to \mathbb{R}$ be a real-valued convex function defined on an open interval of the real line. Such a function need not be differentiable at all points: for example, the absolute value function $f(x) = |x|$ is non-differentiable when $x = 0$. However, as seen in the graph on the right (where $f(x)$ in blue has non-differentiable kinks similar to the absolute value function), for any $x_0$ in the domain of the function one can draw a line which goes through the point $(x_0, f(x_0))$ and which is everywhere either touching or below the graph of ''f''. The slope of such a line is called a ''subderivative''. Definition Rigorously, a ''subderivative'' of a c ...
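The geometric description above (a line through $(x_0, f(x_0))$ that never rises above the graph) translates directly into a numerical check. The sketch below tests whether a slope `c` satisfies f(x) ≥ f(x0) + c·(x − x0) on a set of sample points. The rigorous definition is truncated in the excerpt, so this inequality is taken from the informal description. The helper name `is_subderivative` and the tolerance are assumptions.

```python
from typing import Callable, Sequence


def is_subderivative(f: Callable[[float], float], x0: float, c: float,
                     samples: Sequence[float], tol: float = 1e-12) -> bool:
    """Check on `samples` that the line through (x0, f(x0)) with slope c
    stays on or below the graph of f."""
    return all(f(x) >= f(x0) + c * (x - x0) - tol for x in samples)


grid = [k / 10.0 for k in range(-20, 21)]   # sample points in [-2, 2]

# For f(x) = |x| at x0 = 0, every slope in [-1, 1] passes the check.
inside = is_subderivative(abs, 0.0, 0.5, grid)
# A steeper line crosses above the graph, so the check fails.
outside = is_subderivative(abs, 0.0, 1.5, grid)
```

At a point where the function is differentiable, such as $x_0 = 1$ for $|x|$, only the ordinary derivative (here 1) passes the check.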

Gradient

In vector calculus, the gradient of a scalar-valued differentiable function $f$ of several variables is the vector field (or vector-valued function) $\nabla f$ whose value at a point $p$ gives the direction and the rate of fastest increase. The gradient transforms like a vector under change of basis of the space of variables of $f$. If the gradient of a function is non-zero at a point $p$, the direction of the gradient is the direction in which the function increases most quickly from $p$, and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to minimize a function by gradient descent. In coordinate-free terms, the gradient of a function $f(\mathbf{r})$ may be defined by $df = \nabla f \cdot d\mathbf{r}$, where $df$ is the total infinitesimal change in $f$ for a ...
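As a small illustration of the relation $df = \nabla f \cdot d\mathbf{r}$, the gradient can be approximated by perturbing one coordinate at a time. This is a sketch using central finite differences, which is a numerical approximation chosen here for illustration and not something the excerpt prescribes. The name `numerical_gradient` and the step size `h` are assumptions.

```python
from typing import Callable, List, Sequence


def numerical_gradient(f: Callable[[Sequence[float]], float],
                       p: Sequence[float], h: float = 1e-6) -> List[float]:
    """Approximate the gradient of f at p with central differences."""
    grad = []
    for k in range(len(p)):
        forward = list(p)
        backward = list(p)
        forward[k] += h      # step along coordinate k
        backward[k] -= h     # step against coordinate k
        grad.append((f(forward) - f(backward)) / (2.0 * h))
    return grad


def example_f(v: Sequence[float]) -> float:
    """f(x, y) = x^2 + 3y, whose exact gradient is (2x, 3)."""
    return v[0] ** 2 + 3.0 * v[1]


g = numerical_gradient(example_f, [1.0, 2.0])   # close to [2.0, 3.0]
```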

Iterative Method

In computational mathematics, an iterative method is a mathematical procedure that uses an initial value to generate a sequence of improving approximate solutions for a class of problems, in which the ''i''-th approximation (called an "iterate") is derived from the previous ones. A specific implementation with termination criteria for a given iterative method like gradient descent, hill climbing, Newton's method, or quasi-Newton methods like BFGS, is an algorithm of an iterative method or a method of successive approximation. An iterative method is called ''convergent'' if the corresponding sequence converges for given initial approximations. A mathematically rigorous convergence analysis of an iterative method is usually performed; however, heuristic-based iterative methods are also common. In contrast, direct methods attempt to solve the problem by a finit ...

Center Of Mass

In physics, the center of mass of a distribution of mass in space (sometimes referred to as the barycenter or balance point) is the unique point at any given time where the weighted relative position of the distributed mass sums to zero. For a rigid body containing its center of mass, this is the point to which a force may be applied to cause a linear acceleration without an angular acceleration. Calculations in mechanics are often simplified when formulated with respect to the center of mass. It is a hypothetical point where the entire mass of an object may be assumed to be concentrated to visualise its motion. In other words, the center of mass is the particle equivalent of a given object for application of Newton's laws of motion. In the case of a single rigid body, the center of mass is fixed in relation to the body, and if the body has uniform density, it will be located at the centroid. The center of mass may be located outside the Phys ...
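For a finite set of point masses, the defining property (mass-weighted relative positions sum to zero) gives the familiar weighted average. The sketch below computes it and checks the property. The function names and the sample data are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def center_of_mass(masses: Sequence[float],
                   positions: Sequence[Sequence[float]]) -> Tuple[float, ...]:
    """Mass-weighted average position of a set of point masses."""
    total = sum(masses)
    dim = len(positions[0])
    return tuple(
        sum(m * p[k] for m, p in zip(masses, positions)) / total
        for k in range(dim)
    )


def weighted_offsets(masses: Sequence[float],
                     positions: Sequence[Sequence[float]],
                     origin: Sequence[float]) -> List[float]:
    """Sum of m_i * (r_i - origin), one entry per coordinate."""
    return [
        sum(m * (p[k] - origin[k]) for m, p in zip(masses, positions))
        for k in range(len(origin))
    ]


masses = [1.0, 3.0]
positions = [(0.0, 0.0), (4.0, 0.0)]
com = center_of_mass(masses, positions)                # (3.0, 0.0)
residual = weighted_offsets(masses, positions, com)    # [0.0, 0.0]
```

The heavier mass pulls the center three quarters of the way along the segment, and the weighted offsets measured from that point cancel.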

Linear Convergence

In mathematical analysis, particularly numerical analysis, the rate of convergence and order of convergence of a sequence that converges to a limit are any of several characterizations of how quickly that sequence approaches its limit. These are broadly divided into rates and orders of convergence that describe how quickly a sequence further approaches its limit once it is already close to it, called asymptotic rates and orders of convergence, and those that describe how quickly sequences approach their limits from starting points that are not necessarily close to their limits, called non-asymptotic rates and orders of convergence. Asymptotic behavior is particularly useful for deciding when to stop a sequence of numerical computations, for instance once a target precision has been reached with an iterative root-finding algorithm, but pre-asymptotic behavior is often crucial for determining whether to begin a sequence of computations at all, since it may be impossible or imprac ...

Ellipsoid Method

In mathematical optimization, the ellipsoid method is an iterative method for minimizing convex functions over convex sets. The ellipsoid method generates a sequence of ellipsoids whose volume uniformly decreases at every step, thus enclosing a minimizer of a convex function. When specialized to solving feasible linear optimization problems with rational data, the ellipsoid method is an algorithm which finds an optimal solution in a number of steps that is polynomial in the input size.

History. The ellipsoid method has a long history. As an iterative method, a preliminary version was introduced by Naum Z. Shor. In 1972, an approximation algorithm for real convex minimization was studied by Arkadi Nemirovski and David B. Yudin (Judin). As an algorithm for solving linear programming problems with rational data, the ellipsoid algorithm was studied by Leonid Khachiyan; Khachiyan's achievement was to prove the polynomial-time ...