
Blind Equalization

Blind equalization is a digital signal processing technique in which the transmitted signal is inferred (equalized) from the received signal using only the statistics of the transmitted signal; hence the word "blind" in the name. Blind equalization is essentially blind deconvolution applied to digital communications. The emphasis in blind equalization, however, is on online estimation of the equalization filter, which is the inverse of the channel impulse response, rather than on estimating the channel impulse response itself. This reflects how blind deconvolution is commonly used in digital communications systems: as a means of extracting the continuously transmitted signal from the received signal, with the channel impulse response itself of secondary importance. The estimated equalizer is then convolved with the received signal to yield an estimate of the transmitted signal. Problem statement (noiseless model): Assuming a linear time i ...
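The constant modulus algorithm (CMA) is one widely used way to adapt an equalizer blindly. It is not named in the text above, so treat the following as an illustrative sketch under stated assumptions: real-valued BPSK symbols (±1), a hypothetical two-tap channel x[n] = s[n] + 0.4 s[n-1], and hypothetical helper names `cma_equalize` and `cm_cost`. The equalizer never sees the transmitted symbols; it only penalizes deviations of y² from the constant R2, which equals 1 for BPSK.

```python
import random


def cma_equalize(x, num_taps=11, mu=0.005, r2=1.0):
    """Adapt an FIR equalizer with CMA(2,2); return (outputs, taps).

    Only the received samples x are used; no training symbols.
    """
    w = [0.0] * num_taps
    w[num_taps // 2] = 1.0          # centre-spike initialisation
    buf = [0.0] * num_taps          # buf[k] holds x[n - k]
    outputs = []
    for sample in x:
        buf = [sample] + buf[:-1]
        y = sum(wk * bk for wk, bk in zip(w, buf))
        err = y * (y * y - r2)      # derivative of (y^2 - r2)^2 / 4 w.r.t. y
        w = [wk - mu * err * bk for wk, bk in zip(w, buf)]
        outputs.append(y)
    return outputs, w


def cm_cost(ys):
    """Mean constant-modulus cost (y^2 - 1)^2 over a block of samples."""
    return sum((y * y - 1.0) ** 2 for y in ys) / len(ys)


# Hypothetical test signal: BPSK symbols through the channel [1, 0.4].
rng = random.Random(0)
symbols = [rng.choice((-1.0, 1.0)) for _ in range(5000)]
received = [s + 0.4 * (symbols[n - 1] if n > 0 else 0.0)
            for n, s in enumerate(symbols)]

outputs, taps = cma_equalize(received)
print(round(cm_cost(received), 3), round(cm_cost(outputs[-1000:]), 3))
```

The centre-spike initialization lets the taps represent a delayed inverse of the channel. CMA recovers the symbols only up to a delay and a sign, which a receiver has to resolve by other means.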

Digital Signal Processing

Digital signal processing (DSP) is the use of digital processing, such as by computers or more specialized digital signal processors, to perform a wide variety of signal processing operations. The digital signals processed in this manner are a sequence of numbers that represent samples of a continuous variable in a domain such as time, space, or frequency. In digital electronics, a digital signal is represented as a pulse train, which is typically generated by the switching of a transistor. Digital signal processing and analog signal processing are subfields of signal processing. DSP applications include audio and speech processing, sonar, radar and other sensor array processing, spectral density estimation, statistical signal processing, digital image processing, data compression, video coding, audio coding, image compression, signal processing for telecommunications, control systems, biomedical engineering, and seismology, among others. DSP can involve linear or nonli ...
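The idea of a digital signal as a sequence of numbers representing samples of a continuous variable can be made concrete with a short sketch: sample a continuous-time function at a fixed rate, then map each sample to an integer code with a uniform quantizer. The function names, the 5 Hz test tone, the 100 Hz sampling rate, and the 8-bit resolution are all illustrative assumptions.

```python
import math


def sample(signal, fs, duration):
    """Sample a continuous-time function signal(t) at fs Hz for `duration` s."""
    n = int(round(fs * duration))
    return [signal(k / fs) for k in range(n)]


def quantize(samples, bits, full_scale=1.0):
    """Uniform mid-tread quantizer returning signed integer codes.

    Codes are clipped to the two's-complement range of `bits` bits.
    """
    levels = 2 ** (bits - 1)
    step = full_scale / levels
    codes = []
    for v in samples:
        q = round(v / step)                       # nearest quantization level
        codes.append(max(-levels, min(levels - 1, q)))
    return codes


# A 5 Hz sine sampled at 100 Hz for one second, then quantized to 8 bits.
x = sample(lambda t: math.sin(2 * math.pi * 5 * t), fs=100, duration=1.0)
codes = quantize(x, bits=8)
```

A real converter would also apply an anti-aliasing filter before sampling; that step is omitted here.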

Convolution

In mathematics (in particular, functional analysis), convolution is a mathematical operation on two functions (f and g) that produces a third function (f*g) expressing how the shape of one is modified by the other. The term "convolution" refers both to the result function and to the process of computing it. It is defined as the integral of the product of the two functions after one is reflected about the y-axis and shifted. The choice of which function is reflected and shifted before the integral does not change the integral result (convolution is commutative). The integral is evaluated for all values of shift, producing the convolution function. Some features of convolution are similar to cross-correlation: for real-valued functions of a continuous or discrete variable, convolution (f*g) differs from cross-correlation (f ⋆ g) only in that either f or g is reflected about the y-axis in convolution; thus it is a cross-c ...
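For finite discrete sequences the convolution integral becomes a sum, (f*g)[n] = Σ_m f[m] g[n-m]. The sketch below (hypothetical function names, real-valued sequences assumed) computes the full linear convolution, and obtains cross-correlation by reversing one argument before convolving, which is the reflection relationship described above.

```python
def convolve(f, g):
    """Full linear convolution of two finite real sequences."""
    out = [0.0] * (len(f) + len(g) - 1)
    for i, fi in enumerate(f):
        for j, gj in enumerate(g):
            out[i + j] += fi * gj   # f[i] * g[j] lands at output index i + j
    return out


def cross_correlate(f, g):
    """Full cross-correlation of real sequences: convolve g with f reversed.

    Output index k corresponds to lag k - (len(f) - 1).
    """
    return convolve(f[::-1], g)


print(convolve([1, 2, 3], [0, 1, 0.5]))   # → [0.0, 1.0, 2.5, 4.0, 1.5]
print(cross_correlate([1, 2], [1, 2]))    # → [2.0, 5.0, 2.0]
```

Swapping the arguments of `convolve` gives the same result (commutativity), while the autocorrelation `cross_correlate(f, f)` is symmetric about zero lag and peaks there at the sequence's energy.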

Linear Predictive Coding

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital speech signal in compressed form, using the information of a linear predictive model. LPC is the most widely used method in speech coding and speech synthesis. It is a powerful speech analysis technique and a useful method for encoding good-quality speech at a low bit rate. LPC starts with the assumption that a speech signal is produced by a buzzer at the end of a tube (for voiced sounds), with occasional added hissing and popping sounds (for voiceless sounds such as sibilants and plosives). Although apparently crude, this source–filter model is actually a close approximation of the reality of speech production. The glottis (the space between the vocal folds) produces the buzz, which is characterized by its intensity (loudness) and frequency (pitch). The vocal tract (the throat and mouth) forms the tube, ...
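LPC coefficients are commonly estimated with the autocorrelation method: compute the autocorrelation of a speech frame and solve the resulting Toeplitz normal equations with the Levinson–Durbin recursion. The sketch below assumes the predictor convention x̂[n] = Σ_k a_k x[n-k], omits windowing and pre-emphasis, and uses hypothetical function names; it is not taken from any particular codec.

```python
def autocorrelation(x, max_lag):
    """Biased autocorrelation r[0..max_lag] of a frame (no normalisation)."""
    n = len(x)
    return [sum(x[t] * x[t + lag] for t in range(n - lag))
            for lag in range(max_lag + 1)]


def levinson_durbin(r, order):
    """Solve the Toeplitz normal equations for predictor coefficients.

    Returns (a, err) where x_hat[n] = sum(a[k-1] * x[n-k] for k = 1..order)
    and err is the final prediction-error power.
    """
    a = []                       # a[j] stores coefficient a_{j+1}
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] - sum(a[j] * r[i - 1 - j] for j in range(len(a)))
        k = acc / err            # reflection coefficient for order i
        a = [a[j] - k * a[i - 2 - j] for j in range(len(a))] + [k]
        err *= 1.0 - k * k       # prediction error shrinks at each order
    return a, err


# Normalised autocorrelation of an ideal AR(1) process with coefficient 0.9:
coeffs, residual = levinson_durbin([1.0, 0.9, 0.81], order=2)
print(coeffs, residual)
```

For this input the order-2 solution should come out close to a₁ = 0.9, a₂ = 0, because a first-order predictor already explains the process; the reflection coefficients produced along the way are also what lattice-based LPC coders transmit.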

Blind Deconvolution

In electrical engineering and applied mathematics, blind deconvolution is deconvolution without explicit knowledge of the impulse response function used in the convolution. This is usually achieved by making appropriate assumptions about the input in order to estimate the impulse response by analyzing the output. Blind deconvolution is not solvable without making assumptions about the input and the impulse response. Most algorithms for this problem assume that both the input and the impulse response lie in respective known subspaces. However, even with this assumption, blind deconvolution remains a very challenging non-convex optimization problem. In image processing, blind deconvolution is a deconvolution technique that permits recovery of the target scene from a single "blurred" image or a set of them in the presence of a poorly determined or unknown point spread function (PSF). Regular linear and non-linear deconvolution techniques utilize a known PSF. For blind ...
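A tiny numerical illustration of why assumptions are unavoidable: with no constraints, the input and the impulse response can trade a scale factor without changing the observed output, so the output alone cannot tell the two pairs below apart. The `convolve` helper and the sequences are hypothetical examples.

```python
def convolve(f, g):
    """Full linear convolution of two finite real sequences."""
    out = [0.0] * (len(f) + len(g) - 1)
    for i, fi in enumerate(f):
        for j, gj in enumerate(g):
            out[i + j] += fi * gj
    return out


# Two different (input, impulse response) pairs, related by a factor of 2.
observed_a = convolve([1, 2], [1, 2])
observed_b = convolve([2, 4], [0.5, 1])
print(observed_a, observed_b)   # identical outputs
```

Fixing a normalization (for example, unit-energy impulse response) removes this particular ambiguity; subspace or statistical assumptions like those mentioned above are needed to remove the rest.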

Principal Component Analysis

Principal component analysis (PCA) is a popular technique for analyzing large datasets containing a high number of dimensions/features per observation, increasing the interpretability of data while preserving the maximum amount of information, and enabling the visualization of multidimensional data. Formally, PCA is a statistical technique for reducing the dimensionality of a dataset. This is accomplished by linearly transforming the data into a new coordinate system where (most of) the variation in the data can be described with fewer dimensions than the initial data. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identify clusters of closely related data points. Principal component analysis has applications in many fields such as population genetics, microbiome studies, and atmospheric science. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where the i ...
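For two-dimensional data the principal components can be computed in closed form from the 2 × 2 sample covariance matrix, which makes the "new coordinate system" easy to see. This is a minimal sketch restricted to 2-D points (general PCA would use an eigenvalue or singular value decomposition of a d × d problem); `pca_2d` is a hypothetical name, and the signs of the returned eigenvectors are arbitrary.

```python
import math


def pca_2d(points):
    """Principal components of 2-D points via the 2x2 sample covariance.

    Returns ((lambda1, lambda2), (v1, v2), scores) with lambda1 >= lambda2,
    unit eigenvectors v1 and v2, and each centred point's coordinates in
    the (v1, v2) basis. Requires at least two points.
    """
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    centred = [(x - mx, y - my) for x, y in points]
    a = sum(x * x for x, _ in centred) / (n - 1)     # var(x)
    c = sum(y * y for _, y in centred) / (n - 1)     # var(y)
    b = sum(x * y for x, y in centred) / (n - 1)     # cov(x, y)
    mid, spread = (a + c) / 2, math.hypot((a - c) / 2, b)
    lam1, lam2 = mid + spread, mid - spread          # eigenvalues
    if b != 0:
        v1 = (b, lam1 - a)       # solves (C - lam1 I) v = 0
    else:
        v1 = (1.0, 0.0) if a >= c else (0.0, 1.0)
    norm = math.hypot(*v1)
    v1 = (v1[0] / norm, v1[1] / norm)
    v2 = (-v1[1], v1[0])         # orthogonal second component
    scores = [(x * v1[0] + y * v1[1], x * v2[0] + y * v2[1])
              for x, y in centred]
    return (lam1, lam2), (v1, v2), scores


# Points on the line y = x: all variance lies along the first component.
variances, components, scores = pca_2d([(0, 0), (1, 1), (2, 2), (3, 3)])
```

Keeping only the first coordinate of each score is the dimensionality reduction described above; here it loses nothing because the second variance is zero.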

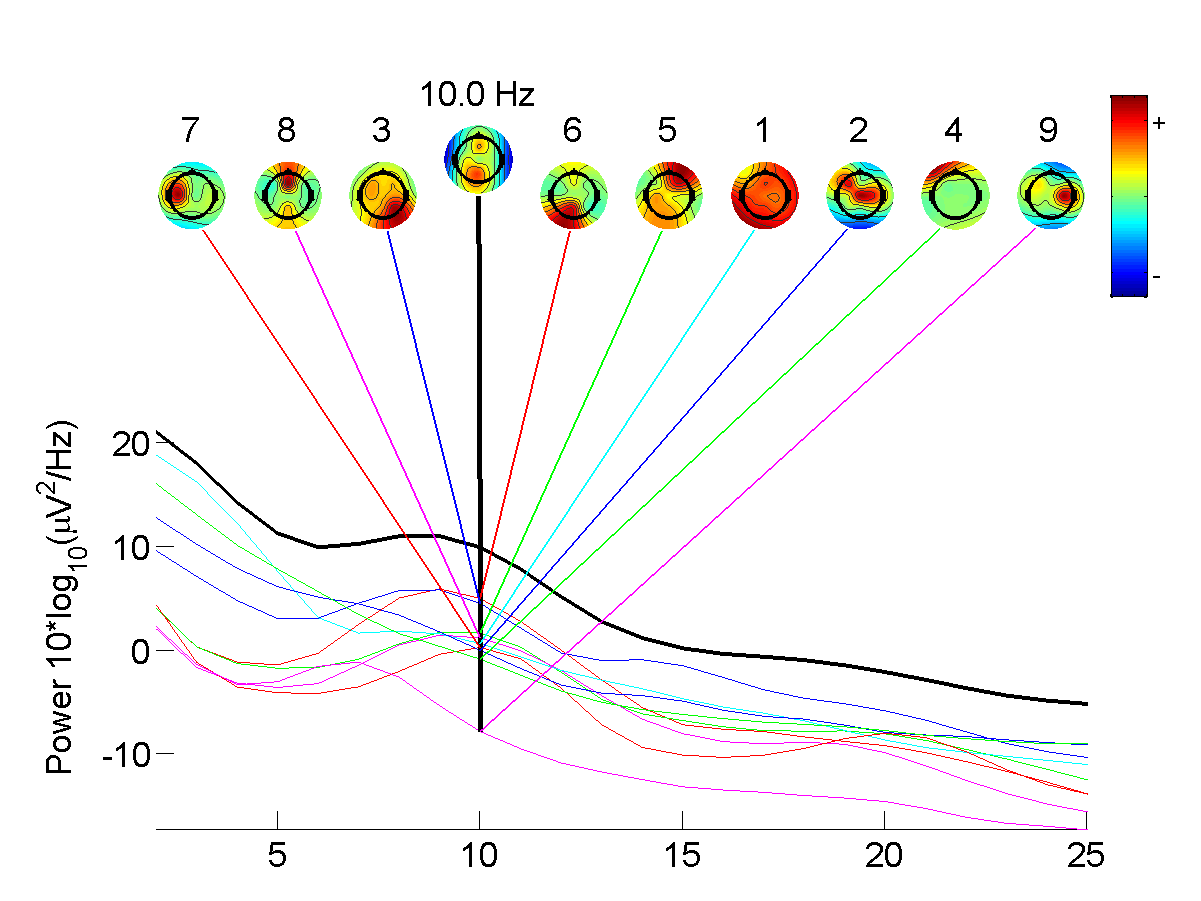

Independent Component Analysis

In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are statistically independent of each other. ICA is a special case of blind source separation. A common example application is the "cocktail party problem" of listening in on one person's speech in a noisy room. Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. For example, sound is usually a signal composed of the numerical addition, at each time t, of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence assumption is correct, blind ICA separation of a mixed signal gives very good results. It is also used for signals that are ...
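A minimal two-source sketch of the idea, under stated assumptions: the mixtures are first whitened (centred and decorrelated to unit variance), after which the only remaining unknown is a rotation, chosen here by brute-force search to maximize the sum of absolute excess kurtoses, a simple non-Gaussianity measure. This is not FastICA or any library's algorithm; the helper names, the two synthetic sources, and the mixing coefficients are hypothetical, and the recovered components are determined only up to order, sign, and scale.

```python
import math
import random


def _kurtosis(v):
    """Excess kurtosis m4 / m2^2 - 3 (zero for a Gaussian)."""
    n = len(v)
    mean = sum(v) / n
    m2 = sum((x - mean) ** 2 for x in v) / n
    m4 = sum((x - mean) ** 4 for x in v) / n
    return m4 / (m2 * m2) - 3.0


def whiten_2d(x1, x2):
    """Centre two mixtures and decorrelate them to unit variance."""
    n = len(x1)
    m1, m2 = sum(x1) / n, sum(x2) / n
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    a = sum(v * v for v in c1) / n
    c = sum(v * v for v in c2) / n
    b = sum(u * v for u, v in zip(c1, c2)) / n
    # Closed-form eigendecomposition of the covariance [[a, b], [b, c]].
    mid, spread = (a + c) / 2, math.hypot((a - c) / 2, b)
    lam1, lam2 = mid + spread, mid - spread
    e1 = (b, lam1 - a) if b != 0 else ((1.0, 0.0) if a >= c else (0.0, 1.0))
    norm = math.hypot(*e1)
    e1 = (e1[0] / norm, e1[1] / norm)
    e2 = (-e1[1], e1[0])
    z1 = [(e1[0] * u + e1[1] * v) / math.sqrt(lam1) for u, v in zip(c1, c2)]
    z2 = [(e2[0] * u + e2[1] * v) / math.sqrt(lam2) for u, v in zip(c1, c2)]
    return z1, z2


def ica_2d(x1, x2, steps=90):
    """Rotate whitened data to maximise |kurt(y1)| + |kurt(y2)|."""
    z1, z2 = whiten_2d(x1, x2)
    best = None
    for i in range(steps):
        theta = (math.pi / 2) * i / steps   # a quarter turn covers all cases
        c, s = math.cos(theta), math.sin(theta)
        y1 = [c * u + s * v for u, v in zip(z1, z2)]
        y2 = [-s * u + c * v for u, v in zip(z1, z2)]
        score = abs(_kurtosis(y1)) + abs(_kurtosis(y2))
        if best is None or score > best[0]:
            best = (score, y1, y2)
    return best[1], best[2]


def abs_corr(u, v):
    """Absolute Pearson correlation (ICA leaves sign and scale undetermined)."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    su = math.sqrt(sum((p - mu) ** 2 for p in u))
    sv = math.sqrt(sum((q - mv) ** 2 for q in v))
    return abs(cov / (su * sv))


# Hypothetical sources: uniform noise and a random binary sequence.
rng = random.Random(1)
s1 = [rng.uniform(-1.0, 1.0) for _ in range(2000)]
s2 = [rng.choice((-1.0, 1.0)) for _ in range(2000)]
x1 = [u + 0.6 * v for u, v in zip(s1, s2)]   # observed mixture 1
x2 = [0.4 * u + v for u, v in zip(s1, s2)]   # observed mixture 2
y1, y2 = ica_2d(x1, x2)
```

A quarter turn is enough because rotating a further 90° only swaps the outputs and flips a sign, which leaves the score unchanged. The search would fail if both sources were Gaussian, since every rotation of whitened Gaussian data looks the same; this is why ICA allows at most one Gaussian component.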

Higher-order Statistics

In statistics, the term higher-order statistics (HOS) refers to functions that use the third or higher power of a sample, as opposed to more conventional techniques of lower-order statistics, which use constant, linear, and quadratic terms (zeroth, first, and second powers). The third and higher moments, as used in the skewness and kurtosis, are examples of HOS, whereas the first and second moments, as used in the arithmetic mean (first) and variance (second), are examples of low-order statistics. HOS are particularly used in the estimation of shape parameters, such as skewness and kurtosis, as when measuring the deviation of a distribution from the normal distribution. In statistical theory, one long-established approach to higher-order statistics, for univariate and multivariate distributions, is through the use of cumulants and joint cumulants. Kendall, M. G., Stuart, A. (1969) The Advanced Theory of Statistics, Volume 1: Distribution Theory, 3rd Edition, Griffin. (Chapte ...
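Skewness, excess kurtosis, and the fourth cumulant can all be computed from sample central moments. This sketch uses plain (biased) sample moments with no small-sample correction, and the function names are hypothetical.

```python
def central_moment(x, k):
    """k-th sample central moment (divides by n, not n - 1)."""
    n = len(x)
    mean = sum(x) / n
    return sum((v - mean) ** k for v in x) / n


def skewness(x):
    """Sample skewness m3 / m2^(3/2); zero for symmetric data."""
    return central_moment(x, 3) / central_moment(x, 2) ** 1.5


def excess_kurtosis(x):
    """Sample excess kurtosis m4 / m2^2 - 3; zero for a Gaussian."""
    return central_moment(x, 4) / central_moment(x, 2) ** 2 - 3.0


def fourth_cumulant(x):
    """Fourth cumulant k4 = m4 - 3 m2^2 (the third cumulant equals m3)."""
    m2 = central_moment(x, 2)
    return central_moment(x, 4) - 3.0 * m2 * m2


print(skewness([0, 0, 0, 1]))             # right-skewed sample
print(excess_kurtosis([-1, 1, -1, 1]))    # binary data is strongly sub-Gaussian
```

Because every cumulant above the second vanishes for a Gaussian, nonzero third and fourth cumulants are a direct signal of non-Gaussianity, which is the property blind methods such as those above rely on.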

Least Mean Squares Filter

Least mean squares (LMS) algorithms are a class of adaptive filters used to mimic a desired filter by finding the filter coefficients that produce the least mean square of the error signal (the difference between the desired and the actual signal). LMS is a stochastic gradient descent method in that the filter is adapted based only on the error at the current time. It was invented in 1960 by Stanford University professor Bernard Widrow and his first Ph.D. student, Ted Hoff. Problem formulation: relationship to the Wiener filter. The realization of the causal Wiener filter looks a lot like the solution to the least squares estimate, except in the signal processing domain. The least squares solution for input matrix $\mathbf{X}$ and output vector $\boldsymbol{y}$ is

$$\hat{\boldsymbol{\beta}} = (\mathbf{X}^{\mathsf{T}}\mathbf{X})^{-1}\mathbf{X}^{\mathsf{T}}\boldsymbol{y}.$$

The FIR least mean squares filter is related to the Wiener filter, but minimizing the error criterion of the former does not rely on cross-corr ...
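A minimal LMS sketch in the system-identification setting, assuming a noise-free desired signal produced by a hypothetical three-tap "unknown" FIR system driven by white Gaussian noise. Each update uses only the current error, which is what makes LMS a stochastic gradient method: w ← w + μ e[n] x[n], with x[n] the vector of the most recent inputs. The step size μ = 0.01 and the name `lms_identify` are illustrative choices.

```python
import random


def lms_identify(x, d, num_taps, mu):
    """Adapt FIR taps w so that (w * x)[n] tracks d[n]; return (w, errors)."""
    w = [0.0] * num_taps
    buf = [0.0] * num_taps                            # buf[k] holds x[n - k]
    errors = []
    for xn, dn in zip(x, d):
        buf = [xn] + buf[:-1]
        y = sum(wk * bk for wk, bk in zip(w, buf))    # filter output
        e = dn - y                                    # instantaneous error
        w = [wk + mu * e * bk for wk, bk in zip(w, buf)]  # gradient step
        errors.append(e)
    return w, errors


rng = random.Random(0)
h_true = [0.5, -0.3, 0.2]               # hypothetical "unknown" system
x = [rng.gauss(0.0, 1.0) for _ in range(5000)]
d = [sum(h_true[k] * x[n - k] for k in range(len(h_true)) if n - k >= 0)
     for n in range(len(x))]
w, errors = lms_identify(x, d, num_taps=3, mu=0.01)
print([round(wk, 4) for wk in w])
```

With noise-free data the taps converge to `h_true`; with measurement noise they would fluctuate around it, and a smaller μ trades slower convergence for less fluctuation.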

Minimum Phase

In control theory and signal processing, a linear, time-invariant system is said to be minimum-phase if the system and its inverse are causal and stable. The most general causal LTI transfer function can be uniquely factored into a series of an all-pass and a minimum-phase system. The system function is then the product of the two parts, and in the time domain the response of the system is the convolution of the two part responses. The difference between a minimum-phase and a general transfer function is that a minimum-phase system has all of the poles and zeros of its transfer function in the left half of the s-plane (in discrete time, inside the unit circle of the z-plane). Since inverting a system function turns poles into zeros and vice versa, and poles to the right of the s-plane imaginary axis (or outside the z-plane unit circle) lead to unstable systems, only the class of minimum-phase systems is closed under in ...
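In discrete time, an FIR system B(z) = b₀ + b₁z⁻¹ + … + b_N z⁻ᴺ is minimum-phase when all its zeros lie strictly inside the unit circle, so that 1/B(z) is also causal and stable. Rather than computing roots, the sketch below applies the Schur–Cohn step-down recursion, which reports all zeros inside the unit circle exactly when every reflection coefficient has magnitude below 1. It assumes real coefficients with b₀ ≠ 0; the function name and the tolerance are hypothetical.

```python
def is_minimum_phase(b, tol=1e-12):
    """True if all zeros of B(z) = b[0] + b[1] z^-1 + ... lie strictly inside
    the unit circle, using the Schur-Cohn (step-down) recursion."""
    if abs(b[0]) < tol:
        raise ValueError("leading coefficient must be nonzero")
    a = [c / b[0] for c in b]           # normalise so that a[0] == 1
    while len(a) > 1:
        k = a[-1]                       # reflection coefficient of this order
        if abs(k) >= 1.0 - tol:
            return False                # a zero on or outside the unit circle
        m = len(a) - 1
        # Step down to order m - 1; a[0] stays equal to 1.
        a = [(a[i] - k * a[m - i]) / (1.0 - k * k) for i in range(m)]
    return True


print(is_minimum_phase([1.0, 0.4]))     # zero at z = -0.4
print(is_minimum_phase([0.4, 1.0]))     # zero at z = -2.5
```

The same test applied to an IIR denominator checks pole stability, which is why it appears in filter-design toolkits as well.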

Finite Impulse Response

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response (or response to any finite-length input) is of finite duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely (usually decaying). The impulse response (that is, the output in response to a Kronecker delta input) of an Nth-order discrete-time FIR filter lasts exactly N+1 samples (from first nonzero element through last nonzero element) before it settles to zero. FIR filters can be discrete-time or continuous-time, and digital or analog. Definition: for a causal discrete-time FIR filter of order N, each value of the output sequence is a weighted sum of the most recent input values:

$$y[n] = b_0 x[n] + b_1 x[n-1] + \cdots + b_N x[n-N] = \sum_{i=0}^{N} b_i \, x[n-i]$$

where x[n] is the input signal, y[n] is the output signa ...
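The difference equation y[n] = Σ b_i x[n-i] translates directly into a direct-form FIR filter. This sketch assumes zero initial conditions (x[n] = 0 for n < 0) and uses a hypothetical function name; feeding it a unit impulse returns the coefficients followed by zeros, which shows the N+1-sample impulse response.

```python
def fir_filter(b, x):
    """Direct-form FIR filter: y[n] = sum(b[i] * x[n - i]), x[n < 0] = 0."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for i, bi in enumerate(b):
            if n - i >= 0:              # skip inputs before the signal starts
                acc += bi * x[n - i]
        y.append(acc)
    return y


# Order-2 filter (N = 2): the impulse response lasts N + 1 = 3 samples.
print(fir_filter([0.5, 0.3, 0.2], [1, 0, 0, 0, 0]))   # → [0.5, 0.3, 0.2, 0.0, 0.0]
```

This loop costs N+1 multiply-adds per output sample; long filters are often implemented with FFT-based fast convolution instead.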

LTI System Theory

LTI can refer to:
* LTI – Lingua Tertii Imperii, a book by Victor Klemperer
* Language Technologies Institute, a division of Carnegie Mellon University
* Linear time-invariant system, an engineering theory that investigates the response of a linear, time-invariant system to an arbitrary input signal
* Licensed to Ill, the 1986 debut album by the Beastie Boys
* Lost Time Incident, an industrial or occupational injury
* Learning Tools Interoperability
* Louisiana Training Institute-East Baton Rouge, later known as the Jetson Center for Youth (JCY), a juvenile prison in Louisiana

Companies
* London Taxis International
* Larsen & Toubro Infotech

Biology and medicine
* Lymphoid tissue-inducer cell, see innate lymphoid cell. Innate lymphoid cells (ILCs) are the most recently discovered family of innate immune cells, derived from common lymphoid progenitors (CLPs). In response to pathogenic tissue damage, ILCs contribute to immunity via the secretion of signalling ...