
Bit Complexity

The bit is the most basic unit of information in computing and digital communication. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either 1 or 0, but other representations such as true/false, yes/no, on/off, or +/− are also widely used. The relation between these values and the physical states of the underlying storage or device is a matter of convention, and different assignments may be used even within the same device or program. A bit may be physically implemented with a two-state device. A contiguous group of binary digits is commonly called a bit string, a bit vector, or a single-dimensional (or multi-dimensional) bit array. A group of eight bits is called one byte, but historically the size of the byte has not been strictly defined. Frequently, half, full, double and quadruple words consist of a number of bytes which is ...
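As a small illustration of bits grouped into a byte, the sketch below packs a bit string into an integer and unpacks it again. It assumes an eight-bit byte and most-significant-bit-first ordering; both are conventions chosen for this example, and the helper names `bits_to_byte` and `byte_to_bits` are hypothetical.

```python
def bits_to_byte(bits: list[int]) -> int:
    """Pack eight bits (most significant bit first) into a value 0..255."""
    if len(bits) != 8 or any(b not in (0, 1) for b in bits):
        raise ValueError("expected exactly eight bits, each 0 or 1")
    value = 0
    for b in bits:
        value = (value << 1) | b  # shift left, then append the next bit
    return value


def byte_to_bits(value: int) -> list[int]:
    """Unpack a value 0..255 into eight bits, most significant bit first."""
    if not 0 <= value <= 255:
        raise ValueError("byte value out of range")
    return [(value >> shift) & 1 for shift in range(7, -1, -1)]


bits = [0, 1, 0, 0, 0, 0, 0, 1]
print(bits_to_byte(bits))  # 65
print(byte_to_bits(65))    # [0, 1, 0, 0, 0, 0, 0, 1]
```

Bit order is itself a convention: reading the same eight bits least-significant-bit first would yield 130 rather than 65.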

Units of Information

A unit of information is any unit of measure of digital data size. In digital computing, a unit of information is used to describe the capacity of a digital data storage device. In telecommunications, a unit of information is used to describe the throughput of a communication channel. In information theory, a unit of information is used to measure the information contained in messages and the entropy of random variables. Because data sizes range from very small to very large, units of information cover a wide range of sizes. Units are defined as multiples of a smaller unit, except for the smallest unit, which is based on convention and hardware design. Multiplier prefixes are used to describe relatively large sizes. For binary hardware, by far the most common hardware today, the smallest unit is the bit, a portmanteau of binary digit, which takes one of two possible values, typically shown as 0 and 1. The nibble, 4 bits, re ...
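To make the relationships between units concrete, the following sketch converts a bit count into nibbles, bytes, and two kinds of "kilobyte". It assumes 4-bit nibbles and 8-bit bytes. It shows both the decimal multiple (1,000 bytes, kB) and the binary multiple (1,024 bytes, written KiB in IEC notation) because prefixes are used with either meaning in practice. The function name `describe_bits` is hypothetical.

```python
BITS_PER_NIBBLE = 4
BITS_PER_BYTE = 8  # assumes the modern eight-bit byte (octet)


def describe_bits(n_bits: int) -> dict[str, float]:
    """Express a bit count in several common units of information."""
    n_bytes = n_bits / BITS_PER_BYTE
    return {
        "bits": float(n_bits),
        "nibbles": n_bits / BITS_PER_NIBBLE,
        "bytes": n_bytes,
        "kB (10^3 bytes)": n_bytes / 1000,   # decimal (SI) multiple
        "KiB (2^10 bytes)": n_bytes / 1024,  # binary multiple
    }


for unit, amount in describe_bits(16_384).items():
    print(f"{unit:>16}: {amount:g}")
```

For 16,384 bits this reports 4,096 nibbles, 2,048 bytes, 2.048 kB, and exactly 2 KiB.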

Shannon (unit)

The shannon (symbol: Sh) is a unit of information named after Claude Shannon, the founder of information theory. IEC 80000-13 defines the shannon as the information content associated with an event when the probability of the event occurring is 1/2. It is understood as such within the realm of information theory, and is conceptually distinct from the bit, a term used in data processing and storage to denote a single instance of a binary signal. A sequence of n binary symbols (such as those contained in computer memory or a binary data transmission) is properly described as consisting of n bits, but the information content of those n symbols may be more or less than n shannons, depending on the a priori probability of the actual sequence of symbols. The shannon also serves as a unit of the information entropy of an event, which is defined as the expected value of the information content of the event (i.e., the probability-weighted average of the information content of ...
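These definitions translate directly into code. The information content of an event with probability p is −log₂ p shannons, and entropy is the probability-weighted average of that quantity. The sketch below assumes a discrete distribution given as a list of probabilities. `information_content` and `entropy` are illustrative names, not a library API.

```python
import math


def information_content(p: float) -> float:
    """Self-information of an event with probability p, in shannons (log base 2)."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)


def entropy(probs: list[float]) -> float:
    """Expected information content (Shannon entropy) of a distribution, in shannons."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Events with probability 0 contribute nothing to the average.
    return sum(p * information_content(p) for p in probs if p > 0)


print(information_content(0.5))  # 1.0, the defining case of one shannon
print(entropy([0.5, 0.5]))       # 1.0 for a fair coin
print(entropy([0.9, 0.1]))       # roughly 0.469 for a biased coin
```

A biased binary source with probabilities 0.9 and 0.1 is still stored at one bit per symbol. On average, however, it carries only about 0.469 Sh per symbol, which is the distinction between bits and shannons drawn above.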

Voltage

Voltage, also known as (electrical) potential difference, electric pressure, or electric tension, is the difference in electric potential between two points. In a static electric field, it corresponds to the work needed per unit of charge to move a positive test charge from the first point to the second point. In the International System of Units (SI), the derived unit for voltage is the volt (V). The voltage between points can be caused by the build-up of electric charge (e.g., a capacitor) and by an electromotive force (e.g., electromagnetic induction in a generator). On a macroscopic scale, a potential difference can be caused by electrochemical processes (e.g., cells and batteries), the pressure-induced piezoelectric effect, and the thermoelectric effect. Since it is the difference in electric potential, it is a physical scalar ...
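The work-per-charge definition can be written as a formula. In this sketch, φ_A and φ_B are the electric potentials at the first and second points, q is the positive test charge, and W_{A→B} is the work needed to move it from A to B in a static field. The symbols are chosen here for illustration, and texts differ on the sign convention for "the voltage between A and B".

```latex
\Delta V = \varphi_B - \varphi_A = \frac{W_{A \to B}}{q},
\qquad 1\,\mathrm{V} = 1\,\mathrm{J}/\mathrm{C}
```

For example, if moving a 2 C charge from A to B requires 10 J of work, the potential difference is 10 J / 2 C = 5 V.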

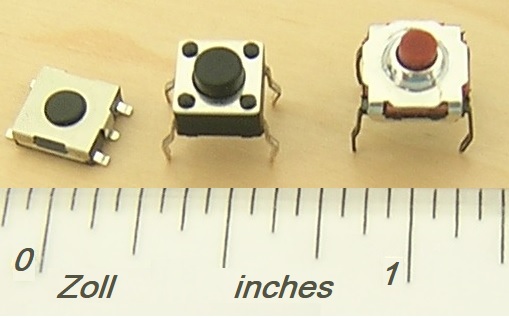

Switch

In electrical engineering, a switch is an electrical component that can disconnect or connect the conducting path in an electrical circuit, interrupting the electric current or diverting it from one conductor to another. The most common type of switch is an electromechanical device consisting of one or more sets of movable electrical contacts connected to external circuits. When a pair of contacts is touching, current can pass between them; when the contacts are separated, no current can flow. Switches are made in many different configurations: they may have multiple sets of contacts controlled by the same knob or actuator, and the contacts may operate simultaneously, sequentially, or alternately. A switch may be operated manually (for example, a light switch or a keyboard button), or may function as a sensing element to sense the position of a machine part, liquid level, pressure, or temperature, as in a thermostat. Many specialized forms exist, such as the toggle swit ...

State (computer science)

In information technology and computer science, a system is described as stateful if it is designed to remember preceding events or user interactions; the remembered information is called the state of the system. The set of states a system can occupy is known as its state space. In a discrete system, the state space is countable and often finite. The system's internal behaviour or interaction with its environment consists of separately occurring individual actions or events, such as accepting input or producing output, that may or may not cause the system to change its state. Examples of such systems are digital logic circuits and components, automata and formal languages, computer programs, and computers. The output of a digital circuit or deterministic computer program at any time is completely determined by its current inputs and its state.

Digital logic circuit state

Digital logic circuits can be divided into two types: combinational logic, whose output signals a ...
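A small finite-state machine illustrates the point that a deterministic system's output is determined by its current input and its state. The coin-operated turnstile below is a hypothetical textbook-style example, not taken from the source. The same input (`push`) produces different outputs depending on the stored state.

```python
from enum import Enum


class Turnstile(Enum):
    LOCKED = "locked"
    UNLOCKED = "unlocked"


# Transition table: (current state, input) -> (next state, output)
TRANSITIONS = {
    (Turnstile.LOCKED, "coin"): (Turnstile.UNLOCKED, "unlock"),
    (Turnstile.LOCKED, "push"): (Turnstile.LOCKED, "stay locked"),
    (Turnstile.UNLOCKED, "coin"): (Turnstile.UNLOCKED, "stay unlocked"),
    (Turnstile.UNLOCKED, "push"): (Turnstile.LOCKED, "let through"),
}


def run(inputs: list[str], state: Turnstile = Turnstile.LOCKED) -> tuple[Turnstile, list[str]]:
    """Feed inputs one at a time; each output depends on (state, input)."""
    outputs = []
    for symbol in inputs:
        state, out = TRANSITIONS[(state, symbol)]
        outputs.append(out)
    return state, outputs


final, outs = run(["push", "coin", "coin", "push"])
print(final, outs)
```

Keeping the behaviour in a single transition table makes the state space explicit: every reachable (state, input) pair appears exactly once.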

Flip-flop (electronics)

In electronics, flip-flops and latches are circuits that have two stable states and can store state information (a bistable multivibrator). The circuit can be made to change state by signals applied to one or more control inputs and will output its state, often along with its logical complement. It is the basic storage element in sequential logic. Flip-flops and latches are fundamental building blocks of digital electronic systems used in computers, communications, and many other types of systems. Flip-flops and latches are used as data storage elements to store a single bit (binary digit) of data; one of their two states represents a "one" and the other represents a "zero". Such data storage can be used for storage of state, and such a circuit is described as sequential logic in electronics. When used in a finite-state machine, the output and next state depend not only on its current input, but also on its current stat ...
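A latch's ability to hold one bit can be sketched in software by modelling a cross-coupled pair of NOR gates, which is one common way to build an SR (set-reset) latch. This is a behavioural sketch only. The gates are re-evaluated in a loop until the outputs settle, which ignores real propagation delays and timing. The class name `SRLatch` is hypothetical.

```python
def nor(a: int, b: int) -> int:
    """Two-input NOR gate on 0/1 values."""
    return 1 - (a | b)


class SRLatch:
    """Behavioural model of a cross-coupled NOR SR latch."""

    def __init__(self) -> None:
        self.q, self.q_bar = 0, 1  # start in the reset state

    def apply(self, s: int, r: int) -> int:
        """Apply set/reset inputs and return the settled output Q."""
        if s and r:
            raise ValueError("S = R = 1 is not allowed for a NOR SR latch")
        # Re-evaluate both gates until the outputs stop changing.
        for _ in range(4):
            q = nor(r, self.q_bar)
            q_bar = nor(s, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break
            self.q, self.q_bar = q, q_bar
        return self.q


latch = SRLatch()
print(latch.apply(1, 0))  # set   -> 1
print(latch.apply(0, 0))  # hold  -> 1 (the bit is remembered)
print(latch.apply(0, 1))  # reset -> 0
print(latch.apply(0, 0))  # hold  -> 0
```

With S = R = 0, the output simply repeats whatever was last set or reset. That retained value is the single stored bit described above.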

John W

John is a common English name and surname:

* John (given name)
* John (surname)

John may also refer to:

New Testament

Works

* Gospel of John, a title often shortened to John
* First Epistle of John, often shortened to 1 John
* Second Epistle of John, often shortened to 2 John
* Third Epistle of John, often shortened to 3 John

People

* John the Baptist (died ), regarded as a prophet and the forerunner of Jesus Christ
* John the Apostle (died ), one of the twelve apostles of Jesus Christ
* John the Evangelist, assigned author of the Fourth Gospel, once identified with the Apostle
* John of Patmos, also known as John the Divine or John the Revelator, the author of the Book of Revelation, once identified with the Apostle
* John the Presbyter, a figure either identified with or distinguished from the Apostle, the Evangelist and John of Patmos

Other people with the given name

Religious figures

* John, father of Andrew the Apostle and Saint Peter
* Pope John (disambigu ...

A Mathematical Theory of Communication

"A Mathematical Theory of Communication" is an article by mathematician Claude E. Shannon published in the Bell System Technical Journal in 1948. It was renamed The Mathematical Theory of Communication in the 1949 book of the same name, a small but significant title change made after realizing the generality of this work. With tens of thousands of citations, it is one of the most influential and cited scientific papers of all time, as it gave rise to the field of information theory. Scientific American referred to the paper as the "Magna Carta of the Information Age", while the electrical engineer Robert G. Gallager called it a "blueprint for the digital era". Historian James Gleick rated the paper as the most important development of 1948, placing the transistor second in the same time period, and emphasized that Shannon's paper was "even more profound and more fundamental" than the transistor. It is also noted that "as did relativity and qua ...

Ralph Hartley

Ralph Vinton Lyon Hartley (November 30, 1888 – May 1, 1970) was an American electronics researcher. He invented the Hartley oscillator and the Hartley transform, and contributed to the foundations of information theory. The hartley, a unit of information equal to one decimal digit, is named after him.

Biography

Hartley was born in Sprucemont, Nevada, and attended the University of Utah, receiving an A.B. degree in 1909. He became a Rhodes Scholar at St John's College, Oxford, in 1910, and received a B.A. degree in 1912 and a B.Sc. degree in 1913. He married Florence Vail of Brooklyn on March 21, 1916. The Hartleys had no children. He returned to the United States and was employed at the Research Laboratory of the Western Electric Company. In 1915 he was in charge of radio receiver development for the Bell System transatlantic radiotelephone tests. For this he developed the Hartley oscillator and also a neutralizing circuit to eliminate triode ...

IEEE 1541-2002

IEEE 1541-2002 is a standard issued in 2002 by the Institute of Electrical and Electronics Engineers (IEEE) concerning the use of prefixes for binary multiples of units of measurement related to digital electronics and computing. IEEE 1541-2021 revises and supersedes IEEE 1541-2002, which is now 'inactive'. While the International System of Units (SI) defines multiples based on powers of ten (like k = 10³, M = 10⁶, etc.), a different definition is sometimes used in computing, based on powers of two (like k = 2¹⁰, M = 2²⁰, etc.). This is due to the binary nature of current computing systems, which makes powers of two the simplest to calculate. In the early years of computing, there was no significant error in using the same prefix for either quantity (2¹⁰ = 1,024 and 10³ = 1,000 agree to two significant figures). Thus, the SI prefixes were borrowed to indicate nearby binary multiples for these computer-related quantities. Meanwhile, manufacturers of storage devices, such as hard disks, ...
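The comparison of 2¹⁰ with 10³ can be extended to the larger prefixes. The sketch below computes how far each binary multiple exceeds its decimal counterpart. The function name `prefix_gap_percent` is hypothetical.

```python
def prefix_gap_percent(power: int) -> float:
    """Percent by which the binary multiple 2**(10*power) exceeds
    the decimal multiple 10**(3*power)."""
    decimal = 10 ** (3 * power)  # SI meaning of the prefix
    binary = 2 ** (10 * power)   # customary binary meaning in computing
    return (binary - decimal) / decimal * 100


for power, prefix in enumerate(["k", "M", "G", "T"], start=1):
    gap = prefix_gap_percent(power)
    print(f"{prefix}: 2^{10 * power} exceeds 10^{3 * power} by {gap:.1f}%")
```

The gap grows from about 2.4% for k to about 4.9% for M, 7.4% for G, and 10.0% for T. An approximation that is harmless at two significant figures for kilo therefore becomes a noticeable discrepancy at larger scales.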

IEC 80000-13

ISO/IEC 80000, Quantities and units, is an international standard describing the International System of Quantities (ISQ). It was developed and promulgated jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). It serves as a style guide for using physical quantities and units of measurement, formulas involving them, and their corresponding units, in scientific and educational documents for worldwide use. The ISO/IEC 80000 family of standards was completed with the publication of the first edition of Part 1 in November 2009.

Overview

By 2021, ISO/IEC 80000 comprised 13 parts, two of which (parts 6 and 13) were developed by IEC and the remaining 11 by ISO, with a further three parts (15, 16, and 17) under development. Part 14 was withdrawn.

Subject areas

By 2021 the 80000 standard had 13 published parts. A description of each part is available online, with the complete parts for ...

Error Detection and Correction

In information theory and coding theory, with applications in computer science and telecommunications, error detection and correction (EDAC) or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases.

Definitions

Error detection is the detection of errors caused by noise or other impairments during transmission from the transmitter to the receiver. Error correction is the detection of errors and reconstruction of the original, error-free data.

History

In classical antiquity, copyists of the Hebrew Bible were paid for their work according to the number of stichs (lines of verse). As the prose books of the Bible were hardly ever w ...
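The difference between detection and correction can be shown with two of the simplest schemes. An even parity bit detects (but cannot locate) any single flipped bit. A triple repetition code corrects one flipped bit per group by majority vote. These schemes were chosen for brevity and are not taken from the text above. The function names are hypothetical.

```python
def even_parity_bit(bits: list[int]) -> int:
    """Parity bit that makes the total number of 1s even."""
    return sum(bits) % 2


def parity_ok(codeword: list[int]) -> bool:
    """Detects any odd number of flipped bits, but cannot say which ones."""
    return sum(codeword) % 2 == 0


def repeat3_encode(bits: list[int]) -> list[int]:
    """Send every bit three times."""
    return [b for b in bits for _ in range(3)]


def repeat3_decode(code: list[int]) -> list[int]:
    """Majority vote over each group of three; corrects one flip per group."""
    return [1 if sum(code[i:i + 3]) >= 2 else 0 for i in range(0, len(code), 3)]


data = [1, 0, 1, 1]

# Detection: append a parity bit, then let noise flip one bit.
sent = data + [even_parity_bit(data)]
received = sent.copy()
received[1] ^= 1
print(parity_ok(sent), parity_ok(received))  # True False

# Correction: the repetition code recovers the data despite one flip.
coded = repeat3_encode(data)
coded[4] ^= 1  # flip one bit inside the second group of three
print(repeat3_decode(coded) == data)  # True
```

The repetition code pays for correction with a threefold increase in transmitted bits. Practical codes aim to achieve similar protection with far less redundancy.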