
Binomial Inverse Theorem

In mathematics (specifically linear algebra), the Woodbury matrix identity, named after Max A. Woodbury, says that the inverse of a rank-''k'' correction of some matrix can be computed by doing a rank-''k'' correction to the inverse of the original matrix. Alternative names for this formula are the matrix inversion lemma, Sherman–Morrison–Woodbury formula or just Woodbury formula. However, the identity appeared in several papers before the Woodbury report. The Woodbury matrix identity is : \left(A + UCV\right)^{-1} = A^{-1} - A^{-1}U\left(C^{-1} + VA^{-1}U\right)^{-1}VA^{-1}, where ''A'', ''U'', ''C'' and ''V'' are conformable matrices: ''A'' is ''n''×''n'', ''C'' is ''k''×''k'', ''U'' is ''n''×''k'', and ''V'' is ''k''×''n''. This can be derived using blockwise matrix inversion. While the identity is primarily used on matrices, it holds in a general ring or in an Ab-category. Discussion. To prove this result, we will start by proving a simpler one. Replacing ''A'' and ''C'' with the ide ...
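
The identity can be checked directly on a small example. The sketch below assumes dense matrices stored as lists of rows and uses exact rational arithmetic (`fractions.Fraction`) so both sides compare equal without rounding; the helper names `matmul`, `matadd`, `inverse` and `woodbury_inverse`, and the example matrices, are illustrative and not taken from any library.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def matadd(X, Y, sign=1):
    """Entrywise X + sign * Y."""
    return [[x + sign * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def inverse(X):
    """Exact inverse by Gauss-Jordan elimination over Fractions."""
    n = len(X)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(X)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def woodbury_inverse(A_inv, U, C, V):
    """(A + UCV)^-1 = A^-1 - A^-1 U (C^-1 + V A^-1 U)^-1 V A^-1, given A^-1."""
    A_inv_U = matmul(A_inv, U)                        # n x k
    V_A_inv = matmul(V, A_inv)                        # k x n
    inner = matadd(inverse(C), matmul(V, A_inv_U))    # k x k
    correction = matmul(matmul(A_inv_U, inverse(inner)), V_A_inv)
    return matadd(A_inv, correction, sign=-1)


A = [[4, 1, 0], [1, 3, 1], [0, 1, 2]]   # n = 3
U = [[1], [0], [2]]                     # n x k with k = 1
C = [[3]]                               # k x k
V = [[0, 1, 1]]                         # k x n

direct = inverse(matadd(A, matmul(matmul(U, C), V)))
via_woodbury = woodbury_inverse(inverse(A), U, C, V)
print(direct == via_woodbury)  # True
```

When ''k'' is much smaller than ''n'' and ''A''⁻¹ is already known, the right-hand side only requires inverting ''k''×''k'' matrices, which is the usual reason to apply the identity.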

Mathematics

Mathematics is an area of knowledge that includes the topics of numbers, formulas and related structures, shapes and the spaces in which they are contained, and quantities and their changes. These topics are represented in modern mathematics with the major subdisciplines of number theory, algebra, geometry, and analysis, respectively. There is no general consensus among mathematicians about a common definition for their academic discipline. Most mathematical activity involves the discovery of properties of abstract objects and the use of pure reason to prove them. These objects consist of either abstractions from nature or (in modern mathematics) entities that are stipulated to have certain properties, called axioms. A ''proof'' consists of a succession of applications of deductive rules to already established results. These results include previously proved theorems, axioms, and (in case of abstraction from nature) some basic properties that are considered true starting points of ...

Kalman Filter

In statistics and control theory, Kalman filtering, also known as linear quadratic estimation (LQE), is an algorithm that uses a series of measurements observed over time, including statistical noise and other inaccuracies, and produces estimates of unknown variables that tend to be more accurate than those based on a single measurement alone, by estimating a joint probability distribution over the variables for each timeframe. The filter is named after Rudolf E. Kálmán, who was one of the primary developers of its theory. This digital filter is sometimes termed the ''Stratonovich–Kalman–Bucy filter'' because it is a special case of a more general, nonlinear filter developed somewhat earlier by the Soviet mathematician Ruslan Stratonovich. In fact, some of the special case linear filter's equations appeared in papers by Stratonovich that were published before summer 1960, when Kalman met with Stratonovich during a conference in Moscow. Kalman filtering has numerous tech ...
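
The predict/update cycle can be sketched in one dimension. This is a minimal illustration, assuming a scalar state that follows a random walk (x_k = x_{k-1} + w_k) and is observed directly with additive noise (z_k = x_k + v_k); the function name `kalman_1d`, the variances `q` and `r`, and the synthetic data are illustrative assumptions, not a general multivariate implementation.

```python
import random


def kalman_1d(measurements, x0, p0, q, r):
    """Scalar Kalman filter for x_k = x_{k-1} + w_k, z_k = x_k + v_k.

    q: variance of the process noise w_k; r: variance of the measurement noise v_k.
    Returns the posterior estimates and their variances after each measurement.
    """
    x, p = x0, p0
    estimates, variances = [], []
    for z in measurements:
        # Predict: the state model is the identity, so only the uncertainty grows.
        p = p + q
        # Update: blend prediction and measurement, weighted by the Kalman gain.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
        variances.append(p)
    return estimates, variances


random.seed(0)
true_value = 1.25
zs = [true_value + random.gauss(0, 0.5) for _ in range(200)]   # noise std 0.5, variance 0.25
est, var = kalman_1d(zs, x0=0.0, p0=1.0, q=1e-5, r=0.25)
print(f"last raw measurement error: {abs(zs[-1] - true_value):.3f}")
print(f"filtered estimate error:    {abs(est[-1] - true_value):.3f}")
```

The posterior variance `var[-1]` ends far below the single-measurement variance `r`, which is the sense in which the filtered estimate is more accurate than any one measurement.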

Lemmas In Linear Algebra

Lemma may refer to:
Language and linguistics:
* Lemma (morphology), the canonical, dictionary or citation form of a word
* Lemma (psycholinguistics), a mental abstraction of a word about to be uttered
Science and mathematics:
* Lemma (botany), a part of a grass plant
* Lemma (mathematics), a type of proposition
Other uses:
* ''Lemma'' (album), by John Zorn (2013)
* Lemma (logic), an informal contention
See also:
* Analemma, a diagram showing the variation of the position of the Sun in the sky
* Dilemma
* Lema (other). Lema may refer to:
** Lema tree frog, ''Hypsiboas lemai'', a species of frog
** ''Centrolene lema'', a synonym for ''Vitreorana gorzulae'', the Bolivar giant glass frog
** ''Kedestes lema'', the Lema ranger, a butterfly
** ...
* Lemmatisation
* Neurolemma, part of a neuron

Invertible Matrix

In linear algebra, an ''n''-by-''n'' square matrix ''A'' is called invertible (also nonsingular or nondegenerate) if there exists an ''n''-by-''n'' square matrix ''B'' such that :\mathbf{AB} = \mathbf{BA} = \mathbf{I}_n, where \mathbf{I}_n denotes the ''n''-by-''n'' identity matrix and the multiplication used is ordinary matrix multiplication. If this is the case, then the matrix ''B'' is uniquely determined by ''A'', and is called the (multiplicative) ''inverse'' of ''A'', denoted by ''A''⁻¹. Matrix inversion is the process of finding the matrix ''B'' that satisfies the prior equation for a given invertible matrix ''A''. A square matrix that is ''not'' invertible is called singular or degenerate. A square matrix is singular if and only if its determinant is zero. Singular matrices are rare in the sense that if a square matrix's entries are randomly selected from any finite region on the number line or complex plane, the probability that the matrix is singular is 0; that is, it will "almost never" be singular. Non-square matrices (''m''-by-''n'' matrices for which ''m'' ≠ ''n'') do not hav ...
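
For the 2-by-2 case the definition can be checked concretely. The sketch below assumes the closed-form inverse (1/(ad − bc))·[[d, −b], [−c, a]] and uses exact fractions; `inverse_2x2` and `matmul` are illustrative helper names, and the approach does not generalize beyond 2-by-2 matrices.

```python
from fractions import Fraction


def inverse_2x2(M):
    """Inverse of [[a, b], [c, d]] via (1 / (ad - bc)) * [[d, -b], [-c, a]]; None if singular."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        return None  # singular: no B with AB = BA = I exists
    f = Fraction(1, det)
    return [[d * f, -b * f], [-c * f, a * f]]


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


A = [[2, 1], [7, 4]]
B = inverse_2x2(A)
I2 = [[1, 0], [0, 1]]
print(matmul(A, B) == I2 and matmul(B, A) == I2)  # True
print(inverse_2x2([[1, 2], [2, 4]]))              # None (determinant 0)
```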

Determinant

In mathematics, the determinant is a scalar value that is a function of the entries of a square matrix. It characterizes some properties of the matrix and the linear map represented by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the linear map represented by the matrix is an isomorphism. The determinant of a product of matrices is the product of their determinants (the preceding property is a corollary of this one). The determinant of a matrix ''A'' is denoted det(''A''), det ''A'', or |''A''|. The determinant of a 2 × 2 matrix is :\begin{vmatrix} a & b\\c & d \end{vmatrix}=ad-bc, and the determinant of a 3 × 3 matrix is : \begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix}= aei + bfg + cdh - ceg - bdi - afh. The determinant of an ''n'' × ''n'' matrix can be defined in several equivalent ways. The Leibniz formula expresses the determinant as a sum of signed products of matrix entries such that each summand is the product of ''n'' different entries, and the number of these summands is ''n''!, the factorial of ''n'' ...
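
The Leibniz formula translates almost literally into code. This sketch sums sign(p)·a₁,p(1)···aₙ,p(n) over all permutations p, computing the sign from the number of inversions; the function names are illustrative, and the two calls reproduce the 2 × 2 and 3 × 3 formulas above on sample entries.

```python
from itertools import permutations
from math import prod


def sign(perm):
    """+1 for an even number of inversions, -1 for an odd number."""
    n = len(perm)
    inversions = sum(perm[i] > perm[j] for i in range(n) for j in range(i + 1, n))
    return -1 if inversions % 2 else 1


def det_leibniz(M):
    """Sum over all n! permutations p of sign(p) * M[0][p[0]] * ... * M[n-1][p[n-1]]."""
    n = len(M)
    return sum(sign(p) * prod(M[r][p[r]] for r in range(n))
               for p in permutations(range(n)))


print(det_leibniz([[2, -1], [3, 0]]))                    # 3   (= ad - bc)
print(det_leibniz([[2, -1, 3], [0, 4, 5], [1, 1, -2]]))  # -43 (= aei + bfg + cdh - ceg - bdi - afh)
```

Because the sum has ''n''! terms, this is only practical for very small matrices; numerical libraries typically compute determinants from an LU factorization instead.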

Matrix Determinant Lemma

In mathematics, in particular linear algebra, the matrix determinant lemma computes the determinant of the sum of an invertible matrix A and the dyadic product, uvᵀ, of a column vector u and a row vector vᵀ. Statement. Suppose A is an invertible square matrix and u, v are column vectors. Then the matrix determinant lemma states that :\det\left(\mathbf{A} + \mathbf{u}\mathbf{v}^\textsf{T}\right) = \left(1 + \mathbf{v}^\textsf{T}\mathbf{A}^{-1}\mathbf{u}\right)\,\det\left(\mathbf{A}\right)\,. Here, uvᵀ is the outer product of two vectors u and v. The theorem can also be stated in terms of the adjugate matrix of A: :\det\left(\mathbf{A} + \mathbf{u}\mathbf{v}^\textsf{T}\right) = \det\left(\mathbf{A}\right) + \mathbf{v}^\textsf{T}\mathrm{adj}\left(\mathbf{A}\right)\mathbf{u}\,, in which case it applies whether or not the square matrix A is invertible. Proof. First, the proof of the special case A = I follows from the equality: : \begin{pmatrix} \mathbf{I} & 0 \\ \mathbf{v}^\textsf{T} & 1 \end{pmatrix} \begin{pmatrix} \mathbf{I} + \mathbf{u}\mathbf{v}^\textsf{T} & \mathbf{u} \\ 0 & 1 \end{pmatrix} \begin{pmatrix} \mathbf{I} & 0 ...
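
Both forms of the lemma can be checked on a small integer example. The sketch below assumes a 3 × 3 matrix A with det(A) ≠ 0 so the inverse form applies, obtains A⁻¹u from the adjugate as adj(A)u / det(A), and uses a Leibniz-formula `det`; all helper names and numbers are illustrative.

```python
from fractions import Fraction
from itertools import permutations
from math import prod


def det(M):
    """Leibniz-formula determinant; adequate for the tiny matrices used here."""
    n = len(M)
    total = 0
    for p in permutations(range(n)):
        inversions = sum(p[i] > p[j] for i in range(n) for j in range(i + 1, n))
        total += (-1) ** inversions * prod(M[r][p[r]] for r in range(n))
    return total


def adjugate(M):
    """adj(M)[i][j] = (-1)^(i+j) * det(M with row j and column i removed)."""
    n = len(M)

    def minor(row, col):
        return [r[:col] + r[col + 1:] for k, r in enumerate(M) if k != row]

    return [[(-1) ** (i + j) * det(minor(j, i)) for j in range(n)] for i in range(n)]


A = [[3, 1, 0], [2, 5, 1], [0, 1, 4]]
u = [1, -2, 3]
v = [2, 0, 1]
n = len(A)

A_plus_uvT = [[A[i][j] + u[i] * v[j] for j in range(n)] for i in range(n)]
adj_A = adjugate(A)

# Adjugate form: det(A) + v^T adj(A) u (no inverse required).
adj_form = det(A) + sum(v[i] * adj_A[i][j] * u[j] for i in range(n) for j in range(n))

# Inverse form: (1 + v^T A^{-1} u) det(A), using A^{-1} u = adj(A) u / det(A).
A_inv_u = [Fraction(sum(adj_A[i][j] * u[j] for j in range(n)), det(A)) for i in range(n)]
inv_form = (1 + sum(v[i] * A_inv_u[i] for i in range(n))) * det(A)

print(det(A_plus_uvT), adj_form, inv_form)  # 156 156 156
```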

Schur Complement

In linear algebra and the theory of matrices, the Schur complement of a block matrix is defined as follows. Suppose ''p'', ''q'' are nonnegative integers, and suppose ''A'', ''B'', ''C'', ''D'' are respectively ''p'' × ''p'', ''p'' × ''q'', ''q'' × ''p'', and ''q'' × ''q'' matrices of complex numbers. Let :M = \begin{bmatrix} A & B \\ C & D \end{bmatrix} so that ''M'' is a (''p'' + ''q'') × (''p'' + ''q'') matrix. If ''D'' is invertible, then the Schur complement of the block ''D'' of the matrix ''M'' is the ''p'' × ''p'' matrix defined by :M/D := A - BD^{-1}C. If ''A'' is invertible, the Schur complement of the block ''A'' of the matrix ''M'' is the ''q'' × ''q'' matrix defined by :M/A := D - CA^{-1}B. In the case that ''A'' or ''D'' is singular, substituting a generalized inverse for the inverses in ''M/A'' and ''M/D'' yields the generalized Schur complement. The Schur complement is named after Issai Schur who used it to prove Schur's lemma, although it had been used previous ...
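
A quick way to see what M/D captures is the determinant factorization det(M) = det(D)·det(M/D), valid whenever ''D'' is invertible. The sketch below assumes ''p'' = ''q'' = 2 so that ''D'' can be inverted with the closed 2 × 2 formula; the helper names and block values are illustrative.

```python
from fractions import Fraction
from itertools import permutations
from math import prod


def det(M):
    """Leibniz-formula determinant (fine for a 4 x 4 matrix)."""
    n = len(M)
    return sum((-1) ** sum(p[i] > p[j] for i in range(n) for j in range(i + 1, n))
               * prod(M[r][p[r]] for r in range(n)) for p in permutations(range(n)))


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def inv2(D):
    """Exact inverse of an invertible 2 x 2 matrix."""
    (a, b), (c, d) = D
    f = Fraction(1, a * d - b * c)
    return [[d * f, -b * f], [-c * f, a * f]]


def schur_complement_of_D(A, B, C, D):
    """M/D = A - B D^{-1} C, assuming D is an invertible 2 x 2 block."""
    BDinvC = matmul(matmul(B, inv2(D)), C)
    return [[a - x for a, x in zip(ra, rx)] for ra, rx in zip(A, BDinvC)]


A = [[4, 1], [2, 3]]
B = [[1, 0], [2, 1]]
C = [[0, 1], [1, 1]]
D = [[2, 1], [1, 3]]
M = [ra + rb for ra, rb in zip(A, B)] + [rc + rd for rc, rd in zip(C, D)]

S = schur_complement_of_D(A, B, C, D)
print(det(M) == det(D) * det(S))  # True
```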

Numerical Partial Differential Equations

Numerical methods for partial differential equations is the branch of numerical analysis that studies the numerical solution of partial differential equations (PDEs). In principle, specialized methods for hyperbolic, parabolic or elliptic partial differential equations exist. Overview of methods. Finite difference method: in this method, functions are represented by their values at certain grid points, and derivatives are approximated through differences in these values. Method of lines: the method of lines (MOL, NMOL, NUMOL; Hamdi, S., W. E. Schiesser and G. W. Griffiths (2007), "Method of lines", ''Scholarpedia'', 2(7):2859) is a technique for solving partial differential equations (PDEs) in which all dimensions except one are discretized. MOL allows standard, general-purpose methods and software, developed for the numerical integration of ordinary differential equations (ODEs) and differential algebraic equations (DAEs), to be used. A large number of integration routines have been ...
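
The method of lines can be illustrated on the one-dimensional heat equation. This sketch assumes u_t = u_xx on [0, 1] with u = 0 at both ends and u(x, 0) = sin(πx), whose exact solution is e^(−π²t)·sin(πx). Space is discretized with central finite differences, leaving a system of ODEs in time; forward Euler stands in here for the general-purpose ODE integrator MOL would normally hand the system to. Function names and grid parameters are illustrative.

```python
import math


def heat_rhs(u, dx):
    """Semi-discrete heat equation u_t = u_xx: central second difference at each
    interior node, with u = 0 imposed at both ends (Dirichlet boundaries)."""
    left = [0.0] + u[:-1]
    right = u[1:] + [0.0]
    return [(ul - 2.0 * uc + ur) / dx**2 for ul, uc, ur in zip(left, u, right)]


def method_of_lines(n_interior=49, t_end=0.1, dt=1e-4):
    """Discretize x on [0, 1], then integrate the resulting ODE system in t.

    Forward Euler is used for brevity; it is only stable for dt <= dx**2 / 2.
    """
    dx = 1.0 / (n_interior + 1)
    xs = [(i + 1) * dx for i in range(n_interior)]
    u = [math.sin(math.pi * x) for x in xs]          # initial condition
    for _ in range(round(t_end / dt)):
        du = heat_rhs(u, dx)
        u = [ui + dt * di for ui, di in zip(u, du)]
    return xs, u


xs, u = method_of_lines()
exact = [math.exp(-math.pi**2 * 0.1) * math.sin(math.pi * x) for x in xs]
max_error = max(abs(a - b) for a, b in zip(u, exact))
print(f"max error vs exact solution: {max_error:.1e}")
```

Swapping forward Euler for an implicit or adaptive ODE solver changes only the time loop, which is the flexibility the method of lines is meant to provide.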

Numerical Linear Algebra

Numerical linear algebra, sometimes called applied linear algebra, is the study of how matrix operations can be used to create computer algorithms which efficiently and accurately provide approximate answers to questions in continuous mathematics. It is a subfield of numerical analysis, and a type of linear algebra. Computers use floating-point arithmetic and cannot exactly represent irrational data, so when a computer algorithm is applied to a matrix of data, it can sometimes increase the difference between a number stored in the computer and the true number that it is an approximation of. Numerical linear algebra uses properties of vectors and matrices to develop computer algorithms that minimize the error introduced by the computer, and is also concerned with ensuring that the algorithm is as efficient as possible. Numerical linear algebra aims to solve problems of continuous mathematics using finite precision computers, so its applications to the natural and social sciences ar ...
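
The gap between a stored number and the true value, and how an algorithm can amplify or contain it, shows up even in a sum. The sketch below uses Python's standard `fractions.Fraction` to expose the exact binary value stored for 0.1, then compares naive left-to-right summation with `math.fsum`, which tracks partial sums exactly and rounds once at the end. It illustrates the idea only; it is not a matrix algorithm.

```python
import math
from fractions import Fraction

# 0.1 has no exact binary floating-point representation; Fraction shows the stored value.
print(Fraction(0.1) == Fraction(1, 10))  # False
print(Fraction(0.1))                     # 3602879701896397/36028797018963968

values = [0.1] * 10
naive = sum(values)          # a rounding error is committed at every addition
careful = math.fsum(values)  # exact partial sums, a single rounding at the end
print(naive, careful)        # 0.9999999999999999 1.0
```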

Parametric Solution

In mathematics, a parametric equation defines a group of quantities as functions of one or more independent variables called parameters. Parametric equations are commonly used to express the coordinates of the points that make up a geometric object such as a curve or surface, in which case the equations are collectively called a parametric representation or parameterization (alternatively spelled as parametrisation) of the object. For example, the equations :\begin{align} x &= \cos t \\ y &= \sin t \end{align} form a parametric representation of the unit circle, where ''t'' is the parameter: A point (''x'', ''y'') is on the unit circle if and only if there is a value of ''t'' such that these two equations generate that point. Sometimes the parametric equations for the individual scalar output variables are combined into a single parametric equation in vectors: :(x, y)=(\cos t, \sin t). Parametric representations are generally nonunique (see the "Examples in two dimensions" section belo ...
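
A parametric representation is simply a function from the parameter to a point. The sketch below evaluates the unit-circle equations above at sample values of ''t'' and checks that every generated point satisfies x² + y² = 1; the second, illustrative parameterization (cos 2t, sin 2t) traces the same circle, which is one way to see that parameterizations are not unique. Function names are hypothetical.

```python
import math


def unit_circle(t):
    """x = cos t, y = sin t for the parameter t (in radians)."""
    return math.cos(t), math.sin(t)


def unit_circle_fast(t):
    """A different parameterization of the same circle: (cos 2t, sin 2t)."""
    return math.cos(2 * t), math.sin(2 * t)


ts = [2 * math.pi * k / 12 for k in range(12)]
on_circle = all(math.isclose(x * x + y * y, 1.0)
                for t in ts for x, y in (unit_circle(t), unit_circle_fast(t)))
print(on_circle)  # True
```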

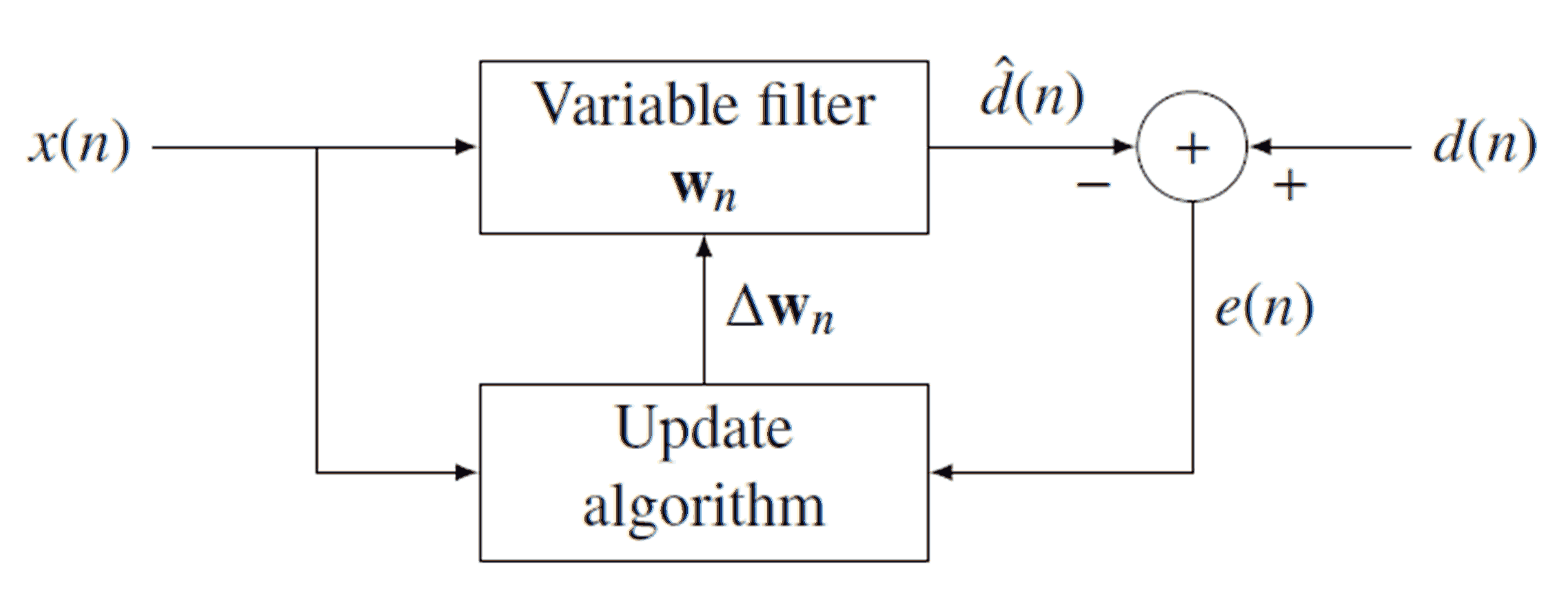

Recursive Least Squares

Recursive least squares (RLS) is an adaptive filter algorithm that recursively finds the coefficients that minimize a weighted linear least squares cost function relating to the input signals. This approach is in contrast to other algorithms such as the least mean squares (LMS) that aim to reduce the mean square error. In the derivation of the RLS, the input signals are considered deterministic, while for the LMS and similar algorithms they are considered stochastic. Compared to most of its competitors, the RLS exhibits extremely fast convergence. However, this benefit comes at the cost of high computational complexity. Motivation. RLS was discovered by Gauss but lay unused or ignored until 1950 when Plackett rediscovered the original work of Gauss from 1821. In general, the RLS can be used to solve any problem that can be solved by adaptive filters. For example, suppose that a signal d(n) is transmitted over an echoey, noisy channel that causes it to be received as :x(n)=\sum_{k=0}^{q} ...
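
The recursion can be sketched for the common FIR-identification setting. This is a minimal exponentially weighted RLS, assuming the desired signal is a noisy FIR filtering of the input; the forgetting factor `lam`, the initialization `P = delta * I`, the function name `rls` and the synthetic data are illustrative choices. The update of `P` (the inverse of the weighted input correlation matrix) is a rank-one application of the Woodbury identity (the Sherman–Morrison formula), which is why no matrix is ever inverted explicitly.

```python
import random


def rls(inputs, desired, order, lam=0.99, delta=100.0):
    """Exponentially weighted RLS estimate of FIR weights w, with
    desired[n] ≈ w[0]*inputs[n] + ... + w[order-1]*inputs[n-order+1].

    lam: forgetting factor (0 < lam <= 1); delta: P starts as delta * I (large = weak prior).
    """
    w = [0.0] * order
    P = [[delta if i == j else 0.0 for j in range(order)] for i in range(order)]
    for n in range(len(inputs)):
        # Regressor of the most recent `order` inputs, zero-padded before the start.
        x = [inputs[n - k] if n - k >= 0 else 0.0 for k in range(order)]
        Px = [sum(P[i][j] * x[j] for j in range(order)) for i in range(order)]
        denom = lam + sum(x[i] * Px[i] for i in range(order))
        gain = [v / denom for v in Px]
        error = desired[n] - sum(w[i] * x[i] for i in range(order))  # a priori error
        w = [w[i] + gain[i] * error for i in range(order)]
        # P <- (P - gain x^T P) / lam: rank-one update of the inverse correlation matrix.
        xP = [sum(x[i] * P[i][j] for i in range(order)) for j in range(order)]
        P = [[(P[i][j] - gain[i] * xP[j]) / lam for j in range(order)] for i in range(order)]
    return w


random.seed(1)
true_w = [0.5, -0.3, 0.2]
xs = [random.gauss(0, 1) for _ in range(500)]
ds = [sum(true_w[k] * (xs[n - k] if n - k >= 0 else 0.0) for k in range(3))
      + random.gauss(0, 0.01) for n in range(500)]
w_hat = rls(xs, ds, order=3)
print([round(v, 3) for v in w_hat])
```

Each step costs O(order²) operations, compared with O(order) for LMS, which is the computational price of the faster convergence mentioned above.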