
Adaptive Algorithm

An adaptive algorithm is an algorithm that changes its behavior at run time, based on the information available and on an a priori defined reward mechanism (or criterion). Such information could be the history of recently received data, information on the available computational resources, or other information related to the environment in which it operates, whether acquired at run time or known a priori. Among the most widely used adaptive algorithms is the Widrow-Hoff least mean squares (LMS) algorithm, which represents a class of stochastic gradient-descent algorithms used in adaptive filtering and machine learning. In adaptive filtering, LMS is used to mimic a desired filter by finding the filter coefficients that produce the least mean square of the error signal (the difference between the desired and the actual signal). For example, stable partition using no additional memory takes O(n lg n) time, but given O(n) memory it can run in O(n) time. As implemen ...
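As a rough illustration of the LMS idea described above, the following sketch adapts the coefficients of a small FIR filter so that its output tracks a desired signal. The names `tap_vector` and `lms_identify`, the step size `mu`, and the three "true" coefficients are assumptions made for this example; they do not come from the source.

```python
import random

def tap_vector(x, n, num_taps):
    """Return the num_taps most recent samples up to index n, newest first, zero-padded."""
    return [x[n - k] if n - k >= 0 else 0.0 for k in range(num_taps)]

def lms_identify(x, d, num_taps, mu):
    """Adapt FIR coefficients w so that the filter output tracks the desired signal d."""
    w = [0.0] * num_taps
    for n in range(len(x)):
        u = tap_vector(x, n, num_taps)
        y = sum(wk * uk for wk, uk in zip(w, u))   # actual filter output
        e = d[n] - y                               # error signal: desired minus actual
        # Stochastic gradient step on the squared error.
        w = [wk + mu * e * uk for wk, uk in zip(w, u)]
    return w

random.seed(0)
true_w = [0.5, -0.3, 0.1]  # hypothetical "desired filter" to be mimicked
x = [random.uniform(-1.0, 1.0) for _ in range(5000)]
d = [sum(c * u for c, u in zip(true_w, tap_vector(x, n, 3))) for n in range(len(x))]

w_hat = lms_identify(x, d, num_taps=3, mu=0.05)
print([round(c, 3) for c in w_hat])
```

In this noise-free setting the estimated coefficients should approach the hypothetical ones; with noisy data the step size `mu` trades convergence speed against steady-state error.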

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of mathematically rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a heuristic is an approach to solving problems without well-defined correct or optimal results (David A. Grossman, Ophir Frieder, Information Retrieval: Algorithms and Heuristics, 2nd edition, 2004). For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics, as there is no truly "correct" recommendation. As an e ...

AdaBoost

AdaBoost (short for Adaptive Boosting) is a statistical classification meta-algorithm formulated by Yoav Freund and Robert Schapire in 1995, who won the 2003 Gödel Prize for their work. It can be used in conjunction with many types of learning algorithms to improve performance. The outputs of multiple weak learners are combined into a weighted sum that represents the final output of the boosted classifier. AdaBoost is usually presented for binary classification, although it can be generalized to multiple classes or bounded intervals of real values. AdaBoost is adaptive in the sense that subsequent weak learners (models) are adjusted in favor of instances misclassified by previous models. In some problems, it can be less susceptible to overfitting than other learning algorithms. The individual learners can be weak, but as long as the performance of each one is slightly better than random guessing, the final model can be proven to converge to a strong learner. Although AdaBo ...
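The reweighting and weighted-sum steps described above can be sketched for binary labels in {-1, +1} using one-dimensional threshold "stumps" as weak learners. This is a minimal illustration under stated assumptions: the helper names (`train_stump`, `stump_predict`, `adaboost`, `predict`) and the ten-point toy data set are hypothetical and not taken from the source.

```python
import math

def stump_predict(x, thr, polarity):
    """Weak learner: predict `polarity` when x >= thr, otherwise the opposite label."""
    return polarity if x >= thr else -polarity

def train_stump(xs, ys, weights):
    """Return (weighted_error, thr, polarity) of the best stump for the current weights."""
    best = None
    for thr in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(w for w, x, y in zip(weights, xs, ys)
                      if stump_predict(x, thr, polarity) != y)
            if best is None or err < best[0]:
                best = (err, thr, polarity)
    return best

def adaboost(xs, ys, rounds):
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        err, thr, pol = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)      # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)    # vote of this weak learner
        ensemble.append((alpha, thr, pol))
        # Raise the weight of misclassified instances, lower the others.
        weights = [w * math.exp(-alpha * y * stump_predict(x, thr, pol))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Final classifier: sign of the weighted sum of weak-learner outputs."""
    score = sum(alpha * stump_predict(x, thr, pol) for alpha, thr, pol in ensemble)
    return 1 if score >= 0 else -1

xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]   # no single stump separates these labels
ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

On this toy data no single stump classifies every point correctly, but the weighted vote of three stumps does, which is the behavior the paragraph above describes.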

Adaptive Grammar

An adaptive grammar is a formal grammar that explicitly provides mechanisms within the formalism to allow its own production rules to be manipulated. Overview: John N. Shutt defines adaptive grammar as a grammatical formalism that allows rule sets (that is, sets of production rules) to be explicitly manipulated within a grammar. Types of manipulation include rule addition, deletion, and modification. Early history: The first description of grammar adaptivity (though not under that name) in the literature is generally (Christiansen, Henning, "Survey of Adaptable Grammars", ACM SIGPLAN Notices, Vol. 25 No. 11, pp. 35-44, Nov. 1990; Shutt, John N., "Recursive Adaptable Grammars", Master's Thesis, Worcester Polytechnic Institute, 1993, 16 December 2003 emended revision; Jackson, Quinn Tyler, "Adapting to Babel: Adaptivity and Context-Sensitivity in Parsing", Ibis Publications, Plymouth, Massachusetts, March 2006) taken to be in a paper by A ...

Adaptive Filter

An adaptive filter is a system with a linear filter that has a transfer function controlled by variable parameters and a means to adjust those parameters according to an optimization algorithm. Because of the complexity of the optimization algorithms, almost all adaptive filters are digital filters. Adaptive filters are required for some applications because some parameters of the desired processing operation (for instance, the locations of reflective surfaces in a reverberant space) are not known in advance or are changing. The closed-loop adaptive filter uses feedback in the form of an error signal to refine its transfer function. Generally speaking, the closed-loop adaptive process uses a cost function, which is a criterion for optimum performance of the filter, to feed an algorithm that determines how to modify the filter transfer function to minimize the cost on the next iteration. The most common cost function is the mean square of the error signal. As the pow ...

Adaptation (computer Science)

Adaptation in computer science is a process where an interactive system (adaptive system) adapts its behaviour to individual users based on information acquired about its user(s) and its environment. Adaptation is one of the three pillars of empiricism in Scrum. The need for adaptation: A software system passes through a potentially long software engineering cycle, and before delivery, requirements engineers, designers, and software developers realize the components of the system. However, it is impossible to anticipate the requirements of all users, and a single best or optimal system configuration is impossible. The active involvement of users and a clear understanding of user and task requirements are a challenge in the development of computer-based interactive systems for two reasons: * The potential user groups may not be known at the start of the project, and would need to be identified according to future scenarios of how the software system will be used. These groups nee ...

MiniDisc

MiniDisc (MD) is an erasable magneto-optical disc-based data storage format offering a capacity of 60, 74, or 80 minutes of digitized audio. Sony announced the MiniDisc in September 1992 and released it in November of that year for sale in Japan and in December in Europe, North America, and other countries. The music format was based on ATRAC audio data compression, Sony's own proprietary compression code. Its successor, Hi-MD, would later introduce the option of linear PCM digital recording to achieve audio quality comparable to that of a compact disc. MiniDiscs were very popular in Japan and found moderate success in Europe. Although the format was designed to succeed the cassette tape, it did not manage to supplant it globally. By March 2011, Sony had sold 22 million MD players, but discontinued further development. Sony ceased manufacturing and sold the last of the players by March 2013. On January 23, 2025, Sony announced they would end the production of recordable MD media ...

Adaptive Transform Acoustic Coding

Adaptive Transform Acoustic Coding (ATRAC) is a family of proprietary audio compression algorithms developed by Sony. MiniDisc was the first commercial product to incorporate ATRAC, in 1992. ATRAC allowed a relatively small disc like MiniDisc to have the same running time as a CD while storing audio information with minimal perceptible loss in quality. Improvements to the codec in the form of ATRAC3, ATRAC3plus, and ATRAC Advanced Lossless followed in 1999, 2002, and 2006 respectively. Files in ATRAC3 format originally had their own file extension; however, in most cases, the files would be stored in an OpenMG Audio container using a different extension. Previously, files that were encrypted with OpenMG had a separate extension, which was replaced starting in SonicStage v2.1. Encryption is no longer compulsory as of v3.2. Other MiniDisc manufacturers such as Sharp and Panasonic also implemented their own versions of the ATRAC codec. History: ATRAC was developed for Sony's MiniDisc format. ATR ...

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing signals, such as sound, images, potential fields, seismic signals, altimetry processing, and scientific measurements. Signal processing techniques are used to optimize transmissions, improve digital storage efficiency, correct distorted signals, improve subjective video quality, and detect or pinpoint components of interest in a measured signal. History: According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital refinement of these techniques can be found in the digital control systems of the 1940s and 1950s. In 1948, Claude Shannon wrote the influential paper "A Mathematical Theory of Communication" which was publis ...

Prediction By Partial Matching

Prediction by partial matching (PPM) is an adaptive statistical data compression technique based on context modeling and prediction. PPM models use a set of previous symbols in the uncompressed symbol stream to predict the next symbol in the stream. PPM algorithms can also be used to cluster data into predicted groupings in cluster analysis. Theory: Predictions are usually reduced to symbol rankings. Each symbol (a letter, bit or any other amount of data) is ranked before it is compressed, and the ranking system determines the corresponding codeword (and therefore the compression rate). In many compression algorithms, the ranking is equivalent to probability mass function estimation. Given the previous letters (or given a context), each symbol is assigned a probability. For instance, in arithmetic coding the symbols are ranked by their probabilities of appearing after previous symbols, and the whole sequence is compressed into a single fraction that is computed according t ...
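To make the idea of context-based symbol ranking concrete, here is a simplified sketch of a context model that counts which symbols follow each context of up to `max_order` previous symbols and ranks candidates using the longest context seen so far. It is not a full PPM coder: it omits escape probabilities and the arithmetic-coding stage, and the class name `ContextModel` and helper `train` are assumptions made for illustration.

```python
from collections import Counter, defaultdict

class ContextModel:
    """Counts next-symbol frequencies for every context of length 0..max_order."""

    def __init__(self, max_order):
        self.max_order = max_order
        self.counts = defaultdict(Counter)  # context string -> Counter of next symbols

    def update(self, history, symbol):
        """Record that `symbol` followed `history`, for every context length."""
        for order in range(min(self.max_order, len(history)) + 1):
            context = history[len(history) - order:]
            self.counts[context][symbol] += 1

    def predict(self, history):
        """Rank symbols by frequency in the longest previously seen context."""
        for order in range(min(self.max_order, len(history)), -1, -1):
            context = history[len(history) - order:]
            if context in self.counts:
                return [s for s, _ in self.counts[context].most_common()]
        return []

def train(text, max_order):
    model = ContextModel(max_order)
    for i, symbol in enumerate(text):
        model.update(text[:i], symbol)
    return model

model = train("abracadabra", max_order=2)
print(model.predict("ab"))   # ranking after the context "ab"
print(model.predict("xa"))   # "xa" is unseen, so the model falls back to the context "a"
```

Falling back to a shorter context when the longer one has never been seen is the "partial matching" part; a real PPM implementation would additionally assign an explicit escape probability to that fallback.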

Adaptive Huffman Coding

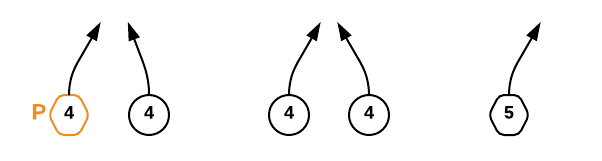

Adaptive Huffman coding (also called dynamic Huffman coding) is an adaptive coding technique based on Huffman coding. It permits building the code as the symbols are being transmitted, with no initial knowledge of the source distribution, which allows one-pass encoding and adaptation to changing conditions in the data. The benefit of the one-pass procedure is that the source can be encoded in real time, though it becomes more sensitive to transmission errors, since a single loss ruins the whole code, requiring error detection and correction. Algorithms: There are a number of implementations of this method; the most notable are FGK (Faller-Gallager-Knuth) and the Vitter algorithm. FGK algorithm: It is an online coding technique based on Huffman coding. Having no initial knowledge of occurrence frequencies, it permits dynamically adjusting the Huffman tree as data are being transmitted. In an FGK Huffman tree, a special external ...

Adaptive Coding

Adaptive coding refers to variants of entropy encoding methods of lossless data compression. They are particularly suited to streaming data, as they adapt to localized changes in the characteristics of the data, and don't require a first pass over the data to calculate a probability model. The cost paid for these advantages is that the encoder and decoder must be more complex to keep their states synchronized, and more computational power is needed to keep adapting the encoder/decoder state. Almost all data compression methods involve the use of a model, a prediction of the composition of the data. When the data matches the prediction made by the model, the encoder can usually transmit the content of the data at a lower information cost, by making reference to the model. This general statement is a bit misleading as general data compression algorithms would include the popular LZW and LZ77 algorithms, which are hardly comparable to compression techniques typically called a ...
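The point that data matching the model costs fewer bits can be illustrated by computing the ideal code length, the sum of -log2(p) over all symbols, under a simple count-based model that is updated after every symbol. This is only a sketch: the function name `adaptive_code_length`, the choice to start every count at 1, and the sample string are assumptions, and no actual entropy coder is implemented.

```python
import math

def adaptive_code_length(data, alphabet):
    """Ideal code length in bits under a frequency model updated after each symbol.

    A decoder can keep the same counts, because each update uses only symbols
    that have already been decoded; this is how the two sides stay synchronized.
    """
    counts = {s: 1 for s in alphabet}   # assumed starting point: uniform counts
    total = len(alphabet)
    bits = 0.0
    for s in data:
        bits += -math.log2(counts[s] / total)  # cost of s under the current model
        counts[s] += 1                         # adapt the model after coding s
        total += 1
    return bits

data = "aaaaaaaabaaaaaaa"
adaptive_bits = adaptive_code_length(data, "ab")
fixed_bits = len(data) * math.log2(2)   # fixed uniform model: 1 bit per symbol
print(round(adaptive_bits, 2), fixed_bits)
```

For this skewed input the adaptive model needs roughly half the bits of the fixed uniform model, and it does so in a single pass without a prior scan of the data.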

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction, or line coding, the means for mapping data onto a sig ...