
Apache Pinot

Apache Pinot is a column-oriented, open-source, distributed data store written in Java. Pinot is designed to execute OLAP queries with low latency. It is suited to contexts where fast analytics, such as aggregations, are needed on immutable data, possibly with real-time data ingestion (Pawar, Neha, "Pinot Joins Apache Incubator", ''LinkedIn Engineering'', 1 April 2019). The name Pinot comes from the Pinot grape vines, which are pressed into liquid that is used to produce a variety of different wines. The founders of the database chose the name as a metaphor for analyzing vast quantities of data from a variety of different file formats or streaming data sources. Pinot was first created at LinkedIn after the engineering staff determined that there were no off-the-shelf solutions that met the social networking site's requirements, such as predictable low latency, data freshness in seconds, fault tolerance, and scalability. Pinot is used in production by technology companies such as Uber, Mic ...
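The benefit of a column-oriented layout for aggregations can be illustrated with a minimal Python sketch. It does not use Pinot or its query API; the table contents and the `sum_by` helper are hypothetical and only show that a grouped aggregation needs to read the two columns involved.

```python
from collections import defaultdict

# Hypothetical column-oriented table: each column is stored as its own list,
# so an aggregation touches only the columns it needs.
columns = {
    "country": ["US", "DE", "US", "FR", "DE"],
    "clicks": [3, 5, 2, 7, 1],
    "user_agent": ["a", "b", "c", "d", "e"],  # never read by the query below
}


def sum_by(table, group_col, value_col):
    """Sum value_col grouped by group_col, scanning only those two columns."""
    totals = defaultdict(int)
    for key, value in zip(table[group_col], table[value_col]):
        totals[key] += value
    return dict(totals)


result = sum_by(columns, "country", "clicks")
print(result)
```

This is roughly what an OLAP query such as a grouped sum computes; a real store would add compression, indexes, and distribution across servers.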

Pinot Logo

Pinot may refer to:
*Pinot (grape), a grape family
*Pinot (surname)
*Pinot (restaurant), a restaurant by chef Joachim Splichal

See also
*Pino (disambiguation)
*''Pinot simple flic'', a 1984 French crime-comedy film directed by Gérard Jugnot. Plot: The film starts in the 13th arrondissement of Paris in 1984. Robert Pinot is a police officer as ...

Apache ZooKeeper

Apache ZooKeeper is an open-source server for highly reliable distributed coordination of cloud applications. It is a project of the Apache Software Foundation. ZooKeeper is essentially a service for distributed systems offering a hierarchical key-value store, which is used to provide a distributed configuration service, synchronization service, and naming registry for large distributed systems (see ''Use cases''). ZooKeeper was a sub-project of Hadoop but is now a top-level Apache project in its own right. Overview: ZooKeeper's architecture supports high availability through redundant services. Clients can thus ask another ZooKeeper leader if the first fails to answer. ZooKeeper nodes store their data in a hierarchical name space, much like a file system or a tree data structure. Clients can read from and write to the nodes and in this way have a shared configuration service. ZooKeeper can be viewed as an atomic broadcast system, through which updates are totally or ...
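The hierarchical name space described above can be pictured with a small in-memory sketch. This is not the ZooKeeper client API: the `ZNodeTree` class and its method names are hypothetical, and it models only path-addressed nodes that hold data and have children, without replication, watches, or ordering guarantees.

```python
class ZNodeTree:
    """Toy in-memory hierarchical key-value store (not the ZooKeeper API)."""

    def __init__(self):
        # The root node always exists and holds empty data.
        self._data = {"/": b""}

    def create(self, path, value=b""):
        """Create a node; its parent must already exist."""
        parent = path.rsplit("/", 1)[0] or "/"
        if parent not in self._data:
            raise KeyError(f"parent {parent} does not exist")
        if path in self._data:
            raise KeyError(f"{path} already exists")
        self._data[path] = value

    def get(self, path):
        """Return the data stored at path."""
        return self._data[path]

    def set(self, path, value):
        """Overwrite the data of an existing node."""
        if path not in self._data:
            raise KeyError(path)
        self._data[path] = value

    def children(self, path):
        """List the names of the direct children of path."""
        prefix = path.rstrip("/") + "/"
        return sorted(
            p[len(prefix):]
            for p in self._data
            if p != "/" and p.startswith(prefix) and "/" not in p[len(prefix):]
        )


tree = ZNodeTree()
tree.create("/config")
tree.create("/config/db_host", b"db1.example.internal")
tree.set("/config/db_host", b"db2.example.internal")
print(tree.children("/config"), tree.get("/config/db_host"))
```

Several clients reading and writing the same paths is what turns such a tree into a shared configuration service.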

Data Warehousing

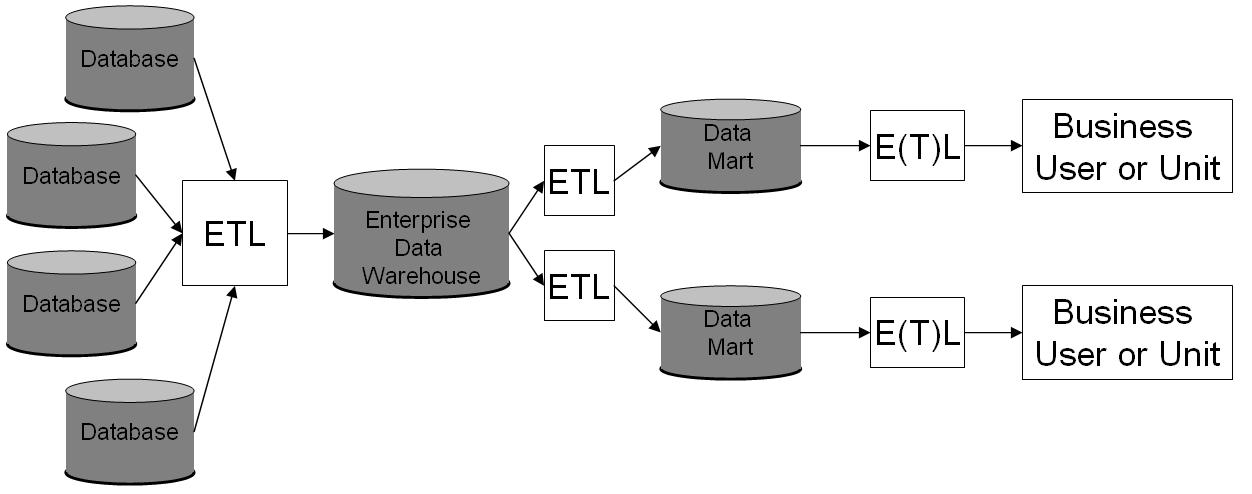

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in a single place, where it is used to create analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from the operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system. ETL-based data warehousing: The typical extract, transform, load (ETL)-based data warehouse uses staging, data integration, and access layers t ...

Google Cloud Storage

Google Cloud Storage is a RESTful online file storage web service for storing and accessing data on Google Cloud Platform infrastructure. The service combines the performance and scalability of Google's cloud with advanced security and sharing capabilities. It is an ''Infrastructure as a Service'' (IaaS), comparable to Amazon S3. Unlike Google Drive, and according to the respective service specifications, Google Cloud Storage appears to be more suitable for enterprises. Feasibility: User activation is resourced through the API Developer Console. Google Account holders must first access the service by logging in and then agreeing to the Terms of Service, followed by enabling a billing structure. Design: Google Cloud Storage stores objects (originally limited to 100 GiB, currently up to 5 TiB) in projects which are organized into buckets. All requests are authorized using Identity and Access Management policies or access control lists associated with a user or service accoun ...

Microsoft Azure

Microsoft Azure, often referred to as Azure, is a cloud computing platform operated by Microsoft for application management via data centers distributed around the world. Microsoft Azure has multiple capabilities such as software as a service (SaaS), platform as a service (PaaS) and infrastructure as a service (IaaS) and supports many different programming languages, tools, and frameworks, including both Microsoft-specific and third-party software and systems. Azure, announced at Microsoft's Professional Developers Conference (PDC) in October 2008, went by the internal project codename "Project Red Dog", and was formally released in February 2010 as Windows Azure, before being renamed Microsoft Azure on March 25, 2014. Services: Microsoft Azure uses large-scale virtualization at Microsoft data centers worldwide and it offers more than 600 services. Compute services: Virtual machines, infrastructure as a service (IaaS) allowing users to launch general-purpos ...

Amazon S3

Amazon S3 or Amazon Simple Storage Service is a service offered by Amazon Web Services (AWS) that provides object storage through a web service interface. Amazon S3 uses the same scalable storage infrastructure that Amazon.com uses to run its e-commerce network. Amazon S3 can store any type of object, which allows uses like storage for Internet applications, backups, disaster recovery, data archives, data lakes for analytics, and hybrid cloud storage. AWS launched Amazon S3 in the United States on March 14, 2006, then in Europe in November 2007. Design: Amazon S3 manages data with an object storage architecture which aims to provide scalability, high availability, and low latency with high durability. The basic storage units of Amazon S3 are objects which are organized into buckets. Each object is identified by a unique, user-assigned key. Buckets can be managed using the console provided by Amazon S3, programmatically with the AWS SDK, or the REST application pro ...

Hadoop

Apache Hadoop is a collection of open-source software utilities that facilitates using a network of many computers to solve problems involving massive amounts of data and computation. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware. All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common occurrences and should be automatically handled by the framework. The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part which is a MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster. It then transfers packaged code into nodes to process the data in parallel. Thi ...

Batch Processing

Computerized batch processing is a method of running software programs called jobs in batches automatically. While users are required to submit the jobs, no other interaction by the user is required to process the batch. Batches may automatically be run at scheduled times as well as being run contingent on the availability of computer resources. History: The term "batch processing" originates in the traditional classification of methods of production as job production (one-off production), batch production (production of a "batch" of multiple items at once, one stage at a time), and flow production (mass production, all stages in process at once). Early history: Early computers were capable of running only one program at a time. Each user had sole control of the machine for a scheduled period of time. They would arrive at the computer with program and data, often on punched paper cards and magnetic or paper tape, and would load their program, run and debug it, and carry off thei ...

Apache Kafka

Apache Kafka is a distributed event store and stream-processing platform. It is an open-source system developed by the Apache Software Foundation written in Java and Scala. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. Kafka can connect to external systems (for data import/export) via Kafka Connect, and provides the Kafka Streams libraries for stream processing applications. Kafka uses a binary TCP-based protocol that is optimized for efficiency and relies on a "message set" abstraction that naturally groups messages together to reduce the overhead of the network roundtrip. This "leads to larger network packets, larger sequential disk operations, contiguous memory blocks [...] which allows Kafka to turn a bursty stream of random message writes into linear writes." History: Kafka was originally developed at LinkedIn, and was subsequently open sourced in early 2011. Jay Kreps, Neha Narkhede and Jun Rao helped co-cre ...
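The idea behind grouping messages into sets can be sketched in a few lines of Python. This is not Kafka's wire protocol or client library; `batch_messages` and the size limit are hypothetical and only illustrate how many small writes become a few larger sequential ones.

```python
def batch_messages(messages, max_batch_bytes):
    """Group messages into batches of at most max_batch_bytes each.

    A single message larger than the limit is placed in a batch of its own.
    """
    batches, current, size = [], [], 0
    for msg in messages:
        # Flush the current batch if adding this message would exceed the limit.
        if current and size + len(msg) > max_batch_bytes:
            batches.append(current)
            current, size = [], 0
        current.append(msg)
        size += len(msg)
    if current:
        batches.append(current)
    return batches


messages = [b"aa", b"bbb", b"c", b"dddd"]
batches = batch_messages(messages, max_batch_bytes=5)
# Each batch would be sent or written as one unit instead of one call per message.
print(len(messages), "messages ->", len(batches), "batches")
```

Fewer, larger units mean fewer network round trips and more sequential disk access, which is the effect the quoted passage describes.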

Inverted Index

In computer science, an inverted index (also referred to as a postings list, postings file, or inverted file) is a database index storing a mapping from content, such as words or numbers, to its locations in a table, or in a document or a set of documents (named in contrast to a forward index, which maps from documents to content). The purpose of an inverted index is to allow fast full-text searches, at a cost of increased processing when a document is added to the database. The inverted file may be the database file itself, rather than its index. It is the most popular data structure used in document retrieval systems, used on a large scale for example in search engines. Additionally, several significant general-purpose mainframe-based database management systems have used inverted list architectures, including ADABAS, DATACOM/DB, and Model 204. There are two main variants of inverted indexes: A record-level inverted index (or inverted file index or just inverted file) contains ...
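A minimal Python sketch of the mapping from words to documents follows. The function names and sample documents are illustrative assumptions, and tokenization is reduced to lowercasing and splitting on whitespace.

```python
from collections import defaultdict


def build_inverted_index(documents):
    """Map each word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def search(index, *words):
    """Return ids of documents containing every one of the given words."""
    postings = [index.get(w.lower(), set()) for w in words]
    return set.intersection(*postings) if postings else set()


documents = {
    1: "it is what it is",
    2: "what is it",
    3: "it is a banana",
}
index = build_inverted_index(documents)
print(search(index, "what", "is"))
print(search(index, "banana"))
```

Building the index costs extra work whenever a document is added, while each lookup becomes a dictionary access plus a set intersection instead of a scan over every document.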

Database Index

A database index is a data structure that improves the speed of data retrieval operations on a database table at the cost of additional writes and storage space to maintain the index data structure. Indexes are used to quickly locate data without having to search every row in a database table every time a database table is accessed. Indexes can be created using one or more columns of a database table, providing the basis for both rapid random lookups and efficient access of ordered records. An index is a copy of selected columns of data, from a table, that is designed to enable very efficient search. An index normally includes a "key" or direct link to the original row of data from which it was copied, to allow the complete row to be retrieved efficiently. Some databases extend the power of indexing by letting developers create indexes on column values that have been transformed by functions or expressions. For example, an index could be created on upper(last_name), which wou ...
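An index on an expression such as upper(last_name) can be tried with Python's built-in `sqlite3` module, assuming the bundled SQLite is version 3.9.0 or later (the first release to support indexes on expressions). The `people` table, the index name, and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (id INTEGER PRIMARY KEY, last_name TEXT)")
conn.executemany(
    "INSERT INTO people (last_name) VALUES (?)",
    [("Smith",), ("smith",), ("Jones",)],
)
# Index the transformed value rather than the raw column.
conn.execute(
    "CREATE INDEX idx_people_upper_last_name ON people (upper(last_name))"
)


def find_by_last_name(connection, name):
    """Case-insensitive lookup written to match the indexed expression."""
    rows = connection.execute(
        "SELECT id, last_name FROM people "
        "WHERE upper(last_name) = upper(?) ORDER BY id",
        (name,),
    )
    return rows.fetchall()


matches = find_by_last_name(conn, "SMITH")
print(matches)
```

The query repeats the indexed expression in its WHERE clause, which is what lets the database consider the index; running `EXPLAIN QUERY PLAN` on the same statement shows whether it was actually chosen.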