Biostatistics (also known as biometry) is a branch of statistics that applies statistical methods to a wide range of topics in biology. It encompasses the design of biological experiments, the collection and analysis of data from those experiments and the interpretation of the results.

History

Biostatistics and genetics

Biostatistical modeling forms an important part of numerous modern biological theories.

Genetics studies have used statistical concepts since their beginning to understand observed experimental results. Some geneticists even contributed statistical advances by developing new methods and tools.

Gregor Mendel started genetics studies by investigating genetic segregation patterns in families of peas, and he used statistics to explain the collected data. In the early 1900s, after the rediscovery of Mendel's work on Mendelian inheritance, there were gaps in understanding between genetics and evolutionary Darwinism.

Francis Galton tried to expand Mendel's discoveries with human data and proposed a different model in which fractions of heredity came from each ancestor, composing an infinite series. He called this the "Law of Ancestral Heredity". His ideas were strongly disputed by William Bateson, who followed Mendel's conclusion that genetic inheritance came exclusively from the parents, half from each of them. This led to a vigorous debate between the biometricians, who supported Galton's ideas, such as Raphael Weldon, Arthur Dukinfield Darbishire and Karl Pearson, and the Mendelians, who supported Bateson's (and Mendel's) ideas, such as Charles Davenport and Wilhelm Johannsen. Later, biometricians could not reproduce Galton's conclusions in different experiments, and Mendel's ideas prevailed. By the 1930s, models built on statistical reasoning had helped to resolve these differences and to produce the neo-Darwinian modern evolutionary synthesis.

Resolving these differences also made it possible to define the concept of population genetics and brought together genetics and evolution. The three leading figures in the establishment of population genetics and of this synthesis all relied on statistics and developed its use in biology.

* Ronald Fisher worked alongside statistician Betty Allan developing several basic statistical methods in support of his work studying the crop experiments at Rothamsted Research, published in Fisher's books ''Statistical Methods for Research Workers'' (1925) and ''The Genetical Theory of Natural Selection'' (1930), as well as in Allan's scientific papers. Fisher went on to make many contributions to genetics and statistics, including ANOVA, p-value concepts, Fisher's exact test and Fisher's equation for population dynamics. He is credited with the sentence "Natural selection is a mechanism for generating an exceedingly high degree of improbability".

* Sewall G. Wright developed ''F''-statistics and methods of computing them, and defined the inbreeding coefficient.

* J. B. S. Haldane's book, ''The Causes of Evolution'', reestablished natural selection as the premier mechanism of evolution by explaining it in terms of the mathematical consequences of Mendelian genetics. He also developed the theory of primordial soup.

These and other biostatisticians, mathematical biologists, and statistically inclined geneticists helped bring together evolutionary biology and genetics into a consistent, coherent whole that could begin to be quantitatively modeled.

In parallel with this overall development, the pioneering work of D'Arcy Thompson in ''On Growth and Form'' also helped to add quantitative discipline to biological study.

Despite the fundamental importance and frequent necessity of statistical reasoning, there may nonetheless have been a tendency among biologists to distrust or deprecate results which are not qualitatively apparent. One anecdote describes Thomas Hunt Morgan banning the Friden calculator from his department at Caltech, saying "Well, I am like a guy who is prospecting for gold along the banks of the Sacramento River in 1849. With a little intelligence, I can reach down and pick up big nuggets of gold. And as long as I can do that, I'm not going to let any people in my department waste scarce resources in placer mining."

Research planning

Any research in the life sciences is undertaken to answer a scientific question. To answer this question with high certainty, we need accurate results. A correct definition of the main hypothesis and of the research plan reduces errors when making decisions about a phenomenon. The research plan might include the research question, the hypothesis to be tested, the experimental design, data collection methods, data analysis perspectives and the costs involved. It is essential to carry out the study according to the three basic principles of experimental statistics: randomization, replication, and local control.

Research question

The research question defines the objective of a study. The research is guided by the question, so the question needs to be concise while focusing on interesting and novel topics that may improve science and knowledge in the field. An exhaustive literature review might be necessary to decide how to ask the scientific question, so that the research can add value for the scientific community.

Hypothesis definition

Once the aim of the study is defined, the possible answers to the research question can be proposed, transforming this question into a hypothesis. The main proposition is called the null hypothesis ($H_0$) and is usually based on established knowledge about the topic or an obvious occurrence of the phenomenon, supported by a thorough literature review. It can be seen as the standard expected answer for the data in the situation under test. In general, $H_0$ assumes no association between treatments. The alternative hypothesis, on the other hand, is the negation of $H_0$: it assumes some degree of association between the treatment and the outcome. Both hypotheses are grounded in the research question and its expected and unexpected answers.

As an example, consider groups of similar animals (mice, for example) under two different diet systems. The research question would be: which diet is better? In this case, $H_0$ would be that there is no difference between the two diets in mouse metabolism ($H_0: \mu_1 = \mu_2$, where $\mu_1$ and $\mu_2$ are the mean metabolic responses under the first and second diet), and the alternative hypothesis would be that the diets have different effects on the animals' metabolism ($H_1: \mu_1 \neq \mu_2$).
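The diet example can be made concrete with a small sketch. It assumes hypothetical metabolic measurements and uses a two-sided permutation test on the difference in means, chosen here only because it needs nothing beyond Python's standard library; a two-sample t-test is the more usual choice for this design. Strictly, the permutation test treats $H_0$ as "the diet labels are exchangeable", which implies $\mu_1 = \mu_2$. The helper `permutation_test` is hypothetical, not part of any library.

```python
import random
from statistics import mean

def permutation_test(group1, group2, n_permutations=5000, seed=0):
    """Two-sided permutation test of H0: mu1 == mu2 using the difference in means.

    Under H0 the diet labels are exchangeable, so the pooled observations are
    shuffled and we count how often a shuffled difference is at least as
    extreme (in absolute value) as the observed one.
    """
    rng = random.Random(seed)
    observed = mean(group1) - mean(group2)
    pooled = list(group1) + list(group2)
    n1 = len(group1)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n1]) - mean(pooled[n1:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Hypothetical metabolic measurements (arbitrary units) for mice on two diets.
diet_1 = [10.1, 9.8, 10.4, 10.0, 9.9, 10.3]
diet_2 = [11.2, 11.0, 11.5, 10.9, 11.3, 11.1]

diff, p = permutation_test(diet_1, diet_2)
print(f"difference in means = {diff:.2f}, p = {p:.4f}")
```

With these invented values every diet 1 measurement is lower than every diet 2 measurement, so very few relabelings are as extreme as the observed split and the p-value is small.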

The hypothesis is defined by the researcher according to their interest in answering the main question. In addition, there can be more than one alternative hypothesis: an alternative can state not only that the parameters differ but also the direction of the difference (''i.e.'' higher or lower, as in $H_1: \mu_1 > \mu_2$ or $H_1: \mu_1 < \mu_2$).

Sampling

Usually, a study aims to understand the effect of a phenomenon on a population. In biology, a population is defined as all the individuals of a given species in a specific area at a given time. In biostatistics, this concept is extended to a variety of collections that can be studied: a population is not only the individuals, but can also be the totality of one specific component of their organisms, such as the whole genome, all the sperm cells in animals, or the total leaf area of a plant.

It is usually not possible to take measurements from all the elements of a population. Because of that, the sampling process is very important for statistical inference.

Sampling is defined as randomly obtaining a representative part of the entire population in order to make subsequent inferences about that population. The sample should therefore capture as much of the variability across the population as possible.
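As a minimal sketch of simple random sampling (every subset of size n equally likely to be drawn), Python's `random.sample` can pick the units to be measured. The population of plant identifiers and the helper name `simple_random_sample` are hypothetical; more elaborate schemes such as stratified or cluster sampling are not shown.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Draw n distinct units; every subset of size n has the same probability."""
    rng = random.Random(seed)
    return rng.sample(population, n)

# Hypothetical sampling frame: identifiers for 500 plants in a field.
plants = [f"plant_{i:03d}" for i in range(500)]
sample = simple_random_sample(plants, 20, seed=42)
print(sample[:5])
```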

The sample size is determined by several factors, ranging from the scope of the research to the resources available. In clinical research, the trial type, such as non-inferiority, equivalence or superiority, is key in determining the sample size.
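For a superiority-type comparison of two means, a common normal-approximation formula for the number of units per group is $n = 2(z_{1-\alpha/2} + z_{1-\beta})^2\sigma^2/\delta^2$, where $\delta$ is the smallest difference worth detecting and $\sigma$ the (assumed common and known) standard deviation. The sketch below implements that formula with `statistics.NormalDist`; the function name and input values are illustrative assumptions, and non-inferiority or equivalence trials use different formulas that account for a margin.

```python
import math
from statistics import NormalDist

def n_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate units per group for a two-sided test comparing two means."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = std_normal.inv_cdf(power)           # quantile for the desired power
    n = 2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / delta ** 2
    return math.ceil(n)                          # round up to whole units

print(n_per_group(delta=5, sigma=10))
```

For a difference of half a standard deviation (δ = 5, σ = 10), α = 0.05 and 80% power this gives 63 units per group; halving δ roughly quadruples the requirement.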

Experimental design

Experimental designs put the basic principles of experimental statistics into practice. There are three basic experimental designs for randomly allocating treatments to all plots of the experiment: the completely randomized design, the randomized block design, and factorial designs. Treatments can be arranged in many ways within the experiment. In agriculture, the correct experimental design is the root of a good study, and the arrangement of treatments within the study is essential because the environment strongly affects the plots (plants, livestock, microorganisms). These main arrangements can be found in the literature under the names "lattices", "incomplete blocks", "split plot", "augmented blocks", and many others. All of the designs might include control plots, determined by the researcher, to provide an error estimate during inference.
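The randomization step of the first two designs can be sketched as follows. In the completely randomized design every replicate of every treatment is shuffled across all plots; in the randomized block design (shown here in its complete form, with each treatment once per block) the shuffle is repeated independently inside each block, which is how local control enters. Treatment labels, plot numbering and function names are hypothetical; factorial designs concern how treatments are built from factor combinations and can be laid out with either scheme.

```python
import random

def completely_randomized(treatments, replicates, seed=None):
    """Shuffle all replicates of all treatments across the plots of the experiment."""
    rng = random.Random(seed)
    layout = [t for t in treatments for _ in range(replicates)]
    rng.shuffle(layout)
    return layout  # layout[i] is the treatment applied to plot i

def randomized_blocks(treatments, n_blocks, seed=None):
    """Randomize treatment order independently within each block (local control)."""
    rng = random.Random(seed)
    blocks = []
    for _ in range(n_blocks):
        order = list(treatments)
        rng.shuffle(order)
        blocks.append(order)
    return blocks

treatments = ["A", "B", "C", "D"]
print(completely_randomized(treatments, replicates=3, seed=1))
for i, block in enumerate(randomized_blocks(treatments, n_blocks=3, seed=1), start=1):
    print(f"block {i}: {block}")
```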

In clinical studies, the samples are usually smaller than in other biological studies, and in most cases the environmental effect can be controlled or measured. It is common to use randomized controlled clinical trials, whose results are usually compared with observational study designs such as case–control or cohort studies.

Data collection

Data collection methods must be considered during research planning, because they strongly influence the sample size and experimental design.

Data collection varies according to the type of data. For qualitative data, collection can be done with structured questionnaires or by observation, for example recording the presence or intensity of a disease and using score criteria to categorize levels of occurrence. For quantitative data, collection is done by measuring numerical information with instruments.

In agriculture and biology studies, yield data and its components can be obtained by metric measurements. However, pest and disease injuries in plants are assessed by observation, using score scales for levels of damage. Especially in genetic studies, modern methods for data collection in the field and laboratory should be considered, such as high-throughput platforms for phenotyping and genotyping. These tools allow larger experiments and make it possible to evaluate many plots in less time than a purely human-based method of data collection.

Finally, all collected data of interest must be stored in an organized data frame for further analysis.

Analysis and data interpretation

Descriptive tools

Data can be represented through tables or graphical representations, such as line charts, bar charts, histograms and scatter plots. Measures of central tendency and variability can also be very useful to give an overview of the data. Some examples follow.

Frequency tables

One type of table is the frequency table, which consists of data arranged in rows and columns, where the frequency is the number of occurrences or repetitions of a value. Frequency can be:

* Absolute: the number of times that a given value appears;
* Relative: the absolute frequency divided by the total number of observations.

In the next example, we have the number of genes in ten operons of the same organism.
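The operon table itself is not reproduced here. As an illustration only, the sketch below builds absolute and relative frequencies with `collections.Counter` from invented gene counts for ten operons (these values are hypothetical, not the original example's data).

```python
from collections import Counter

# Hypothetical number of genes in each of ten operons (illustrative values only).
genes_per_operon = [2, 3, 3, 4, 2, 5, 3, 2, 4, 3]

absolute = Counter(genes_per_operon)  # value -> number of occurrences
total = len(genes_per_operon)

print("genes  absolute  relative")
for value in sorted(absolute):
    count = absolute[value]
    # Relative frequency = absolute frequency / total number of observations.
    print(f"{value:>5}  {count:>8}  {count / total:>8.2f}")
```

The relative frequencies always sum to 1, which is a quick check that no observation was lost.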

Line graph

Line graphs represent the variation of a value over another metric, such as time. In general, values are represented on the vertical axis, while time is represented on the horizontal axis.

Bar chart

A bar chart is a graph that shows categorical data as bars with heights (vertical bars) or lengths (horizontal bars) proportional to the values they represent. Bar charts provide an image that could also be represented in a tabular format.

In the bar chart example, we have the birth rate in Brazil for the month of December from 2010 to 2016. The sharp fall in December 2016 reflects the effect of the Zika virus outbreak on the birth rate in Brazil.

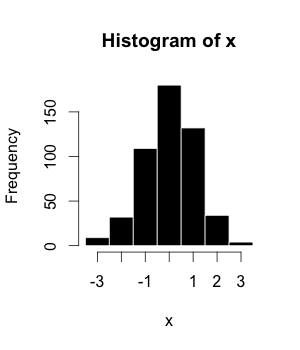

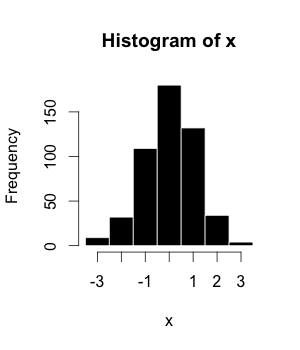

Histograms

The histogram (or frequency distribution) is a graphical representation of a dataset tabulated and divided into uniform or non-uniform classes. It was first introduced by Karl Pearson.
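Drawing a histogram starts with tabulating the data into classes. A minimal sketch with uniform (equal-width) classes is shown below; the leaf lengths are invented and the helper `histogram_counts` is hypothetical. Non-uniform classes would need explicit class limits, and the chart itself would typically be drawn with a plotting library.

```python
def histogram_counts(data, n_bins):
    """Tabulate data into n_bins equal-width classes covering [min(data), max(data)]."""
    lo, hi = min(data), max(data)
    if hi == lo:
        raise ValueError("all values are equal; class width would be zero")
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for x in data:
        # Class index of x; the maximum would land on n_bins,
        # so it is assigned to the last class.
        idx = min(int((x - lo) / width), n_bins - 1)
        counts[idx] += 1
    edges = [lo + i * width for i in range(n_bins + 1)]
    return edges, counts

# Hypothetical leaf lengths (cm).
leaf_lengths = [4.2, 5.1, 5.8, 6.0, 6.3, 6.7, 7.1, 7.4, 8.0, 9.5]
edges, counts = histogram_counts(leaf_lengths, n_bins=4)
for left, right, c in zip(edges, edges[1:], counts):
    print(f"{left:.2f} to {right:.2f}: {'#' * c}")
```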

Scatter plot

A scatter plot is a mathematical diagram that uses Cartesian coordinates to display values of a dataset. A scatter plot shows the data as a set of points, where the value of one variable determines each point's position on the horizontal axis and the value of another variable determines its position on the vertical axis. Scatter plots are also called scatter graphs, scatter charts, scattergrams, or scatter diagrams.

Mean

The arithmetic mean is the sum of a collection of values ($x_1 + x_2 + \cdots + x_n$) divided by the number of items in this collection ($n$):

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i = \frac{x_1 + x_2 + \cdots + x_n}{n}$$

Median

The median is the value in the middle of a dataset once the values are sorted; when the number of values is even, it is usually taken as the mean of the two middle values.

Mode

The mode is the value of a set of data that appears most often.
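Python's standard `statistics` module provides all three measures. The sketch below applies them to an invented sample of seeds per pod and also computes the mean directly as the sum of the values divided by their number. It uses `multimode` because a dataset can have more than one most frequent value.

```python
from statistics import mean, median, multimode

# Hypothetical sample: number of seeds in each of nine pods.
seeds = [4, 6, 5, 6, 7, 3, 6, 5, 8]

manual_mean = sum(seeds) / len(seeds)  # sum of values divided by the number of values
print("mean:", mean(seeds), "(manual:", manual_mean, ")")
print("median:", median(seeds))        # middle value after sorting
print("mode(s):", multimode(seeds))    # every most frequent value, as a list
```

With an even number of values, `median` averages the two middle values; for example `median([1, 2, 3, 4])` returns 2.5.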

Box plot

A box plot is a method for graphically depicting groups of numerical data through their quartiles. The box spans the interquartile range (IQR), from the first quartile (25th percentile) to the third quartile (75th percentile), so it contains the middle 50% of the data, and a line inside the box marks the median. The lines (whiskers) extending from the box reach toward the minimum and maximum values, and outliers may be plotted as individual circles beyond them.
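The numbers behind a box plot can be computed before any drawing. The sketch below uses `statistics.quantiles`, whose default 'exclusive' method is only one of several quartile conventions (other software may report slightly different quartiles), and flags outliers with Tukey's 1.5 × IQR fences, a common convention that the text above does not itself specify. The data and the helper name are hypothetical.

```python
from statistics import quantiles

def box_plot_summary(data, k=1.5):
    """Quartiles, IQR, whisker ends and outliers using Tukey's k * IQR fences."""
    q1, q2, q3 = quantiles(data, n=4)  # default method='exclusive'
    iqr = q3 - q1
    low_fence, high_fence = q1 - k * iqr, q3 + k * iqr
    inside = [x for x in data if low_fence <= x <= high_fence]
    outliers = [x for x in data if x < low_fence or x > high_fence]
    return {
        "q1": q1, "median": q2, "q3": q3, "iqr": iqr,
        "whisker_low": min(inside), "whisker_high": max(inside),
        "outliers": outliers,
    }

# Hypothetical measurements with one unusually large value.
values = [12, 14, 14, 15, 16, 17, 18, 19, 21, 40]
print(box_plot_summary(values))
```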

Correlation coefficients

Although a correlation between two different kinds of data can be suggested by graphs such as a scatter plot, it is necessary to confirm it with numerical information. For this reason, correlation coefficients are required. They provide a numerical value that reflects the strength of an association.

Pearson correlation coefficient

The Pearson correlation coefficient is a measure of linear association between two variables, X and Y. This coefficient, usually represented by ''ρ'' (rho) for the population and ''r'' for the sample, takes values between −1 and 1, where ''ρ'' = 1 represents a perfect positive correlation, ''ρ'' = −1 represents a perfect negative correlation, and ''ρ'' = 0 indicates no linear correlation.
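The sample coefficient is $r = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_i (x_i - \bar{x})^2}\,\sqrt{\sum_i (y_i - \bar{y})^2}}$. A direct implementation is sketched below with invented paired measurements; Python 3.10 and later also provide `statistics.correlation`, which computes the same Pearson coefficient.

```python
import math

def pearson_r(x, y):
    """Sample Pearson correlation coefficient r between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical paired measurements: plant height (cm) and leaf count.
height = [10, 12, 15, 18, 20]
leaves = [4, 5, 7, 8, 10]
print(round(pearson_r(height, leaves), 3))
```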

Inferential statistics

Inferential statistics is used to make inferences about an unknown population, by estimation and/or hypothesis testing. In other words, it is desirable to obtain parameters that describe the population of interest, but since the data are limited, it is necessary to use a representative sample in order to estimate them. With that, it is possible to test previously defined hypotheses and apply the conclusions to the entire population. The standard error of the mean, which measures how much the sample mean is expected to vary from one sample to another, is crucial for making inferences.
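The standard error of the mean is usually estimated as $s/\sqrt{n}$, where $s$ is the sample standard deviation and $n$ the sample size. A minimal sketch with hypothetical body weights follows; because of the $\sqrt{n}$ in the denominator, the standard error shrinks as the sample grows.

```python
import math
from statistics import mean, stdev

def standard_error_of_mean(sample):
    """SEM = s / sqrt(n), with s the sample standard deviation (n - 1 denominator)."""
    return stdev(sample) / math.sqrt(len(sample))

weights = [20.1, 21.4, 19.8, 22.0, 20.7, 21.1]  # hypothetical mouse body weights (g)
print(f"mean = {mean(weights):.2f} g, SEM = {standard_error_of_mean(weights):.3f} g")
```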

* Hypothesis testing

Hypothesis testing is essential for making inferences about populations in order to answer research questions, as set out in the "Research planning" section. Authors have defined four steps:

# ''The hypothesis to be tested'': as stated earlier, we have to define a null hypothesis ($H_0$), which is going to be tested, and an alternative hypothesis. Both must be defined before the experiment is carried out.
# ''Significance level and decision rule'': a decision rule depends on the level of significance, in other words the acceptable error rate (α). It is easier to think of it as a ''critical value'' that determines statistical significance when a test statistic is compared with it. So α also has to be defined before the experiment.
# ''Experiment and statistical analysis'': this is when the experiment is actually carried out following the appropriate experimental design, the data are collected and the most suitable statistical tests are evaluated.
# ''Inference'': the null hypothesis is rejected or not rejected based on the evidence provided by comparing the p-value with α. Note that failure to reject $H_0$ just means that there is not enough evidence to support its rejection, not that this hypothesis is true.
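The four steps can be sketched with the simplest test that Python's standard library supports directly: a two-sided one-sample z-test of $H_0: \mu = \mu_0$, which assumes the population standard deviation σ is known (with an estimated σ a t-test would be used instead). The sample, μ0 = 100 and σ = 15 are hypothetical.

```python
import math
from statistics import NormalDist, mean

def z_test_two_sided(sample, mu0, sigma, alpha=0.05):
    """Two-sided one-sample z-test of H0: mu == mu0 against H1: mu != mu0 (sigma known)."""
    std_normal = NormalDist()
    # Step 2: alpha, and hence the critical value, is fixed before analysing the data.
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Step 3: test statistic computed from the collected sample.
    z = (mean(sample) - mu0) / (sigma / math.sqrt(len(sample)))
    p_value = 2 * (1 - std_normal.cdf(abs(z)))
    # Step 4: rejecting when p < alpha is equivalent to rejecting when |z| > z_crit.
    reject = p_value < alpha
    return z, z_crit, p_value, reject

# Step 1 (hypothetical): H0: mu = 100 versus H1: mu != 100, with sigma = 15.
measurements = [112, 108, 115, 104, 110, 107, 111, 109, 106]
z, z_crit, p, reject = z_test_two_sided(measurements, mu0=100, sigma=15)
print(f"z = {z:.3f}, critical value = {z_crit:.3f}, p = {p:.4f}, reject H0: {reject}")
```

With these invented numbers the p-value lies slightly above 0.05, so $H_0$ is not rejected; as the text stresses, this is not evidence that $H_0$ is true.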

* Confidence intervals

A confidence interval is a range of values, computed from the sample, that is constructed so that it contains the true value of the parameter with a given probability (the confidence level) over repeated sampling. The first step is to compute the best unbiased estimate of the population parameter. For a mean, the upper limit of the interval is obtained by adding to this estimate the product of the standard error of the mean and the critical value associated with the confidence level (about 1.96 for 95% confidence under a normal approximation). The lower limit is calculated in the same way, but subtracting instead of adding.
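A minimal sketch of the interval for a mean, $\bar{x} \pm z_{1-\alpha/2} \cdot \mathrm{SE}$, using the normal critical value from `statistics.NormalDist`. For small samples the critical value should come from Student's t distribution with n − 1 degrees of freedom, which gives a wider interval; the standard library has no t quantile function, so that refinement is left out. The data are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Large-sample (normal approximation) confidence interval for a population mean."""
    estimate = mean(sample)                                # unbiased estimate of the mean
    sem = stdev(sample) / math.sqrt(len(sample))           # standard error of the mean
    crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95 %
    return estimate - crit * sem, estimate + crit * sem

# Hypothetical fruit masses (g).
masses = [152, 148, 160, 155, 149, 158, 151, 157, 150, 154, 153, 156]
low, high = mean_confidence_interval(masses)
print(f"95% CI for the mean: {low:.1f} g to {high:.1f} g")
```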

Statistical considerations

Power and statistical error

When testing a hypothesis, two types of statistical error are possible, the type I error and the type II error:

* The type I error, or false positive, is the incorrect rejection of a true null hypothesis.
* The type II error, or false negative, is the failure to reject a false null hypothesis.

The significance level, denoted by α, is the type I error rate and should be chosen before performing the test. The type II error rate is denoted by β, and the statistical power of the test is 1 − β.
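The relationship between α, β and power can be made concrete for a simple case. The sketch below assumes a two-sided one-sample z-test with known standard deviation σ. This test and the function name `z_test_power` are illustrative choices and do not come from the text above. With no true effect the rejection probability equals α, and power grows with the effect size and the sample size n.

```python
from statistics import NormalDist


def z_test_power(effect, sigma, n, alpha=0.05):
    """Power (1 - beta) of a two-sided one-sample z-test with known sigma."""
    std_normal = NormalDist()
    # Critical value that leaves alpha/2 in each tail under H0
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Mean of the test statistic when the true effect is `effect`
    shift = effect * n ** 0.5 / sigma
    # Probability that the statistic falls in either rejection region
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


power = z_test_power(effect=0.5, sigma=1.0, n=32)
beta = 1 - power  # type II error rate
print(f"power = {power:.3f}, beta = {beta:.3f}")
```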

p-value

The p-value is the probability of obtaining results at least as extreme as those observed, assuming the null hypothesis (H0) is true. It is also called the calculated probability. The p-value is often confused with the significance level (α), but α is a threshold fixed in advance for declaring results significant. If p is less than α, the null hypothesis (H0) is rejected.
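As an illustration, the following sketch computes a two-sided p-value and applies the decision rule. It assumes the test statistic z follows a standard normal distribution under H0. The function names are hypothetical.

```python
from statistics import NormalDist


def two_sided_p_value(z):
    """P(|Z| >= |z|) for a standard normal test statistic under H0."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


def reject_null(z, alpha=0.05):
    """Return the p-value and whether H0 is rejected at level alpha."""
    p = two_sided_p_value(z)
    return p, p < alpha


for z in (1.0, 1.96, 2.5):
    p, rejected = reject_null(z)
    print(f"z = {z}: p = {p:.4f}, reject H0: {rejected}")
```

Note that α enters only in the final comparison. The p-value itself depends only on the data and the null distribution.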

Multiple testing

When many hypotheses are tested simultaneously, the probability of at least one false positive (the familywise error rate) increases, and a strategy is needed to account for this. This is commonly achieved by using a more stringent threshold to reject null hypotheses. The Bonferroni correction defines an acceptable global significance level, denoted by α*, and each test is individually compared with a value of α = α*/m. This ensures that the familywise error rate over all m tests is less than or equal to α*. When m is large, the Bonferroni correction may be overly conservative. An alternative to the Bonferroni correction is to control the false discovery rate (FDR), the expected proportion of rejected null hypotheses (the so-called discoveries) that are false (incorrect rejections). FDR-controlling procedures, such as that of Benjamini and Hochberg, ensure that for independent tests the false discovery rate is at most a chosen level q*. FDR control is therefore less conservative than the Bonferroni correction and has more power, at the cost of more false positives.
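The two strategies can be compared on a list of p-values. The FDR side of the sketch below uses the Benjamini-Hochberg step-up procedure, one standard FDR-controlling method. The function names and the p-values are made up for illustration.

```python
def bonferroni(p_values, alpha_star=0.05):
    """Reject H0_i when p_i <= alpha*/m (controls the familywise error rate)."""
    m = len(p_values)
    return [p <= alpha_star / m for p in p_values]


def benjamini_hochberg(p_values, q_star=0.05):
    """Benjamini-Hochberg step-up procedure (controls the FDR at q*)."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k with p_(k) <= k * q* / m
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * q_star / m:
            k_max = rank
    # Reject the k_max hypotheses with the smallest p-values
    rejected = [False] * m
    for i in order[:k_max]:
        rejected[i] = True
    return rejected


p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(sum(bonferroni(p)), "Bonferroni rejections")
print(sum(benjamini_hochberg(p)), "Benjamini-Hochberg rejections")
```

With m = 10 and α* = q* = 0.05, Bonferroni compares every p-value with 0.005 and rejects only the first hypothesis. Benjamini-Hochberg uses rank-dependent thresholds (0.005, 0.010, ...) and rejects the first two, which illustrates its extra power.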

Mis-specification and robustness checks

The main hypothesis being tested (e.g., no association between treatments and outcomes) is often accompanied by other technical assumptions (e.g., about the form of the probability distribution of the outcomes) that are also part of the null hypothesis. When the technical assumptions are violated in practice, the null may be rejected frequently even if the main hypothesis is true. Such rejections are said to be due to model mis-specification. Checking whether the outcome of a statistical test changes when the technical assumptions are slightly altered (so-called robustness checks) is the main way of combating mis-specification.

Model selection criteria

Model selection criteria choose, among candidate models, the one that best approximates the true model. Akaike's Information Criterion (AIC) is an example of an asymptotically efficient criterion, whereas the Bayesian Information Criterion (BIC) is a consistent criterion.
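Both criteria penalize the maximized log-likelihood ln L by the number of estimated parameters k: AIC = 2k − 2 ln L and BIC = k ln(n) − 2 ln L, with lower values preferred. The sketch below assumes Gaussian models whose maximized log-likelihood is computed from the residual sum of squares (RSS). The two RSS values are hypothetical fits, not results from real data.

```python
import math


def gaussian_log_likelihood(rss, n):
    """Maximized log-likelihood of a Gaussian model with MLE variance rss / n."""
    return -0.5 * n * (math.log(2 * math.pi * rss / n) + 1)


def aic(log_lik, k):
    """Akaike's Information Criterion: 2k - 2 ln L."""
    return 2 * k - 2 * log_lik


def bic(log_lik, k, n):
    """Bayesian Information Criterion: k ln(n) - 2 ln L."""
    return k * math.log(n) - 2 * log_lik


n = 50
# Hypothetical fits: the larger model reduces the RSS only slightly
simple = gaussian_log_likelihood(rss=10.0, n=n)
complex_ = gaussian_log_likelihood(rss=9.9, n=n)
print("AIC:", aic(simple, k=2), aic(complex_, k=5))
print("BIC:", bic(simple, k=2, n=n), bic(complex_, k=5, n=n))
```

Here both criteria favour the simpler model. Because ln(n) > 2 once n ≥ 8, BIC penalizes extra parameters more heavily than AIC.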

Developments and big data

Recent developments have had a large impact on biostatistics. Two important changes have been the ability to collect data on a high-throughput scale and the ability to perform much more complex analyses using computational techniques. These changes stem from developments in areas such as sequencing technologies, bioinformatics and machine learning (see Machine learning in bioinformatics).

Use in high-throughput data

New biomedical technologies like microarrays, next-generation sequencers (for genomics) and mass spectrometry (for proteomics) generate enormous amounts of data, allowing many tests to be performed simultaneously. Careful analysis with biostatistical methods is required to separate the signal from the noise. For example, a microarray could be used to measure many thousands of genes simultaneously, determining which of them have different expression in diseased cells compared to normal cells. However, only a fraction of genes will be differentially expressed.

Multicollinearity often occurs in high-throughput biostatistical settings. Because predictors (such as gene expression levels) are highly intercorrelated, the information in one predictor may be contained in another. It could be that only 5% of the predictors are responsible for 90% of the variability of the response. In such a case, one could apply the biostatistical technique of dimension reduction (for example, via principal component analysis).

Classical statistical techniques such as linear or logistic regression and linear discriminant analysis do not work well for high-dimensional data (i.e. when the number of observations n is smaller than the number of features or predictors p: n < p). In fact, one can obtain quite high R² values despite very low predictive power of the statistical model. These classical techniques (especially least squares linear regression) were developed for low-dimensional data (i.e. where the number of observations n is much larger than the number of predictors p: n >> p). In cases of high dimensionality, one should always use an independent validation test set and report the residual sum of squares (RSS) and R² of the validation set, not those of the training set.
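The danger of judging a model by its training fit can be shown without real data. The sketch below uses a deliberately overfitting model: a hypothetical lookup table that memorizes every training response and falls back to the training mean for unseen inputs. It then computes RSS and R² = 1 − RSS/TSS on both the training set and an independent validation set. The data and function names are invented for illustration.

```python
def rss_and_r2(y_true, y_pred):
    """Residual sum of squares and R^2 = 1 - RSS/TSS."""
    mean_y = sum(y_true) / len(y_true)
    rss = sum((y - f) ** 2 for y, f in zip(y_true, y_pred))
    tss = sum((y - mean_y) ** 2 for y in y_true)
    return rss, 1 - rss / tss


def fit_memorizer(x_train, y_train):
    """Hypothetical overfitting model: memorize each training point exactly."""
    table = dict(zip(x_train, y_train))
    fallback = sum(y_train) / len(y_train)
    return lambda x: table.get(x, fallback)


x_train, y_train = [1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.1]
x_valid, y_valid = [6, 7, 8], [12.0, 14.2, 15.9]

model = fit_memorizer(x_train, y_train)
train_rss, train_r2 = rss_and_r2(y_train, [model(x) for x in x_train])
valid_rss, valid_r2 = rss_and_r2(y_valid, [model(x) for x in x_valid])
print(f"training R^2 = {train_r2:.2f}, validation R^2 = {valid_r2:.2f}")
```

The training R² is perfect while the validation R² is negative, which is why only the validation-set figures say anything about predictive power.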

Often, it is useful to pool information from multiple predictors together. For example, Gene Set Enrichment Analysis (GSEA) considers the perturbation of whole (functionally related) gene sets rather than of single genes. These gene sets might be known biochemical pathways or otherwise functionally related genes. The advantage of this approach is that it is more robust: it is more likely that a single gene is found to be falsely perturbed than it is that a whole pathway is falsely perturbed. Furthermore, one can integrate the accumulated knowledge about biochemical pathways (like the JAK-STAT signaling pathway) using this approach.
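The core idea of set-level scoring can be sketched with an unweighted, Kolmogorov-Smirnov-like running sum. Genes are walked through from the top of a ranked list, and the score steps up at each member of the gene set and down otherwise. The enrichment score is the maximum deviation from zero. This is a simplified sketch: the published GSEA method typically weights the steps by gene-level statistics and assesses significance by permutation, and both are omitted here. The gene names are hypothetical.

```python
def enrichment_score(ranked_genes, gene_set):
    """Unweighted running-sum enrichment score for one gene set.

    ranked_genes: genes ordered from most up- to most down-regulated.
    gene_set: set of genes belonging to the pathway of interest.
    """
    n = len(ranked_genes)
    n_hits = sum(1 for g in ranked_genes if g in gene_set)
    if n_hits == 0 or n_hits == n:
        raise ValueError("gene set must be a proper, non-empty subset of the ranking")
    hit_step = 1 / n_hits          # increment when a set member is encountered
    miss_step = 1 / (n - n_hits)   # decrement for every other gene
    running, best = 0.0, 0.0
    for gene in ranked_genes:
        running += hit_step if gene in gene_set else -miss_step
        # Keep the maximum deviation from zero (its sign gives the direction)
        if abs(running) > abs(best):
            best = running
    return best


ranking = ["GENE1", "GENE2", "GENE3", "GENE4", "GENE5", "GENE6"]
print(enrichment_score(ranking, {"GENE1", "GENE2"}))  # set at the top: 1.0
print(enrichment_score(ranking, {"GENE5", "GENE6"}))  # set at the bottom: -1.0
```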

Bioinformatics advances in databases, data mining, and biological interpretation

The development of biological databases enables the storage and management of biological data and makes them accessible to users around the world. They are useful for researchers depositing data, retrieving information and files (raw or processed) that originate from other experiments, or indexing scientific articles, as in PubMed. Another possibility is to search for a term of interest (a gene, a protein, a disease, an organism, and so on) and check all results related to this search. There are databases dedicated to SNPs (dbSNP), to the characterization of genes and their pathways (KEGG), and to the description of gene function classified by cellular component, molecular function and biological process (Gene Ontology).

In addition to databases that contain specific molecular information, there are broader ones that store information about an organism or group of organisms. An example of a database directed towards just one organism, but containing a large amount of data about it, is TAIR, the ''Arabidopsis thaliana'' genetic and molecular database. Phytozome, in turn, stores the assemblies and annotation files of dozens of plant genomes and also provides visualization and analysis tools. Moreover, some databases are interconnected for information exchange and sharing. A major initiative was the International Nucleotide Sequence Database Collaboration (INSDC), which relates data from DDBJ, EMBL-EBI, and NCBI.

The increasing size and complexity of molecular datasets has led to the use of powerful statistical methods based on computer science algorithms developed in the field of machine learning. Data mining and machine learning allow the detection of patterns in data with a complex structure, such as biological data, by using methods of supervised and unsupervised learning, regression, cluster detection and association rule mining, among others.

For example, self-organizing maps and ''k''-means are clustering algorithms, while neural networks and support vector machines are examples of common machine learning algorithms.
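To show how a clustering algorithm such as ''k''-means works, here is a minimal pure-Python sketch of Lloyd's algorithm on two-dimensional points. The points could be, for instance, expression levels of two genes across samples; the data are hypothetical. The initial centroids are passed in explicitly so the result is deterministic. Practical implementations usually try several random or k-means++ starts instead.

```python
import math


def k_means(points, initial_centroids, max_iter=100):
    """Lloyd's algorithm for k-means on 2-D points.

    Returns (labels, centroids). Initial centroids are supplied explicitly
    so the result is deterministic.
    """
    centroids = [tuple(c) for c in initial_centroids]
    labels = [None] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid
        new_labels = [
            min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points
        for j in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # leave an empty cluster's centroid where it is
                centroids[j] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return labels, centroids


points = [(1.0, 1.1), (0.9, 1.0), (1.2, 0.8), (5.0, 5.2), (5.1, 4.9), (4.8, 5.0)]
labels, centroids = k_means(points, initial_centroids=[(0.0, 0.0), (6.0, 6.0)])
print(labels, centroids)
```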

Collaborative work among molecular biologists, bioinformaticians, statisticians and computer scientists is important for carrying out an experiment correctly, from planning, through data generation and analysis, to the biological interpretation of the results.

Use of computationally intensive methods

The advent of modern computer technology and relatively cheap computing resources has enabled computer-intensive biostatistical methods such as bootstrapping and other re-sampling methods.
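As an example of such a method, the sketch below computes a percentile bootstrap confidence interval. It resamples the data with replacement many times, recomputes the statistic on each resample, and reads the interval off the empirical quantiles. The percentile method is only one of several bootstrap interval methods. The function name and the measurements are hypothetical, and the random generator is seeded so the result is reproducible.

```python
import random
import statistics


def bootstrap_ci(sample, statistic=statistics.mean, n_resamples=2000,
                 confidence=0.95, seed=0):
    """Percentile bootstrap confidence interval for `statistic`."""
    rng = random.Random(seed)  # seeded for reproducibility
    n = len(sample)
    # Recompute the statistic on resamples drawn with replacement
    replicates = sorted(
        statistic(rng.choices(sample, k=n)) for _ in range(n_resamples)
    )
    # Read the interval off the empirical quantiles of the replicates
    alpha = 1 - confidence
    lower_idx = round(alpha / 2 * n_resamples)
    upper_idx = round((1 - alpha / 2) * n_resamples) - 1
    return replicates[lower_idx], replicates[upper_idx]


data = [2.3, 3.1, 2.8, 4.0, 3.6, 2.9, 3.3, 5.2, 2.7, 3.0]
low, high = bootstrap_ci(data)
print(f"95% bootstrap CI for the mean: ({low:.2f}, {high:.2f})")
```

Passing `statistic=statistics.median` gives an interval for the median with no extra derivation. This flexibility is the main appeal of the approach.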

In recent years, random forests have gained popularity as a method for performing statistical classification. Random forest techniques generate a panel of decision trees. Decision trees have the advantage that they can be drawn and interpreted even with a basic understanding of mathematics and statistics. Random forests have thus been used in clinical decision support systems.

Applications

Public health

Biostatistics is applied in public health, including epidemiology, health services research, nutrition, environmental health, and health care policy and management. In these medical contexts, the design and analysis of clinical trials is important. One example is the assessment of the severity of a patient's condition together with a prognosis of the disease outcome.

With new technologies and genetic knowledge, biostatistics is now also used in systems medicine, a more personalized form of medicine. This involves integrating data from different sources, including conventional patient data, clinico-pathological parameters, molecular and genetic data, as well as data generated by additional new -omics technologies.

Quantitative genetics

Population genetics and statistical genetics study how to link variation in genotype with variation in phenotype. In other words, the aim is to discover the genetic basis of a measurable trait, a quantitative trait, that is under polygenic control. A genome region that is responsible for variation in a continuous trait is called a quantitative trait locus (QTL). The study of QTLs became feasible through the use of molecular markers and the measurement of traits in populations. Mapping them, however, requires a population obtained from an experimental cross, such as an F2 or recombinant inbred strains/lines (RILs). To scan a genome for QTL regions, a gene map based on linkage has to be built. Some of the best-known QTL mapping algorithms are Interval Mapping, Composite Interval Mapping, and Multiple Interval Mapping.
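Interval mapping fits a likelihood at positions along the linkage map between markers, which is beyond a short example. A simpler precursor, single-marker analysis, conveys the basic idea. It compares a model in which the trait mean depends on the marker genotype with a model that has a single mean. Under normally distributed residuals the evidence can be expressed as a LOD score, LOD = (n/2) log10(RSS0/RSS1). The sketch below is not any of the algorithms named above. The genotypes and trait values are hypothetical.

```python
import math
from collections import defaultdict


def single_marker_lod(genotypes, trait):
    """LOD score for association between one marker and a quantitative trait.

    Compares a model with one mean per genotype class (RSS1) against a
    model with a single overall mean (RSS0), assuming normal residuals.
    RSS1 must be non-zero.
    """
    n = len(trait)
    overall_mean = sum(trait) / n
    rss0 = sum((y - overall_mean) ** 2 for y in trait)

    # Group trait values by marker genotype
    groups = defaultdict(list)
    for g, y in zip(genotypes, trait):
        groups[g].append(y)
    rss1 = 0.0
    for values in groups.values():
        group_mean = sum(values) / len(values)
        rss1 += sum((y - group_mean) ** 2 for y in values)

    return (n / 2) * math.log10(rss0 / rss1)


# Hypothetical F2-style marker genotypes and trait measurements
genotypes = ["AA", "AB", "BB", "AA", "AB", "BB"]
trait = [10.2, 12.1, 14.0, 9.8, 11.9, 14.2]
print(f"LOD = {single_marker_lod(genotypes, trait):.2f}")
```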

However, QTL mapping resolution is limited by the amount of recombination assayed, which is a problem for species in which it is difficult to obtain large progenies. Furthermore, allele diversity is restricted to that present in the contrasting parents of the cross. This limits studies of allele diversity when a panel of individuals representing a natural population is available. For this reason, the genome-wide association study (GWAS) was proposed to identify QTLs based on linkage disequilibrium, the non-random association of alleles at different loci, here between trait-affecting variants and molecular markers. GWAS was made practical by the development of high-throughput SNP genotyping.

In animal and plant breeding, the use of markers in selection, mainly molecular ones, contributed to the development of marker-assisted selection. QTL mapping is limited by its resolution, and GWAS lacks power for rare variants of small effect that are also influenced by the environment. The concept of genomic selection (GS) therefore arose: it uses all molecular markers in selection and allows the performance of selection candidates to be predicted. The approach is to genotype and phenotype a training population and then develop a model that predicts the genomic estimated breeding values (GEBVs) of individuals in a population that has been genotyped but not phenotyped, called the testing population. Such a study can also include a validation population, following the concept of cross-validation. In this population, the actually measured phenotypes are compared with the predicted ones to check the accuracy of the model.
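One common way to summarize the comparison between predicted and measured values in the validation population is the Pearson correlation between predicted GEBVs and observed phenotypes, often called predictive ability. The sketch below shows only the bookkeeping around a genomic prediction model. It randomly partitions individuals into k folds for cross-validation and computes that correlation. The model itself is deliberately left out, and all names and numbers are illustrative.

```python
import math
import random


def k_fold_indices(n, k, seed=0):
    """Randomly partition individuals 0..n-1 into k roughly equal folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def predictive_ability(predicted, observed):
    """Pearson correlation between predicted GEBVs and observed phenotypes."""
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_o = sum(observed) / n
    cov = sum((p - mean_p) * (o - mean_o) for p, o in zip(predicted, observed))
    sd_p = math.sqrt(sum((p - mean_p) ** 2 for p in predicted))
    sd_o = math.sqrt(sum((o - mean_o) ** 2 for o in observed))
    return cov / (sd_p * sd_o)


folds = k_fold_indices(n=10, k=5)
for i, validation in enumerate(folds):
    # Train on every other fold, validate on fold i
    training = [j for m, fold in enumerate(folds) if m != i for j in fold]
    print(f"fold {i}: train on {len(training)}, validate on {sorted(validation)}")

# Hypothetical predicted GEBVs vs. measured phenotypes for one validation set
print(predictive_ability([1.2, 0.4, -0.3, 0.9], [1.0, 0.1, -0.5, 1.3]))
```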

In summary, some applications of quantitative genetics are:

* In agriculture, to improve crops (plant breeding) and livestock (animal breeding).

* In biomedical research, to help find candidate gene alleles that can cause or influence predisposition to diseases in human genetics.

Expression data

Studies of differential gene expression from RNA-Seq data, as from RT-qPCR and microarrays, require the comparison of conditions. The goal is to identify genes that show a significant change in abundance between conditions. Experiments should therefore be designed appropriately, with replicates for each condition/treatment, and with randomization and blocking when necessary. In RNA-Seq, expression is quantified from mapped reads that are summarized per genetic unit, such as the exons that make up a gene sequence. While microarray results can be approximated by a normal distribution, RNA-Seq count data are better described by other distributions. The first distribution used was the Poisson, but it underestimates the sampling error, leading to false positives. Currently, biological variation is taken into account by methods that estimate a dispersion parameter of a negative binomial distribution.
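The reason for the negative binomial can be seen from its variance. Under a common parameterization the variance is μ + φμ², whereas the Poisson forces the variance to equal the mean μ. The sketch below gives a crude method-of-moments estimate of the dispersion φ for a single gene from replicate counts. The counts are hypothetical. Real tools such as edgeR and DESeq2 use more elaborate estimators that share information across genes.

```python
import statistics


def nb_dispersion_mom(counts):
    """Method-of-moments NB dispersion phi, assuming Var = mu + phi * mu**2."""
    mu = statistics.mean(counts)
    var = statistics.variance(counts)  # sample variance (n - 1 denominator)
    # Under a Poisson model var == mu; any excess variance is attributed to phi
    return max(0.0, (var - mu) / mu ** 2)


# Hypothetical read counts for one gene across five biological replicates
print(nb_dispersion_mom([10, 30, 5, 55, 20]))  # overdispersed: phi > 0
print(nb_dispersion_mom([10, 11, 9, 10, 10]))  # no excess variance: phi = 0.0
```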

Generalized linear models are used to perform the tests for statistical significance. Because the number of genes is high, multiple testing correction has to be considered. Other examples of analyses of genomics data come from microarray or proteomics experiments, often concerning diseases or disease stages.

Other studies

* Ecology, ecological forecasting

* Biological sequence analysis

* Systems biology for gene network inference or pathways analysis

* Clinical research and pharmaceutical development

* Population dynamics, especially in fisheries science

* Phylogenetics and evolution

* Pharmacodynamics

* Pharmacokinetics

* Neuroimaging

Tools

Many tools can be used for the statistical analysis of biological data. Most of them are also useful in other areas of knowledge, covering a large number of applications. Brief descriptions of some of them follow:

*

ASReml: Software developed by VSNi that can also be used in the R environment as a package. It is designed to estimate variance components under a general linear mixed model using restricted maximum likelihood (REML). Models with fixed and random effects, and with nested or crossed structures, are allowed. It also makes it possible to investigate different variance-covariance matrix structures.

* CycDesigN: A computer package developed by VSNi that helps researchers create experimental designs and analyze data from designs in the classes handled by CycDesigN: resolvable, non-resolvable, partially replicated and crossover designs. It also includes less commonly used designs, such as Latinized ones (e.g. t-Latinized designs).

*

Orange: A programming interface for high-level data processing, data mining and data visualization. It includes tools for gene expression and genomics.

*

R: An open source environment and programming language dedicated to statistical computing and graphics. It is an implementation of the S language. In addition to its functions to read data tables, compute descriptive statistics, and develop and evaluate models, it has a package repository, CRAN, containing packages developed by researchers around the world. This allows the development of functions written to deal with the statistical analysis of data that comes from specific applications. In the case of bioinformatics, for example, there are packages located in the main repository (CRAN) and in others, such as Bioconductor. It is also possible to use packages under development that are shared on hosting services such as GitHub.

*

SAS: Data analysis software widely used in universities, services and industry. Developed by the company of the same name (SAS Institute), it uses the SAS language for programming.

* PLA 3.0: Biostatistical analysis software for regulated environments (e.g. drug testing) that supports Quantitative Response Assays (Parallel-Line, Parallel-Logistics, Slope-Ratio) and Dichotomous Assays (Quantal Response, Binary Assays). It also supports weighting methods for combination calculations and the automatic aggregation of data from independent assays.

*

Weka: Java software for machine learning and data mining, including tools and methods for visualization, clustering, regression, association rules, and classification. There are tools for cross-validation and bootstrapping, and a module for algorithm comparison. Weka can also be run from other programming languages such as Perl or R.

*

Python: image analysis, deep learning, machine learning

*

SQL databases

*

NoSQL


*

NumPy numerical python

*

SciPy

*

SageMath

*

LAPACK linear algebra

*

MATLAB


*

Apache Hadoop

*

Apache Spark

*

Amazon Web Services


Scope and training programs

Almost all educational programmes in biostatistics are at postgraduate level. They are most often found in schools of public health, affiliated with schools of medicine, forestry, or agriculture, or as a focus of application in departments of statistics.

In the United States, where several universities have dedicated biostatistics departments, many other top-tier universities integrate biostatistics faculty into statistics or other departments, such as epidemiology. Thus, departments carrying the name "biostatistics" may exist under quite different structures. For instance, relatively new biostatistics departments have been founded with a focus on bioinformatics and computational biology, whereas older departments, typically affiliated with schools of public health, will have more traditional lines of research involving epidemiological studies and clinical trials as well as bioinformatics. In larger universities around the world, where both a statistics and a biostatistics department exist, the degree of integration between the two departments may range from the bare minimum to very close collaboration. In general, the difference between a statistics program and a biostatistics program is twofold: (i) statistics departments will often host theoretical/methodological research, which is less common in biostatistics programs, and (ii) statistics departments have lines of research that may include biomedical applications but also other areas such as industry (quality control), business and economics, and biological areas other than medicine.

Specialized journals

* Biostatistics

* International Journal of Biostatistics

* Journal of Epidemiology and Biostatistics

* Biostatistics and Public Health

* Biometrics

* Biometrika

* Biometrical Journal

* Communications in Biometry and Crop Science

* Statistical Applications in Genetics and Molecular Biology

* Statistical Methods in Medical Research

* Pharmaceutical Statistics

* Statistics in Medicine

See also

* Bioinformatics

* Epidemiological method

* Epidemiology

* Group size measures

* Health indicator

* Mathematical and theoretical biology


External links

* The International Biometric Society

* The Collection of Biostatistics Research Archive

* Guide to Biostatistics (MedPageToday.com)

* Biomedical Statistics
