In probability theory and statistics, the beta distribution is a family of continuous probability distributions defined on the interval [0, 1] in terms of two positive parameters, denoted by ''alpha'' (''α'') and ''beta'' (''β''), that appear as exponents of the random variable and control the shape of the distribution.

The beta distribution has been applied to model the behavior of random variables limited to intervals of finite length in a wide variety of disciplines. The beta distribution is a suitable model for the random behavior of percentages and proportions.

In Bayesian inference, the beta distribution is the conjugate prior probability distribution for the Bernoulli, binomial, negative binomial and geometric distributions.

The formulation of the beta distribution discussed here is also known as the beta distribution of the first kind, whereas ''beta distribution of the second kind'' is an alternative name for the beta prime distribution. The generalization to multiple variables is called a Dirichlet distribution.

Definitions

Probability density function

The probability density function (PDF) of the beta distribution, for 0 ≤ ''x'' ≤ 1 or 0 < ''x'' < 1, and shape parameters ''α'', ''β'' > 0, is a power function of the variable ''x'' and of its reflection (1 − ''x'') as follows:

: <math>f(x;\alpha,\beta) = \frac{x^{\alpha-1}(1-x)^{\beta-1}}{\Beta(\alpha,\beta)} = \frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\,\Gamma(\beta)}\, x^{\alpha-1}(1-x)^{\beta-1}</math>

where Γ(''z'') is the gamma function. The beta function, <math>\Beta</math>, is a normalization constant to ensure that the total probability is 1. In the above equations ''x'' is a realization (an observed value that actually occurred) of a random process ''X''.
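As an illustration, the density can be evaluated with the standard library alone. The sketch below assumes the usual form of the density, ''x''<sup>''α''−1</sup>(1 − ''x'')<sup>''β''−1</sup>/Β(''α'', ''β'') with Β(''α'', ''β'') = Γ(''α'')Γ(''β'')/Γ(''α'' + ''β''), and works in log space to avoid overflow for large shape parameters. The function name `beta_pdf` is chosen here for illustration, and the sketch only handles the open interval (0, 1).

```python
import math


def beta_pdf(x: float, alpha: float, beta: float) -> float:
    """Density of Beta(alpha, beta) at a point x in the open interval (0, 1)."""
    if alpha <= 0 or beta <= 0:
        raise ValueError("shape parameters must be positive")
    if not 0.0 < x < 1.0:
        raise ValueError("this sketch only evaluates the open interval (0, 1)")
    # log B(alpha, beta) = lgamma(alpha) + lgamma(beta) - lgamma(alpha + beta)
    log_norm = math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta)
    # log of x^(alpha-1) * (1-x)^(beta-1), minus the log normalization constant
    log_density = (alpha - 1) * math.log(x) + (beta - 1) * math.log1p(-x) - log_norm
    return math.exp(log_density)


# Beta(2, 2) has density 6 x (1 - x), so the value at 0.5 should be close to 1.5.
print(beta_pdf(0.5, 2.0, 2.0))
```

Working with `math.lgamma` rather than `math.gamma` is a design choice of this sketch: the gamma function overflows a float for arguments above roughly 171, while its logarithm does not.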

This definition includes both ends ''x'' = 0 and ''x'' = 1, which is consistent with definitions for other continuous distributions supported on a bounded interval which are special cases of the beta distribution, for example the arcsine distribution, and consistent with several authors, like N. L. Johnson and S. Kotz. However, the inclusion of ''x'' = 0 and ''x'' = 1 does not work for ''α'', ''β'' < 1; accordingly, several other authors, including W. Feller, choose to exclude the ends ''x'' = 0 and ''x'' = 1 (so that the two ends are not actually part of the domain of the density function) and consider instead 0 < ''x'' < 1.

Several authors, including N. L. Johnson and S. Kotz, use the symbols ''p'' and ''q'' (instead of ''α'' and ''β'') for the shape parameters of the beta distribution, reminiscent of the symbols traditionally used for the parameters of the Bernoulli distribution, because the beta distribution approaches the Bernoulli distribution in the limit when both shape parameters ''α'' and ''β'' approach the value of zero.

In the following, a random variable ''X'' beta-distributed with parameters ''α'' and ''β'' will be denoted by:

: <math>X \sim \operatorname{Beta}(\alpha, \beta)</math>

Other notations for beta-distributed random variables are also used in the statistical literature.

Cumulative distribution function

The cumulative distribution function is

: <math>F(x;\alpha,\beta) = \frac{\Beta(x;\alpha,\beta)}{\Beta(\alpha,\beta)} = I_x(\alpha,\beta)</math>

where <math>\Beta(x;\alpha,\beta)</math> is the incomplete beta function and <math>I_x(\alpha,\beta)</math> is the regularized incomplete beta function.
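A minimal numerical sketch of the cumulative distribution function follows. It does not use a dedicated incomplete beta routine; instead it assumes that plain Simpson quadrature of ''t''<sup>''α''−1</sup>(1 − ''t'')<sup>''β''−1</sup> over [0, ''x''] is accurate enough, which is only reasonable when ''α'', ''β'' ≥ 1 so that the integrand is bounded. The names `beta_function` and `beta_cdf` and the default step count are illustrative choices.

```python
import math


def beta_function(a: float, b: float) -> float:
    """Complete beta function B(a, b), computed via log-gamma."""
    return math.exp(math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b))


def beta_cdf(x: float, alpha: float, beta: float, steps: int = 2000) -> float:
    """Regularized incomplete beta I_x(alpha, beta) by Simpson's rule (alpha, beta >= 1)."""
    if alpha < 1 or beta < 1:
        raise ValueError("this sketch assumes alpha >= 1 and beta >= 1")
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of subintervals
    h = x / steps

    def integrand(t: float) -> float:
        return t ** (alpha - 1) * (1.0 - t) ** (beta - 1)

    total = integrand(0.0) + integrand(x)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(i * h)
    incomplete = total * h / 3.0  # approximates B(x; alpha, beta)
    return incomplete / beta_function(alpha, beta)


# For Beta(2, 2) the CDF is 3x^2 - 2x^3, so the value at 0.5 should be close to 0.5.
print(beta_cdf(0.5, 2.0, 2.0))
```

For shape parameters below 1 the integrand is singular at an endpoint, and a library implementation of the regularized incomplete beta function would be the safer choice.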

Alternative parameterizations

Two parameters

Mean and sample size

The beta distribution may also be reparameterized in terms of its mean ''μ'' (0 < ''μ'' < 1) and the sum of the two shape parameters ''ν'' = ''α'' + ''β'' > 0 (p. 83). Denoting by αPosterior and βPosterior the shape parameters of the posterior beta distribution resulting from applying Bayes' theorem to a binomial likelihood function and a prior probability, the interpretation of the sum of both shape parameters as sample size = ''ν'' = αPosterior + βPosterior is only correct for the Haldane prior probability Beta(0,0). Specifically, for the Bayes (uniform) prior Beta(1,1) the correct interpretation would be sample size = αPosterior + βPosterior − 2, or ''ν'' = (sample size) + 2. For sample size much larger than 2, the difference between these two priors becomes negligible. (See section Bayesian inference for further details.) ''ν'' = ''α'' + ''β'' is referred to as the "sample size" of a beta distribution, but one should remember that it is, strictly speaking, the "sample size" of a binomial likelihood function only when using a Haldane Beta(0,0) prior in Bayes' theorem.

This parametrization may be useful in Bayesian parameter estimation. For example, one may administer a test to a number of individuals. If it is assumed that each person's score (0 ≤ ''θ'' ≤ 1) is drawn from a population-level Beta distribution, then an important statistic is the mean of this population-level distribution. The mean and sample size parameters are related to the shape parameters α and β via

: ''α'' = ''μν'', ''β'' = (1 − ''μ'')''ν''

Under this parametrization, one may place an uninformative prior probability over the mean, and a vague prior probability (such as an exponential or gamma distribution) over the positive reals for the sample size, if they are independent, and prior data and/or beliefs justify it.
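The conversion ''α'' = ''μν'', ''β'' = (1 − ''μ'')''ν'' is short enough to sketch directly. The function name and the argument checks below are illustrative assumptions, not part of any particular library.

```python
def shapes_from_mean_and_sample_size(mu: float, nu: float) -> tuple[float, float]:
    """Return (alpha, beta) for a beta distribution with mean mu and nu = alpha + beta."""
    if not 0.0 < mu < 1.0:
        raise ValueError("the mean must lie strictly between 0 and 1")
    if nu <= 0.0:
        raise ValueError("the sample-size parameter nu must be positive")
    alpha = mu * nu
    beta = (1.0 - mu) * nu
    return alpha, beta


alpha, beta = shapes_from_mean_and_sample_size(0.25, 8.0)
print(alpha, beta)              # the two shape parameters
print(alpha / (alpha + beta))   # recovers the mean
```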

Mode and concentration

Concave beta distributions, which have ''α'', ''β'' > 1, can be parametrized in terms of mode and "concentration". The mode, <math>\omega = \frac{\alpha - 1}{\alpha + \beta - 2}</math>, and concentration, <math>\kappa = \alpha + \beta</math>, can be used to define the usual shape parameters as follows:

: <math>\alpha = \omega(\kappa - 2) + 1, \qquad \beta = (1 - \omega)(\kappa - 2) + 1</math>

For the mode, 0 < ''ω'' < 1, to be well-defined, we need ''α'', ''β'' > 1, or equivalently ''κ'' > 2. With these shape parameters the exponents of the density are ''ω''(''κ'' − 2) and (1 − ''ω'')(''κ'' − 2), so the quantity ''κ'' − 2 directly scales the sufficient statistics, log(''x'') and log(1 − ''x''). Note also that in the limit, ''κ'' → 2, the distribution becomes flat.
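A minimal sketch of the mode and concentration parametrization follows. It assumes the conventions ''ω'' = (''α'' − 1)/(''α'' + ''β'' − 2) for the mode and ''κ'' = ''α'' + ''β'' for the concentration, which give ''α'' = ''ω''(''κ'' − 2) + 1 and ''β'' = (1 − ''ω'')(''κ'' − 2) + 1. The function name is hypothetical.

```python
def shapes_from_mode_and_concentration(omega: float, kappa: float) -> tuple[float, float]:
    """Return (alpha, beta) given mode omega in (0, 1) and concentration kappa > 2."""
    if not 0.0 < omega < 1.0:
        raise ValueError("the mode must lie strictly between 0 and 1")
    if kappa <= 2.0:
        raise ValueError("the concentration must exceed 2 for an interior mode")
    alpha = omega * (kappa - 2.0) + 1.0
    beta = (1.0 - omega) * (kappa - 2.0) + 1.0
    return alpha, beta


alpha, beta = shapes_from_mode_and_concentration(0.25, 6.0)
print(alpha, beta)
# Round trip: the mode computed from the shape parameters matches the input.
print((alpha - 1.0) / (alpha + beta - 2.0))
```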

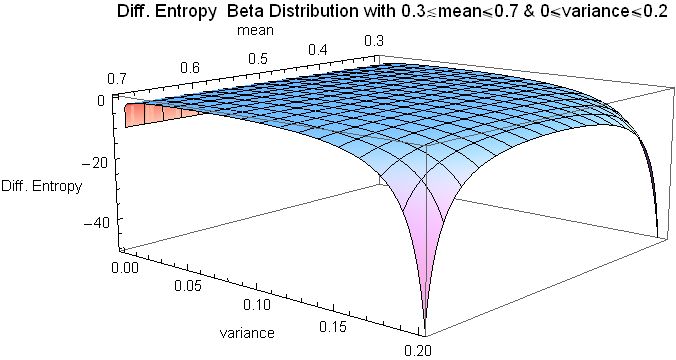

Mean and variance

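Solving the system of (coupled) equations for the mean and the variance of the beta distribution in terms of the original parameters ''α'' and ''β'', one can express the ''α'' and ''β'' parameters in terms of the mean (''μ'') and the variance (var):

: <math>\nu = \alpha + \beta = \frac{\mu(1-\mu)}{\mathrm{var}} - 1, \text{ where } \nu > 0, \text{ therefore } \mathrm{var} < \mu(1-\mu)</math>

: <math>\alpha = \mu\nu = \mu\left(\frac{\mu(1-\mu)}{\mathrm{var}} - 1\right), \text{ if } \mathrm{var} < \mu(1-\mu)</math>

: <math>\beta = (1-\mu)\nu = (1-\mu)\left(\frac{\mu(1-\mu)}{\mathrm{var}} - 1\right), \text{ if } \mathrm{var} < \mu(1-\mu)</math>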
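The inversion from mean and variance to shape parameters can be sketched as below. The sketch assumes the standard moment relations ''μ'' = ''α''/(''α'' + ''β'') and var = ''αβ''/((''α'' + ''β'')<sup>2</sup>(''α'' + ''β'' + 1)), from which ''α'' + ''β'' = ''μ''(1 − ''μ'')/var − 1. The function name is illustrative.

```python
def shapes_from_mean_and_variance(mu: float, var: float) -> tuple[float, float]:
    """Return (alpha, beta) matching a given mean and variance (method of moments)."""
    if not 0.0 < mu < 1.0:
        raise ValueError("the mean must lie strictly between 0 and 1")
    if not 0.0 < var < mu * (1.0 - mu):
        raise ValueError("the variance must satisfy 0 < var < mu * (1 - mu)")
    nu = mu * (1.0 - mu) / var - 1.0  # nu = alpha + beta
    return mu * nu, (1.0 - mu) * nu


# Beta(2, 3) has mean 2/5 and variance 6 / (25 * 6) = 0.04.
print(shapes_from_mean_and_variance(0.4, 0.04))
```

The check on the variance matters: a variance at or above ''μ''(1 − ''μ'') would produce non-positive shape parameters, which no beta distribution can have.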

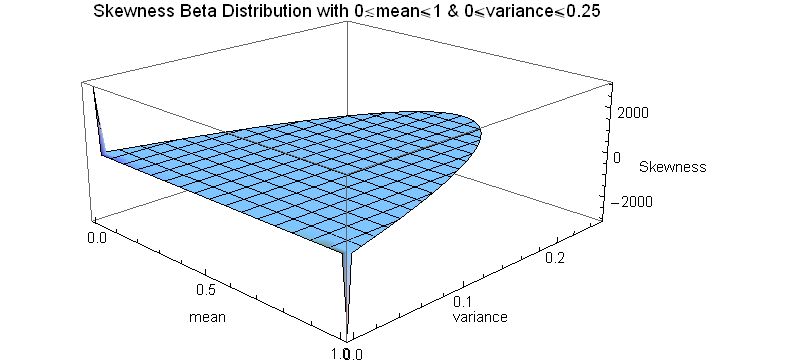

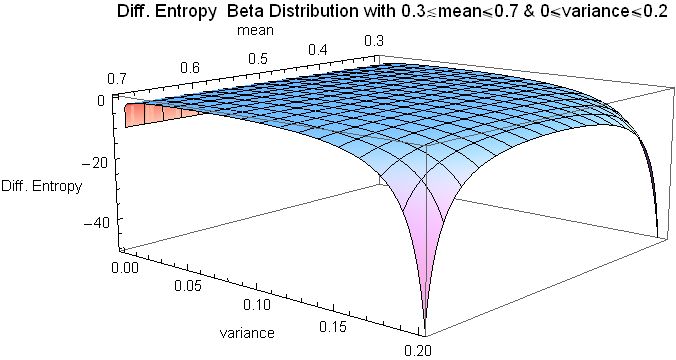

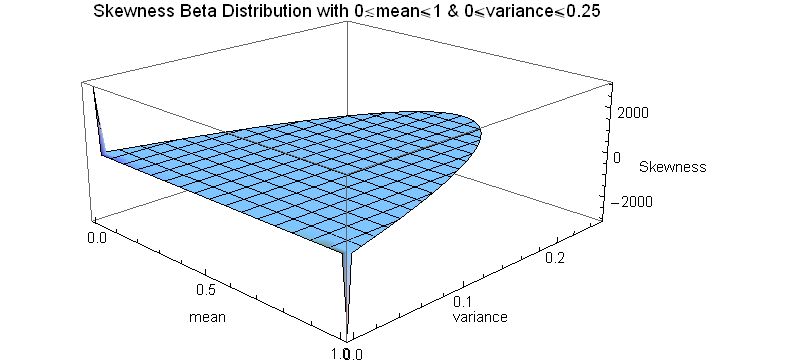

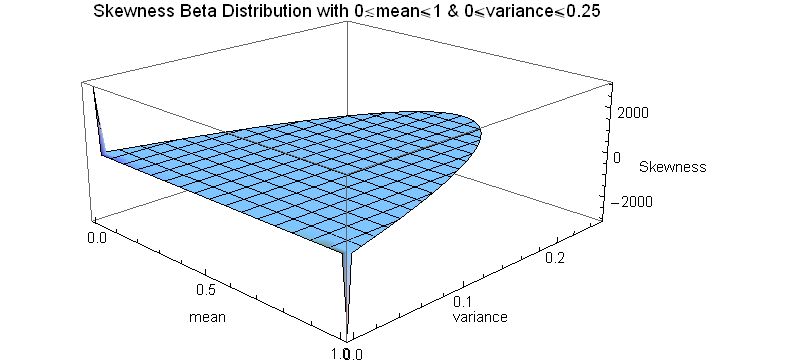

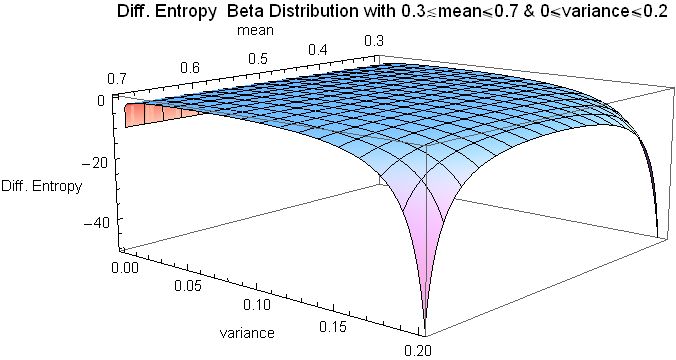

This parametrization of the beta distribution may lead to a more intuitive understanding than the one based on the original parameters ''α'' and ''β''. For example, by expressing the mode, skewness, excess kurtosis and differential entropy in terms of the mean and the variance:

Four parameters

A beta distribution with the two shape parameters ''α'' and ''β'' is supported on the range [0, 1] or (0, 1). It is possible to alter the location and scale of the distribution by introducing two further parameters representing the minimum, ''a'', and maximum ''c'' (''c'' > ''a''), values of the distribution, by a linear transformation substituting the non-dimensional variable ''x'' in terms of the new variable ''y'' (with support [''a'', ''c''] or (''a'', ''c'')) and the parameters ''a'' and ''c'':

: <math>y = x(c - a) + a, \text{ therefore } x = \frac{y - a}{c - a}.</math>

The probability density function of the four parameter beta distribution is equal to the two parameter distribution, divided by the range (''c'' − ''a'') (so that the total area under the density curve equals a probability of one), and with the ''y'' variable shifted and scaled as follows:

:: <math>f(y;\alpha,\beta,a,c) = \frac{f(x;\alpha,\beta)}{c-a} = \frac{\left(\frac{y-a}{c-a}\right)^{\alpha-1}\left(\frac{c-y}{c-a}\right)^{\beta-1}}{(c-a)\,\Beta(\alpha,\beta)} = \frac{(y-a)^{\alpha-1}(c-y)^{\beta-1}}{(c-a)^{\alpha+\beta-1}\,\Beta(\alpha,\beta)}</math>
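The change of variable can be sketched in code as follows, assuming the linear map ''x'' = (''y'' − ''a'')/(''c'' − ''a'') and the two parameter density ''x''<sup>''α''−1</sup>(1 − ''x'')<sup>''β''−1</sup>/Β(''α'', ''β''). The name `beta4_pdf` is hypothetical, and only interior points ''a'' < ''y'' < ''c'' are handled.

```python
import math


def beta4_pdf(y: float, alpha: float, beta: float, a: float, c: float) -> float:
    """Density of the four parameter beta distribution on (a, c) at the point y."""
    if alpha <= 0 or beta <= 0:
        raise ValueError("shape parameters must be positive")
    if c <= a:
        raise ValueError("the maximum c must exceed the minimum a")
    if not a < y < c:
        raise ValueError("this sketch only evaluates interior points a < y < c")
    x = (y - a) / (c - a)  # map y back to the unit interval
    log_norm = math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta)
    log_density_x = (alpha - 1) * math.log(x) + (beta - 1) * math.log1p(-x) - log_norm
    # Dividing by the range keeps the total area under the curve equal to one.
    return math.exp(log_density_x) / (c - a)


# Beta(2, 2) stretched onto (10, 20): the midpoint density is about 1.5 / 10.
print(beta4_pdf(15.0, 2.0, 2.0, 10.0, 20.0))
```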

That a random variable ''Y'' is beta-distributed with four parameters ''α'', ''β'', ''a'', and ''c'' will be denoted by:

: <math>Y \sim \operatorname{Beta}(\alpha, \beta, a, c)</math>

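Some measures of central location are scaled (by (''c'' − ''a'')) and shifted (by ''a''), as follows:

: <math>\mu_Y = \mu_X (c-a) + a = \frac{\alpha}{\alpha+\beta}(c-a) + a = \frac{\alpha c + \beta a}{\alpha+\beta}</math>

: <math>\text{mode}(Y) = \text{mode}(X)(c-a) + a = \frac{\alpha-1}{\alpha+\beta-2}(c-a) + a = \frac{(\alpha-1)c + (\beta-1)a}{\alpha+\beta-2}, \text{ if } \alpha, \beta > 1</math>

: <math>\text{median}(Y) = \text{median}(X)(c-a) + a</math>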

Note: the geometric mean and harmonic mean cannot be transformed by a linear transformation in the way that the mean, median and mode can.

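The shape parameters of ''Y'' can be written in terms of its mean and variance as

: <math>\alpha = \frac{\mu_Y - a}{c-a}\left(\frac{(\mu_Y - a)(c - \mu_Y)}{\sigma_Y^2} - 1\right)</math>

: <math>\beta = \frac{c - \mu_Y}{c-a}\left(\frac{(\mu_Y - a)(c - \mu_Y)}{\sigma_Y^2} - 1\right)</math>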

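The statistical dispersion measures are scaled (they do not need to be shifted because they are already centered on the mean) by the range (''c'' − ''a''), linearly for the mean deviation and nonlinearly for the variance:

:: <math>\operatorname{E}[|Y - \mu_Y|] = \operatorname{E}[|X - \mu_X|]\,(c-a) = \frac{2\alpha^{\alpha}\beta^{\beta}}{\Beta(\alpha,\beta)(\alpha+\beta)^{\alpha+\beta+1}}(c-a)</math>

:: <math>\operatorname{var}(Y) = \operatorname{var}(X)(c-a)^2 = \frac{\alpha\beta(c-a)^2}{(\alpha+\beta)^2(\alpha+\beta+1)}</math>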

Since the skewness and excess kurtosis are non-dimensional quantities (as moments centered on the mean and normalized by the standard deviation), they are independent of the parameters ''a'' and ''c'', and therefore equal to the corresponding expressions for ''X'' (with support [0, 1] or (0, 1)):

:: <math>\text{skewness}(Y) = \text{skewness}(X) = \frac{2(\beta-\alpha)\sqrt{\alpha+\beta+1}}{(\alpha+\beta+2)\sqrt{\alpha\beta}}</math>

:: <math>\text{kurtosis excess}(Y) = \text{kurtosis excess}(X) = \frac{6\left[(\alpha-\beta)^2(\alpha+\beta+1) - \alpha\beta(\alpha+\beta+2)\right]}{\alpha\beta(\alpha+\beta+2)(\alpha+\beta+3)}</math>

Properties

Measures of central tendency

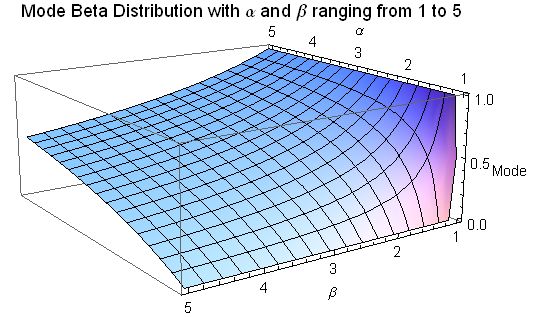

Mode

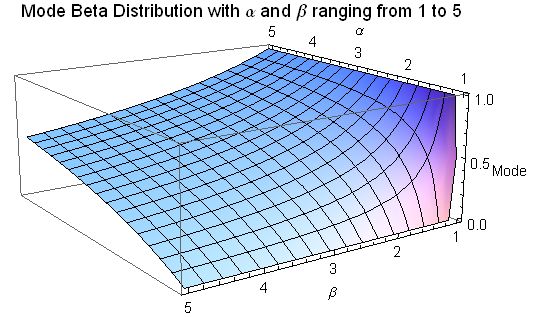

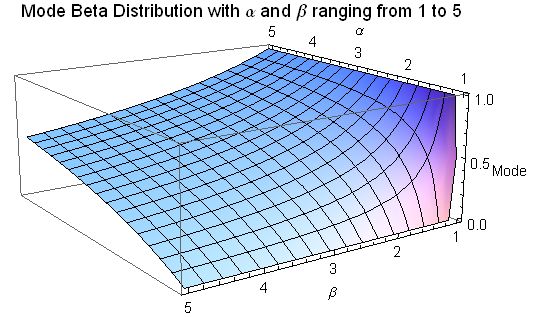

The mode of a Beta distributed random variable ''X'' with ''α'', ''β'' > 1 is the most likely value of the distribution (corresponding to the peak in the PDF), and is given by the following expression:

: <math>\frac{\alpha - 1}{\alpha + \beta - 2}</math>

For other parameter values, what is called the mode depends on several points on which conventions differ:

* Whether the ends are part of the domain of the density function

* Whether a singularity can ever be called a ''mode''

* Whether cases with two maxima should be called ''bimodal''
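For the case ''α'', ''β'' > 1, where none of the questions above arise, the mode can be checked numerically. The sketch below assumes the closed form (''α'' − 1)/(''α'' + ''β'' − 2) and compares it with a brute-force search over a grid of the unnormalized density. The function names and the grid resolution are illustrative.

```python
def beta_mode(alpha: float, beta: float) -> float:
    """Interior mode of Beta(alpha, beta); only defined here for alpha, beta > 1."""
    if alpha <= 1.0 or beta <= 1.0:
        raise ValueError("this sketch only covers alpha > 1 and beta > 1")
    return (alpha - 1.0) / (alpha + beta - 2.0)


def unnormalized_density(x: float, alpha: float, beta: float) -> float:
    """x^(alpha-1) * (1-x)^(beta-1); the normalization does not move the peak."""
    return x ** (alpha - 1.0) * (1.0 - x) ** (beta - 1.0)


# Brute-force check on a grid with spacing 0.001 for Beta(3, 2).
grid = [i / 1000 for i in range(1, 1000)]
best = max(grid, key=lambda x: unnormalized_density(x, 3.0, 2.0))
print(beta_mode(3.0, 2.0), best)  # the two values agree to within the grid spacing
```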

Median

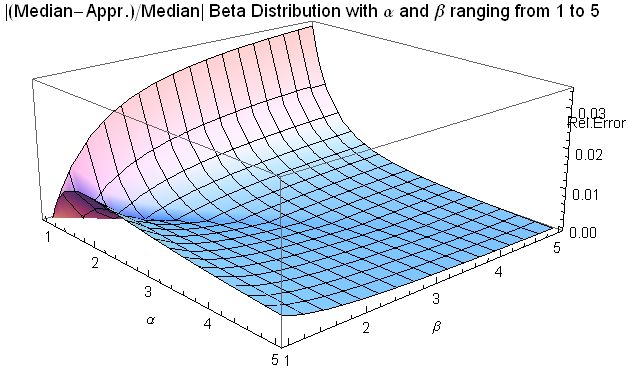

The median of the beta distribution is the unique real number ''x'' for which the regularized incomplete beta function <math>I_x(\alpha,\beta) = \tfrac{1}{2}</math>. There is no general closed-form expression for the median of the beta distribution for arbitrary values of ''α'' and ''β''. Closed-form expressions for particular values of the parameters ''α'' and ''β'' follow:

* For symmetric cases ''α'' = ''β'', median = 1/2.

* For ''α'' = 1 and ''β'' > 0, median = <math>1 - 2^{-1/\beta}</math> (this case is the mirror-image of the power function [0, 1] distribution)

* For ''α'' > 0 and ''β'' = 1, median = <math>2^{-1/\alpha}</math> (this case is the power function [0, 1] distribution)

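A reasonable approximation of the value of the median of the beta distribution, for both ''α'' and ''β'' greater than or equal to one, is given by the formula

: <math>\text{median} \approx \frac{\alpha - \tfrac{1}{3}}{\alpha + \beta - \tfrac{2}{3}} \text{ for } \alpha, \beta \ge 1.</math>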

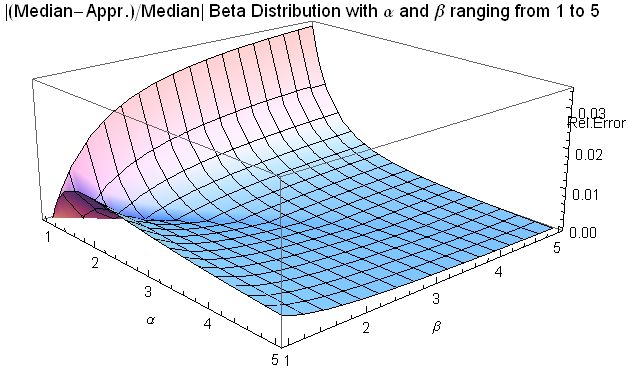

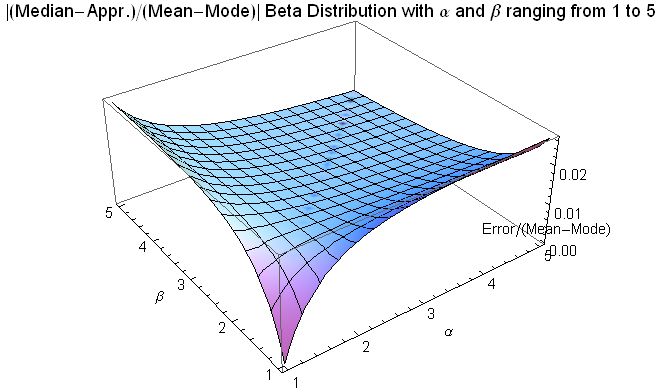

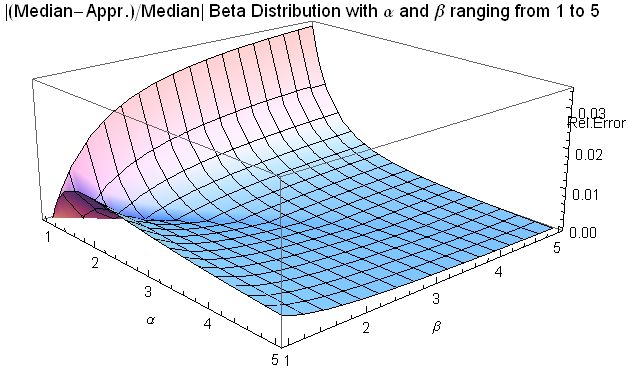

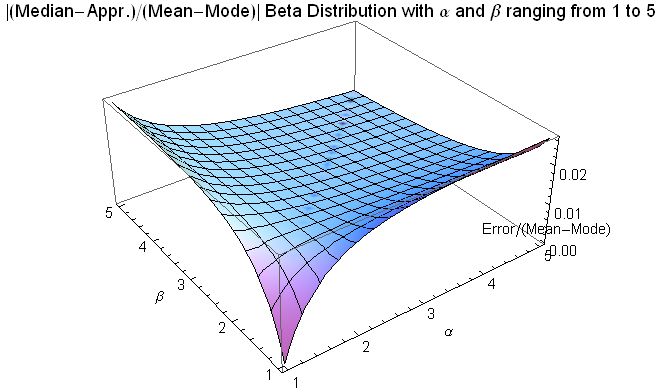

When α, β ≥ 1, the ]relative error

The approximation error in a data value is the discrepancy between an exact value and some ''approximation'' to it. This error can be expressed as an absolute error (the numerical amount of the discrepancy) or as a relative error (the absolute er ...

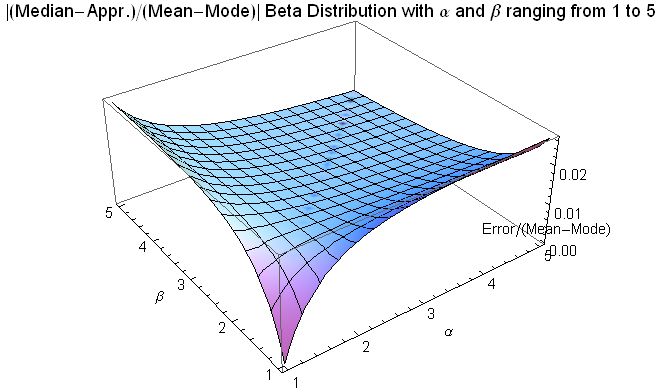

(the absolute error divided by the median) in this approximation is less than 4% and for both α ≥ 2 and β ≥ 2 it is less than 1%. The absolute error divided by the difference between the mean and the mode is similarly small:
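The error bound can be explored numerically. The sketch below assumes that the approximation in question is the commonly cited formula (''α'' − 1/3)/(''α'' + ''β'' − 2/3), and it finds a reference median by bisection on a CDF computed with Simpson quadrature, which is itself an approximation and is restricted here to ''α'', ''β'' ≥ 1. All function names are illustrative.

```python
import math


def beta_cdf(x: float, alpha: float, beta: float, steps: int = 2000) -> float:
    """Regularized incomplete beta by Simpson's rule; assumes alpha, beta >= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    h = x / steps  # steps is even, as Simpson's rule requires
    f = lambda t: t ** (alpha - 1) * (1.0 - t) ** (beta - 1)
    total = f(0.0) + f(x)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(i * h)
    norm = math.exp(math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta))
    return total * h / 3.0 / norm


def beta_median(alpha: float, beta: float, tol: float = 1e-10) -> float:
    """Solve I_x(alpha, beta) = 1/2 for x by bisection on [0, 1]."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if beta_cdf(mid, alpha, beta) < 0.5:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def median_approximation(alpha: float, beta: float) -> float:
    """Assumed approximation (alpha - 1/3) / (alpha + beta - 2/3), for alpha, beta >= 1."""
    return (alpha - 1.0 / 3.0) / (alpha + beta - 2.0 / 3.0)


exact = beta_median(2.0, 5.0)
approx = median_approximation(2.0, 5.0)
print(exact, approx, abs(approx - exact) / exact)  # the last value is the relative error
```

The same bisection also reproduces the closed forms listed earlier; for example, the median of Beta(3, 1) comes out close to 2<sup>−1/3</sup>.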

Mean

The expected value (mean) (''μ'') of a Beta distribution random variable ''X'' with two parameters ''α'' and ''β'' is a function of only the ratio ''β''/''α'' of these parameters:

: <math>\mu = \operatorname{E}[X] = \frac{\alpha}{\alpha + \beta} = \frac{1}{1 + \frac{\beta}{\alpha}}</math>

For ''β''/''α'' → 0, or for ''α''/''β'' → ∞, the mean is located at the right end, ''x'' = 1. The beta distribution becomes a one-point degenerate distribution with a Dirac delta function spike at the right end, ''x'' = 1, with probability 1, and zero probability everywhere else. There is 100% probability (absolute certainty) concentrated at the right end, ''x'' = 1.

Similarly, for ''β''/''α'' → ∞, or for ''α''/''β'' → 0, the mean is located at the left end, ''x'' = 0. The beta distribution becomes a one-point degenerate distribution with a Dirac delta function spike at the left end, ''x'' = 0, with probability 1, and zero probability everywhere else. There is 100% probability (absolute certainty) concentrated at the left end, ''x'' = 0. Following are the limits with one parameter finite (non-zero) and the other approaching these limits:

: <math>\lim_{\beta \to 0} \mu = \lim_{\alpha \to \infty} \mu = 1, \qquad \lim_{\alpha \to 0} \mu = \lim_{\beta \to \infty} \mu = 0</math>
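The dependence of the mean on the ratio ''β''/''α'' alone can be illustrated with a short sketch. It assumes the standard expression ''μ'' = ''α''/(''α'' + ''β''), rewritten as 1/(1 + ''β''/''α''); the function names are illustrative.

```python
def beta_mean(alpha: float, beta: float) -> float:
    """Mean of Beta(alpha, beta), written in terms of the two shape parameters."""
    return alpha / (alpha + beta)


def beta_mean_from_ratio(ratio: float) -> float:
    """The same mean, written as a function of the ratio beta / alpha only."""
    return 1.0 / (1.0 + ratio)


# Both forms agree for Beta(2, 3), where beta / alpha = 1.5.
print(beta_mean(2.0, 3.0), beta_mean_from_ratio(1.5))

# As the ratio shrinks the mean moves toward the right end; as it grows, toward the left end.
for ratio in (1e-6, 1.0, 1e6):
    print(ratio, beta_mean_from_ratio(ratio))
```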

While for typical unimodal distributions (with centrally located modes, inflexion points at both sides of the mode, and longer tails) (with Beta(''α'', ''β'') such that ''α'', ''β'' > 2) it is known that the sample mean (as an estimate of location) is not as robust as the sample median, the opposite is the case for uniform or "U-shaped" bimodal distributions (with Beta(''α'', ''β'') such that ''α'', ''β'' ≤ 1), with the modes located at the ends of the distribution. Mosteller and Tukey remark on this behavior. An example occurs for random walks: since the probability for the time of the last visit to the origin in a random walk is distributed as the arcsine distribution Beta(1/2, 1/2), the mean of a number of realizations of a random walk is a much more robust estimator than the median (which is an inappropriate sample measure estimate in this case).

Geometric mean

The logarithm of the geometric mean <math>G_X</math> of a distribution with random variable ''X'' is the arithmetic mean of ln(''X''), or, equivalently, its expected value:

: <math>\ln G_X = \operatorname{E}[\ln X]</math>
