
Generative Adversarial Network

A generative adversarial network (GAN) is a class of machine learning frameworks designed by Ian Goodfellow and his colleagues in June 2014. Two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. Given a training set, this technique learns to generate new data with the same statistics as the training set. For example, a GAN trained on photographs can generate new photographs that look at least superficially authentic to human observers, having many realistic characteristics. Though originally proposed as a form of generative model for unsupervised learning, GANs have also proved useful for semi-supervised learning, fully supervised learning, and reinforcement learning. The core idea of a GAN is based on the "indirect" training through the discriminator, another neural network that can tell how "realistic" the input seems, which itself is also being updated dynamically. This means that the generator is not t ...

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making predicti ...

Latent Space

A latent space, also known as a latent feature space or embedding space, is an embedding of a set of items within a manifold in which items resembling each other are positioned closer to one another. Position within the latent space can be viewed as being defined by a set of latent variables that emerge from the resemblances among the objects. In most cases, the dimensionality of the latent space is chosen to be lower than the dimensionality of the feature space from which the data points are drawn, making the construction of a latent space an example of dimensionality reduction, which can also be viewed as a form of data compression. Latent spaces are usually fit via machine learning, and they can then be used as feature spaces in machine learning models, including classifiers and other supervised predictors. The interpretation of the latent spaces of machine learning models is an active field of study, but latent space interpretation is difficult to achieve. Du ...

Probability Axioms

The Kolmogorov axioms are the foundations of probability theory introduced by Russian mathematician Andrey Kolmogorov in 1933. These axioms remain central and have direct contributions to mathematics, the physical sciences, and real-world probability cases. An alternative approach to formalising probability, favoured by some Bayesians, is given by Cox's theorem. Axioms: The assumptions as to setting up the axioms can be summarised as follows: Let (\Omega, F, P) be a measure space with P(E) being the probability of some event E, and P(\Omega) = 1. Then (\Omega, F, P) is a probability space, with sample space \Omega, event space F and probability measure P. First axiom: The probability of an event is a non-negative real number: P(E) \in \mathbb{R}, P(E) \geq 0 \qquad \forall E \in F, where F is the event space. It follows that P(E) is always finite, in contrast with more general measure theory. Theories which assign negative probability relax the first axiom. Second axiom ...
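
The setup above can be checked mechanically on a small finite sample space. The following is a minimal sketch, not a general implementation: the helper names (`powerset`, `make_measure`, `satisfies_axioms`) are hypothetical, the event space F is assumed to be the full power set of \Omega, and additivity over pairs of disjoint events is used as the finite stand-in for the countable additivity required by the third axiom.

```python
from fractions import Fraction
from itertools import chain, combinations


def powerset(omega):
    """All subsets of a finite sample space, used here as the event space F."""
    items = list(omega)
    subsets = chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1)
    )
    return [frozenset(s) for s in subsets]


def make_measure(weights):
    """Build P(E) as the sum of per-outcome weights (exact rational arithmetic)."""
    def P(event):
        return sum((weights[o] for o in event), Fraction(0))
    return P


def satisfies_axioms(omega, P):
    """Check non-negativity, P(Omega) = 1, and additivity on disjoint pairs."""
    events = powerset(omega)
    non_negative = all(P(e) >= 0 for e in events)
    unit_measure = P(frozenset(omega)) == 1
    additive = all(
        P(a | b) == P(a) + P(b)
        for a in events
        for b in events
        if not (a & b)  # only disjoint pairs are constrained
    )
    return non_negative and unit_measure and additive


# A fair six-sided die: every outcome has weight 1/6.
die = range(1, 7)
fair = make_measure({face: Fraction(1, 6) for face in die})
print(satisfies_axioms(die, fair))
```

A signed weighting such as `{"a": Fraction(3, 2), "b": Fraction(-1, 2)}` still sums to one and is additive, but it fails the first axiom, which is the case the text describes as "negative probability".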

Variational Autoencoders

In machine learning, a variational autoencoder (VAE) is an artificial neural network architecture introduced by Diederik P. Kingma and Max Welling, belonging to the families of probabilistic graphical models and variational Bayesian methods. Variational autoencoders are often associated with the autoencoder model because of their architectural affinity, but with significant differences in the goal and mathematical formulation. Variational autoencoders are probabilistic generative models that require neural networks as only a part of their overall structure, as e.g. in VQ-VAE. The neural network components are typically referred to as the encoder and decoder for the first and second component respectively. The first neural network maps the input variable to a latent space that corresponds to the parameters of a variational distribution. In this way, the encoder can produce multiple different samples that all come from the same distribution. The decoder has the opposite function, w ...

Asymptotic Theory (statistics)

In statistics, asymptotic theory, or large sample theory, is a framework for assessing properties of estimators and statistical tests. Within this framework, it is often assumed that the sample size n may grow indefinitely; the properties of estimators and tests are then evaluated under the limit of n \to \infty. In practice, a limit evaluation is considered to be approximately valid for large finite sample sizes too (Höpfner, R. (2014), Asymptotic Statistics, Walter de Gruyter, 286 pp.). Overview: Most statistical problems begin with a dataset of size n. The asymptotic theory proceeds by assuming that it is possible (in principle) to keep collecting additional data, so that the sample size grows without bound, i.e. n \to \infty. Under this assumption, many results can be obtained that are unavailable for samples of finite size. An example is the weak law of large numbers. The law states that for a sequence of independent and identically distributed (IID) random variables X_1, X_2, \ldots, if one value is drawn from each rand ...
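
The weak law of large numbers mentioned above can be illustrated with a small simulation. This is a sketch under stated assumptions: the IID variables are taken to be rolls of a fair six-sided die (expected value 3.5), and `sample_mean` is a hypothetical helper name. It prints how far the sample mean lies from 3.5 as the sample size grows; the exact figures depend on the random seed.

```python
import random


def sample_mean(n, rng):
    """Average of n IID draws from a fair six-sided die."""
    return sum(rng.randint(1, 6) for _ in range(n)) / n


EXPECTED = 3.5  # population mean of a fair die

rng = random.Random(0)  # fixed seed so repeated runs give the same draws
for n in (10, 1_000, 100_000):
    gap = abs(sample_mean(n, rng) - EXPECTED)
    print(f"n = {n:>7}: |sample mean - 3.5| = {gap:.4f}")
```

The gap is not guaranteed to shrink monotonically for any single run; the law only says that large deviations become increasingly improbable as n grows.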

Universal Approximation Theorem

In the mathematical theory of artificial neural networks, universal approximation theorems are results that establish the density of an algorithmically generated class of functions within a given function space of interest. Typically, these results concern the approximation capabilities of the feedforward architecture on the space of continuous functions between two Euclidean spaces, and the approximation is with respect to the compact convergence topology. However, there are also a variety of results between non-Euclidean spaces and other commonly used architectures and, more generally, algorithmically generated sets of functions, such as the convolutional neural network (CNN) architecture, radial basis-functions, or neural networks with specific properties. Most universal approximation theorems can be parsed into two classes. The first quantifies the approximation capabilities of neural networks with an arbitrary number of artificial neurons ("arbitrary width" case) and the ...

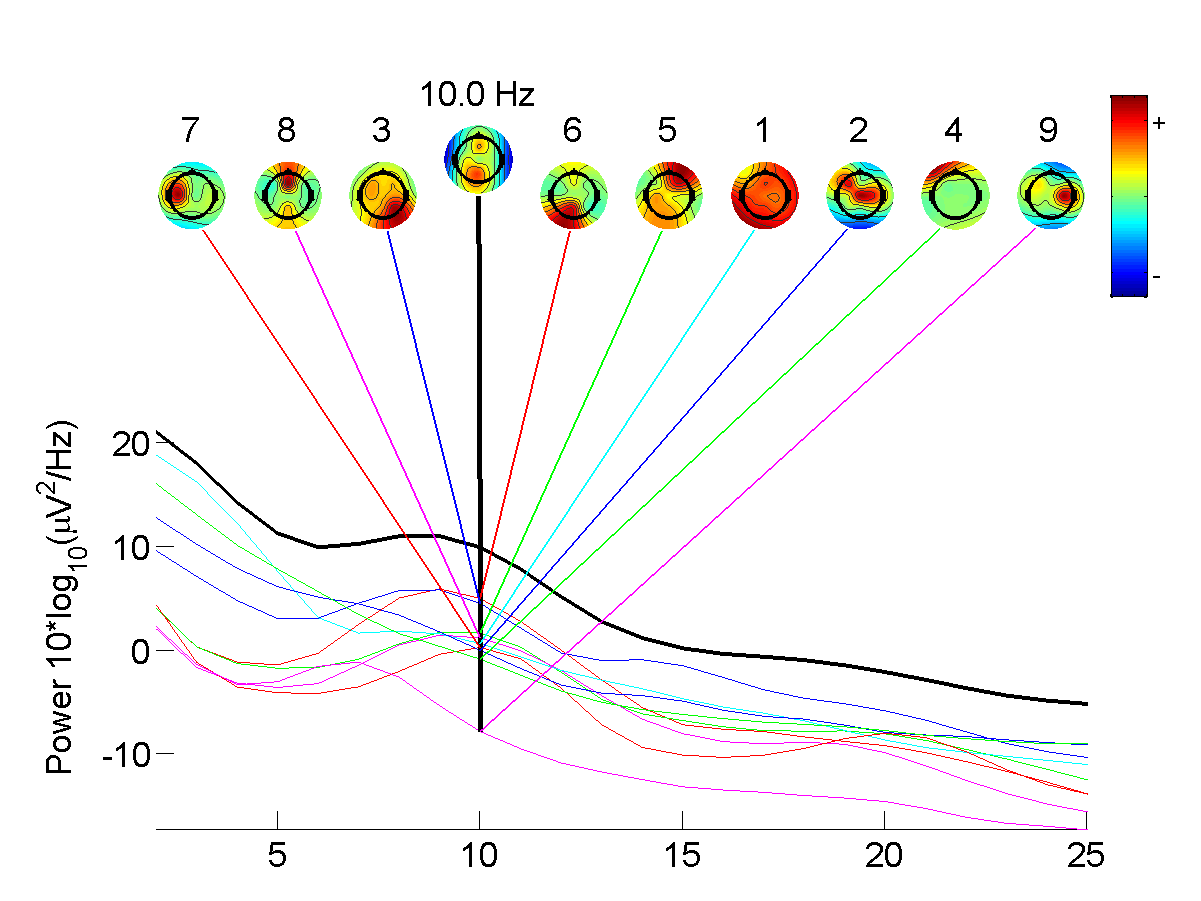

Independent Component Analysis

In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are statistically independent from each other. ICA is a special case of blind source separation. A common example application is the "cocktail party problem" of listening in on one person's speech in a noisy room. Introduction: Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. As an example, sound is usually a signal that is composed of the numerical addition, at each time t, of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence assumption is correct, blind ICA separation of a mixed signal gives very good results. It is also used for signals that a ...

Boltzmann Machine

A Boltzmann machine (also called Sherrington–Kirkpatrick model with external field or stochastic Ising–Lenz–Little model) is a stochastic spin-glass model with an external field, i.e., a Sherrington–Kirkpatrick model, that is a stochastic Ising model. It is a statistical physics technique applied in the context of cognitive science. It is also classified as a Markov random field. Boltzmann machines are theoretically intriguing because of the locality and Hebbian nature of their training algorithm (being trained by Hebb's rule), and because of their parallelism and the resemblance of their dynamics to simple physical processes. Boltzmann machines with unconstrained connectivity have not been proven useful for practical problems in machine learning or inference, but if the connectivity is properly constrained, the learning can be made efficient enough to be useful for practical problems. They are named after the Boltzmann distribution in statistical mechanics, which i ...

WaveNet

WaveNet is a deep neural network for generating raw audio. It was created by researchers at London-based AI firm DeepMind. The technique, outlined in a paper in September 2016, is able to generate relatively realistic-sounding human-like voices by directly modelling waveforms using a neural network method trained with recordings of real speech. Tests with US English and Mandarin reportedly showed that the system outperforms Google's best existing text-to-speech (TTS) systems, although as of 2016 its text-to-speech synthesis was still less convincing than actual human speech. WaveNet's ability to generate raw waveforms means that it can model any kind of audio, including music. History: Generating speech from text is an increasingly common task thanks to the popularity of software such as Apple's Siri, Microsoft's Cortana, Amazon Alexa and the Google Assistant. Most such systems use a variation of a technique that involves concatenating sound fragments together to form recognis ...

Types Of Deep Generative Models

Type may refer to: Science and technology Computing * Typing, producing text via a keyboard, typewriter, etc. * Data type, collection of values used for computations. * File type * TYPE (DOS command), a command to display contents of a file. * Type (Unix), a command in POSIX shells that gives information about commands. * Type safety, the extent to which a programming language discourages or prevents type errors. * Type system, defines a programming language's response to data types. Mathematics * Type (model theory) * Type theory, basis for the study of type systems * Arity or type, the number of operands a function takes * Type, any proposition or set in the intuitionistic type theory * Type, of an entire function ** Exponential type Biology * Type (biology), which fixes a scientific name to a taxon * Dog type, categorization by use or function of domestic dogs Lettering * Type is a design concept for lettering used in typography which helped bring about modern textual pri ...

Flow-based Generative Model

A flow-based generative model is a generative model used in machine learning that explicitly models a probability distribution by leveraging normalizing flow, which is a statistical method using the change-of-variable law of probabilities to transform a simple distribution into a complex one. The direct modeling of likelihood provides many advantages. For example, the negative log-likelihood can be directly computed and minimized as the loss function. Additionally, novel samples can be generated by sampling from the initial distribution, and applying the flow transformation. In contrast, many alternative generative modeling methods such as variational autoencoder (VAE) and generative adversarial network do not explicitly represent the likelihood function. Method: Let z_0 be a (possibly multivariate) random variable with distribution p_0(z_0). For i = 1, ..., K, let z_i = f_i(z_{i-1}) be a sequence of random variables transformed from z_0. The functions f_1, ..., f_K should be ...
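
The change-of-variable rule behind this construction can be illustrated with the simplest possible flow. The sketch below is a one-dimensional toy under stated assumptions, not a practical model: p_0 is taken to be a standard normal, each step is a hypothetical invertible affine map z_i = a_i z_{i-1} + b_i with a_i nonzero, and the function names are illustrative. The log-density of the output is the base log-density at the inverted point minus the accumulated log|a_i| terms.

```python
import math


def standard_normal_logpdf(z):
    """Log-density of the base distribution p_0 (standard normal, assumed)."""
    return -0.5 * (z * z + math.log(2 * math.pi))


def flow_forward(z0, steps):
    """Apply z_i = a_i * z_{i-1} + b_i for each (a_i, b_i) in order."""
    z = z0
    for a, b in steps:
        z = a * z + b
    return z


def flow_logpdf(x, steps):
    """Exact log-density of x = z_K via the change-of-variable formula."""
    z = x
    log_det = 0.0
    for a, b in reversed(steps):
        z = (z - b) / a                # invert one affine step
        log_det += math.log(abs(a))    # log |d z_i / d z_{i-1}|
    return standard_normal_logpdf(z) - log_det


# Two affine steps; their composition is x = 6 * z_0 - 1.
steps = [(2.0, 1.0), (3.0, -4.0)]
x = flow_forward(0.5, steps)
print(x, flow_logpdf(x, steps))
```

Because the composed map here is itself affine, the result can be compared with the closed-form density of a normal distribution with mean -1 and standard deviation 6, which makes the example easy to verify by hand. Sampling corresponds to drawing z_0 from the base distribution and calling `flow_forward`.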

Convolutional Neural Network

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of artificial neural network (ANN), most commonly applied to analyze visual imagery. CNNs are also known as Shift Invariant or Space Invariant Artificial Neural Networks (SIANN), based on the shared-weight architecture of the convolution kernels or filters that slide along input features and provide translation-equivariant responses known as feature maps. Counter-intuitively, most convolutional neural networks are not invariant to translation, due to the downsampling operation they apply to the input. They have applications in image and video recognition, recommender systems, image classification, image segmentation, medical image analysis, natural language processing, brain–computer interfaces, and financial time series. CNNs are regularized versions of multilayer perceptrons. Multilayer perceptrons usually mean fully connected networks, that is, each neuron in one layer is connected to a ...
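
The difference between translation equivariance and invariance can be seen in a tiny one-dimensional example. The following sketch is an assumption-laden illustration, not how a CNN library implements its layers: `correlate_valid` is a hypothetical pure-Python sliding-window filter (cross-correlation without padding), the signal is zero near its borders so that shifting loses no information, and stride-2 subsampling stands in for the downsampling operation.

```python
def correlate_valid(signal, kernel):
    """Slide the kernel along the signal (no padding) and return the feature map."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def shift_right(values, steps):
    """Shift a list right by `steps`, zero-filling on the left."""
    return [0] * steps + values[: len(values) - steps]


signal = [0, 0, 1, 3, 2, 0, 0, 0]
kernel = [1, -1]  # simple difference filter shared across all positions

feature_map = correlate_valid(signal, kernel)
shifted_map = correlate_valid(shift_right(signal, 1), kernel)

# Equivariance: shifting the input shifts the feature map by the same amount.
print(shifted_map == shift_right(feature_map, 1))

# Downsampling with stride 2 keeps different samples before and after the
# shift, so the pooled outputs are neither equal nor shifted copies.
pooled = feature_map[::2]
pooled_shifted = shifted_map[::2]
print(pooled, pooled_shifted)
```

The first check holds because the same kernel weights are applied at every position; the second shows why a one-sample shift of the input does not translate into a predictable shift after subsampling.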