
Estimation

Estimation (or estimating) is the process of finding an estimate or approximation: a value that is usable for some purpose even if the input data may be incomplete, uncertain, or unstable. The value is nonetheless usable because it is derived from the best information available (C. Lon Enloe, Elizabeth Garnett, Jonathan Miles, *Physical Science: What the Technology Professional Needs to Know*, 2000, p. 47). Typically, estimation involves "using the value of a statistic derived from a sample to estimate the value of a corresponding population parameter" (Raymond A. Kent, "Estimation", *Data Construction and Data Analysis for Survey Research*, 2001, p. 157). The sample provides information that can be projected, through various formal or informal processes, to determine a range most likely to describe the missing information. An estimate that turns out to be incorrect will be an overestimate if the estimate exceeds the actual result and an underestimate if the estimate f ...
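As a minimal sketch of the sample-to-population idea, the Python below uses a sample mean to estimate a population mean and then labels the result as an overestimate or underestimate. The simulated population and the helper name `classify_estimate` are hypothetical, chosen only for illustration; in real estimation the population value is unknown, which is why it is available here only because the data are simulated.

```python
import random
import statistics

def classify_estimate(estimate: float, actual: float) -> str:
    """Label an estimate relative to the actual value it approximates."""
    if estimate > actual:
        return "overestimate"
    if estimate < actual:
        return "underestimate"
    return "exact"

# Hypothetical population: 10,000 simulated values (illustrative data, not from the text).
rng = random.Random(42)
population = [rng.gauss(50.0, 10.0) for _ in range(10_000)]
actual_mean = statistics.fmean(population)  # the population parameter

# A sample statistic (the sample mean) used to estimate the population parameter.
sample = rng.sample(population, 100)
estimate = statistics.fmean(sample)

print(f"estimate={estimate:.2f} actual={actual_mean:.2f} -> {classify_estimate(estimate, actual_mean)}")
```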

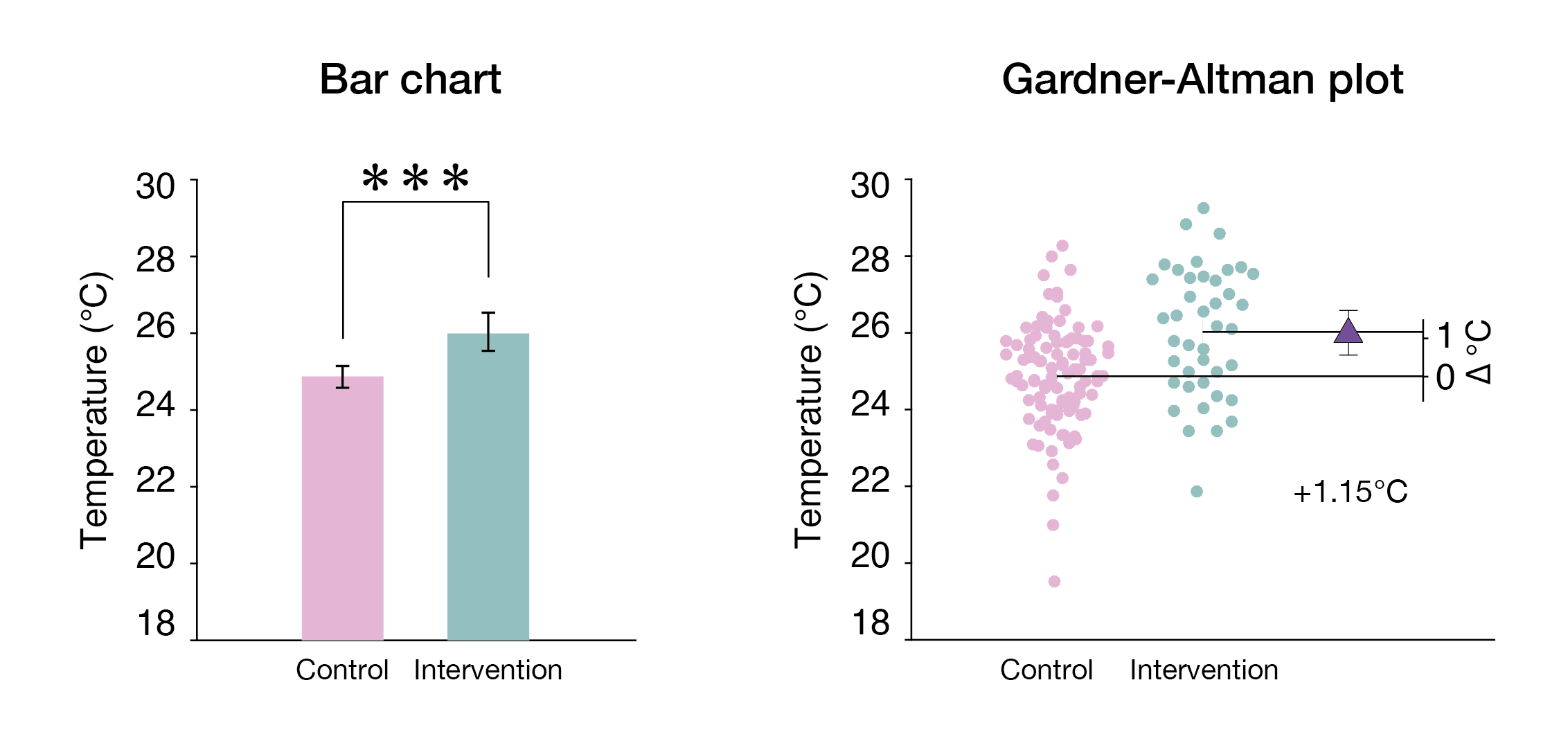

Estimation Statistics

Estimation statistics, or simply estimation, is a data analysis framework that uses a combination of effect sizes, confidence intervals, precision planning, and meta-analysis to plan experiments, analyze data, and interpret results. It complements hypothesis-testing approaches such as null hypothesis significance testing (NHST) by going beyond the question of whether an effect is present and providing information about how large the effect is. Estimation statistics is sometimes referred to as *the new statistics*. The primary aim of estimation methods is to report an effect size (a point estimate) along with its confidence interval, the latter of which is related to the precision of the estimate. The confidence interval summarizes a range of likely values of the underlying population effect. Proponents of estimation see reporting a *P* value as an unhelpful distraction from the important business of reporting an effect size with its confide ...
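To make "effect size plus confidence interval" concrete, here is a minimal sketch that reports a difference of means together with a 95% interval. It assumes two independent groups and uses a large-sample normal approximation (a z critical value from `statistics.NormalDist`) with a Welch-style standard error; for samples as small as the hypothetical data below, a t-based interval would be the more appropriate choice. The function name and the data are illustrative only.

```python
import math
import statistics
from statistics import NormalDist

def mean_difference_ci(a, b, level=0.95):
    """Effect size (difference of means) with a normal-approximation confidence interval.

    Assumes independent groups; standard error is sqrt(s_a^2/n_a + s_b^2/n_b).
    The z critical value is only a large-sample approximation.
    """
    diff = statistics.fmean(a) - statistics.fmean(b)
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% level
    return diff, (diff - z * se, diff + z * se)

# Hypothetical measurements for two groups (illustrative numbers only).
treatment = [12.1, 13.4, 11.8, 14.2, 12.9, 13.7, 12.5, 13.1]
control = [11.0, 11.9, 10.8, 12.3, 11.4, 11.7, 10.9, 11.6]

diff, (low, high) = mean_difference_ci(treatment, control)
print(f"effect size (difference of means) = {diff:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

Reporting the interval alongside the point estimate conveys both the size of the effect and how precisely it was measured, which is the emphasis the paragraph describes.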

Estimation Theory

Estimation theory is a branch of statistics that deals with estimating the values of parameters based on measured empirical data that has a random component. The parameters describe an underlying physical setting in such a way that their values affect the distribution of the measured data. An *estimator* attempts to approximate the unknown parameters using the measurements. In estimation theory, two approaches are generally considered:

* The probabilistic approach assumes that the measured data is random, with a probability distribution that depends on the parameters of interest.
* The set-membership approach assumes that the measured data vector belongs to a set that depends on the parameter vector.

For example, suppose one wants to estimate the proportion of a population of voters who will vote for a particular candidate. That proportion is the parameter sought; the estimate is based on a small random sa ...
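The voter example can be sketched in a few lines under the probabilistic approach: assuming a simple random sample, the natural estimator of the proportion is the sample fraction, and its estimated standard error is the square root of p̂(1 − p̂)/n. The poll counts and the function name below are hypothetical.

```python
import math

def estimate_proportion(votes_for: int, sample_size: int) -> tuple[float, float]:
    """Estimate a population proportion from a simple random sample.

    Returns p_hat = votes_for / sample_size and its estimated standard
    error sqrt(p_hat * (1 - p_hat) / sample_size).
    """
    if sample_size <= 0 or not 0 <= votes_for <= sample_size:
        raise ValueError("need sample_size > 0 and 0 <= votes_for <= sample_size")
    p_hat = votes_for / sample_size
    se = math.sqrt(p_hat * (1 - p_hat) / sample_size)
    return p_hat, se

# Hypothetical poll: 540 of 1,000 randomly sampled voters favor the candidate.
p_hat, se = estimate_proportion(540, 1000)
print(f"estimated proportion = {p_hat:.3f} (standard error about {se:.3f})")
```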

Estimation (Project Management)

Estimates within project management (e.g., for engineering or software development) are the basis of sound project planning. Many processes have been developed to help project managers make accurate estimates. Processes used in engineering include:

* Analogy-based estimation
* Compartmentalization (i.e., breakdown of tasks)
* Cost estimate
* Delphi method
* Documenting estimation results
* Educated assumptions
* Estimating each task
* Examining historical data
* Identifying dependencies
* Parametric estimating
* Risk assessment
* Structured planning

Popular estimation processes for software projects include:

* COCOMO
* COSYSMO
* Event chain methodology, a network analysis technique focused on identifying and managing events and the relationships between them (event chains) that affect project schedules
* Function points
* Planning poker
* Program Eval ...
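The software list names COCOMO. As a hedged illustration of parametric estimating, the sketch below implements the Basic COCOMO equations, effort E = a · KLOC^b person-months and duration D = c · E^d months, with the coefficients commonly cited from Boehm's 1981 model. Basic COCOMO is only the simplest variant (later versions such as COCOMO II use different equations and cost drivers), and the function name and the 10 KLOC input are illustrative.

```python
# Basic COCOMO coefficients (a, b, c, d) per project mode, as commonly cited from Boehm (1981).
BASIC_COCOMO = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semi-detached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}

def basic_cocomo(kloc: float, mode: str = "organic") -> tuple[float, float]:
    """Return (effort in person-months, development time in months) for a size in KLOC."""
    if kloc <= 0:
        raise ValueError("kloc must be positive")
    a, b, c, d = BASIC_COCOMO[mode]
    effort = a * kloc ** b        # person-months
    duration = c * effort ** d    # calendar months
    return effort, duration

# Illustrative input: a hypothetical 10 KLOC project in organic mode.
effort, months = basic_cocomo(10, "organic")
print(f"effort ~ {effort:.1f} person-months over ~ {months:.1f} months")
```

Because the exponent b exceeds 1, the model predicts effort growing faster than code size, and the more constrained modes carry larger coefficients.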

Point Estimate

In statistics, point estimation involves the use of sample data to calculate a single value (known as a point estimate, since it identifies a point in some parameter space) that serves as a "best guess" or "best estimate" of an unknown population parameter (for example, the population mean). More formally, it is the application of a point estimator to the data to obtain a point estimate. Point estimation can be contrasted with interval estimation: interval estimates are typically either confidence intervals, in frequentist inference, or credible intervals, in Bayesian inference. More generally, a point estimator can be contrasted with a set estimator, such as a confidence set or a credible set. A point estimator can also be contrasted with a distribution estimator, such as a confidence distribution, a randomized estimator, or a Bayesian posterior.

One property of point estimates is biasedness. “Bias” is define ...
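Bias is conventionally the difference between an estimator's expected value and the true parameter value. A minimal Monte Carlo sketch, assuming normally distributed data with known variance 1, compares two point estimators of the variance: dividing the sum of squared deviations by n (biased, with expected value (n − 1)/n times the true variance) and by n − 1 (unbiased). The sample size, trial count, and seed are arbitrary choices for illustration.

```python
import random
import statistics

def variance_estimates(sample):
    """Two point estimators of the population variance computed from one sample."""
    n = len(sample)
    m = statistics.fmean(sample)
    ss = sum((x - m) ** 2 for x in sample)
    return ss / n, ss / (n - 1)  # (divide-by-n, divide-by-(n-1))

rng = random.Random(0)
n, trials, true_var = 5, 20_000, 1.0
biased_total = unbiased_total = 0.0
for _ in range(trials):
    sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
    b, u = variance_estimates(sample)
    biased_total += b
    unbiased_total += u

# Bias = E[estimator] - true value, approximated by averaging over many simulated samples.
biased_bias = biased_total / trials - true_var      # theory: -true_var / n = -0.2
unbiased_bias = unbiased_total / trials - true_var  # theory: 0
print(f"bias (divide by n): {biased_bias:+.3f}; bias (divide by n-1): {unbiased_bias:+.3f}")
```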

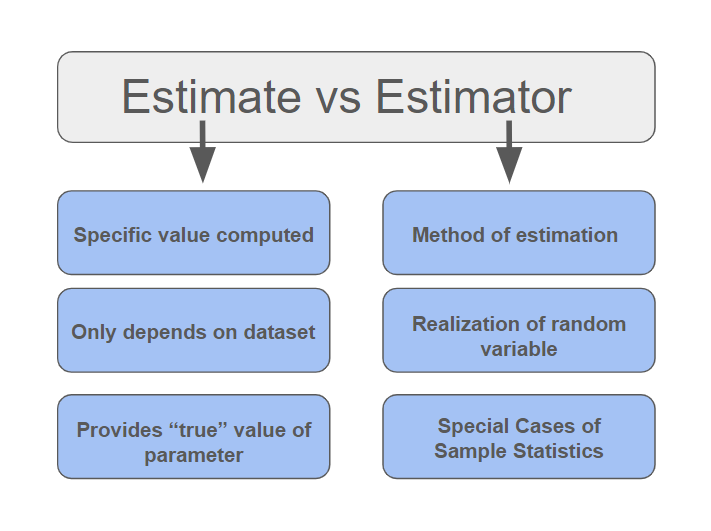

Estimator

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand), and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. Point estimators yield single-valued results, in contrast to interval estimators, whose result is a range of plausible values. "Single value" does not necessarily mean "single number"; it includes vector-valued or function-valued estimators. *Estimation theory* is concerned with the properties of estimators, that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. Howeve ...
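To illustrate comparing two rules for the same estimand on the same kind of data, the sketch below treats the sample mean and the sample median as estimators of the center of a normal distribution (where the population mean and median coincide) and compares them by Monte Carlo mean squared error. The choice of MSE as the comparison property, the normal model, and the sample size are assumptions made for illustration.

```python
import random
import statistics

def mse(estimator, true_value, n, trials, rng):
    """Monte Carlo mean squared error of an estimator on normal samples of size n (SD 1)."""
    total = 0.0
    for _ in range(trials):
        sample = [rng.gauss(true_value, 1.0) for _ in range(n)]
        total += (estimator(sample) - true_value) ** 2
    return total / trials

rng = random.Random(1)
# Two different estimators (rules) for the same estimand: the population mean, here 10.0.
mse_mean = mse(statistics.fmean, 10.0, n=25, trials=5_000, rng=rng)
mse_median = mse(statistics.median, 10.0, n=25, trials=5_000, rng=rng)
print(f"MSE of sample mean: {mse_mean:.4f}; MSE of sample median: {mse_median:.4f}")
```

Under this normal model the sample mean should show the smaller error (its theoretical variance is 1/25 = 0.04); with heavy-tailed data the comparison can reverse, which is why the best rule depends on the circumstances.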

Interval Estimate

In statistics, interval estimation is the use of sample data to estimate an *interval* of possible values of a parameter of interest. This is in contrast to point estimation, which gives a single value. The most prevalent forms of interval estimation are *confidence intervals* (a frequentist method) and *credible intervals* (a Bayesian method). Less common forms include *likelihood intervals*, *fiducial intervals*, *tolerance intervals*, and *prediction intervals*. As a non-statistical method, interval estimates can also be deduced from fuzzy logic.

Confidence intervals are used to estimate the parameter of interest, commonly the mean or standard deviation, from a sampled data set. A confidence interval states that there is 100γ% confidence that the parameter of interest lies between a lower and an upper bound. A common misconception about confidence intervals is that 100γ% of the data set falls within (or above/below) the bounds; this is referred t ...
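The confidence level describes the procedure rather than the data: over repeated sampling, about 100γ% of the intervals constructed this way should contain the true parameter. A minimal simulation sketch, assuming normal data with a known population standard deviation so that the simple z-interval (mean ± 1.96·σ/√n) applies, checks that coverage. The true mean, σ, sample size, and seed are hypothetical.

```python
import math
import random
import statistics

def z_interval(sample, sigma, z=1.96):
    """Approximate 95% confidence interval for a mean when the population SD sigma is known."""
    m = statistics.fmean(sample)
    half_width = z * sigma / math.sqrt(len(sample))
    return m - half_width, m + half_width

rng = random.Random(7)
true_mean, sigma, n, trials = 100.0, 15.0, 30, 4_000
hits = 0
for _ in range(trials):
    low, high = z_interval([rng.gauss(true_mean, sigma) for _ in range(n)], sigma)
    hits += low <= true_mean <= high  # count intervals that contain the true mean
coverage = hits / trials
print(f"fraction of intervals containing the true mean: {coverage:.3f}")
```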

Cost Estimate

A cost estimate is the approximation of the cost of a program, project, or operation. The cost estimate is the product of the cost estimating process. It has a single total value and may have identifiable component values. A credible, reliable, and accurate cost estimate can help avoid a cost overrun. A cost estimator is the professional who prepares cost estimates. There are different types of cost estimators, whose titles may be preceded by a modifier, such as building estimator, electrical estimator, or chief estimator. Other professionals, such as quantity surveyors and cost engineers, may also prepare or contribute to cost estimates. In the US, according to the Bureau of Labor Statistics, there were 185,400 cost estimators in 2010. There are around 53,000 professional quantity surveyors working in the UK.

The U.S. Government Accountability Office (GAO) defines a cost estimate as "the summation of in ...
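Since a cost estimate has a single total value that may break down into identifiable component values, a small data structure makes that relationship explicit. This is only a sketch: the `CostItem` class, the component names, and the amounts are hypothetical and do not reflect any particular estimating standard.

```python
from dataclasses import dataclass

@dataclass
class CostItem:
    name: str
    amount: float  # all amounts in one currency unit

def total_cost(items: list[CostItem]) -> float:
    """The single total value of a cost estimate: the sum of its component values."""
    return sum(item.amount for item in items)

# Hypothetical component breakdown (illustrative figures only).
estimate = [
    CostItem("labor", 120_000.0),
    CostItem("materials", 45_500.0),
    CostItem("equipment", 18_000.0),
    CostItem("contingency", 18_350.0),
]
total = total_cost(estimate)
print(f"total estimate: {total:,.2f}")
for item in estimate:
    print(f"  {item.name:<12} {item.amount:>12,.2f} ({item.amount / total:.1%})")
```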

Fermi Problem

A Fermi problem (or Fermi question, Fermi quiz), also known as an order-of-magnitude problem, is an estimation problem in physics or engineering education, designed to teach dimensional analysis or the approximation of extreme scientific calculations. Fermi problems are usually back-of-the-envelope calculations. They typically involve making justified guesses about quantities and their variance or lower and upper bounds. In some cases, order-of-magnitude estimates can also be derived using dimensional analysis. A Fermi estimate (or order-of-magnitude estimate, order estimation) is an estimate of an extreme scientific calculation. The estimation technique is named after physicist Enrico Fermi, who was known for his ability to make good approximate calculations with little or no actual data.

A historical example is Enrico Fermi's estimate of the strength of the atomic bomb that detonated at the Trinity test, based on the distance traveled by pieces of paper ...
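Because Fermi problems combine justified guesses with lower and upper bounds, one common convention (an assumption here, not the only option) is to take the geometric mean of each factor's bounds and multiply the results, then report the nearest power of ten. The sketch below follows that convention; the function name and the example factors are hypothetical.

```python
import math

def fermi_estimate(factors):
    """Combine (low, high) guesses for each factor into an order-of-magnitude estimate.

    Each factor's central value is the geometric mean sqrt(low * high), a common
    choice when bounds span orders of magnitude. Returns the product of those
    central values and the nearest power of ten (its rounded base-10 logarithm).
    """
    value = 1.0
    for low, high in factors:
        if not 0 < low <= high:
            raise ValueError("each factor needs 0 < low <= high")
        value *= math.sqrt(low * high)
    return value, round(math.log10(value))

# Hypothetical factors: a count between 1e6 and 1e7, a fraction between 0.1 and 0.5,
# and a rate between 2 and 5 (illustrative bounds only).
value, order = fermi_estimate([(1e6, 1e7), (0.1, 0.5), (2, 5)])
print(f"estimate ~ {value:.3g}, i.e. about 10^{order}")
```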

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying, and synthesizing *signals*, such as sound, images, potential fields, seismic signals, altimetry processing, and scientific measurements. Signal processing techniques are used to optimize transmissions, improve digital storage efficiency, correct distorted signals, improve subjective video quality, and detect or pinpoint components of interest in a measured signal.

According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital refinement of these techniques can be found in the digital control systems of the 1940s and 1950s. In 1948, Claude Shannon wrote the influential paper "A Mathematical Theory of Communication" which was publis ...
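As one small example of pinpointing a component of interest in a measured signal, the sketch below computes a naive discrete Fourier transform with the standard library and reports the strongest non-DC frequency bin. The direct O(N²) DFT is chosen for clarity; practical code would normally use an FFT routine. The test signal is hypothetical.

```python
import cmath
import math

def dft_magnitudes(signal):
    """Magnitudes of the discrete Fourier transform for bins 0..N//2 (naive O(N^2))."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)))
        for k in range(n // 2 + 1)
    ]

def dominant_bin(signal):
    """Index of the strongest frequency bin, ignoring bin 0 (the constant/DC component)."""
    mags = dft_magnitudes(signal)
    return max(range(1, len(mags)), key=mags.__getitem__)

# Illustrative signal: a sinusoid completing 5 cycles in 64 samples, plus a constant offset.
N = 64
signal = [0.5 + math.sin(2 * math.pi * 5 * t / N) for t in range(N)]
print("dominant frequency bin:", dominant_bin(signal))
```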

Approximation

An approximation is anything that is intentionally similar but not exactly equal to something else.

The word *approximation* is derived from Latin *approximatus*, from *proximus*, meaning *very near*, and the prefix *ad-* (which becomes *ap-* before *p* by assimilation), meaning *to*. Words like *approximate*, *approximately*, and *approximation* are used especially in technical or scientific contexts. In everyday English, words such as *roughly* or *around* are used with a similar meaning. The word is often abbreviated as *approx.*

The term can be applied to various properties (e.g., value, quantity, image, description) that are nearly, but not exactly, correct; similar, but not exactly the same (e.g., the approximate time was 10 o'clock). Although approximation is most often applied to numbers, it is also frequently applied to such things as mathematical functions, shapes, and physical laws. In science, approximation can refer to ...
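Approximating a mathematical function is a common case. As a minimal sketch, the code below approximates e^x with a truncated Taylor series about 0 and compares the result with `math.exp`, showing how the error shrinks as more terms are kept. The function name and the term counts are illustrative.

```python
import math

def taylor_exp(x: float, terms: int) -> float:
    """Approximate e**x by summing the first `terms` terms of its Taylor series about 0."""
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term
        term *= x / (k + 1)  # next term: x**(k + 1) / (k + 1)!
    return total

for terms in (2, 4, 8, 12):
    approx = taylor_exp(1.0, terms)
    print(f"{terms:2d} terms: {approx:.10f}  error = {abs(approx - math.exp(1.0)):.2e}")
```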