
Benchmark (computing)

In computing, a benchmark is the act of running a computer program, a set of programs, or other operations in order to assess the relative performance of an object, normally by running a number of standard tests and trials against it. The term ''benchmark'' is also commonly used for the elaborately designed benchmarking programs themselves. Benchmarking is usually associated with assessing performance characteristics of computer hardware, for example the floating-point operation performance of a CPU, but the technique also applies to software. Software benchmarks are run, for example, against compilers or database management systems (DBMS). Benchmarks provide a method of comparing the performance of various subsystems across different chip/system architectures. Benchmarking as a part of continuous integration is called continuous benchmarking.

Purpose

As computer architecture advanced, it became more difficult to compa ...
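As a small illustration of a software benchmark, the sketch below times two ways of summing a range of integers with Python's standard `timeit` module. The function names (`sum_loop`, `sum_builtin`, `best_time`) and the workload size are assumptions made for this example; they are not taken from any standard benchmark suite.

```python
import timeit


def sum_loop(n):
    """Sum 0..n-1 with an explicit Python loop."""
    total = 0
    for i in range(n):
        total += i
    return total


def sum_builtin(n):
    """Sum 0..n-1 with the built-in sum()."""
    return sum(range(n))


def best_time(func, arg, repeats=5, number=100):
    """Return the best observed per-call time in seconds.

    Each repeat runs the call `number` times; taking the minimum
    reduces the influence of unrelated system activity.
    """
    timings = timeit.repeat(lambda: func(arg), repeat=repeats, number=number)
    return min(timings) / number


if __name__ == "__main__":
    n = 10_000  # hypothetical workload size
    for candidate in (sum_loop, sum_builtin):
        per_call = best_time(candidate, n)
        print(f"{candidate.__name__}: {per_call * 1e6:.1f} microseconds per call")
```

The absolute numbers depend on the machine and interpreter, so results like these are only meaningful relative to one another on the same system.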

OGRE Screenshot 08

An ogre (feminine: ogress) is a legendary monster depicted as a large, hideous, man-like being that eats ordinary human beings, especially infants and children. Ogres frequently feature in mythology, folklore, and fiction throughout the world. They appear in many classic works of literature, and are most often associated with fairy tales and legend. In mythology, ogres are often depicted as inhumanly large, tall, and having a disproportionately large head, abundant hair, unusually colored skin, a voracious appetite, and a strong body. Ogres are closely linked with giants and with human cannibals in mythology. In both folklore and fiction, giants are often given ogrish traits (such as the giants in "Jack and the Beanstalk" and "Jack the Giant Killer", the Giant Despair in ''The Pilgrim's Progress'', and the Jötunn of Norse mythology), while ogres may be given giant-like traits. Famous examples of ogres in folklore include t ...

BogoMips

BogoMips (from "bogus" and MIPS) is a crude measurement of CPU speed made by the Linux kernel when it boots to calibrate an internal busy-loop. An often-quoted definition of the term is "the number of million times per second a processor can do absolutely nothing" (attributed to Eric S. Raymond and Geoff Mackenzie, published on the Internet in the early 1990s, untraceable origin). BogoMips is a value that can be used to verify whether the processor in question is in the proper range of similar processors, i.e. BogoMips reflects a processor's clock frequency as well as any CPU cache that may be present. It is not usable for performance comparisons among different CPUs.

History

In 1993, Lars Wirzenius posted a Usenet message on comp.os.linux explaining the reasons for its introduction in the Linux kernel:

... MIPS is short for Millions of Instructions Per Second. It is a measure for the computation speed of a processor. Like most such measures, it is more often abused than used properl ...
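The idea of calibrating a busy-loop can be sketched in a few lines of Python. This is not the kernel's calibration routine; it is a simplified illustration that doubles the iteration count of an empty loop until one run lasts a chosen minimum time, then reports iterations per second. The names `busy_loop` and `calibrate` and the default duration are assumptions made for this example.

```python
import time


def busy_loop(iterations):
    """Spin through an empty loop the given number of times."""
    for _ in range(iterations):
        pass


def calibrate(min_duration=0.05):
    """Estimate how many empty-loop iterations run per second.

    The iteration count is doubled until a single run takes at least
    `min_duration` seconds, so the measurement is long enough to be
    larger than the timer's resolution.
    """
    iterations = 1024
    while True:
        start = time.perf_counter()
        busy_loop(iterations)
        elapsed = time.perf_counter() - start
        if elapsed >= min_duration:
            return iterations / elapsed
        iterations *= 2


if __name__ == "__main__":
    rate = calibrate()
    print(f"about {rate / 1e6:.1f} million empty iterations per second")
```

As with BogoMips itself, the resulting figure mostly reflects how fast this particular loop runs on this particular interpreter and machine, and is unsuitable for comparing different CPUs.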

Spreadsheet

A spreadsheet is a computer application for computation, organization, analysis and storage of data in tabular form. Spreadsheets were developed as computerized analogs of paper accounting worksheets. The program operates on data entered in cells of a table. Each cell may contain either numeric or text data, or the results of formulas that automatically calculate and display a value based on the contents of other cells. The term ''spreadsheet'' may also refer to one such electronic document. Spreadsheet users can adjust any stored value and observe the effects on calculated values. This makes the spreadsheet useful for "what-if" analysis since many cases can be rapidly investigated without manual recalculation. Modern spreadsheet software can have multiple interacting sheets and can display data either as text and numerals or in graphical form. Besides performing basic arithmetic and mathematical functions, modern spreadsheets provide built-in functions for common financial ...
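The relationship between stored values, formulas, and "what-if" analysis can be shown with a toy model. In the sketch below, a sheet is a dictionary whose cells hold either a plain value or a formula (a Python callable that reads other cells). The cell names, the `evaluate` and `build_sheet` helpers, and the pricing example are all hypothetical; real spreadsheet engines add dependency tracking, caching, and cycle detection, which this sketch omits.

```python
def evaluate(cells, name):
    """Return the value of a cell, computing formulas on demand."""
    content = cells[name]
    if callable(content):
        # A formula receives a lookup function for reading other cells.
        return content(lambda ref: evaluate(cells, ref))
    return content


def build_sheet(unit_price, quantity):
    """Build a three-cell sheet: A3 is a formula over A1 and A2."""
    return {
        "A1": unit_price,
        "A2": quantity,
        "A3": lambda cell: cell("A1") * cell("A2"),
    }


if __name__ == "__main__":
    sheet = build_sheet(100, 3)
    print(evaluate(sheet, "A3"))  # total for the original quantity

    # "What-if": change one stored value and read the formula again.
    sheet["A2"] = 5
    print(evaluate(sheet, "A3"))
```

Because formulas are evaluated on each read, changing `A2` is immediately reflected in `A3` without any manual recalculation.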

Data Export

The import and export of data is the automated or semi-automated input and output of data sets between different software applications. It involves "translating" from the format used in one application into that used by another, where such translation is accomplished automatically via machine processes, such as transcoding, data transformation, and others. True exports of data often contain data in raw formats otherwise unreadable to end-users without the user interface that was designed to render it. Import and export of data shares semantic analogy with copying and pasting, in that sets of data are copied from one application and pasted into another. In fact, the software development behind operating system clipboards (and clipboard extender apps) greatly concerns the many details and challenges of data transformation and transcoding, in order to present the end user with the illusion of effortless copy and paste between any two apps, no matter how internally different. The "S ...
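A minimal example of such a translation is converting data exported as CSV into JSON for import elsewhere. The sketch below uses only Python's standard `csv`, `io`, and `json` modules; the function name `csv_to_json` and the sample columns are assumptions made for illustration.

```python
import csv
import io
import json


def csv_to_json(csv_text):
    """Translate CSV text (with a header row) into a JSON array of objects.

    Every value stays a string, because CSV carries no type information;
    a real importer would usually apply a schema at this point.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return json.dumps(list(reader), indent=2)


if __name__ == "__main__":
    exported = "name,qty\nbolt,4\nnut,12\n"  # hypothetical export
    print(csv_to_json(exported))
```

The loss of type information in the intermediate format is one of the details that makes import and export harder than it appears to the end user.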

Relational Database

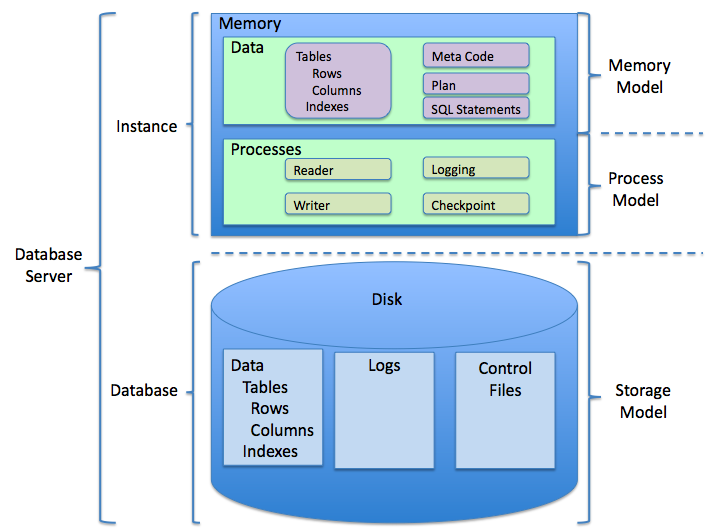

A relational database (RDB) is a database based on the relational model of data, as proposed by E. F. Codd in 1970. A Relational Database Management System (RDBMS) is a type of database management system that stores data in a structured format using rows and columns. Many relational database systems are equipped with the option of using SQL (Structured Query Language) for querying and updating the database.

History

The concept of relational database was defined by E. F. Codd at IBM in 1970. Codd introduced the term ''relational'' in his research paper "A Relational Model of Data for Large Shared Data Banks". In this paper and later papers, he defined what he meant by ''relation''. One well-known definition of what constitutes a relational database system is composed of Codd's 12 rules. However, no commercial implementations of the relational model conform to all of Codd's rules, so the term has gradually come to describe a broader class of database systems, which at a ...
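Storing data in rows and columns and using SQL to query and update it can be tried with the `sqlite3` module from Python's standard library. The table name, column names, and sample rows below are hypothetical and chosen only for illustration.

```python
import sqlite3


def demo():
    """Create a table, insert rows, update one, and query the result."""
    conn = sqlite3.connect(":memory:")  # temporary in-memory database
    conn.execute(
        "CREATE TABLE employee (id INTEGER PRIMARY KEY, name TEXT, dept TEXT)"
    )
    conn.executemany(
        "INSERT INTO employee (name, dept) VALUES (?, ?)",
        [("Ada", "Engineering"), ("Grace", "Research")],
    )
    # Update: move one employee to another department.
    conn.execute(
        "UPDATE employee SET dept = ? WHERE name = ?", ("Engineering", "Grace")
    )
    # Query: list everyone in a department, in a stable order.
    rows = conn.execute(
        "SELECT name FROM employee WHERE dept = ? ORDER BY name",
        ("Engineering",),
    ).fetchall()
    conn.close()
    return [name for (name,) in rows]


if __name__ == "__main__":
    print(demo())
```

Each row of `employee` is one record and each column one attribute; the `?` placeholders let the database driver handle value quoting.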

Reconfigurable Computing

Reconfigurable computing is a computer architecture combining some of the flexibility of software with the high performance of hardware by processing with flexible hardware platforms like field-programmable gate arrays (FPGAs). The principal difference when compared to using ordinary microprocessors is the ability to add custom computational blocks using FPGAs. On the other hand, the main difference from custom hardware, i.e. application-specific integrated circuits (ASICs), is the possibility to adapt the hardware during runtime by "loading" a new circuit on the reconfigurable fabric, thus providing new computational blocks without the need to manufacture and add new chips to the existing system.

History

The concept of reconfigurable computing has existed since the 1960s, when Gerald Estrin's paper proposed the concept of a computer made of a standard processor and an array of "reconfigurable" hardware. The main process ...

Superscalar

A superscalar processor (or multiple-issue processor) is a CPU that implements a form of parallelism called instruction-level parallelism within a single processor. In contrast to a scalar processor, which can execute at most one single instruction per clock cycle, a superscalar processor can execute or start executing more than one instruction during a clock cycle by simultaneously dispatching multiple instructions to different execution units on the processor. It therefore allows more throughput (the number of instructions that can be executed in a unit of time which can even be less than 1) than would otherwise be possible at a given clock rate. Each execution unit is not a separate processor (or a core if the processor is a multi-core processor), but an execution resource within a single CPU such as an arithmetic logic unit. While a superscalar CPU is typically also pipelined, superscalar and pipelining execution are considered different performance enhancement techni ...

VLIW

Very long instruction word (VLIW) refers to instruction set architectures that are designed to exploit instruction-level parallelism (ILP). A VLIW processor allows programs to explicitly specify instructions to execute in parallel, whereas conventional central processing units (CPUs) mostly allow programs to specify instructions to execute in sequence only. VLIW is intended to allow higher performance without the complexity inherent in some other designs. The traditional means to improve performance in processors include dividing instructions into substeps so the instructions can be executed partly at the same time (termed ''pipelining''), dispatching individual instructions to be executed independently, in different parts of the processor (''superscalar architectures''), and even executing instructions in an order different from the program (''out-of-order execution''). These methods all complicate hardware (larger circuits, higher cost and energy use) becaus ...

RISC

In electronics and computer science, a reduced instruction set computer (RISC) is a computer architecture designed to simplify the individual instructions given to the computer to accomplish tasks. Compared to the instructions given to a complex instruction set computer (CISC), a RISC computer might require more instructions (more code) in order to accomplish a task because the individual instructions perform simpler operations. The goal is to offset the need to process more instructions by increasing the speed of each instruction, in particular by implementing an instruction pipeline, which may be simpler to achieve given simpler instructions. The key operational concept of the RISC computer is that each instruction performs only one function (e.g. copy a value from memory to a register). The RISC computer usually has many (16 or 32) high-speed, general-purpose registers with a load–store architecture in which the code for the register-register instructions (for performing ...

SPEC

The Standard Performance Evaluation Corporation (SPEC) is a non-profit consortium that establishes and maintains standardized benchmarks and performance evaluation tools for new generations of computing systems. SPEC was founded in 1988 and its membership comprises over 120 computer hardware and software vendors, educational institutions, research organizations, and government agencies internationally. SPEC benchmarks and tools are widely used to evaluate the performance of computer systems; the test results are published on the SPEC website.

Algorithms

In mathematics and computer science, an algorithm is a finite sequence of mathematically rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a heuristic is an approach to solving problems without well-defined correct or optimal results (David A. Grossman, Ophir Frieder, ''Information Retrieval: Algorithms and Heuristics'', 2nd edition, 2004). For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an effective method, an algorithm can be expressed within a finite amount of space and time "Any classic ...

Microarchitecture

In electronics, computer science and computer engineering, microarchitecture, also called computer organization and sometimes abbreviated as μarch or uarch, is the way a given instruction set architecture (ISA) is implemented in a particular processor. A given ISA may be implemented with different microarchitectures; implementations may vary due to different goals of a given design or due to shifts in technology. Computer architecture is the combination of microarchitecture and instruction set architecture.

Relation to instruction set architecture

The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the instructions, execution model, processor registers, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usu ...