
Trapezoidal Distribution

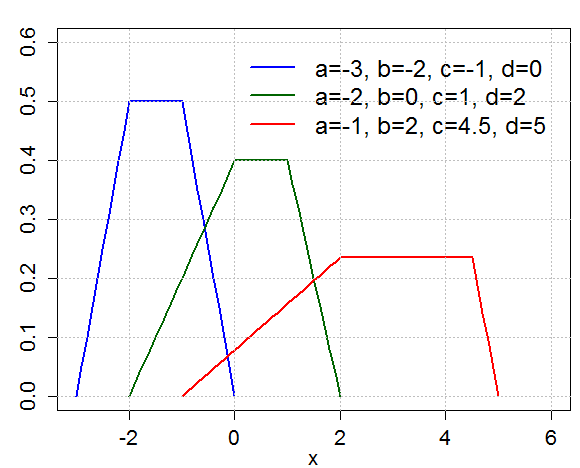

In probability theory and statistics, the trapezoidal distribution is a continuous probability distribution whose probability density function graph resembles a trapezoid. Trapezoidal distributions also roughly resemble mesas or plateaus. Each trapezoidal distribution has a lower bound $a$ and an upper bound $d$, where $a < d$, beyond which no values or events on the distribution can occur (i.e., beyond which the probability is always zero). In addition, there are two sharp bending points (points at which the density is not differentiable) within the probability distribution, which we will call $b$ and $c$, which occur between $a$ and $d$, such that $a \le b \le c \le d$. The image above shows a perfectly linear trapezoidal distribution. However, not all trapezoidal distributions are so precisely shaped. In the standard case, where the middle part of the trap ...
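A minimal Python sketch of the density in the perfectly linear case may help. It writes the lower and upper bounds as $a$ and $d$ and the two bending points as $b$ and $c$, with $a \le b \le c \le d$ and $a < d$; those letter names and the function name `trapezoidal_pdf` are assumptions of this sketch. The plateau height $h = 2/(d + c - a - b)$ is chosen so that the area of the trapezoid, $h\,\big((d - a) + (c - b)\big)/2$, equals 1.

```python
def trapezoidal_pdf(x: float, a: float, b: float, c: float, d: float) -> float:
    """Density of a linear trapezoidal distribution (hypothetical helper).

    Requires a <= b <= c <= d and a < d. The density rises linearly on [a, b],
    is flat on [b, c], and falls linearly on [c, d].
    """
    if not (a <= b <= c <= d) or a == d:
        raise ValueError("require a <= b <= c <= d and a < d")
    h = 2.0 / (d + c - a - b)  # plateau height that makes the total area 1
    if x < a or x > d:
        return 0.0  # outside the support the probability is zero
    if x < b:
        return h * (x - a) / (b - a)  # rising edge (never reached when a == b)
    if x <= c:
        return h  # flat middle section
    return h * (d - x) / (d - c)  # falling edge (never reached when c == d)


# Example: a=0, b=1, c=2, d=4 gives a plateau of height 2 / (4 + 2 - 0 - 1) = 0.4
print(trapezoidal_pdf(1.5, 0, 1, 2, 4))
```

With $b = c$ the sketch reduces to a triangular density, and with $a = b$ and $c = d$ to a uniform one.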

Trapezoidal PDF

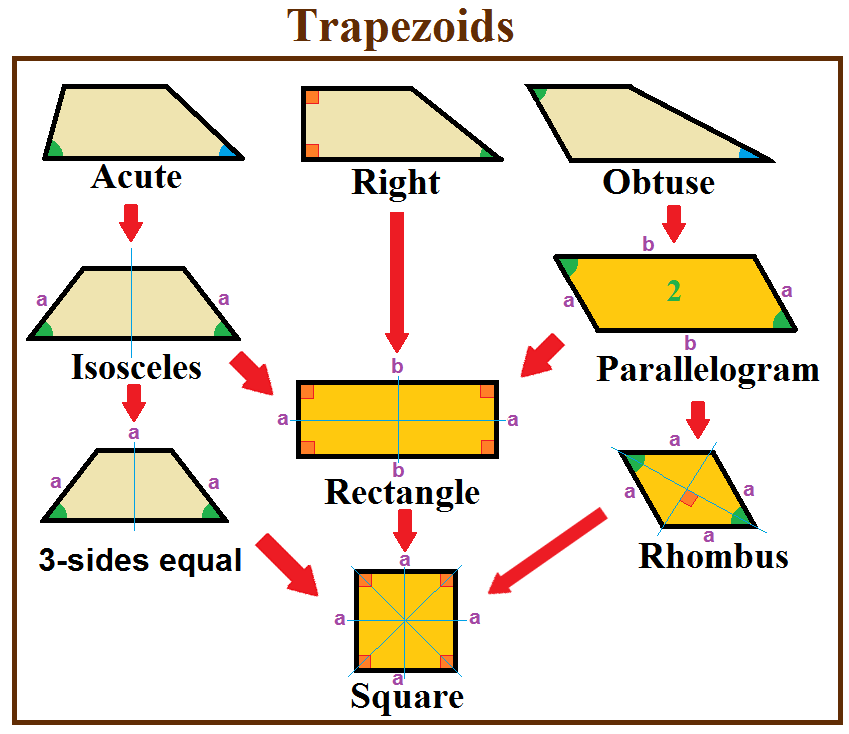

In geometry, a trapezoid in North American English, or trapezium in British English, is a quadrilateral that has at least one pair of parallel sides. The parallel sides are called the *bases* of the trapezoid. The other two sides are called the *legs* or *lateral sides*. (If the trapezoid is a parallelogram, then the choice of bases and legs is arbitrary.) A trapezoid is usually considered to be a convex quadrilateral in Euclidean geometry, but there are also crossed cases. If *ABCD* is a convex trapezoid, then *ABDC* is a crossed trapezoid. The metric formulas in this article apply in convex trapezoids.

Definitions

*Trapezoid* can be defined exclusively or inclusively. Under an exclusive definition, a trapezoid is a quadrilateral having exactly one pair of parallel sides, with the other pair of opposite sides non-parallel. Parallelograms, including rhombi, rectangles, and squares, are then not considered to be trapez ...
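The inclusive and exclusive definitions differ only in how many pairs of opposite sides may be parallel, which a short check makes concrete. A Python sketch, assuming the quadrilateral is given as its four vertices in order $A, B, C, D$; the helper names and the tolerance `eps` are hypothetical. It tests parallelism with the 2-D cross product and does not check convexity, so crossed quadrilaterals with a parallel pair also pass.

```python
Point = tuple[float, float]


def is_parallel(p: Point, q: Point, r: Point, s: Point, eps: float = 1e-9) -> bool:
    """True if segment pq is parallel to segment rs (2-D cross product close to 0)."""
    ux, uy = q[0] - p[0], q[1] - p[1]
    vx, vy = s[0] - r[0], s[1] - r[1]
    return abs(ux * vy - uy * vx) <= eps


def parallel_pairs(a: Point, b: Point, c: Point, d: Point) -> int:
    """Count parallel pairs of opposite sides: AB with CD, and BC with DA."""
    return int(is_parallel(a, b, c, d)) + int(is_parallel(b, c, d, a))


def is_trapezoid(a: Point, b: Point, c: Point, d: Point, inclusive: bool = True) -> bool:
    n = parallel_pairs(a, b, c, d)
    # Inclusive: at least one parallel pair, so parallelograms count as trapezoids.
    # Exclusive: exactly one parallel pair, so parallelograms are excluded.
    return n >= 1 if inclusive else n == 1


square = [(0, 0), (1, 0), (1, 1), (0, 1)]
print(is_trapezoid(*square), is_trapezoid(*square, inclusive=False))  # True False
```

A square has two parallel pairs, so it is a trapezoid only under the inclusive definition.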

Maxima And Minima

In mathematical analysis, the maximum and minimum of a function are, respectively, the greatest and least value taken by the function. Known generically as extrema, they may be defined either within a given range (the *local* or *relative* extrema) or on the entire domain (the *global* or *absolute* extrema) of a function. Pierre de Fermat was one of the first mathematicians to propose a general technique, adequality, for finding the maxima and minima of functions. As defined in set theory, the maximum and minimum of a set are the greatest and least elements in the set, respectively. Unbounded infinite sets, such as the set of real numbers, have no minimum or maximum. In statistics, the corresponding concept is the sample maximum and minimum.

Definition

A real-valued function $f$ defined on a domain $X$ has a global (or absolute) maximum point at $x^*$ if $f(x^*) \ge f(x)$ for all $x$ in $X$. Similarly, the function has a global (or absolute) minimum point at $x^*$ ...
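On a finite domain the global definitions can be checked by exhaustive search: $x^*$ is a global maximum point when $f(x^*) \ge f(x)$ for every $x$ in $X$, and a global minimum point when $f(x^*) \le f(x)$. A minimal Python sketch, with a hypothetical helper name and a finite list standing in for $X$; on an infinite or unbounded domain this kind of search is not possible.

```python
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def global_extrema(f: Callable[[T], float], domain: Iterable[T]) -> tuple[T, T]:
    """Return (argmax, argmin) of f over a finite, non-empty domain."""
    points = list(domain)
    if not points:
        raise ValueError("an empty domain has no maximum or minimum")
    x_max = max(points, key=f)  # f(x_max) >= f(x) for every x in points
    x_min = min(points, key=f)  # f(x_min) <= f(x) for every x in points
    return x_max, x_min


# Example: f(x) = -(x - 2)**2 on the integers 0..5
print(global_extrema(lambda x: -(x - 2) ** 2, range(6)))  # (2, 5)
```

Ties are resolved by returning the first point that attains the extreme value.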

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}.$$

The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma^2$ is the variance. The standard deviation of the distribution is $\sigma$ (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution c ...
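The density $f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-(x-\mu)^2/(2\sigma^2)}$ translates directly into code. A minimal Python sketch with a hypothetical function name, cross-checked against the standard library's `statistics.NormalDist` (Python 3.8+):

```python
import math
from statistics import NormalDist


def normal_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Evaluate exp(-(x - mu)**2 / (2 sigma**2)) / sqrt(2 pi sigma**2)."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma  # distance from the mean in units of sigma
    # sqrt(2 pi sigma**2) == sigma * sqrt(2 pi) because sigma > 0
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


# Cross-check against the standard library for mu = 1, sigma = 2.
for x in (-1.0, 0.0, 2.5):
    assert math.isclose(normal_pdf(x, 1.0, 2.0), NormalDist(1.0, 2.0).pdf(x))
```

The peak value at $x = \mu$ is $1/(\sigma\sqrt{2\pi})$, which for the standard normal is about 0.3989.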

Poisson Distribution

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time if these events occur with a known constant mean rate and independently of the time since the last event. It can also be used for the number of events in other types of intervals than time, and in dimensions greater than 1 (e.g., the number of events in a given area or volume). The Poisson distribution is named after French mathematician Siméon Denis Poisson. It plays an important role for discrete-stable distributions. Under a Poisson distribution with the expectation of $\lambda$ events in a given interval, the probability of $k$ events in the same interval is

$$\frac{\lambda^k e^{-\lambda}}{k!}.$$

For instance, consider a call center which receives an average of $\lambda = 3$ calls per minute at all times of day. If the calls are independent, receiving one does not change the probability of when the next on ...
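The probability mass function $\lambda^k e^{-\lambda}/k!$ can be evaluated directly; the sketch below, with a hypothetical function name, works in log space (using `math.lgamma`, where $\ln k! = \ln\Gamma(k+1)$) so that large $k$ does not overflow. It reuses the call-center rate $\lambda = 3$ from the text.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """P(K = k) = lam**k * exp(-lam) / k! for a Poisson variable with mean lam."""
    if lam < 0:
        raise ValueError("lam must be non-negative")
    if k < 0:
        return 0.0
    if lam == 0:
        return 1.0 if k == 0 else 0.0  # all probability mass sits at zero
    # Evaluate in log space so that large k does not overflow lam**k or k!.
    return math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))


# Call-center example: lam = 3 calls per minute on average.
for k in range(6):
    print(f"P({k} calls in a minute) = {poisson_pmf(k, 3.0):.4f}")
```

For $k = 0$ the formula reduces to $e^{-3}$, roughly 0.0498, the chance of a minute with no calls.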

Bates Distribution

In probability and business statistics, the Bates distribution, named after Grace Bates, is a probability distribution of the mean of a number of statistically independent uniformly distributed random variables on the unit interval. This distribution is related to the uniform, the triangular, and the normal (Gaussian) distribution, and has applications in broadcast engineering for signal enhancement. The Bates distribution on $[0,1]$ and of parameter $n$ is sometimes confused with the Irwin–Hall distribution of parameter $n$, which is the distribution of the sum (not the mean) of $n$ independent random variables uniformly distributed on the unit interval $[0,1]$. More precisely, if $X$ has a Bates distribution on $[0,1]$, then $nX$ has an Irwin–Hall distribution of parameter $n$ (and support on $[0,n]$). For $n=1$, both the Bates distribution and the Irwin–Hall distribution coincide with the uniform distribution on the unit interval $[0,1]$.

Definition

The Bates distributio ...
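Because $nX$ is Irwin–Hall when $X$ is Bates, a change of variables gives the Bates density as $f_X(x) = n\,f_{IH}(nx)$. The Python sketch below assumes the standard piecewise-polynomial Irwin–Hall density $f_{IH}(t) = \frac{1}{(n-1)!}\sum_{k=0}^{\lfloor t \rfloor} (-1)^k \binom{n}{k} (t-k)^{n-1}$ for $0 \le t \le n$; the function names are hypothetical. The alternating sum loses floating-point precision for large $n$, so this is only suited to small parameters.

```python
import math


def irwin_hall_pdf(t: float, n: int) -> float:
    """Density of the sum of n independent U(0, 1) variables, evaluated at t."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    if t < 0 or t > n:
        return 0.0
    total = sum((-1) ** k * math.comb(n, k) * (t - k) ** (n - 1)
                for k in range(math.floor(t) + 1))
    return total / math.factorial(n - 1)


def bates_pdf(x: float, n: int) -> float:
    """Density of the mean of n independent U(0, 1) variables: n * f_IH(n * x)."""
    return n * irwin_hall_pdf(n * x, n)


# For n = 2 the Bates density is a triangle on [0, 1] with peak height 2.
print(bates_pdf(0.5, 2))  # 2.0
```

For $n = 1$ both functions return 1 inside the unit interval, matching the uniform case described in the text.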

Irwin–Hall Distribution

In probability and statistics, the Irwin–Hall distribution, named after Joseph Oscar Irwin and Philip Hall, is a probability distribution for a random variable defined as the sum of a number of independent random variables, each having a uniform distribution. For this reason it is also known as the uniform sum distribution. The generation of pseudo-random numbers having an approximately normal distribution is sometimes accomplished by computing the sum of a number of pseudo-random numbers having a uniform distribution, usually for simplicity of programming. Rescaling the Irwin–Hall distribution provides the exact distribution of the random variates being generated. This distribution is sometimes confused with the Bates distribution, which is the mean (not sum) of $n$ independent random variables uniformly distributed from 0 to 1.

Definition

The Irwin–Hall distribution is the continuous probability distribution for the sum of $n$ independent and identically ...
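The "sum of uniforms" trick for approximately normal numbers can be sketched in Python. The choice $n = 12$ is a common textbook convention and an assumption here, not something the text specifies: the Irwin–Hall sum then has mean $n/2 = 6$ and variance $n/12 = 1$, so subtracting 6 gives an approximately standard normal deviate, confined to $[-6, 6]$. The function name is hypothetical.

```python
import random
import statistics


def approx_standard_normal(rng: random.Random, n: int = 12) -> float:
    """Irwin-Hall sum of n uniforms, shifted and scaled to mean 0 and variance 1."""
    s = sum(rng.random() for _ in range(n))  # Irwin-Hall(n): mean n/2, variance n/12
    return (s - n / 2) / (n / 12) ** 0.5     # reduces to s - 6 when n == 12


rng = random.Random(42)  # fixed seed so the run is reproducible
samples = [approx_standard_normal(rng) for _ in range(50_000)]
print(round(statistics.fmean(samples), 3), round(statistics.pstdev(samples), 3))
```

Because the Irwin–Hall support is bounded, the tails are truncated; when exact normal deviates are needed, the standard library's `random.gauss` is the simpler choice.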

Triangular Distribution

In probability theory and statistics, the triangular distribution is a continuous probability distribution with lower limit $a$, upper limit $b$, and mode $c$, where $a < b$ and $a \le c \le b$.

Special cases

Mode at a bound

The distribution simplifies when $c = a$ or $c = b$. For example, if $a = 0$, $b = 1$ and $c = 1$, then the PDF and CDF become:

$$f(x) = 2x, \qquad F(x) = x^2 \qquad \text{for } 0 \le x \le 1.$$
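A Python sketch of the general piecewise PDF and CDF for lower limit $a$, upper limit $b$ and mode $c$ (function names hypothetical). Setting $a = 0$, $b = 1$, $c = 1$ should reproduce $f(x) = 2x$ and $F(x) = x^2$ on $[0, 1]$; the branches are ordered so that the mode-at-a-bound cases never divide by zero.

```python
def triangular_pdf(x: float, a: float, b: float, c: float) -> float:
    """PDF with lower limit a, upper limit b, mode c (a < b, a <= c <= b)."""
    if x < a or x > b:
        return 0.0
    if x < c:
        return 2 * (x - a) / ((b - a) * (c - a))  # rising side (skipped if c == a)
    if x == c:
        return 2 / (b - a)  # peak height at the mode
    return 2 * (b - x) / ((b - a) * (b - c))  # falling side (skipped if c == b)


def triangular_cdf(x: float, a: float, b: float, c: float) -> float:
    """CDF of the same distribution."""
    if x <= a:
        return 0.0
    if x <= c:
        return (x - a) ** 2 / ((b - a) * (c - a))
    if x < b:
        return 1 - (b - x) ** 2 / ((b - a) * (b - c))
    return 1.0


# Mode at the upper bound: a=0, b=1, c=1 gives f(x) = 2x and F(x) = x**2.
print(triangular_pdf(0.25, 0, 1, 1), triangular_cdf(0.5, 0, 1, 1))  # 0.5 0.25
```

For sampling, the standard library's `random.triangular(low, high, mode)` draws from the same family.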

Uniform Distribution (continuous)

In probability theory and statistics, the continuous uniform distributions or rectangular distributions are a family of symmetric probability distributions. Such a distribution describes an experiment where there is an arbitrary outcome that lies between certain bounds. The bounds are defined by the parameters $a$ and $b$, which are the minimum and maximum values. The interval can be either closed ($[a,b]$) or open ($(a,b)$). The distribution is often abbreviated $U(a,b)$, where $U$ stands for uniform distribution. The difference between the bounds defines the interval length; all intervals of the same length on the distribution's support are equally probable. It is the maximum entropy probability distribution for a random variable $X$ under no constraint other than that it is contained in the distribution's support.

Definitions

Probability density function

The probability density function of the continuous uniform distribution is

$$f(x) = \begin{cases} \dfrac{1}{b-a} & \text{for } a \le x \le b, \\ 0 & \text{otherwise.} \end{cases}$$
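The claim that equal-length intervals on the support are equally probable follows from the constant density $1/(b-a)$: the probability of a sub-interval is just its overlap length divided by $b - a$. A minimal Python sketch with hypothetical function names:

```python
def uniform_pdf(x: float, a: float, b: float) -> float:
    """f(x) = 1 / (b - a) for a <= x <= b, and 0 otherwise."""
    if not a < b:
        raise ValueError("require a < b")
    return 1.0 / (b - a) if a <= x <= b else 0.0


def uniform_prob(lo: float, hi: float, a: float, b: float) -> float:
    """P(lo <= X <= hi) for X ~ U(a, b): overlap length with [a, b] over b - a."""
    if not a < b:
        raise ValueError("require a < b")
    overlap = max(0.0, min(hi, b) - max(lo, a))
    return overlap / (b - a)


# Two sub-intervals of length 1 inside [0, 4] have the same probability.
print(uniform_prob(0, 1, 0, 4), uniform_prob(2.5, 3.5, 0, 4))  # 0.25 0.25
```

Samples from $U(a, b)$ can be drawn with the standard library's `random.uniform(a, b)`.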

Special Case

In logic, especially as applied in mathematics, concept $A$ is a special case or specialization of concept $B$ precisely if every instance of $A$ is also an instance of $B$ but not vice versa, or equivalently, if $B$ is a generalization of $A$ (Brown, James Robert. *Philosophy of Mathematics: An Introduction to a World of Proofs and Pictures*. United Kingdom: Taylor & Francis, 2005, p. 27). A limiting case is a type of special case which is arrived at by taking some aspect of the concept to the extreme of what is permitted in the general case. If $B$ is true, one can immediately deduce that $A$ is true as well, and if $A$ is false, $B$ can also be immediately deduced to be false. A degenerate case is a special case which is in some way qualitatively different from almost all of the cases allowed.

Examples

Special case examples include the following:

* All squares are rectangles (but not all rectangles are squares); therefore the square is a special case of the rectangle. It is also a special case of the rhombus ...

Moment (mathematics)

In mathematics, the moments of a function are certain quantitative measures related to the shape of the function's graph. If the function represents mass density, then the zeroth moment is the total mass, the first moment (normalized by total mass) is the center of mass, and the second moment is the moment of inertia. If the function is a probability distribution, then the first moment is the expected value, the second central moment is the variance, the third standardized moment is the skewness, and the fourth standardized moment is the kurtosis. For a distribution of mass or probability on a bounded interval, the collection of all the moments (of all orders, from $0$ to $\infty$) uniquely determines the distribution (Hausdorff moment problem). The same is not true on unbounded intervals (Hamburger moment problem). In the mid-nineteenth century, Pafnuty Chebyshev became the first person to think systematically in terms of the moments of random variables.

Significance of th ...
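For a density on a bounded interval, the moments named above can be approximated numerically. A minimal Python sketch, assuming a midpoint-rule quadrature (a choice made here, not taken from the text) and a hypothetical function name `moments`; kurtosis is reported as the plain fourth standardized moment, not excess kurtosis, so a normal distribution would score 3.

```python
from typing import Callable


def moments(pdf: Callable[[float], float], lo: float, hi: float,
            n: int = 100_000) -> dict[str, float]:
    """Approximate moments of a density supported on [lo, hi] with the midpoint rule."""
    step = (hi - lo) / n
    xs = [lo + (i + 0.5) * step for i in range(n)]  # cell midpoints
    ws = [pdf(x) * step for x in xs]                # approximate mass in each cell

    total = sum(ws)                                     # zeroth moment: total mass
    mean = sum(w * x for x, w in zip(xs, ws)) / total   # first moment / total mass

    def central(k: int) -> float:
        """k-th central moment, normalized by the total mass."""
        return sum(w * (x - mean) ** k for x, w in zip(xs, ws)) / total

    var = central(2)
    sd = var ** 0.5
    return {
        "total": total,
        "mean": mean,
        "variance": var,                   # second central moment
        "skewness": central(3) / sd ** 3,  # third standardized moment
        "kurtosis": central(4) / sd ** 4,  # fourth standardized moment (not excess)
    }


# Uniform density on [0, 1]: mean 1/2, variance 1/12, skewness 0, kurtosis 9/5.
print(moments(lambda x: 1.0, 0.0, 1.0))
```

The midpoint rule is accurate for smooth densities; densities with kinks or singularities may need a finer grid.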