|

Samplesort

Samplesort is a sorting algorithm that is a divide and conquer algorithm often used in parallel processing systems. Conventional divide and conquer sorting algorithms partitions the array into sub-intervals or buckets. The buckets are then sorted individually and then concatenated together. However, if the array is non-uniformly distributed, the performance of these sorting algorithms can be significantly throttled. Samplesort addresses this issue by selecting a sample of size from the -element sequence, and determining the range of the buckets by sorting the sample and choosing elements from the result. These elements (called splitters) then divide the array into approximately equal-sized buckets. Samplesort is described in the 1970 paper, "Samplesort: A Sampling Approach to Minimal Storage Tree Sorting", by W. D. Frazer and A. C. McKellar. Algorithm Samplesort is a generalization of quicksort. Where quicksort partitions its input into two parts at each step, based on a single ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sorting Algorithm

In computer science, a sorting algorithm is an algorithm that puts elements of a List (computing), list into an Total order, order. The most frequently used orders are numerical order and lexicographical order, and either ascending or descending. Efficient sorting is important for optimizing the Algorithmic efficiency, efficiency of other algorithms (such as search algorithm, search and merge algorithm, merge algorithms) that require input data to be in sorted lists. Sorting is also often useful for Canonicalization, canonicalizing data and for producing human-readable output. Formally, the output of any sorting algorithm must satisfy two conditions: # The output is in monotonic order (each element is no smaller/larger than the previous element, according to the required order). # The output is a permutation (a reordering, yet retaining all of the original elements) of the input. Although some algorithms are designed for sequential access, the highest-performing algorithms assum ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Quicksort

Quicksort is an efficient, general-purpose sorting algorithm. Quicksort was developed by British computer scientist Tony Hoare in 1959 and published in 1961. It is still a commonly used algorithm for sorting. Overall, it is slightly faster than merge sort and heapsort for randomized data, particularly on larger distributions. Quicksort is a divide-and-conquer algorithm. It works by selecting a "pivot" element from the array and partitioning the other elements into two sub-arrays, according to whether they are less than or greater than the pivot. For this reason, it is sometimes called partition-exchange sort. The sub-arrays are then sorted recursively. This can be done in-place, requiring small additional amounts of memory to perform the sorting. Quicksort is a comparison sort, meaning that it can sort items of any type for which a "less-than" relation (formally, a total order) is defined. It is a comparison-based sort since elements ''a'' and ''b'' are only swapped in ca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sorting Algorithms

In computer science, a sorting algorithm is an algorithm that puts elements of a list into an order. The most frequently used orders are numerical order and lexicographical order, and either ascending or descending. Efficient sorting is important for optimizing the efficiency of other algorithms (such as search and merge algorithms) that require input data to be in sorted lists. Sorting is also often useful for canonicalizing data and for producing human-readable output. Formally, the output of any sorting algorithm must satisfy two conditions: # The output is in monotonic order (each element is no smaller/larger than the previous element, according to the required order). # The output is a permutation (a reordering, yet retaining all of the original elements) of the input. Although some algorithms are designed for sequential access, the highest-performing algorithms assume data is stored in a data structure which allows random access. History and concepts From the begin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Multiprocessor

Multiprocessing (MP) is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined ( multiple cores on one die, multiple dies in one package, multiple packages in one system unit, etc.). A multiprocessor is a computer system having two or more processing units (multiple processors) each sharing main memory and peripherals, in order to simultaneously process programs. A 2009 textbook defined multiprocessor system similarly, but noted that the processors may share "some or all of the system’s memory and I/O facilities"; it also gave tightly coupled system as a synonymous term. At the operating system level, ''multiprocessing'' is sometimes used to refer to the executio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

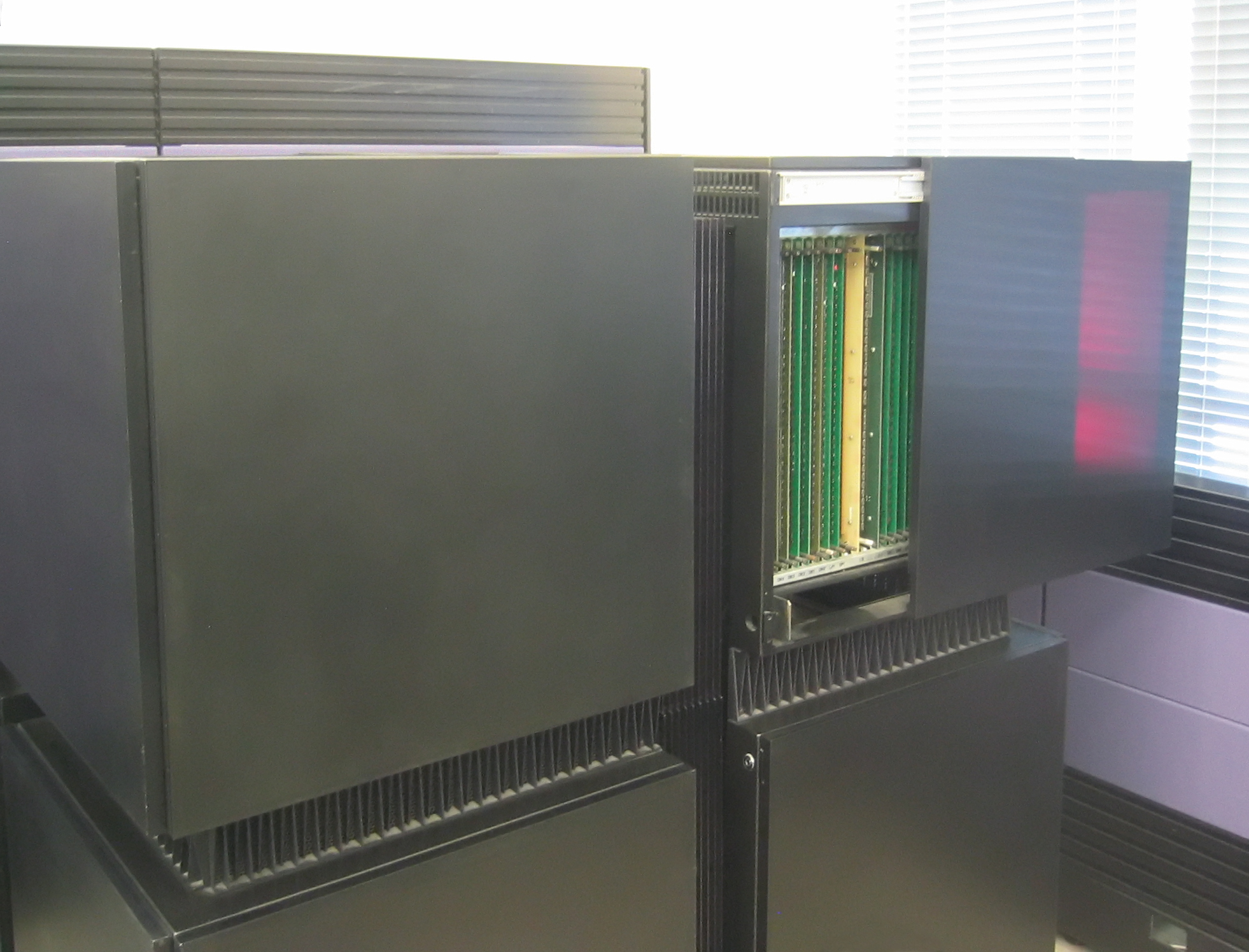

Connection Machine

The Connection Machine (CM) is a member of a series of massively parallel supercomputers sold by Thinking Machines Corporation. The idea for the Connection Machine grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science. Origin of idea Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel Hypercube internetwork topology, hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electric ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Flashsort

Flashsort is a distribution sorting algorithm showing linear computational complexity for uniformly distributed data sets and relatively little additional memory requirement. The original work was published in 1998 by Karl-Dietrich Neubert. Concept Flashsort is an efficient in-place implementation of histogram sort, itself a type of bucket sort. It assigns each of the input elements to one of ''buckets'', efficiently rearranges the input to place the buckets in the correct order, then sorts each bucket. The original algorithm sorts an input array as follows: # Using a first pass over the input or ''a priori'' knowledge, find the minimum and maximum sort keys. # Linearly divide the range into buckets. # Make one pass over the input, counting the number of elements which fall into each bucket. (Neubert calls the buckets "classes" and the assignment of elements to their buckets "classification".) # Convert the counts of elements in each bucket to a prefix sum, where i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Branch Misprediction

In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch (e.g., an if–then–else structure) will go before this is known definitively. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors play a critical role in achieving high performance in many modern pipelined microprocessor architectures. Two-way branching is usually implemented with a conditional jump instruction. A conditional jump can either be "taken" and jump to a different place in program memory, or it can be "not taken" and continue execution immediately after the conditional jump. It is not known for certain whether a conditional jump will be taken or not taken until the condition has been calculated and the conditional jump has passed the execution stage in the instruction pipeline (see fig. 1). Without branch prediction, the processor would have to wait until the conditional jump instruction has passed the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Predication (computer Architecture)

In computer architecture, predication is a feature that provides an alternative to conditional transfer of control, as implemented by conditional branch machine instructions. Predication works by having conditional (''predicated'') non-branch instructions associated with a ''predicate'', a Boolean value used by the instruction to control whether the instruction is allowed to modify the architectural state or not. If the predicate specified in the instruction is true, the instruction modifies the architectural state; otherwise, the architectural state is unchanged. For example, a predicated move instruction (a conditional move) will only modify the destination if the predicate is true. Thus, instead of using a conditional branch to select an instruction or a sequence of instructions to execute based on the predicate that controls whether the branch occurs, the instructions to be executed are associated with that predicate, so that they will be executed, or not executed, ba ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Loop Unrolling

Loop unrolling, also known as loop unwinding, is a loop transformation technique that attempts to optimize a program's execution speed at the expense of its binary size, which is an approach known as space–time tradeoff. The transformation can be undertaken manually by the programmer or by an optimizing compiler. On modern processors, loop unrolling is often counterproductive, as the increased code size can cause more cache misses; ''cf.'' Duff's device. The goal of loop unwinding is to increase a program's speed by reducing or eliminating instructions that control the loop, such as pointer arithmetic and "end of loop" tests on each iteration; reducing branch penalties; as well as hiding latencies, including the delay in reading data from memory. To eliminate this computational overhead, loops can be re-written as a repeated sequence of similar independent statements. Loop unrolling is also part of certain formal verification techniques, in particular bounded model chec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Animation

Animation is a filmmaking technique whereby still images are manipulated to create moving images. In traditional animation, images are drawn or painted by hand on transparent celluloid sheets to be photographed and exhibited on film. Animation has been recognised as an artistic medium, specifically within the entertainment industry. Many animations are either traditional animations or computer animations made with computer-generated imagery (CGI). Stop motion animation, in particular claymation, has continued to exist alongside these other forms. Animation is contrasted with live action, although the two do not exist in isolation. Many moviemakers have produced films that are a hybrid of the two. As CGI increasingly approximates photographic imagery, filmmakers can easily composite 3D animations into their film rather than using practical effects for showy visual effects (VFX). General overview Computer animation can be very detailed 3D animation, while 2D c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GPGPU

General-purpose computing on graphics processing units (GPGPU, or less often GPGP) is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU). The use of multiple video cards in one computer, or large numbers of graphics chips, further parallelizes the already parallel nature of graphics processing. Essentially, a GPGPU pipeline is a kind of parallel processing between one or more GPUs and CPUs that analyzes data as if it were in image or other graphic form. While GPUs operate at lower frequencies, they typically have many times the number of cores. Thus, GPUs can process far more pictures and graphical data per second than a traditional CPU. Migrating data into graphical form and then using the GPU to scan and analyze it can create a large speedup. GPGPU pipelines were developed at the beginning of the 21st century for grap ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |