
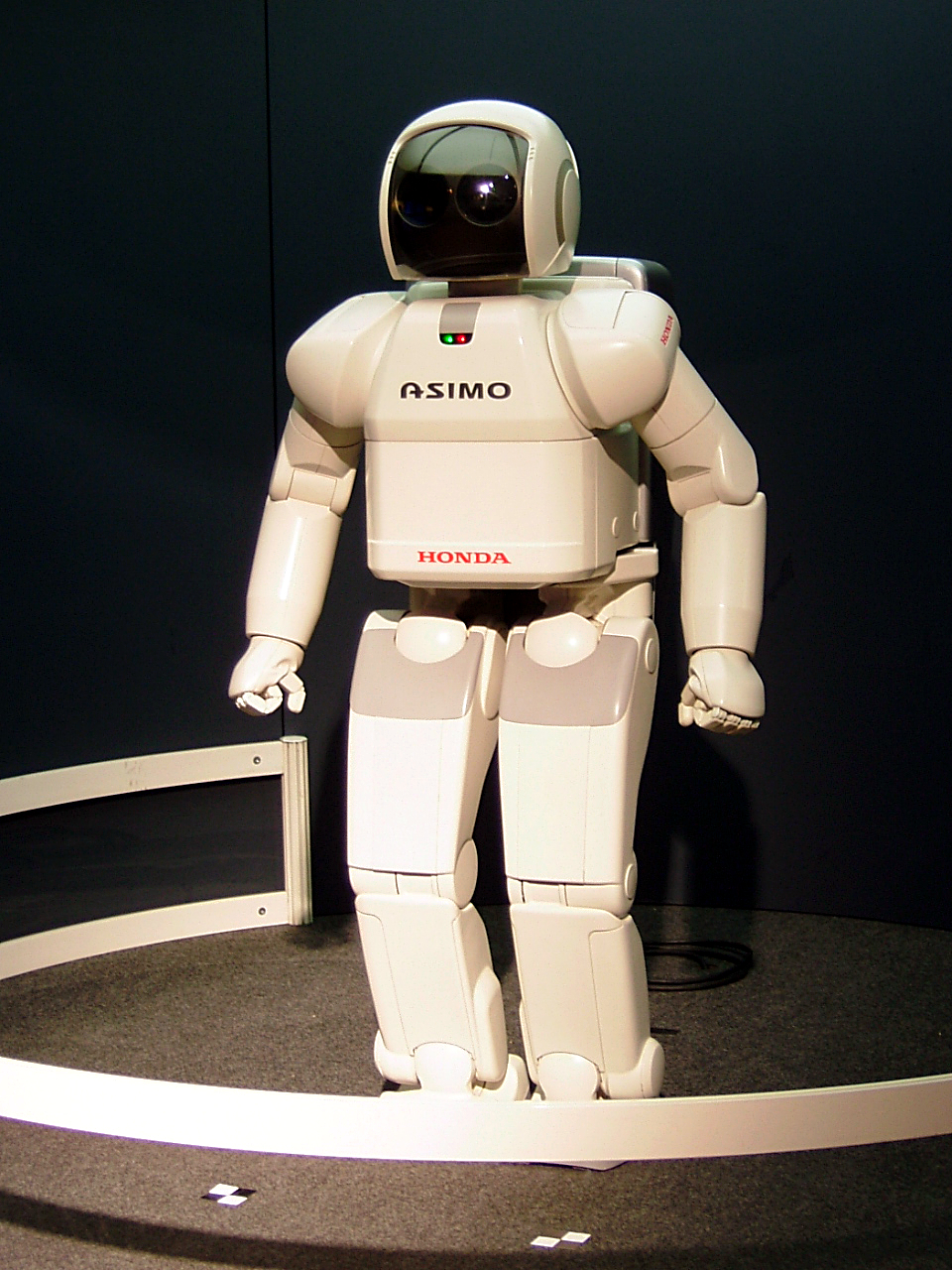

Robot Constitution

The Robot Constitution is a security ruleset, part of AutoRT, that Google DeepMind introduced in January 2024 for its AI products. The rules are inspired by Asimov's Three Laws of Robotics. They are applied to the large language models underlying the company's helper robots. The first rule is that a robot “may not injure a human being”.

Google DeepMind

DeepMind Technologies Limited, trading as Google DeepMind or simply DeepMind, is a British–American artificial intelligence research laboratory and a subsidiary of Alphabet Inc. Founded in the UK in 2010, it was acquired by Google in 2014 and merged with Google AI's Google Brain division to become Google DeepMind in April 2023. The company is headquartered in London, with research centres in the United States, Canada, France, Germany, and Switzerland. DeepMind introduced neural Turing machines (neural networks that can access external memory like a conventional Turing machine), producing a computer whose design loosely resembles short-term memory in the human brain. DeepMind has created neural network models to play video games and board games. It made headlines in 2016 after its AlphaGo program beat Lee Sedol, a professional Go player and world champion, in a five-game match that became the subject of a documentary film. A more general program, AlphaZero, ...

Asimov

Isaac Asimov (died April 6, 1992) was a Russian-born American writer and professor of biochemistry at Boston University. During his lifetime, Asimov was considered one of the "Big Three" science fiction writers, along with Robert A. Heinlein and Arthur C. Clarke. A prolific writer, he wrote or edited more than 500 books. He also wrote an estimated 90,000 letters and postcards. Best known for his hard science fiction, Asimov also wrote mysteries and fantasy, as well as popular science and other non-fiction. Asimov's most famous work is the Foundation series, the first three books of which won the one-time Hugo Award for "Best All-Time Series" in 1966. His other major series are the Galactic Empire series and the Robot series. The Galactic Empire novels are set in the much earlier history of the same fictional universe as the Foundation series. Later, with Foundation and Earth (1986), he linked this distant future to the Robot series ...

Three Laws of Robotics

The Three Laws of Robotics (often shortened to The Three Laws or Asimov's Laws) are a set of rules devised by science fiction author Isaac Asimov, which were to be followed by robots in several of his stories. The rules were introduced in his 1942 short story "Runaround" (included in the 1950 collection I, Robot), although similar restrictions had been implied in earlier stories.

The Three Laws, presented as quotations from the fictional "Handbook of Robotics, 56th Edition, 2058 A.D.", are:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The Three Laws form an organizing principle and unifying theme for Asimov's robot-based fiction, appearing in his Robot series, the stories linked ...
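Because each law yields to the ones before it, the Three Laws behave like a strict priority ordering. The Python sketch below is purely illustrative: it assumes every candidate action can be tagged in advance with the laws it would violate (the `Action` type, `violation_key`, and `choose` are hypothetical names, not taken from Asimov or from any robotics system) and picks the action whose violations are least severe under that ordering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action, pre-tagged with the laws it would violate."""
    name: str
    harms_human: bool     # First Law: injures a human or, through inaction, allows harm
    disobeys_order: bool  # Second Law: disobeys an order given by a human
    endangers_self: bool  # Third Law: fails to protect the robot's own existence


def violation_key(action: Action) -> tuple[bool, bool, bool]:
    # Tuples compare element by element and False < True, so any First Law
    # violation outweighs every combination of Second and Third Law violations,
    # and a Second Law violation outweighs a Third Law one.
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose(actions: list[Action]) -> Action:
    """Return the candidate that is least bad under the Three Laws' ordering."""
    return min(actions, key=violation_key)


# A human orders the robot into a dangerous area: obeying only breaks the
# Third Law, while refusing breaks the Second, so the robot obeys.
obey = Action("obey", harms_human=False, disobeys_order=False, endangers_self=True)
refuse = Action("refuse", harms_human=False, disobeys_order=True, endangers_self=False)
print(choose([obey, refuse]).name)  # obey

# If obeying would injure a human, refusing wins instead.
obey_harmful = Action("obey", harms_human=True, disobeys_order=False, endangers_self=False)
print(choose([obey_harmful, refuse]).name)  # refuse
```

Reducing each law to a single boolean is a large simplification. It hides the hard part, which is deciding whether a given action counts as harm in the first place.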

Large Language Model

A large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pretrained transformers (GPTs), which are largely used in generative chatbots such as ChatGPT or Gemini. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on.

Before the emergence of transformer-based models in 2017, some language models were considered large relative to the computational and data constraints of their time. In the early 1990s, IBM's statistical models pioneered word alignment techniques for machine translation, laying the groundwork for corpus-based language modeling ...
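"Self-supervised" means the training targets come from the text itself rather than from human labels. The minimal Python sketch below assumes the next-token prediction objective typically used for GPT-style models and uses whitespace splitting as a stand-in for a real tokenizer. It shows how raw text turns into (context, target) examples. The function name `next_token_pairs` and the `context_size` window are hypothetical.

```python
def next_token_pairs(tokens: list[str], context_size: int) -> list[tuple[tuple[str, ...], str]]:
    """Build (context, next-token) training examples from a token sequence.

    Each target is simply the token that follows its context in the text,
    so no human labelling is required.
    """
    pairs = []
    for i in range(1, len(tokens)):
        # Up to `context_size` preceding tokens form the input context.
        context = tuple(tokens[max(0, i - context_size):i])
        pairs.append((context, tokens[i]))
    return pairs


# Whitespace splitting is a stand-in for a real subword tokenizer.
tokens = "a robot may not injure a human being".split()
for context, target in next_token_pairs(tokens, context_size=3):
    print(context, "->", target)
```

In practice, transformer training typically computes these predictions for every position of a sequence in a single pass using a causal attention mask, rather than building the pairs explicitly as this sketch does.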

AI Software

Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., chess and Go). However, many AI applications are not perceived as AI: "A lot of cutting edge AI has filtered into general applications, often without ...

Existential Risk from Artificial General Intelligence

Existential risk from artificial intelligence refers to the idea that substantial progress in artificial general intelligence (AGI) could lead to human extinction or an irreversible global catastrophe. One argument for the importance of this risk references how human beings dominate other species because the human brain possesses distinctive capabilities other animals lack. If AI were to surpass human intelligence and become superintelligent, it might become uncontrollable. Just as the fate of the mountain gorilla depends on human goodwill, the fate of humanity could depend on the actions of a future machine superintelligence. The plausibility of existential catastrophe due to AI is widely debated. It hinges in part on whether AGI or superintelligence is achievable, the speed at which dangerous capabilities and behaviors emerge, and whether practical scenarios for AI takeovers exist. Concerns about superintelligence have been voiced by computer scientists and tech CEOs such ...