Quoc V. Le

Lê Viết Quốc (born 1982), or in romanized form Quoc Viet Le, is a Vietnamese computer scientist and a machine learning pioneer at Google Brain, which he established with colleagues from Google. He co-invented the doc2vec and seq2seq models in natural language processing. Le also initiated and led the AutoML initiative at Google Brain, including the proposal of neural architecture search.

Education and career

Le was born in Hương Thủy in the Thừa Thiên Huế province of Vietnam. He attended Quốc Học – Huế High School for the Gifted before moving to Australia in 2004 to pursue a Bachelor's degree at the Australian National University. During his undergraduate studies, he worked with Alex Smola on kernel methods in machine learning. In 2007, Le moved to the United States to pursue graduate studies in computer science at Stanford University, where his PhD advisor was Andrew Ng. In 2011, Le became a founding member of Goo ...

AutoML

Automated machine learning (AutoML) is the process of automating the tasks of applying machine learning to real-world problems. It is the combination of automation and ML. AutoML potentially includes every stage from beginning with a raw dataset to building a machine learning model ready for deployment. AutoML was proposed as an artificial intelligence-based solution to the growing challenge of applying machine learning. The high degree of automation in AutoML aims to allow non-experts to make use of machine learning models and techniques without requiring them to become experts in machine learning. Automating the process of applying machine learning end-to-end additionally offers the advantages of producing simpler solutions, faster creation of those solutions, and models that often outperform hand-designed models. Common techniques used in AutoML include hyperparameter optimization, meta-learning and neural architecture search.

Comparison to the standard approach

In a ...
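Hyperparameter optimization, one of the AutoML techniques named above, can be illustrated with random search, one of the simplest strategies. The following is only a minimal sketch under stated assumptions: the function name `random_search`, the search space, and the `validation_loss` objective are hypothetical, with `validation_loss` standing in for "train a model with these settings and return its validation loss". Real AutoML systems often use more sample-efficient search methods.

```python
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample hyperparameters uniformly from `space` and keep the best.

    `objective` maps a dict of hyperparameters to a loss (lower is better).
    `space` maps each hyperparameter name to a (low, high) range.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        # Draw one candidate configuration from the search space.
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        loss = objective(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


# Hypothetical objective standing in for real model training and evaluation;
# its minimum is at learning_rate=0.1, l2=0.01.
def validation_loss(params):
    return (params["learning_rate"] - 0.1) ** 2 + (params["l2"] - 0.01) ** 2


space = {"learning_rate": (0.0, 1.0), "l2": (0.0, 0.1)}
best, loss = random_search(validation_loss, space, n_trials=200)
print(best, loss)
```

Random search treats the model as a black box: it only needs a way to score a configuration, which is what makes this kind of search automatable without expert tuning.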

MIT Technology Review

MIT Technology Review is a bimonthly magazine wholly owned by the Massachusetts Institute of Technology. It was founded in 1899 as The Technology Review, and was re-launched without "The" in its name on April 23, 1998, under then publisher R. Bruce Journey. In September 2005, it was changed, under its then editor-in-chief and publisher, Jason Pontin, to a form resembling the historical magazine. Before the 1998 re-launch, the editor stated that "nothing will be left of the old magazine except the name." It was therefore necessary to distinguish between the modern and the historical Technology Review. The historical magazine had been published by the MIT Alumni Association, was more closely aligned with the interests of MIT alumni, and had a more intellectual tone and much smaller public circulation. The magazine, billed from 1998 to 2005 as "MIT's Magazine of Innovation", and from 2005 onwards as simply "published by MIT", focused on new technology and how it is ...

Chain-of-thought Prompting

Prompt engineering is the process of structuring or crafting an instruction in order to produce the best possible output from a generative artificial intelligence (AI) model. A prompt is natural language text describing the task that an AI should perform. A prompt for a text-to-text language model can be a query, a command, or a longer statement including context, instructions, and conversation history. Prompt engineering may involve phrasing a query, specifying a style, choosing words and grammar, providing relevant context, or describing a character for the AI to mimic. When communicating with a text-to-image or a text-to-audio model, a typical prompt is a description of a desired output such as "a high-quality photo of an astronaut riding a horse" or "Lo-fi slow BPM electro chill with organic samples". Prompting a text-to-image model may involve adding, removing, or emphasizing words to achieve a desired subject, style, layout, lighting, and aesthetic.

History

In 2018, ...

LaMDA

The London Academy of Music and Dramatic Art (LAMDA) is a drama school located in Hammersmith, London. Founded in 1861, it is the oldest specialist drama school in the British Isles and a founding member of the Federation of Drama Schools. In January 2025 the school expanded its training grounds to New York City through a partnership with A.R.T. New York in Manhattan to provide studio training to actors in the US. LAMDA was ranked as the No. 1 drama school in the UK by The Guardian University Guide in 2025. The academy's graduates work regularly at the Royal National Theatre, the Royal Shakespeare Company, Shakespeare's Globe, and the theatres of London's West End and Hollywood, as well as on the BBC, Broadway, and in the MCU. It is registered as a company under the name LAMDA Ltd and as a charity under its trading name London Academy of Music and Dramatic Art. There is an associate organisation in America under the name of American Friends of LAMDA (AFLAMDA). A very high ...

Representation Learning

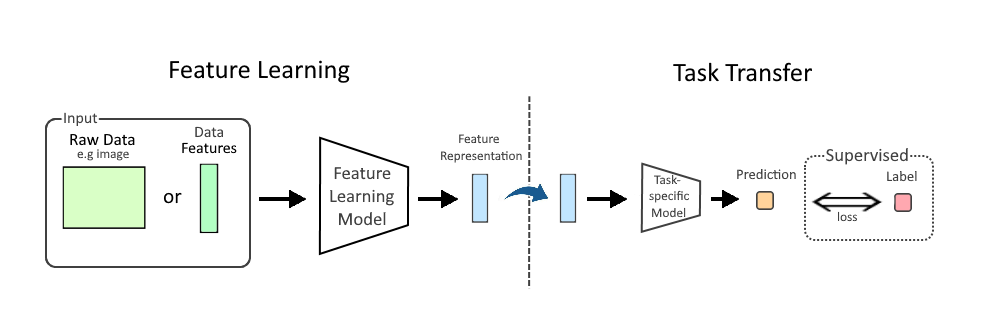

In machine learning (ML), feature learning or representation learning is a set of techniques that allow a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task. Feature learning is motivated by the fact that ML tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data, such as images, video, and sensor data, have not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms. Feature learning can be supervised, unsupervised, or self-supervised:

* In supervised feature learning, features are learned using labeled input data. Labeled data includes input-label pairs where the inp ...
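Principal component analysis (PCA) is one classic form of unsupervised feature learning: from unlabeled data it learns a linear projection that serves as a compact representation. The following is a minimal pure-Python sketch, assuming small, dense, numeric data that are not all identical. It learns only the first principal component, by power iteration on the sample covariance matrix, and the names `first_principal_component` and `encode` are hypothetical. A practical implementation would use a linear-algebra library, and deep representation learning replaces this linear map with a neural network.

```python
import math


def first_principal_component(data, n_iter=100):
    """Learn a 1-D linear representation of unlabeled rows via power iteration.

    Returns the per-feature means and a unit vector along the direction of
    greatest variance. Assumes the rows are not all identical, so the
    covariance matrix is non-zero.
    """
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    v = [1.0] * d  # initial guess; must not be orthogonal to the top eigenvector
    for _ in range(n_iter):
        # Multiply by the covariance matrix, then renormalize to unit length.
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v


def encode(row, means, v):
    """Map a raw row to its learned 1-D feature: the projection onto v."""
    return sum((row[j] - means[j]) * v[j] for j in range(len(v)))


# Unlabeled 2-D points lying on the line y = 2x.
points = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
means, v = first_principal_component(points)
print(v)  # approximately [0.447, 0.894], i.e. [1, 2] / sqrt(5)
print([encode(p, means, v) for p in points])
```

Because these points lie on a line, a single learned feature captures all of their variation: each 2-D point is summarized by one number, its position along the direction `v`.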

Tomáš Mikolov

Tomáš Mikolov is a Czech computer scientist working in the field of machine learning. In March 2020, Mikolov became a senior research scientist at the Czech Institute of Informatics, Robotics and Cybernetics.

Career

Mikolov obtained his PhD in Computer Science from Brno University of Technology for his work on recurrent neural network-based language models. He is the lead author of the 2013 paper that introduced the Word2vec technique in natural language processing and is an author on the FastText architecture. Mikolov came up with the idea to generate text from neural language models in 2007, and his RNNLM toolkit was the first to demonstrate the capability to train language models on large corpora, resulting in large improvements over the state of the art. Prior to joining Facebook in 2014, Mikolov worked as a visiting researcher at Johns Hopkins University, Université de Montréal, Microsoft and Google. He left Facebook sometime in 2019 or 2020 to join the Czech Institut ...

Machine Translation

Machine translation is the use of computational techniques to translate text or speech from one language to another, including the contextual, idiomatic and pragmatic nuances of both languages. Early approaches were mostly rule-based or statistical. These methods have since been superseded by neural machine translation and large language models.

History

Origins

The origins of machine translation can be traced back to the work of Al-Kindi, a ninth-century Arabic cryptographer who developed techniques for systemic language translation, including cryptanalysis, frequency analysis, and probability and statistics, which are used in modern machine translation. The idea of machine translation later appeared in the 17th century. In 1629, René Descartes proposed a universal language, with equivalent ideas in different tongues sharing one symbol. The idea of using digital computers for translation of natural languages was proposed as early as 1947 by England's A. D. Booth and Warr ...

Oriol Vinyals

Oriol Vinyals (born 1983) is a Spanish machine learning researcher at DeepMind. He is currently technical lead on Gemini, along with Noam Shazeer and Jeff Dean.

Education and career

Vinyals was born in Barcelona, Catalonia, Spain. He studied mathematics and telecommunication engineering at the Universitat Politècnica de Catalunya. He then moved to the US and studied for a Master's degree in computer science at the University of California, San Diego, and at the University of California, Berkeley, where he received his PhD in 2013 under Nelson Morgan in the Department of Electrical Engineering and Computer Science. Vinyals co-invented the seq2seq model for machine translation along with Ilya Sutskever and Quoc Viet Le. He led the AlphaStar research group at DeepMind, which applies artificial intelligence to computer games such as StarCraft II. In 2016, he was chosen by MIT Technology Review magazine as one of the 35 most innovative young people under 35. By 2022 he was a prin ...

Ilya Sutskever

Ilya Sutskever (born 8 December 1986) is an Israeli-Canadian computer scientist who specializes in machine learning. He has made several major contributions to the field of deep learning. With Alex Krizhevsky and Geoffrey Hinton, he co-invented AlexNet, a convolutional neural network. Sutskever co-founded OpenAI and served as its chief scientist. In 2023, he was one of the members of OpenAI's board that ousted Sam Altman from his position as the organization's CEO; Altman was reinstated a week later, and Sutskever stepped down from the board. In June 2024, Sutskever co-founded the company Safe Superintelligence alongside Daniel Gross and Daniel Levy.

Early life and education

Sutskever was born into a Jewish family in Nizhny Novgorod, Russia (then Gorky, Soviet Union). At the age of 5, he made aliyah with his family and lived in Jerusalem until he was 16, when his family moved to Canada. Sutskever attended the Open University of Israel from 2000 to 2002. After moving ...

CPU Core

A multi-core processor (MCP) is a microprocessor on a single integrated circuit (IC) with two or more separate central processing units (CPUs), called cores to emphasize their multiplicity (for example, dual-core or quad-core). Each core reads and executes program instructions, specifically ordinary CPU instructions (such as add, move data, and branch). However, the MCP can run instructions on separate cores at the same time, increasing overall speed for programs that support multithreading or other parallel computing techniques. Manufacturers typically integrate the cores onto a single IC die, known as a chip multiprocessor (CMP), or onto multiple dies in a single chip package. As of 2024, the microprocessors used in almost all new personal computers are multi-core. A multi-core processor implements multiprocessing in a single physical package. Designers may couple cores in a multi-core device tightly or loosely. For example, cores may or may not share c ...

Jeff Dean

Jeffrey Adgate Dean (born July 23, 1968) is an American computer scientist and software engineer. Since 2018, he has been the lead of Google AI. He was appointed Google's chief scientist in 2023 after the merger of DeepMind and Google Brain into Google DeepMind.

Education

Dean received a B.S., summa cum laude, from the University of Minnesota in computer science and economics in 1990. His undergraduate thesis was on neural networks in C programming, advised by Vipin Kumar. He received a Ph.D. in computer science from the University of Washington in 1996, working under Craig Chambers on compilers and whole-program optimization techniques for object-oriented programming languages. He was elected to the National Academy of Engineering in 2009, which recognized his work on "the science and engineering of large-scale distributed computer systems".

Career

Before joining Google, Dean worked at DEC/Compaq's Western Research Laboratory, where he worked on profiling tools, mic ...