
Product Of Experts

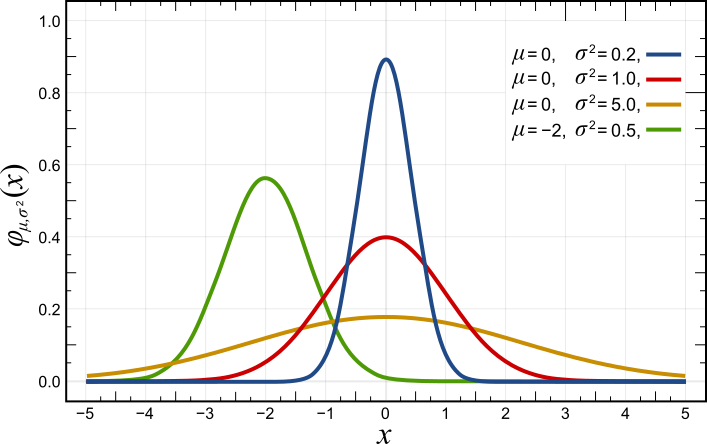

Product of experts (PoE) is a machine learning technique that models a probability distribution by combining the outputs of several simpler distributions. It was proposed by Geoffrey Hinton in 1999, along with an algorithm for training the parameters of such a system. The core idea is to combine several probability distributions ("experts") by multiplying their density functions, which makes the PoE classification similar to an "and" operation. This allows each expert to make decisions on the basis of a few dimensions without having to cover the full dimensionality of a problem:

$$P(y \mid \{\theta_j\}) = \frac{1}{Z} \prod_{j=1}^{M} f_j(y \mid \theta_j)$$

where $f_j$ are unnormalized expert densities and $Z = \int \mathrm{d}y \prod_{j=1}^{M} f_j(y \mid \theta_j)$ is a normalization constant (see partition function (statistical mechanics)). This is related to (but quite different from) a mixture model, where several probability distributions $p_j(y \mid \theta_j)$ are combined via an "or" operation, which is a weighted sum of their density functions: $P(y \mid \{\theta_j\}) = \sum_{j=1}^{M} \dots$ ...
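
The contrast between the "and" (product) and "or" (weighted sum) combinations can be illustrated numerically. The following is a minimal sketch, not Hinton's training algorithm: it assumes two one-dimensional Gaussian experts, evaluates them on a grid, and approximates the normalization constant Z with a Riemann sum. The function names, the grid, the expert parameters, and the equal mixture weights are all illustrative assumptions.

```python
import numpy as np

def gaussian_pdf(y, mean, std):
    """Density of a 1-D Gaussian; stands in for one expert (an assumption)."""
    return np.exp(-0.5 * ((y - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def product_of_experts(y, experts):
    """Multiply the expert densities pointwise, then divide by Z."""
    unnormalized = np.ones_like(y)
    for mean, std in experts:
        unnormalized *= gaussian_pdf(y, mean, std)
    dy = y[1] - y[0]
    z = unnormalized.sum() * dy  # Riemann-sum approximation of the integral Z
    return unnormalized / z

def mixture_of_densities(y, experts, weights):
    """Weighted sum of the same densities (the 'or' combination)."""
    return sum(w * gaussian_pdf(y, mean, std)
               for w, (mean, std) in zip(weights, experts))

# Hypothetical setup: two unit-variance experts centred at -1 and +1.
y = np.linspace(-10.0, 10.0, 4001)
dy = y[1] - y[0]
experts = [(-1.0, 1.0), (1.0, 1.0)]

poe = product_of_experts(y, experts)
mix = mixture_of_densities(y, experts, weights=[0.5, 0.5])

# Both densities have mean 0 here, so the variance is the second moment.
var_poe = np.sum(y ** 2 * poe) * dy
var_mix = np.sum(y ** 2 * mix) * dy
print(f"PoE variance: {var_poe:.3f}, mixture variance: {var_mix:.3f}")
```

In this setup the product is narrower than either expert, because a point must be plausible under both, while the mixture is broader than either, because a point only needs to be plausible under one.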

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

Geoffrey Hinton

Geoffrey Everest Hinton (born 1947) is a British-Canadian computer scientist, cognitive scientist, and cognitive psychologist known for his work on artificial neural networks, which earned him the title "the Godfather of AI". Hinton is University Professor Emeritus at the University of Toronto. From 2013 to 2023, he divided his time working for Google (Google Brain) and the University of Toronto before publicly announcing his departure from Google in May 2023, citing concerns about the many risks of artificial intelligence (AI) technology. In 2017, he co-founded and became the chief scientific advisor of the Vector Institute in Toronto. With David Rumelhart and Ronald J. Williams, Hinton was co-author of a highly cited paper published in 1986 that popularised the backpropagation algorithm for training multi-layer neural networks, although they were not the first to propose the approach. Hinton is viewed as a leading figure in the deep learning community. The image-recognitio ...

Partition Function (Statistical Mechanics)

In physics, a partition function describes the statistical properties of a system in thermodynamic equilibrium. Partition functions are functions of the thermodynamic state variables, such as the temperature and volume. Most of the aggregate thermodynamic variables of the system, such as the total energy, free energy, entropy, and pressure, can be expressed in terms of the partition function or its derivatives. The partition function is dimensionless. Each partition function is constructed to represent a particular statistical ensemble (which, in turn, corresponds to a particular free energy). The most common statistical ensembles have named partition functions. The canonical partition function applies to a canonical ensemble, in which the system is allowed to exchange heat with the environment at fixed temperature, volume, an ...

Mixture Model

In statistics, a mixture model is a probabilistic model for representing the presence of subpopulations within an overall population, without requiring that an observed data set should identify the sub-population to which an individual observation belongs. Formally a mixture model corresponds to the mixture distribution that represents the probability distribution of observations in the overall population. However, while problems associated with "mixture distributions" relate to deriving the properties of the overall population from those of the sub-populations, "mixture models" are used to make statistical inferences about the properties of the sub-populations given only observations on the pooled population, without sub-population identity information. Mixture models are used for clustering, under the name model-based clustering, and also for density estimation. Mixture models should not be confused with models for compositional data, i.e., data whose components are constra ...

Restricted Boltzmann Machine

A restricted Boltzmann machine (RBM) (also called a restricted Sherrington–Kirkpatrick model with external field or restricted stochastic Ising–Lenz–Little model) is a generative stochastic artificial neural network that can learn a probability distribution over its set of inputs. RBMs were initially proposed under the name Harmonium by Paul Smolensky in 1986, and rose to prominence after Geoffrey Hinton and collaborators used fast learning algorithms for them in the mid-2000s. RBMs have found applications in dimensionality reduction, classification, collaborative filtering, feature learning, topic modelling (Ruslan Salakhutdinov and Geoffrey Hinton (2010), "Replicated softmax: an undirected topic model", ''Neural Information Processing Systems'' 23), immunology, and even many-body quantum mechanics. They can be trained in either supervised or unsupervised ways, depending on the task. As their name implies, RBMs are a variant of Boltzmann machines, with the restrictio ...

Mixture Of Experts

Mixture of experts (MoE) is a machine learning technique where multiple expert networks (learners) are used to divide a problem space into homogeneous regions. MoE is a form of ensemble learning; such models were also called committee machines.

Basic theory

MoE always has the following components, but they are implemented and combined differently according to the problem being solved:

* Experts $f_1, \dots, f_n$, each taking the same input $x$ and producing outputs $f_1(x), \dots, f_n(x)$.
* A weighting function (also known as a gating function) $w$, which takes input $x$ and produces a vector of outputs $(w(x)_1, \dots, w(x)_n)$. This may or may not be a probability distribution, but in both cases, its entries are non-negative.
* $\theta = (\theta_0, \theta_1, \dots, \theta_n)$ is the set of parameters. The parameter $\theta_0$ is for the weighting function. The parameters $\theta_1, \dots, \theta_n$ are for the experts.
* Given an input $x$, the mixture of experts produces a single output by combinin ...
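
The components listed above can be sketched in a few lines. This is only one possible instantiation under stated assumptions: the experts are linear functions, the weighting function is a softmax over a linear map (so its entries are non-negative and sum to one), and the outputs are combined as the weighted sum of the expert outputs. The source text is cut off before it states the combination rule, so the weighted sum here is an assumption, as are all function and variable names.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax; yields non-negative weights summing to 1."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def mixture_of_experts(x, theta_0, expert_thetas):
    """Combine n linear experts with a softmax gate (illustrative choice).

    x:             input vector, shape (d,)
    theta_0:       gating parameters, shape (n, d)
    expert_thetas: expert parameters theta_1..theta_n, shape (n, d)
    """
    w = softmax(theta_0 @ x)          # gating weights w(x)_1..w(x)_n
    outputs = expert_thetas @ x       # expert outputs f_1(x)..f_n(x)
    return w @ outputs, w             # assumed combination: weighted sum

# Hypothetical example: 4 experts on a 3-dimensional input.
rng = np.random.default_rng(0)
x = rng.normal(size=3)
theta_0 = rng.normal(size=(4, 3))
expert_thetas = rng.normal(size=(4, 3))

y, w = mixture_of_experts(x, theta_0, expert_thetas)
print("gating weights:", w)
print("combined output:", y)
```

With a softmax gate the combined output is a convex combination of the expert outputs, so it always lies between the smallest and largest expert prediction for that input.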

Boltzmann Machine

A Boltzmann machine (also called Sherrington–Kirkpatrick model with external field or stochastic Ising model), named after Ludwig Boltzmann, is a spin-glass model with an external field, i.e., a Sherrington–Kirkpatrick model, that is a stochastic Ising model. It is a statistical physics technique applied in the context of cognitive science. It is also classified as a Markov random field. Boltzmann machines are theoretically intriguing because of the locality and Hebbian nature of their training algorithm (being trained by Hebb's rule), and because of their parallelism and the resemblance of their dynamics to simple physical processes. Boltzmann machines with unconstrained connectivity have not been proven useful for practical problems in machine learning or inference, but if the connectivity is properly constrained, the learning can be made efficient enough to be useful for practical problems. Th ...

Scholarpedia

''Scholarpedia'' is an English-language wiki-based online encyclopedia with features commonly associated with open-access online academic journals, which aims to have quality content in science and medicine. ''Scholarpedia'' articles are written by invited or approved expert authors and are subject to peer review. ''Scholarpedia'' lists the real names and affiliations of all authors, curators and editors involved in an article; however, the peer review process (which can suggest changes or additions, and has to be satisfied before an article can appear) is anonymous. ''Scholarpedia'' articles are stored in an online repository, and can be cited as conventional journal articles (''Scholarpedia'' has an ISSN). The ''Scholarpedia'' citation system includes support for revision numbers. The project was created in February 2006 by Eugene M. Izhikevich, while he was a researcher at the Neurosciences Institute, San Diego, California. Izhikevich ...