
Outline Of Probability

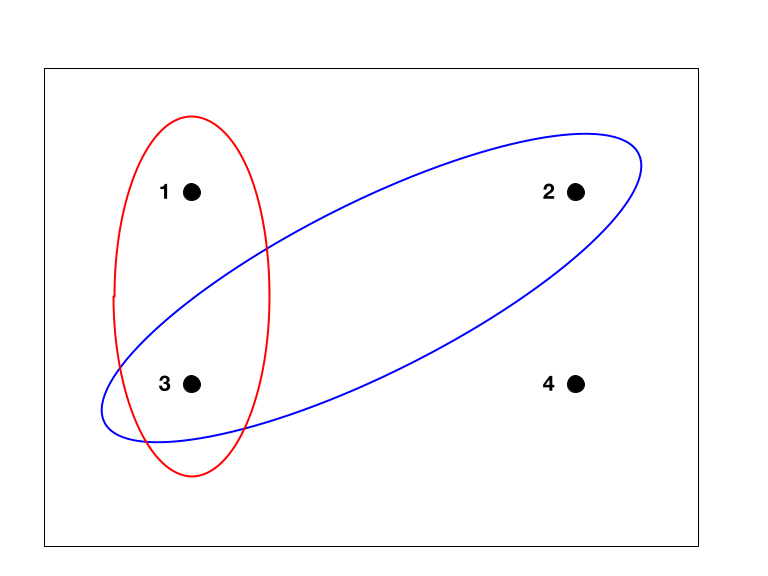

Probability is a measure of the likeliness that an event will occur. Probability is used to quantify an attitude of mind towards some proposition whose truth is not certain. The proposition of interest is usually of the form "A specific event will occur." The attitude of mind is of the form "How certain is it that the event will occur?" The certainty that is adopted can be described in terms of a numerical measure, and this number, between 0 and 1 (where 0 indicates impossibility and 1 indicates certainty), is called the probability. Probability theory is used extensively in statistics, mathematics, science and philosophy to draw conclusions about the likelihood of potential events and the underlying mechanics of complex systems. Introduction: Probability and randomness. Basic probability (related topics: set theory, simple theorems in the algebra of sets). Events: Events in probability theory; Elementary events, sample spaces, Venn diagrams; Mutual exclusivity; Ele ...

Probability

Probability is the branch of mathematics concerning numerical descriptions of how likely an event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and 1, where, roughly speaking, 0 indicates impossibility of the event and 1 indicates certainty (Alan Stuart and Keith Ord, "Kendall's Advanced Theory of Statistics, Volume 1: Distribution Theory", 6th Ed, 2009; William Feller, "An Introduction to Probability Theory and Its Applications", Vol 1, 3rd Ed, Wiley, 1968). The higher the probability of an event, the more likely it is that the event will occur. A simple example is the tossing of a fair (unbiased) coin. Since the coin is fair, the two outcomes ("heads" and "tails") are equally probable: the probability of "heads" equals the probability of "tails". Since no other outcomes are possible, each of "heads" and "tails" has probability 1/2 (which could also be written ...
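The fair-coin reasoning above can be written out as a minimal sketch. The names `outcomes` and `probability` are illustrative choices, not taken from the source; exact fractions are used so that no rounding is involved.

```python
from fractions import Fraction

# The two possible outcomes of one toss of a fair coin.
outcomes = ("heads", "tails")

# Fairness means every outcome gets the same share of the total probability 1.
probability = {outcome: Fraction(1, len(outcomes)) for outcome in outcomes}

# Since no other outcomes are possible, the probabilities must add up to 1.
total = sum(probability.values())
```

Here `probability["heads"]` and `probability["tails"]` are both `Fraction(1, 2)`, and `total` equals 1.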

Frequency Probability

Frequentist probability or frequentism is an interpretation of probability; it defines an event's probability as the limit of its relative frequency in many trials (the long-run probability). Probabilities can be found (in principle) by a repeatable objective process (and are thus ideally devoid of opinion). The continued use of frequentist methods in scientific inference, however, has been called into question. The development of the frequentist account was motivated by the problems and paradoxes of the previously dominant viewpoint, the classical interpretation. In the classical interpretation, probability was defined in terms of the principle of indifference, based on the natural symmetry of a problem; for example, the probabilities of dice games arise from the natural symmetric 6-sidedness of the cube. This classical interpretation stumbled at any statistical problem that has no natural symmetry for reasoning. Definition: In the frequentist interpretation, probabilities ...
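As a rough illustration of the relative-frequency idea, the sketch below simulates tosses of a fair coin and reports the fraction that came up heads. The function name `relative_frequency` and the fixed seed are assumptions made for reproducibility; a finite simulation only approximates the limit that the frequentist definition refers to.

```python
import random


def relative_frequency(num_trials: int, seed: int = 0) -> float:
    """Fraction of simulated fair-coin tosses that land heads."""
    rng = random.Random(seed)  # seeded generator so repeated runs agree
    heads = sum(rng.random() < 0.5 for _ in range(num_trials))
    return heads / num_trials


# The relative frequency should settle near 0.5 as the number of trials grows.
estimates = {n: relative_frequency(n) for n in (100, 10_000, 100_000)}
```

With more trials the estimate typically moves closer to 1/2, although any single finite run can deviate from it.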

Random Compact Set

In mathematics, a random compact set is essentially a compact set-valued random variable. Random compact sets are useful in the study of attractors for random dynamical systems. Definition: Let (M, d) be a complete separable metric space. Let \mathcal{K} denote the set of all compact subsets of M. The Hausdorff metric h on \mathcal{K} is defined by h(K_1, K_2) := \max \left\{ \sup_{a \in K_1} \inf_{b \in K_2} d(a, b), \sup_{b \in K_2} \inf_{a \in K_1} d(a, b) \right\}. (\mathcal{K}, h) is also a complete separable metric space. The corresponding open subsets generate a σ-algebra on \mathcal{K}, the Borel σ-algebra \mathcal{B}(\mathcal{K}) of \mathcal{K}. A random compact set is a measurable function K from a probability space (\Omega, \mathcal{F}, \mathbb{P}) into (\mathcal{K}, \mathcal{B}(\mathcal{K})). Put another way, a random compact set is a measurable function K \colon \Omega \to 2^{M} such that K(\omega) is almost surely compact, where an event is said to happen almost surely (sometimes abbreviated as a.s.) if it happens with probability 1 (or Lebesgue measure 1) ...
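For finite, non-empty point sets (which are compact in any metric space) the suprema and infima in the Hausdorff metric become maxima and minima, so the distance can be computed directly. The following is a minimal sketch under that finiteness assumption; `hausdorff_distance` and its parameter names are hypothetical, and the metric `d` is passed in by the caller.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def hausdorff_distance(
    k1: Sequence[T], k2: Sequence[T], d: Callable[[T, T], float]
) -> float:
    """Hausdorff distance between two finite, non-empty point sets."""

    def directed(source: Sequence[T], target: Sequence[T]) -> float:
        # Largest distance from a point of `source` to its nearest point of `target`.
        return max(min(d(a, b) for b in target) for a in source)

    # The metric is the larger of the two directed distances.
    return max(directed(k1, k2), directed(k2, k1))


# Example on the real line with the usual metric |a - b|.
example = hausdorff_distance([0.0, 1.0], [0.0, 3.0], lambda a, b: abs(a - b))
```

In the example, every point of the first set lies within 1 of the second set, but the point 3.0 is at distance 2 from the first set, so the result is 2.0.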

Random Element

In probability theory, a random element is a generalization of the concept of random variable to more complicated spaces than the simple real line. The concept was introduced by Maurice Fréchet (1948), who commented that the “development of probability theory and expansion of area of its applications have led to necessity to pass from schemes where (random) outcomes of experiments can be described by number or a finite set of numbers, to schemes where outcomes of experiments represent, for example, vectors, functions, processes, fields, series, transformations, and also sets or collections of sets.” The modern-day usage of “random element” frequently assumes the space of values is a topological vector space, often a Banach or Hilbert space with a specified natural sigma algebra of subsets. Definition: Let (\Omega, \mathcal{F}, P) be a probability space, and (E, \mathcal{E}) a measurable space. A random element with values in E is a function X \colon \Omega \to E which is (\mathcal{F}, \mathcal{E})-measurable. That is, a ...

Standard Probability Space

In probability theory, a standard probability space, also called Lebesgue–Rokhlin probability space or just Lebesgue space (the latter term is ambiguous) is a probability space satisfying certain assumptions introduced by Vladimir Rokhlin in 1940. Informally, it is a probability space consisting of an interval and/or a finite or countable number of atoms. The theory of standard probability spaces was started by von Neumann in 1932 and shaped by Vladimir Rokhlin in 1940. Rokhlin showed that the unit interval endowed with the Lebesgue measure has important advantages over general probability spaces, yet can be effectively substituted for many of these in probability theory. The dimension of the unit interval is not an obstacle, as was clear already to Norbert Wiener. He constructed the Wiener process (also called Brownian motion) in the form of a measurable map from the unit interval to the space of continuous functions. Short history The theory of standard probability ...

Probability Space

In probability theory, a probability space or a probability triple (\Omega, \mathcal{F}, P) is a mathematical construct that provides a formal model of a random process or "experiment". For example, one can define a probability space which models the throwing of a die. A probability space consists of three elements (Stroock, D. W. (1999). Probability theory: an analytic view. Cambridge University Press): (1) a sample space, \Omega, which is the set of all possible outcomes; (2) an event space, which is a set of events \mathcal{F}, an event being a set of outcomes in the sample space; (3) a probability function, P, which assigns each event in the event space a probability, which is a number between 0 and 1. In order to provide a sensible model of probability, these elements must satisfy a number of axioms, detailed in this article. In the example of the throw of a standard die, we would take the sample space to be \{1, 2, 3, 4, 5, 6\}. For the event space, we could simply use the set of all subsets of the sam ...
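The die example can be sketched in code as follows. This assumes a fair die (each face equally likely), which the text above does not state explicitly; the names `sample_space`, `event_space`, `power_set` and `prob` are illustrative.

```python
from fractions import Fraction
from itertools import combinations

# Sample space: the six faces of a standard die.
sample_space = frozenset(range(1, 7))


def power_set(s: frozenset) -> set:
    """All subsets of s, each represented as a frozenset."""
    items = sorted(s)
    return {
        frozenset(combo)
        for size in range(len(items) + 1)
        for combo in combinations(items, size)
    }


# Event space: here simply every subset of the sample space.
event_space = power_set(sample_space)


def prob(event: frozenset) -> Fraction:
    """Probability function for a fair die (assumed): favourable / total."""
    return Fraction(len(event), len(sample_space))


even = frozenset({2, 4, 6})
p_even = prob(even)  # probability of rolling an even number
```

The event space has 2^6 = 64 events, including the empty event and the whole sample space, and `prob` maps each of them to a number between 0 and 1.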

Probability Measure

In mathematics, a probability measure is a real-valued function defined on a set of events in a probability space that satisfies measure properties such as countable additivity. The difference between a probability measure and the more general notion of measure (which includes concepts like area or volume) is that a probability measure must assign value 1 to the entire probability space. Intuitively, the additivity property says that the probability assigned to the union of two disjoint events by the measure should be the sum of the probabilities of the events; for example, the value assigned to "1 or 2" in a throw of a die should be the sum of the values assigned to "1" and "2". Probability measures have applications in diverse fields, from physics to finance and biology. Definition: The requirements for a function \mu to be a probability measure on a probability space are that: \mu must return results in the unit interval [0, 1], returning 0 for the empty set and 1 ...
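The additivity property for the "1 or 2" example can be checked with a small sketch. It assumes a finite sample space with equal point weights of 1/6; `weights`, `mu` and `is_additive_on` are hypothetical names used only for illustration, and only finite additivity on a given pair of events is tested.

```python
from fractions import Fraction

# Point masses for a fair six-sided die (an assumption for this sketch).
weights = {face: Fraction(1, 6) for face in range(1, 7)}


def mu(event: set) -> Fraction:
    """Measure of an event: the sum of the weights of its outcomes."""
    return sum((weights[x] for x in event), Fraction(0))


def is_additive_on(a: set, b: set) -> bool:
    """Check mu(a ∪ b) == mu(a) + mu(b) for two disjoint events."""
    if a & b:
        raise ValueError("events must be disjoint")
    return mu(a | b) == mu(a) + mu(b)


one_or_two = mu({1, 2})  # value assigned to the event "1 or 2"
```

Here `mu(set())` is 0, `mu(set(weights))` is 1, and `one_or_two` equals `mu({1}) + mu({2})`, which is 1/3.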

Sample Space

In probability theory, the sample space (also called sample description space, possibility space, or outcome space) of an experiment or random trial is the set of all possible outcomes or results of that experiment. A sample space is usually denoted using set notation, and the possible ordered outcomes, or sample points, are listed as elements in the set. It is common to refer to a sample space by the labels S, Ω, or U (for "universal set"). The elements of a sample space may be numbers, words, letters, or symbols. A sample space can be finite, countably infinite, or uncountably infinite. A subset of the sample space is an event, denoted by E. If the outcome of an experiment is included in E, then event E has occurred. For example, if the experiment is tossing a single coin, the sample space is the set \{H, T\}, where the outcome H means that the coin is heads and the outcome T means that the coin is tails. The possible non-empty events are E = \{H\}, E = \{T\}, and E = \{H, T\}. For tossing two coins, ...
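A sample space for repeated coin tosses can be enumerated mechanically. The sketch below represents each outcome as a tuple of "H"/"T" labels; the helper names `sample_space` and `has_occurred` are assumptions for illustration and do not come from the source.

```python
from itertools import product


def sample_space(num_coins: int) -> set:
    """All ordered outcomes of tossing `num_coins` coins."""
    return set(product("HT", repeat=num_coins))


def has_occurred(event: set, outcome: tuple) -> bool:
    """An event has occurred when the observed outcome is one of its elements."""
    return outcome in event


one_coin = sample_space(1)
two_coins = sample_space(2)

# Event "at least one head" for two coins: a subset of the sample space.
at_least_one_head = {outcome for outcome in two_coins if "H" in outcome}
```

For one coin the sample space has two outcomes; for two coins it has four ordered pairs, three of which belong to `at_least_one_head`.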

Measure Theory

In mathematics, the concept of a measure is a generalization and formalization of geometrical measures (length, area, volume) and other common notions, such as mass and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory and integration theory, and can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure (such as spectral measures and projection-valued measures) are widely used in quantum physics and physics in general. The intuition behind this concept dates back to ancient Greece, when Archimedes tried to calculate the area of a circle. But it was not until the late 19th and early 20th centuries that measure theory became a branch of mathematics. The foundations of modern measure theory were laid in the works of Émile Borel, Henri Lebesgue, Nikolai Luzin, Johann Radon, Const ...

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly p ...

Independence (Probability Theory)

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independe ...
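The difference between pairwise and mutual independence can be seen in a standard textbook example, sketched here under the assumption of two fair coin tosses: A is "first toss is heads", B is "second toss is heads", and C is "both tosses agree". The function names are illustrative, and independence is checked via the product rule P(A ∩ B) = P(A)P(B).

```python
from fractions import Fraction
from itertools import combinations, product

# Sample space of two fair coin tosses; all four outcomes equally likely.
omega = set(product("HT", repeat=2))


def prob(event: set) -> Fraction:
    return Fraction(len(event), len(omega))


A = {w for w in omega if w[0] == "H"}   # first toss is heads
B = {w for w in omega if w[1] == "H"}   # second toss is heads
C = {w for w in omega if w[0] == w[1]}  # both tosses agree


def pairwise_independent(events: list) -> bool:
    """Product rule holds for every pair of events."""
    return all(prob(a & b) == prob(a) * prob(b) for a, b in combinations(events, 2))


def mutually_independent(events: list) -> bool:
    """Product rule holds for every sub-collection of two or more events."""
    for size in range(2, len(events) + 1):
        for subset in combinations(events, size):
            expected = Fraction(1)
            for event in subset:
                expected *= prob(event)
            if prob(set.intersection(*subset)) != expected:
                return False
    return True
```

In this example every pair satisfies the product rule, but P(A ∩ B ∩ C) = 1/4 while P(A)P(B)P(C) = 1/8, so the three events are pairwise independent without being mutually independent.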