
Newton's Identities

In mathematics, Newton's identities, also known as the Girard–Newton formulae, give relations between two types of symmetric polynomials, namely between power sums and elementary symmetric polynomials. Evaluated at the roots of a monic polynomial ''P'' in one variable, they allow expressing the sums of the ''k''-th powers of all roots of ''P'' (counted with their multiplicity) in terms of the coefficients of ''P'', without actually finding those roots. These identities were found by Isaac Newton around 1666, apparently in ignorance of earlier work (1629) by Albert Girard. They have applications in many areas of mathematics, including Galois theory, invariant theory, group theory and combinatorics, as well as applications outside mathematics, including general relativity.

Mathematical statement: formulation in terms of symmetric polynomials. Let ''x_1'', ..., ''x_n'' be variables, and for ''k'' ≥ 1 denote by ''p_k''(''x_1'', ..., ''x_n'') the ''k''-th p ...
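The excerpt stops before stating the identities themselves. The sketch below assumes their standard form, k e_k = Σ_{i=1}^{k} (-1)^{i-1} e_{k-i} p_i (with e_0 = 1 and e_i = 0 for i > n), together with Vieta's formulas e_k = (-1)^k a_{n-k} for a monic polynomial x^n + a_{n-1}x^{n-1} + ... + a_0. Solving the identity for p_k gives a recurrence that produces the power sums of the roots from the coefficients alone. The function name power_sums_from_coeffs is hypothetical.

```python
def power_sums_from_coeffs(coeffs, m):
    """Return [p_1, ..., p_m], the power sums of the roots of the monic polynomial
    x^n + coeffs[0] x^(n-1) + ... + coeffs[n-1], without computing the roots.

    coeffs lists a_{n-1}, ..., a_0 (the leading coefficient 1 is omitted).
    """
    n = len(coeffs)
    # Vieta: e_k = (-1)^k * a_{n-k}; coeffs[k-1] holds a_{n-k}; e_0 = 1.
    e = [1] + [(-1) ** k * coeffs[k - 1] for k in range(1, n + 1)]
    p = [0] * (m + 1)  # p[0] is unused
    for k in range(1, m + 1):
        # Newton: p_k = sum_{i=1}^{k-1} (-1)^(i-1) e_i p_{k-i} + (-1)^(k-1) k e_k,
        # where e_i = 0 for i > n.
        total = 0
        for i in range(1, min(k - 1, n) + 1):
            total += (-1) ** (i - 1) * e[i] * p[k - i]
        if k <= n:
            total += (-1) ** (k - 1) * k * e[k]
        p[k] = total
    return p[1:]


# (x - 1)(x - 2)(x - 3) = x^3 - 6x^2 + 11x - 6
print(power_sums_from_coeffs([-6, 11, -6], 4))  # [6, 14, 36, 98]
```

The output can be checked by hand: 1 + 2 + 3 = 6, 1 + 4 + 9 = 14, 1 + 8 + 27 = 36 and 1 + 16 + 81 = 98. Using only integer arithmetic keeps the result exact for integer coefficients.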

Mathematics

Mathematics is a field of study that discovers and organizes methods, theories and theorems that are developed and proved for the needs of empirical sciences and mathematics itself. There are many areas of mathematics, which include number theory (the study of numbers), algebra (the study of formulas and related structures), geometry (the study of shapes and spaces that contain them), analysis (the study of continuous changes), and set theory (presently used as a foundation for all mathematics). Mathematics involves the description and manipulation of abstract objects that consist of either abstractions from nature or, in modern mathematics, purely abstract entities that are stipulated to have certain properties, called axioms. Mathematics uses pure reason to prove properties of objects, a ''proof'' consisting of a succession of applications of in ...

Matrix (mathematics)

In mathematics, a matrix (plural: matrices) is a rectangular array or table of numbers, symbols, or expressions, with elements or entries arranged in rows and columns, which is used to represent a mathematical object or property of such an object. For example, \begin{bmatrix}1 & 9 & -13 \\ 20 & 5 & -6\end{bmatrix} is a matrix with two rows and three columns. This is often referred to as a "two-by-three matrix", a "2 × 3 matrix", or a matrix of dimension 2 × 3. Matrices are commonly used in linear algebra, where they represent linear maps. In geometry, matrices are widely used for specifying and representing geometric transformations (for example rotations) and coordinate changes. In numerical analysis, many computational problems are solved by reducing them to a matrix computation, and this often involves computing with matrices of huge dimensions. Matrices are used in most areas of mathematics and scientific fields, either directly ...
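To make the "two rows and three columns" description and the link to linear maps concrete, the sketch below stores the example matrix as a list of rows and applies it to a vector with three entries, producing a vector with two entries. The helper names shape and apply_matrix are hypothetical, and plain lists are used only to keep the example dependency-free.

```python
# The 2 x 3 example matrix, stored as a list of rows.
A = [[1, 9, -13],
     [20, 5, -6]]


def shape(m):
    """Return (rows, columns) of a matrix stored as a list of equal-length rows."""
    return len(m), len(m[0])


def apply_matrix(m, v):
    """Apply the linear map represented by m to the column vector v.

    A 2 x 3 matrix maps vectors with 3 entries to vectors with 2 entries.
    """
    if len(v) != len(m[0]):
        raise ValueError("vector length must equal the number of columns")
    return [sum(a * x for a, x in zip(row, v)) for row in m]


print(shape(A))                    # (2, 3)
print(apply_matrix(A, [1, 1, 1]))  # [-3, 19]
```

Applying the matrix to the unit vector [1, 0, 0] returns its first column, [1, 20], which is one way to see that the columns record where the map sends each basis vector.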

Bell Polynomial

In combinatorial mathematics, the Bell polynomials, named in honor of Eric Temple Bell, are used in the study of set partitions. They are related to Stirling and Bell numbers. They also occur in many applications, such as in Faà di Bruno's formula.

Definitions: exponential Bell polynomials. The ''partial'' or ''incomplete'' exponential Bell polynomials are a triangular array of polynomials given by
:\begin{align} B_{n,k}(x_1,x_2,\dots,x_{n-k+1}) &= \sum \frac{n!}{j_1!\,j_2!\cdots j_{n-k+1}!} \left(\frac{x_1}{1!}\right)^{j_1}\left(\frac{x_2}{2!}\right)^{j_2}\cdots\left(\frac{x_{n-k+1}}{(n-k+1)!}\right)^{j_{n-k+1}} \\ &= n! \sum \prod_{i=1}^{n-k+1} \frac{x_i^{j_i}}{(i!)^{j_i}\, j_i!}, \end{align}
where the sum is taken over all sequences ''j_1'', ''j_2'', ''j_3'', ..., ''j_{n-k+1}'' of non-negative integers such that these two conditions are satisfied:
:j_1 + j_2 + \cdots + j_{n-k+1} = k,
:j_1 + 2 j_2 + 3 j_3 + \cdots + (n-k+1) j_{n-k+1} = n.
The sum
:\begin{align} B_n(x_1,\dots,x_n) &= \sum_{k=1}^n B_{n,k}(x_1,x_2,\dots,x_{n-k+1}) \\ &= n! \sum_{j_1 + 2 j_2 + \cdots + n j_n = n} \prod_{i=1}^n \frac{x_i^{j_i}}{(i!)^{j_i}\, j_i!} \end{align}
is called the ''n''th ''complete exponential Bell polynomial''.

Ordinary Bell polynomials: likewise, the partial ''ordinary'' ...
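The definition can be evaluated literally for small ''n'' by enumerating the admissible sequences ''j_1'', ..., ''j_{n-k+1}'' and summing the product form term by term. The sketch below does exactly that with exact rational arithmetic; it is exponential in ''n'' and meant only as an illustration, and the helper names are hypothetical. As a check it uses the known fact that with every x_i = 1, B_{n,k} equals the Stirling number of the second kind S(n, k) and B_n equals the Bell number.

```python
from fractions import Fraction
from math import factorial


def _index_sequences(length, k, n):
    """Yield every tuple (j_1, ..., j_length) of non-negative integers with
    j_1 + ... + j_length = k and 1*j_1 + 2*j_2 + ... + length*j_length = n."""
    def rec(i, rem_k, rem_n, acc):
        if i > length:
            if rem_k == 0 and rem_n == 0:
                yield tuple(acc)
            return
        for j in range(min(rem_k, rem_n // i) + 1):
            acc.append(j)
            yield from rec(i + 1, rem_k - j, rem_n - i * j, acc)
            acc.pop()
    yield from rec(1, k, n, [])


def partial_bell(n, k, x):
    """B_{n,k}(x_1, ..., x_{n-k+1}) for 1 <= k <= n; x[0] holds x_1."""
    total = Fraction(0)
    for js in _index_sequences(n - k + 1, k, n):
        term = Fraction(factorial(n))
        for i, j in enumerate(js, start=1):
            # factor x_i^{j_i} / ((i!)^{j_i} j_i!) from the product form
            term *= Fraction(x[i - 1]) ** j / (factorial(i) ** j * factorial(j))
        total += term
    return total


def complete_bell(n, x):
    """B_n(x_1, ..., x_n) as the sum of the partial polynomials over k = 1..n."""
    return sum((partial_bell(n, k, x) for k in range(1, n + 1)), Fraction(0))


# With every x_i = 1: S(4, 2) = 7 and the Bell number B_4 = 15.
print(partial_bell(4, 2, [1, 1, 1]))   # 7
print(complete_bell(4, [1, 1, 1, 1]))  # 15
```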

Faà di Bruno's Formula

The Federal Aviation Administration (FAA) is a U.S. federal government agency within the U.S. Department of Transportation that regulates civil aviation in the United States and surrounding international waters. Its powers include air traffic control, certification of personnel and aircraft, setting standards for airports, and protection of U.S. assets during the launch or re-entry of commercial space vehicles. Powers over neighboring international waters were delegated to the FAA by authority of the International Civil Aviation Organization. The FAA was created as the Federal Aviation Agency, replacing the Civil Aeronautics Administration (CAA). In 1967, the FAA became part of the newly formed U.S. Department of Transportation and was renamed the Federal Aviation Administration.

Major functions. The FAA's roles include:
*Regulating U.S. commercial space transportation
*Regulating air navigation facilities' geometric and flight inspection standards
*Encouraging and deve ...

Power Series

In mathematics, a power series (in one variable) is an infinite series of the form \sum_{n=0}^\infty a_n \left(x - c\right)^n = a_0 + a_1 (x - c) + a_2 (x - c)^2 + \dots where ''a_n'' represents the coefficient of the ''n''th term and ''c'' is a constant called the ''center'' of the series. Power series are useful in mathematical analysis, where they arise as Taylor series of infinitely differentiable functions. In fact, Borel's theorem implies that every power series is the Taylor series of some smooth function. In many situations, the center ''c'' is equal to zero, for instance for Maclaurin series. In such cases, the power series takes the simpler form \sum_{n=0}^\infty a_n x^n = a_0 + a_1 x + a_2 x^2 + \dots. The partial sums of a power series are polynomials; the partial sums of the Taylor series of an analytic function are a sequence of converging polynomial approximations to the function at the center; and a converging power series can be seen as a kind of generalized polynom ...
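Because each partial sum is a polynomial in (x − c), it can be evaluated with Horner's rule. The sketch below evaluates partial sums of the Maclaurin series of exp (center c = 0, a_n = 1/n!) at x = 1 and shows them approaching e as more terms are kept. The function name partial_sum is hypothetical and floating-point arithmetic is assumed.

```python
import math


def partial_sum(coeffs, x, c=0.0):
    """Evaluate the partial sum sum_{n=0}^{N-1} coeffs[n] * (x - c)**n.

    Horner's rule nests the polynomial as a_0 + t(a_1 + t(a_2 + ...)) with t = x - c.
    """
    t = x - c
    result = 0.0
    for a in reversed(coeffs):
        result = result * t + a
    return result


# Maclaurin series of exp (center c = 0): a_n = 1 / n!
exp_coeffs = [1 / math.factorial(n) for n in range(20)]
for terms in (2, 5, 20):
    print(terms, partial_sum(exp_coeffs[:terms], 1.0))
print(math.e)
```

With 2 terms the partial sum is exactly 2.0; with 20 terms it agrees with math.e to within floating-point rounding, illustrating the convergence of the polynomial approximations.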

Monomial

In mathematics, a monomial is, roughly speaking, a polynomial which has only one term. Two definitions of a monomial may be encountered:
# A monomial, also called a power product or primitive monomial, is a product of powers of variables with nonnegative integer exponents, or, in other words, a product of variables, possibly with repetitions. For example, x^2yz^3=xxyzzz is a monomial. The constant 1 is a primitive monomial, being equal to the empty product and to x^0 for any variable x. If only a single variable x is considered, this means that a monomial is either 1 or a power x^n of x, with n a positive integer. If several variables are considered, say, x, y, z, then each can be given an exponent, so that any monomial is of the form x^a y^b z^c with a, b, c non-negative integers (note that any exponent 0 makes the corresponding factor equal to 1).
# A monomial in the first sense multiplied by a nonzero constant, called the coefficient of the monomial. A primitive monomial ...
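One common way to handle primitive monomials in a fixed list of variables (an assumed representation, not something the text prescribes) is to store only the exponent vector: over (x, y, z), x^2yz^3 becomes (2, 1, 3) and the constant 1 becomes (0, 0, 0). Multiplying two monomials then adds their exponents. The helper names below are hypothetical.

```python
from math import prod


def monomial_product(m1, m2):
    """Multiply two primitive monomials given as exponent tuples over the same variables."""
    return tuple(a + b for a, b in zip(m1, m2))


def total_degree(m):
    """Sum of the exponents, e.g. 6 for x^2 y z^3."""
    return sum(m)


def evaluate(m, point):
    """Evaluate the monomial with exponent tuple m at the given variable values."""
    return prod(v ** e for v, e in zip(point, m))


x2yz3 = (2, 1, 3)                      # x^2 y z^3 over (x, y, z)
print(total_degree(x2yz3))             # 6
print(monomial_product(x2yz3, (0, 1, 0)))  # (2, 2, 3), i.e. x^2 y^2 z^3
print(evaluate(x2yz3, (2, 3, 1)))      # 12
```

A monomial in the second sense could be represented as a pair of a nonzero coefficient and such an exponent tuple.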

Complete Homogeneous Symmetric Polynomial

In mathematics, specifically in algebraic combinatorics and commutative algebra, the complete homogeneous symmetric polynomials are a specific kind of symmetric polynomials. Every symmetric polynomial can be expressed as a polynomial expression in complete homogeneous symmetric polynomials.

Definition. The complete homogeneous symmetric polynomial of degree ''k'' in ''n'' variables ''X_1'', ..., ''X_n'', written ''h_k'' for ''k'' = 0, 1, 2, ..., is the sum of all monomials of total degree ''k'' in the variables. Formally,
:h_k (X_1, X_2, \dots,X_n) = \sum_{1 \leq i_1 \leq i_2 \leq \cdots \leq i_k \leq n} X_{i_1} X_{i_2} \cdots X_{i_k}.
The formula can also be written as:
:h_k (X_1, X_2, \dots,X_n) = \sum_{l_1+l_2+\cdots+l_n=k,\ l_i \geq 0} X_{1}^{l_1} X_{2}^{l_2} \cdots X_{n}^{l_n}.
Indeed, ''l_p'' is just the multiplicity of ''p'' in the sequence ''i_k''. The first few of these polynomials are
:\begin{align} h_0 (X_1, X_2, \dots,X_n) &= 1, \\ h_1 (X_1, X_2, \dots,X_n) &= \sum_{1 \leq j \leq n} X_j, \\ h_2 (X_1, X_2, \dots,X_n) &= \sum_{1 \leq j \leq k \leq n} X_j X_k, \\ h_3 (X_1, X_2, \dots,X_n) &= \sum_{1 \leq j \leq k \leq l \leq n} X_j X_k X_l. \end{align}
Thus, for each nonnegative integer ''k'', there exists exactly one complete homogeneous symmetric polynomi ...
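The first formula sums over non-decreasing index sequences i_1 ≤ ... ≤ i_k, which is exactly what Python's itertools.combinations_with_replacement enumerates (each multiset of k positions once). The sketch below uses that to evaluate h_k at given numbers; the function name complete_homogeneous is hypothetical.

```python
from itertools import combinations_with_replacement
from math import prod


def complete_homogeneous(k, xs):
    """Evaluate h_k(X_1, ..., X_n) at the numbers xs.

    combinations_with_replacement works on positions, so it yields one tuple per
    non-decreasing index sequence i_1 <= ... <= i_k, even if xs has repeated values.
    For k = 0 it yields a single empty tuple, whose product is 1, matching h_0 = 1.
    """
    return sum(prod(combo) for combo in combinations_with_replacement(xs, k))


print(complete_homogeneous(2, [1, 2, 3]))  # 25
```

Here h_2(1, 2, 3) = 1·1 + 1·2 + 1·3 + 2·2 + 2·3 + 3·3 = 25, one term for each monomial of total degree 2.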

Fundamental Theorem Of Symmetric Polynomials

In mathematics, specifically in commutative algebra, the elementary symmetric polynomials are one type of basic building block for symmetric polynomials, in the sense that any symmetric polynomial can be expressed as a polynomial in elementary symmetric polynomials. That is, any symmetric polynomial is given by an expression involving only additions and multiplications of constants and elementary symmetric polynomials. There is one elementary symmetric polynomial of degree ''d'' in ''n'' variables for each positive integer ''d'' ≤ ''n'', and it is formed by adding together all distinct products of ''d'' distinct variables.

Definition. The elementary symmetric polynomials in ''n'' variables ''X_1'', ..., ''X_n'', written ''e_k''(''X_1'', ..., ''X_n'') for ''k'' = 1, ..., ''n'', are defined by
:\begin{align} e_1 (X_1, X_2, \dots, X_n) &= \sum_{1 \leq a \leq n} X_a,\\ e_2 (X_1, X_2, \dots, X_n) &= \sum_{1 \leq a < b \leq n} X_a X_b,\\ e_3 (X_1, X_2, \dots, X_n) &= \sum_{1 \leq a < b < c \leq n} X_a X_b X_c, \end{align}
and so forth, ending with
: e_n (X_1, X_2, \dots,X_n) = X_1 X_2 \cdots X_n.
In general, for ''k'' ≥ 0 we define
: e_k (X_1 , \ldots , X_n )=\su ...
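All ''e_k'' can be computed at once from the expansion ∏_i (1 + X_i t) = Σ_k e_k t^k: multiplying the running product by one more factor (1 + x t) updates each coefficient as e_k ← e_k + x·e_{k−1}. This generating-function approach is a standard technique assumed here rather than taken from the excerpt, and the function name is hypothetical.

```python
def elementary_symmetric(xs):
    """Return [e_0, e_1, ..., e_n] evaluated at the numbers xs, with e_0 = 1.

    Multiplying prod_i (1 + x_i t) by one more factor (1 + x t) maps
    e_k -> e_k + x * e_{k-1}; iterating k downward lets the update run in place.
    """
    e = [1]
    for x in xs:
        e.append(0)
        for k in range(len(e) - 1, 0, -1):
            e[k] += x * e[k - 1]
    return e


print(elementary_symmetric([1, 2, 3]))  # [1, 6, 11, 6]
```

The result matches (x − 1)(x − 2)(x − 3) = x^3 − 6x^2 + 11x − 6, whose coefficients are the e_k with alternating signs, and the loop uses O(n^2) multiplications instead of summing all products of distinct variables directly.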

Faddeev–LeVerrier Algorithm

In mathematics (linear algebra), the Faddeev–LeVerrier algorithm is a recursive method to calculate the coefficients of the characteristic polynomial p_A(\lambda)=\det (\lambda I_n - A) of a square matrix ''A'', named after Dmitry Konstantinovich Faddeev and Urbain Le Verrier. Calculation of this polynomial yields the eigenvalues of ''A'' as its roots; as a matrix polynomial in the matrix ''A'' itself, it vanishes by the Cayley–Hamilton theorem. Computing the characteristic polynomial directly from the definition of the determinant is computationally cumbersome insofar as it introduces a new symbolic quantity \lambda; by contrast, the Faddeev–LeVerrier algorithm works directly with the coefficients of the matrix ''A''. The algorithm has been independently rediscovered several times in different forms. It was first published in 1840 by Urbain Le Verrier, subsequently redeveloped by P. Horst and Jean-Marie Souriau, in its present form by Faddeev and Sominsky, and further by J. S. Frame, and others. ...
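The excerpt stops before the recurrence itself. The sketch below uses the form in which the algorithm is commonly stated, taken here as an assumption: M_0 = 0, c_n = 1, and for k = 1, ..., n, M_k = A M_{k−1} + c_{n−k+1} I and c_{n−k} = −tr(A M_k)/k. It works only with matrix products and traces, with no symbolic λ. Exact fractions keep the division by k exact; the function names are hypothetical.

```python
from fractions import Fraction


def _matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def faddeev_leverrier(A):
    """Return [c_n, c_{n-1}, ..., c_0] with det(lambda I - A) = sum_k c_k lambda^k.

    Recurrence: M_0 = 0, c_n = 1, and for k = 1..n
        M_k     = A M_{k-1} + c_{n-k+1} I
        c_{n-k} = -tr(A M_k) / k
    """
    n = len(A)
    A = [[Fraction(v) for v in row] for row in A]
    M = [[Fraction(0)] * n for _ in range(n)]  # M_0 = 0
    coeffs = [Fraction(1)]                      # c_n = 1
    for k in range(1, n + 1):
        AM = _matmul(A, M)
        # add c_{n-k+1}, the most recently computed coefficient, on the diagonal
        M = [[AM[i][j] + (coeffs[-1] if i == j else 0) for j in range(n)]
             for i in range(n)]
        AMk = _matmul(A, M)
        coeffs.append(-sum(AMk[i][i] for i in range(n)) / k)
    return coeffs


print([str(c) for c in faddeev_leverrier([[1, 2], [3, 4]])])  # ['1', '-5', '-2']
```

For the 2 × 2 example the result is λ^2 − 5λ − 2, in agreement with λ^2 − tr(A)λ + det(A), since tr(A) = 5 and det(A) = −2.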

Adjugate Matrix

In linear algebra, the adjugate or classical adjoint of a square matrix ''A'', adj(''A''), is the transpose of its cofactor matrix. It is occasionally known as the adjunct matrix, or "adjoint", though that term normally refers to a different concept, the adjoint operator, which for a matrix is the conjugate transpose. The product of a matrix with its adjugate gives a diagonal matrix (entries not on the main diagonal are zero) whose diagonal entries are the determinant of the original matrix:
:\mathbf{A} \operatorname{adj}(\mathbf{A}) = \det(\mathbf{A}) \mathbf{I},
where ''I'' is the identity matrix of the same size as ''A''. Consequently, the multiplicative inverse of an invertible matrix can be found by dividing its adjugate by its determinant.

Definition. The adjugate of ''A'' is the transpose of the cofactor matrix ''C'' of ''A'',
:\operatorname{adj}(\mathbf{A}) = \mathbf{C}^\mathsf{T}.
In more detail, suppose ''R'' is a (unital) commutative ring and ''A'' is an ''n'' × ''n'' matrix with entries from ''R''. The (''i'', ''j'')-''minor'' of ''A'', denoted ''M_{ij}'', is the determinant of the (''n'' − 1) × (''n'' − 1) matrix that ...
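For small matrices the definition can be followed literally: compute each minor M_ij, attach the sign (−1)^(i+j) to get the cofactor matrix C, and transpose. The sketch below does this with a cofactor (Laplace) expansion for the determinant, which takes exponential time and is meant only as an illustration; the function names are hypothetical. It then checks the identity A adj(A) = det(A) I.

```python
def _minor(A, i, j):
    """Matrix A with row i and column j deleted."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Determinant by cofactor expansion along the first row; the 0 x 0 determinant is 1."""
    if not A:
        return 1
    return sum((-1) ** j * A[0][j] * det(_minor(A, 0, j)) for j in range(len(A)))


def adjugate(A):
    """Transpose of the cofactor matrix C, where C[i][j] = (-1)^(i+j) * M_ij."""
    n = len(A)
    C = [[(-1) ** (i + j) * det(_minor(A, i, j)) for j in range(n)] for i in range(n)]
    return [list(col) for col in zip(*C)]


A = [[1, 2], [3, 4]]
adj = adjugate(A)
print(adj)  # [[4, -2], [-3, 1]]
# A adj(A) should equal det(A) I, and det(A) = -2 here.
print([[sum(A[i][t] * adj[t][j] for t in range(2)) for j in range(2)] for i in range(2)])  # [[-2, 0], [0, -2]]
```

Only ring operations (addition, subtraction, multiplication) are used, which reflects why the adjugate is defined for matrices over any commutative ring, while the inverse additionally requires dividing by the determinant.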

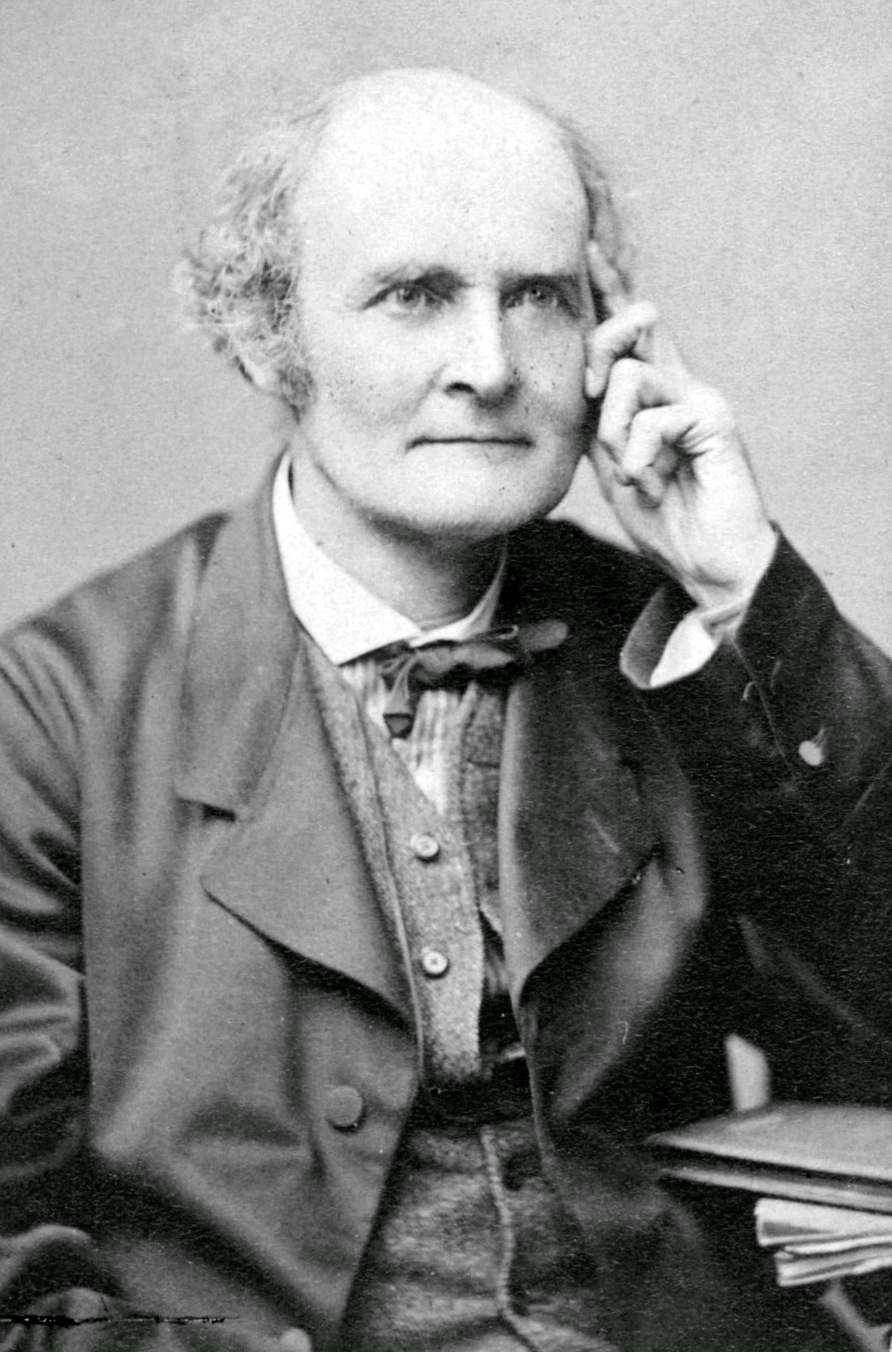

Cayley–Hamilton Theorem

In linear algebra, the Cayley–Hamilton theorem (named after the mathematicians Arthur Cayley and William Rowan Hamilton) states that every square matrix over a commutative ring (such as the real or complex numbers or the integers) satisfies its own characteristic equation. The characteristic polynomial of an ''n'' × ''n'' matrix ''A'' is defined as p_A(\lambda)=\det(\lambda I_n-A), where \det is the determinant operation, \lambda is a variable scalar element of the base ring, and I_n is the ''n'' × ''n'' identity matrix. Since each entry of the matrix (\lambda I_n-A) is either constant or linear in \lambda, the determinant of (\lambda I_n-A) is a degree-''n'' monic polynomial in \lambda, so it can be written as p_A(\lambda) = \lambda^n + c_{n-1}\lambda^{n-1} + \cdots + c_1\lambda + c_0. By replacing the scalar variable \lambda with the matrix ''A'', one can define an analogous matrix polynomial expression, p_A(A) = A^n + c_{n-1}A^{n-1} + \cdots + c_1A + c_0I_n. (Here, A is the given matrix, not a variable, unlike \lambda, so p_A(A) is a constant rather than ...
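For n = 2 the characteristic polynomial is p_A(λ) = λ^2 − tr(A)λ + det(A), so c_1 = −tr(A) and c_0 = det(A). The sketch below substitutes ''A'' for λ and confirms that p_A(A) is the zero matrix for a sample integer matrix. It checks one instance of the theorem and is not a proof; the function names are hypothetical.

```python
def _matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def cayley_hamilton_residual_2x2(A):
    """Return p_A(A) = A^2 + c_1 A + c_0 I for a 2 x 2 matrix A.

    For n = 2, det(lambda I - A) = lambda^2 - tr(A) lambda + det(A),
    so c_1 = -tr(A) and c_0 = det(A).
    """
    (a, b), (c, d) = A
    c1 = -(a + d)
    c0 = a * d - b * c
    A2 = _matmul(A, A)
    identity = [[1, 0], [0, 1]]
    return [[A2[i][j] + c1 * A[i][j] + c0 * identity[i][j] for j in range(2)]
            for i in range(2)]


print(cayley_hamilton_residual_2x2([[1, 2], [3, 4]]))  # [[0, 0], [0, 0]]
```

Integer entries keep the arithmetic exact, so the zero result is not an artifact of floating-point rounding.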

NC (complexity)

In computational complexity theory, the class NC (for "Nick's Class") is the set of decision problems decidable in polylogarithmic time on a parallel computer with a polynomial number of processors. In other words, a problem with input size ''n'' is in NC if there exist constants ''c'' and ''k'' such that it can be solved in time O((\log n)^c) using O(n^k) parallel processors. Stephen Cook coined the name "Nick's class" after Nick Pippenger, who had done extensive research on circuits with polylogarithmic depth and polynomial size (Arora & Barak 2009, p. 120). As in the case of circuit complexity theory, the class usually has an extra constraint that the circuit family must be ''uniform'' (see below). Just as the class P can be thought of as the tractable problems (Cobham's thesis), so NC can be thought of as the problems that can be efficiently solved on a parallel computer (Arora & Barak 2009, p. 118). NC is a subset of P because polylogarithmic parallel computations can be simulated by polynomi ...
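A tree-structured reduction is a standard illustration of the kind of computation NC captures: n numbers can be summed in ⌈log₂ n⌉ rounds, and within each round all pairwise additions are independent, so about n/2 processors could perform a round in one step. The sketch below simulates those rounds sequentially and counts them. The function tree_sum is hypothetical and only counts rounds; it does not model a real parallel machine or the uniformity condition.

```python
def tree_sum(values):
    """Sum a non-empty sequence by pairwise rounds and return (total, rounds).

    Each round pairs neighbours (an odd element out is carried over unchanged).
    The additions within a round do not depend on each other, so on a parallel
    machine each round could take constant time; there are ceil(log2 n) rounds.
    """
    level = list(values)
    rounds = 0
    while len(level) > 1:
        level = [level[i] + level[i + 1] if i + 1 < len(level) else level[i]
                 for i in range(0, len(level), 2)]
        rounds += 1
    return level[0], rounds


print(tree_sum(range(1, 9)))   # (36, 3)
print(tree_sum(range(1, 10)))  # (45, 4)
```

A sequential loop would need n − 1 dependent additions, whereas the tree needs only a logarithmic number of rounds with polynomially many processors, which is the trade-off the definition of NC formalizes.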