Multiple Instruction, Multiple Data

In computing, multiple instruction, multiple data (MIMD) is a technique employed to achieve parallelism. Machines using MIMD have a number of processor cores that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data. MIMD architectures may be used in application areas such as computer-aided design/computer-aided manufacturing, simulation, modeling, and communication switches. MIMD machines fall into either the shared memory or the distributed memory category, a classification based on how the processors access memory. Shared memory machines may be of the bus-based, extended, or hierarchical type. Distributed memory machines may have hypercube or mesh interconnection schemes.

An example of a MIMD system is the Intel Xeon Phi, descended from the Larrabee microarchitecture. These processors have multiple processing cores (up to 61 as of 2015) that can execute different ...
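
The defining property described above, different instruction streams working on different data at the same time, can be sketched in a few lines of Python. This is only an illustration of the program structure, not of real hardware: the function names (`total`, `longest`, `count_even`, `run_mimd`) are hypothetical, and CPython threads share one interpreter lock, so the workers here are asynchronous but not truly parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def total(values):
    """First instruction stream: add up numbers."""
    return sum(values)


def longest(words):
    """Second instruction stream: find the longest string."""
    return max(words, key=len)


def count_even(values):
    """Third instruction stream: count even numbers."""
    return sum(1 for v in values if v % 2 == 0)


def run_mimd(tasks):
    """Run each (function, data) pair on its own worker.

    Every worker executes a different function on a different data set,
    and results are collected in the order the tasks were given.
    """
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = [pool.submit(fn, data) for fn, data in tasks]
        return [f.result() for f in futures]


tasks = [
    (total, [1, 2, 3, 4]),
    (longest, ["bus", "mesh", "hypercube"]),
    (count_even, range(10)),
]
results = run_mimd(tasks)
print(results)  # prints [10, 'hypercube', 5]
```

In a SIMD design, by contrast, every worker would apply the same function to its own slice of the data.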

Software

Software consists of computer programs that instruct the execution of a computer. Software also includes design documents and specifications. The history of software is closely tied to the development of digital computers in the mid-20th century. Early programs were written in the machine language specific to the hardware. The introduction of high-level programming languages in 1958 allowed for more human-readable instructions, making software development easier and more portable across different computer architectures. Software in a programming language is run through a compiler or interpreter to execute on the architecture's hardware. Over time, software has become complex, owing to developments in networking, operating systems, and databases. Software can generally be categorized into two main types: (1) operating systems, which manage hardware resources and provide services for applicat ...

Non-Uniform Memory Access

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). NUMA is beneficial for workloads with high memory locality of reference and low lock contention, because a processor may operate on a subset of memory mostly or entirely within its own cache node, reducing traffic on the memory bus. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Te ...
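
The benefit of locality described above can be made concrete with a simple weighted-average model. This is a rough sketch, not a measurement: the function name `average_access_time` is hypothetical, and the latencies used (100 ns local, 200 ns remote) are invented purely for illustration.

```python
def average_access_time(local_fraction, local_ns, remote_ns):
    """Expected latency of one memory access under a two-level NUMA model.

    local_fraction is the share of accesses served from the processor's
    own local memory; the remainder go to non-local memory.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


# Hypothetical latencies, chosen only to show the trend.
LOCAL_NS = 100.0
REMOTE_NS = 200.0

for fraction in (0.0, 0.5, 0.9, 1.0):
    latency = average_access_time(fraction, LOCAL_NS, REMOTE_NS)
    print(f"{fraction:.0%} local accesses: about {latency:.0f} ns on average")
```

Under these assumed numbers, a workload that keeps most of its accesses local approaches the local latency, which is why placement of data relative to the processor matters on NUMA systems.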

Symmetric Multiprocessing

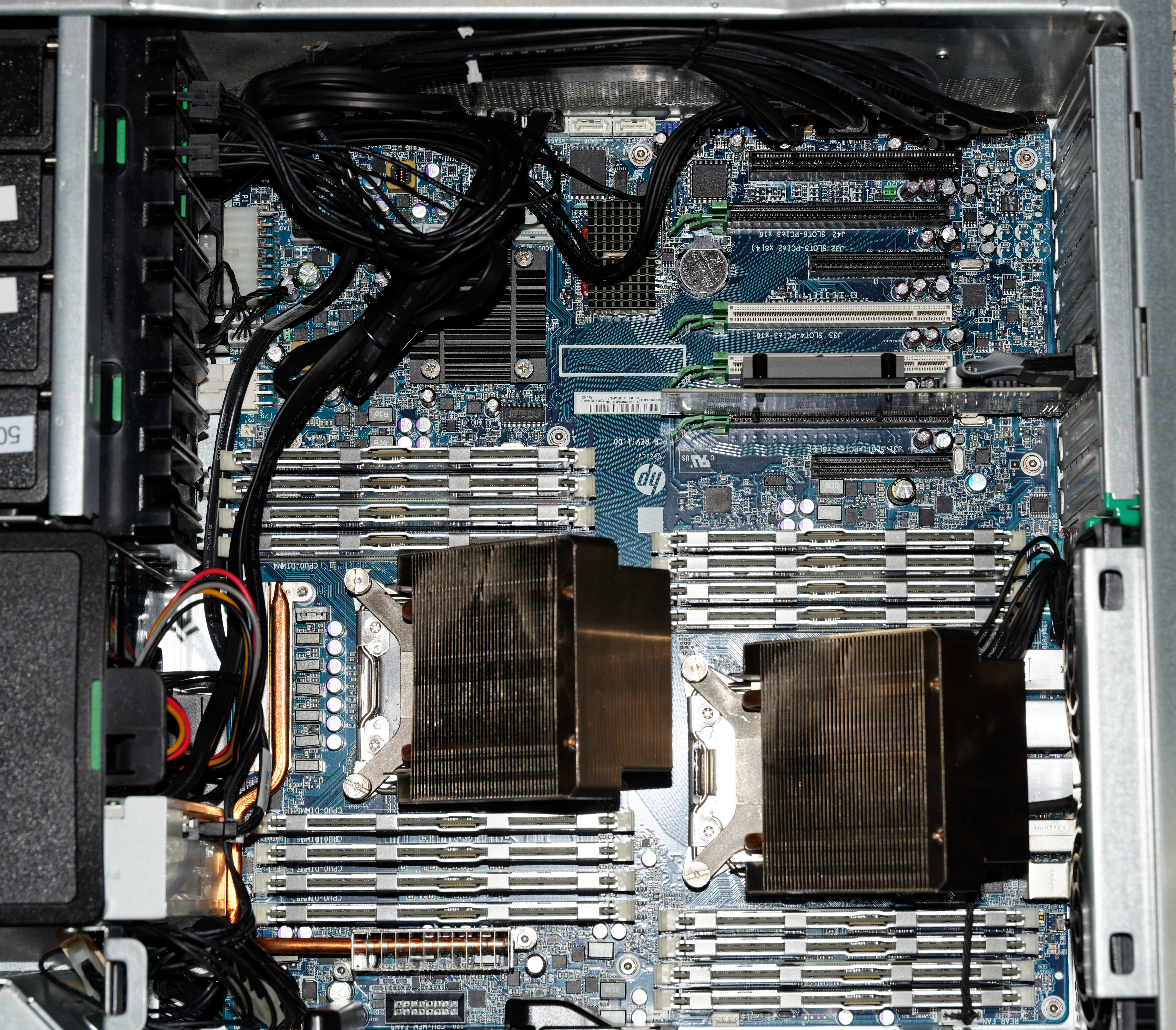

Symmetric multiprocessing or shared-memory multiprocessing (SMP) involves a multiprocessor computer hardware and software architecture where two or more identical processors are connected to a single, shared main memory, have full access to all input and output devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes. Most multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors. Professor John D. Kubiatowicz considers traditional SMP systems to contain processors without caches. Culler and Pal-Singh, in their 1998 book "Parallel Computer Architecture: A Hardware/Software Approach", mention: "The term SMP is widely used but causes a bit of confusion. ... The more precise description of what is intended by SMP is a shared memory multiprocessor where the cost of accessing a memory location ...

Hypercube

In geometry, a hypercube is an *n*-dimensional analogue of a square ($n = 2$) and a cube ($n = 3$); the special case for $n = 4$ is known as a *tesseract*. It is a closed, compact, convex figure whose 1-skeleton consists of groups of opposite parallel line segments aligned in each of the space's dimensions, perpendicular to each other and of the same length. A unit hypercube's longest diagonal in *n* dimensions is equal to $\sqrt{n}$. An *n*-dimensional hypercube is more commonly referred to as an *n*-cube or sometimes as an *n*-dimensional cube. The term measure polytope (originally from Elte, 1912) is also used, notably in the work of H. S. M. Coxeter, who also labels the hypercubes the $\gamma_n$ polytopes. The hypercube is the special case of a hyperrectangle (also called an *n*-orthotope). A *unit hypercube* is a hypercube whose side has length one unit. Often, the hypercube whose corners (or *vertices*) are the $2^n$ points in $\mathbb{R}^n$ with each coordinate equal to 0 or 1 i ...
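
The vertex description and the diagonal length above can be checked numerically. The following is a minimal sketch with hypothetical helper names (`unit_hypercube_vertices`, `edges`, `longest_diagonal`); it treats two vertices as joined by an edge when they differ in exactly one coordinate.

```python
import math
from itertools import product


def unit_hypercube_vertices(n):
    """All points of R^n whose coordinates are each 0 or 1."""
    return list(product((0, 1), repeat=n))


def edges(vertices):
    """Pairs of vertices that differ in exactly one coordinate."""
    return [
        (a, b)
        for i, a in enumerate(vertices)
        for b in vertices[i + 1:]
        if sum(x != y for x, y in zip(a, b)) == 1
    ]


def longest_diagonal(n):
    """Distance between the opposite corners (0, ..., 0) and (1, ..., 1)."""
    return math.dist((0,) * n, (1,) * n)


# The tesseract (n = 4) as an example.
n = 4
vertices = unit_hypercube_vertices(n)
print(len(vertices))         # 2**4 = 16 vertices
print(len(edges(vertices)))  # 4 * 2**3 = 32 edges
print(math.isclose(longest_diagonal(n), math.sqrt(n)))
```

The same one-coordinate-difference rule is what makes the hypercube attractive as an interconnection scheme: each of the $2^n$ nodes has exactly *n* direct neighbors.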

Computer Cluster

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing. The components of a cluster are usually connected to each other through fast local area networks, with each node (a computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems or different hardware can be used on each computer. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as ...

Massively Parallel (computing)

Massively may refer to: mass, an intrinsic property of a physical body, traditionally believed to be related to the quantity of matter in a body until the discovery of the atom and particle physi ...; or Massively (blog), a blog about MMOs.

Connection Machine

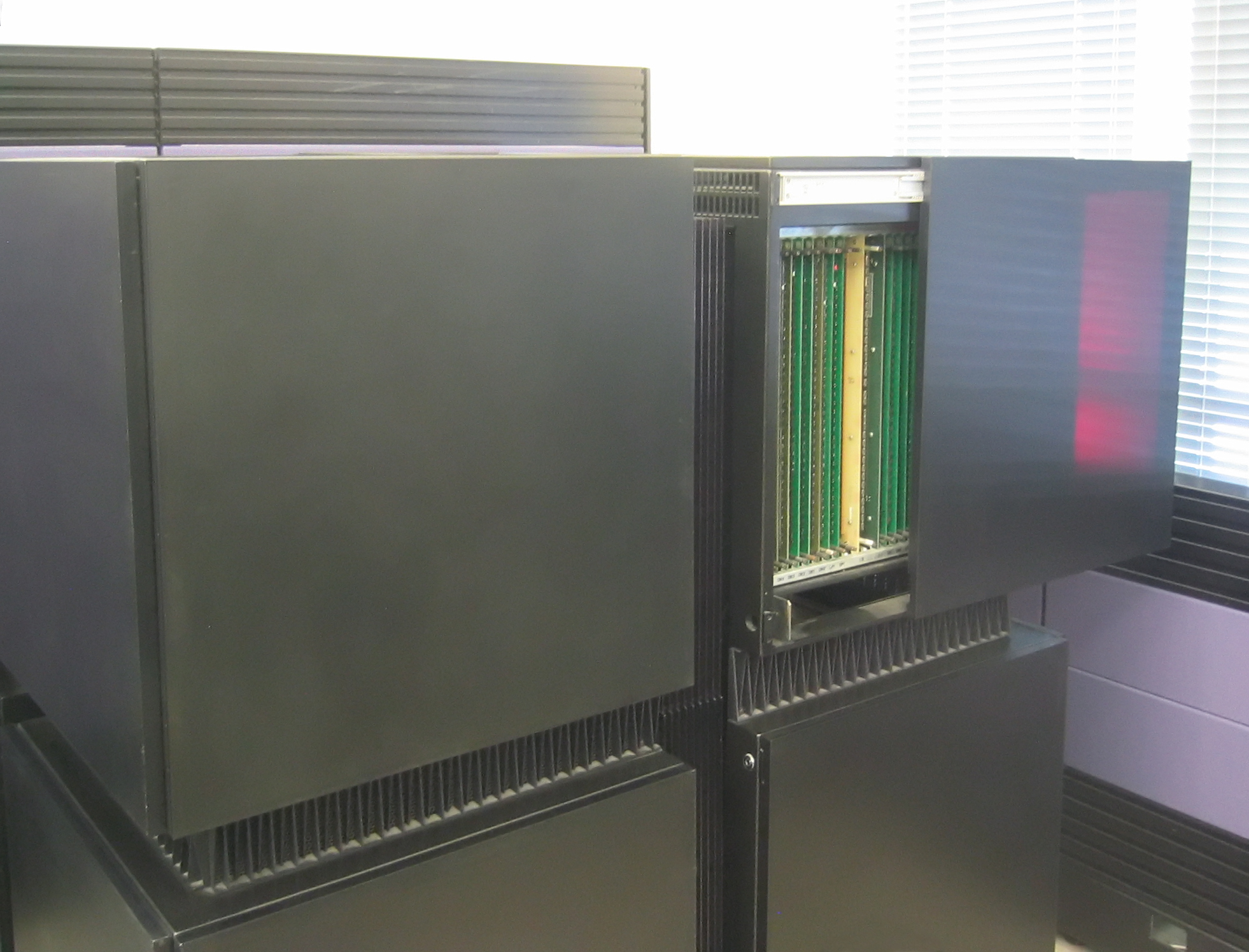

The Connection Machine (CM) is a member of a series of massively parallel supercomputers sold by Thinking Machines Corporation. The idea for the Connection Machine grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at the Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with the CM-1, the machines were originally intended for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science.

Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electric ...

Fat Tree

The fat tree network is a universal network for provably efficient communication. It was invented by Charles E. Leiserson of MIT in 1985. k-ary n-trees, the type of fat tree commonly used in most high-performance networks, were initially formalized in 1997. In a tree data structure, every branch has the same thickness (bandwidth), regardless of its place in the hierarchy; the branches are all "skinny" (*skinny* in this context means low-bandwidth). In a fat tree, branches nearer the top of the hierarchy are "fatter" (thicker) than branches further down the hierarchy. In a telecommunications network, the branches are data links; the varied thickness (bandwidth) of the data links allows for more efficient and technology-specific use. Mesh and hypercube topologies have communication requirements that follow a rigid algorithm, and cannot be tailored to specific packaging technologies.

Supercomputers that use a fat tree network include the two fast ...
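
The difference between "skinny" and "fat" branches can be illustrated with a small calculation. The sketch below makes a simplifying assumption that is not stated in the text above: in the fat variant, each upward link is given the combined bandwidth of the links beneath it, so capacity multiplies by the tree's arity at every level. The function names `link_bandwidths` and `aggregate_per_level` are hypothetical.

```python
def link_bandwidths(levels, leaf_bandwidth, arity=2, fat=True):
    """Bandwidth of a single upward link at each level, leaves first.

    Assumption for the fat variant: a link's bandwidth is the sum of the
    bandwidths of the `arity` links directly below it.
    """
    per_link = []
    current = leaf_bandwidth
    for _ in range(levels):
        per_link.append(current)
        if fat:
            current *= arity
    return per_link


def aggregate_per_level(levels, leaf_bandwidth, arity=2, fat=True):
    """Total bandwidth crossing each level of a complete tree.

    Level 0 has arity**levels links (one per leaf); each level above it
    has `arity` times fewer links.
    """
    per_link = link_bandwidths(levels, leaf_bandwidth, arity, fat)
    return [per_link[l] * arity ** (levels - l) for l in range(levels)]


print(link_bandwidths(3, 1, fat=True))       # [1, 2, 4]
print(aggregate_per_level(3, 1, fat=True))   # [8, 8, 8]
print(aggregate_per_level(3, 1, fat=False))  # [8, 4, 2]
```

Under this assumption the fat tree keeps the total bandwidth constant toward the root, while the skinny tree halves it at every level and so becomes a bottleneck near the top.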

Cache-only Memory Architecture

Cache-only memory architecture (COMA) is a computer memory organization for use in multiprocessors in which the local memories (typically DRAM) at each node are used as cache. This is in contrast to using the local memories as actual main memory, as in NUMA organizations. In NUMA, each address in the global address space is typically assigned a fixed home node. When processors access some data, a copy is made in their local cache, but space remains allocated in the home node. With COMA, by contrast, there is no home node. An access from a remote node may cause that data to migrate. Compared to NUMA, this reduces the number of redundant copies and may allow more efficient use of the memory resources. On the other hand, it raises the problems of how to find a particular piece of data (there is no longer a home node) and what to do if a local memory fills up (migrating some data into the local memory then requires evicting other data, which has no home to go to). Hardware memory coheren ...
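
The contrast between a fixed home node and migrating data can be sketched with two toy classes. This is a deliberately simplified model, not a description of any real protocol: the class names `NumaMemory` and `ComaMemory` are hypothetical, capacity limits and eviction are ignored, and the COMA model assumes every remote access migrates the data, whereas the text above only says that it may.

```python
class NumaMemory:
    """Toy NUMA model: every address keeps a fixed home node."""

    def __init__(self, homes):
        self.home = dict(homes)  # address -> home node
        self.cached = {}         # node -> set of remotely cached addresses

    def access(self, node, addr):
        # A remote access creates a cached copy; the home allocation stays.
        if self.home[addr] != node:
            self.cached.setdefault(node, set()).add(addr)
        return self.home[addr]

    def copies(self, addr):
        # One copy at the home node plus one per node caching the address.
        return 1 + sum(addr in held for held in self.cached.values())


class ComaMemory:
    """Toy COMA model: data has no home and moves to the accessing node."""

    def __init__(self, initial):
        self.location = dict(initial)  # address -> node currently holding it

    def access(self, node, addr):
        # Assumption: every access from another node migrates the data.
        self.location[addr] = node
        return node

    def copies(self, addr):
        # Migration, rather than replication, leaves a single copy.
        return 1


ADDR = 0x10
numa = NumaMemory({ADDR: 0})
coma = ComaMemory({ADDR: 0})
for node in (1, 2):
    numa.access(node, ADDR)
    coma.access(node, ADDR)

print(numa.home[ADDR], numa.copies(ADDR))      # 0 3
print(coma.location[ADDR], coma.copies(ADDR))  # 2 1
```

The toy also hints at the lookup problem mentioned above: in the NUMA model `home` never changes, while in the COMA model the current location has to be tracked somewhere as the data moves.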