
Markov Process

In probability theory and statistics, a Markov chain or Markov process is a stochastic process describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs *now*." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). Markov processes are named in honor of the Russian mathematician Andrey Markov. Markov chains have many applications as statistical models of real-world processes. They provide the basis for general stochastic simulation methods known as Markov chain Monte Carlo, which are used for simulating sampling from complex probability distributions, and have found application in areas including Bayesian statistics, biology, chemistry, economics, finance, i ...
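As a rough illustration of the Markov chain Monte Carlo idea mentioned above, here is a minimal random-walk Metropolis sketch. It assumes a standard normal target and a uniform proposal of half-width `step`; the function name `metropolis_normal` and its parameters are hypothetical, chosen only for this example. Each new state is proposed and accepted using only the current state, so the sequence of draws is itself a Markov chain whose stationary distribution is the target.

```python
import math
import random


def metropolis_normal(n_steps, step=2.5, seed=0):
    """Random-walk Metropolis chain targeting the standard normal N(0, 1).

    Returns the list of visited states (one per step, including rejections).
    """
    rng = random.Random(seed)
    x = 0.0
    samples = []
    for _ in range(n_steps):
        # Symmetric proposal: uniform on [x - step, x + step].
        proposal = x + rng.uniform(-step, step)
        # Accept with probability min(1, pi(proposal) / pi(x)), where
        # pi(x) is proportional to exp(-x**2 / 2); work with the log-ratio.
        log_ratio = (x * x - proposal * proposal) / 2.0
        if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
            x = proposal
        # On rejection the chain stays put; either way the next state
        # depends only on the current one.
        samples.append(x)
    return samples


if __name__ == "__main__":
    draws = metropolis_normal(100_000)
    mean = sum(draws) / len(draws)
    var = sum((d - mean) ** 2 for d in draws) / len(draws)
    print(f"sample mean {mean:.3f}, sample variance {var:.3f}")
```

With enough steps the sample mean and variance should land near 0 and 1. The draws are correlated, so they carry less information than the same number of independent samples.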

Continuous-time Markov Chain

A continuous-time Markov chain (CTMC) is a continuous-time stochastic process in which the process stays in each state for an exponentially distributed holding time and then moves to a different state as specified by the probabilities of a stochastic matrix. An equivalent formulation describes the process as changing state according to the least value of a set of exponential random variables, one for each possible state it can move to, with the parameters determined by the current state. An example of a CTMC with three states $\{0, 1, 2\}$ is as follows: the process makes a transition after the amount of time specified by the holding time, an exponential random variable $E_i$, where $i$ is its current state. Each random variable is independent, and $E_0 \sim \text{Exp}(6)$, $E_1 \sim \text{Exp}(12)$ and $E_2 \sim \text{Exp}(18)$. When a transition is to be made, the process moves according to the jump chain, a discrete-time Markov chain with stochastic matrix ...
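The holding-time description above translates directly into a simulation loop. This sketch uses the example's rates 6, 12 and 18, but the jump matrix `JUMP` is a hypothetical stand-in (any stochastic matrix with a zero diagonal would do), and `simulate_ctmc` is an illustrative name, not an established API. Python's `random.expovariate` is parameterized by the rate, so a state with rate 6 has mean holding time 1/6.

```python
import random

# Holding-time rates from the example: E_0 ~ Exp(6), E_1 ~ Exp(12), E_2 ~ Exp(18).
RATES = [6.0, 12.0, 18.0]

# Hypothetical jump-chain matrix with a zero diagonal, so every jump moves to a
# different state. These are illustrative values, not the example's matrix.
JUMP = [
    [0.0, 0.5, 0.5],
    [0.5, 0.0, 0.5],
    [0.5, 0.5, 0.0],
]


def simulate_ctmc(horizon, start=0, seed=0):
    """Return (jump_times, states) for one CTMC path on [0, horizon].

    states[k] is occupied from jump_times[k] until jump_times[k + 1].
    """
    rng = random.Random(seed)
    t, state = 0.0, start
    times, states = [0.0], [start]
    while True:
        # Holding time in the current state: exponential with that state's rate.
        t += rng.expovariate(RATES[state])
        if t > horizon:
            break
        # Next state drawn from the current row of the jump chain.
        state = rng.choices(range(len(RATES)), weights=JUMP[state])[0]
        times.append(t)
        states.append(state)
    return times, states


if __name__ == "__main__":
    times, states = simulate_ctmc(1.0, seed=42)
    for t, s in zip(times, states):
        print(f"t = {t:.4f}: state {s}")
```

Over a long horizon, the average time spent in state 0 per visit should approach 1/6.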

Finance

Finance refers to monetary resources and to the study and discipline of money, currency, assets and liabilities. As a subject of study, it is a field of business administration which studies the planning, organizing, leading, and controlling of an organization's resources to achieve its goals. Based on the scope of financial activities in financial systems, the discipline can be divided into personal, corporate, and public finance. In these financial systems, assets are bought, sold, or traded as financial instruments, such as currencies, loans, bonds, shares, stocks, options, futures, etc. Assets can also be banked, invested, and insured to maximize value and minimize loss. In practice, risks are always present in any financial action and entity. Due ...

Harris Chain

In the mathematical study of stochastic processes, a Harris chain is a Markov chain where the chain returns to a particular part of the state space an unbounded number of times. Harris chains are regenerative processes and are named after Theodore Harris. The theory of Harris chains and Harris recurrence is useful for treating Markov chains on general (possibly uncountably infinite) state spaces. Definition. Let $\{X_n\}$ be a Markov chain on a general state space $\Omega$ with stochastic kernel $K$. The kernel represents a generalized one-step transition probability law, so that $P(X_{n+1} \in C \mid X_n = x) = K(x, C)$ for all states $x$ in $\Omega$ and all measurable sets $C \subseteq \Omega$. The chain $\{X_n\}$ is a *Harris chain* (R. Durrett, *Probability: Theory and Examples*, Thomson, 2005) if there exist $A \subseteq \Omega$, $\varepsilon > 0$, and a probability measure $\rho$ with $\rho(\Omega) = 1$ such that (1) if $\tau_A := \inf\{n \ge 0 : X_n \in A\}$, then $P(\tau_A$ ... $0$, and let $A$ and $\Omega$ be open sets containing $x_0$ and $y_0$ res ...
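To make the hitting time $\tau_A$ concrete, this sketch simulates a hypothetical chain on the real line, the Gaussian autoregression $X_{n+1} = X_n / 2 + Z_n$ with independent standard normal $Z_n$, and records the first $n \ge 0$ at which the chain lands in $A = [-1, 1]$. The kernel, the set $A$ and the function names are assumptions for illustration. The code only checks empirically that $A$ is reached from several starting points; it does not verify the remaining conditions of the definition.

```python
import random


def ar1_step(x, rng):
    """One draw from the hypothetical kernel K(x, .) = N(x / 2, 1)."""
    return 0.5 * x + rng.gauss(0.0, 1.0)


def hitting_time(x0, a=-1.0, b=1.0, max_steps=10_000, rng=None):
    """Return tau_A = inf{n >= 0 : X_n in [a, b]} for a path started at x0.

    Returns None if the path has not entered [a, b] within max_steps steps.
    """
    if rng is None:
        rng = random.Random(0)
    x = x0
    for n in range(max_steps + 1):
        if a <= x <= b:
            return n
        x = ar1_step(x, rng)
    return None


if __name__ == "__main__":
    for x0 in (-50.0, -5.0, 0.0, 5.0, 50.0):
        print(x0, hitting_time(x0, rng=random.Random(1)))
```

Because the drift halves the distance to the origin at every step, paths started far away still enter $A$ after a handful of steps in practice.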

Markov Chains On A Measurable State Space

A Markov chain on a measurable state space is a discrete-time, time-homogeneous Markov chain with a measurable space as state space. History. The definition of Markov chains has evolved during the 20th century. In 1953 the term Markov chain was used for stochastic processes with discrete or continuous index set, living on a countable or finite state space; see Doob or Chung. Since the late 20th century it has become more popular to consider a Markov chain as a stochastic process with discrete index set, living on a measurable state space (Daniel Revuz, *Markov Chains*, 2nd edition, 1984; Rick Durrett, *Probability: Theory and Examples*, 4th edition, 2005). Definition. Denote with $(E, \Sigma)$ a measurable space and with $p$ a Markov kernel with source and target $(E, \Sigma)$. A stochastic process $(X_n)_{n \in \mathbb{N}}$ on $(\Omega, \mathcal{F}, \mathbb{P})$ is called a time-homogeneous Markov chain with Markov kernel $p$ and start distribution $\mu$ if $\mathbb{P}[X_0 \in A_0, X_1 \in A_1, \dots, X_n \in A_n] = \ldots$
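For $n = 1$ the defining identity reads $\mathbb{P}[X_0 \in A_0, X_1 \in A_1] = \int_{A_0} p(y_0, A_1)\,\mu(dy_0)$. The sketch below checks this numerically for a hypothetical choice of ingredients: $E = \mathbb{R}$ with its Borel $\sigma$-algebra, start distribution $\mu = N(0, 1)$, kernel $p(y, \cdot) = N(y/2, 1)$, and intervals $A_0$, $A_1$. It compares a midpoint-rule evaluation of the integral with a Monte Carlo estimate from simulated pairs $(X_0, X_1)$; all function names are illustrative.

```python
import math
import random


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def kernel_prob(y, a, b):
    """p(y, [a, b]) for the hypothetical Gaussian kernel p(y, .) = N(y / 2, 1)."""
    return normal_cdf(b - y / 2) - normal_cdf(a - y / 2)


def exact_two_step(a0, b0, a1, b1, n_grid=20_000):
    """Midpoint-rule value of the integral of p(y0, A1) mu(dy0) over A0 = [a0, b0]."""
    h = (b0 - a0) / n_grid
    total = 0.0
    for k in range(n_grid):
        y0 = a0 + (k + 0.5) * h
        total += kernel_prob(y0, a1, b1) * normal_pdf(y0) * h
    return total


def monte_carlo_two_step(a0, b0, a1, b1, n=200_000, seed=0):
    """Estimate P[X0 in A0, X1 in A1] by sampling X0 ~ mu, then X1 ~ p(X0, .)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x0 = rng.gauss(0.0, 1.0)
        x1 = 0.5 * x0 + rng.gauss(0.0, 1.0)
        if a0 <= x0 <= b0 and a1 <= x1 <= b1:
            hits += 1
    return hits / n


if __name__ == "__main__":
    args = (0.0, 1.0, -0.5, 0.5)
    print(exact_two_step(*args), monte_carlo_two_step(*args))
```

The two numbers should agree up to Monte Carlo noise, which is what the kernel-based formula predicts.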

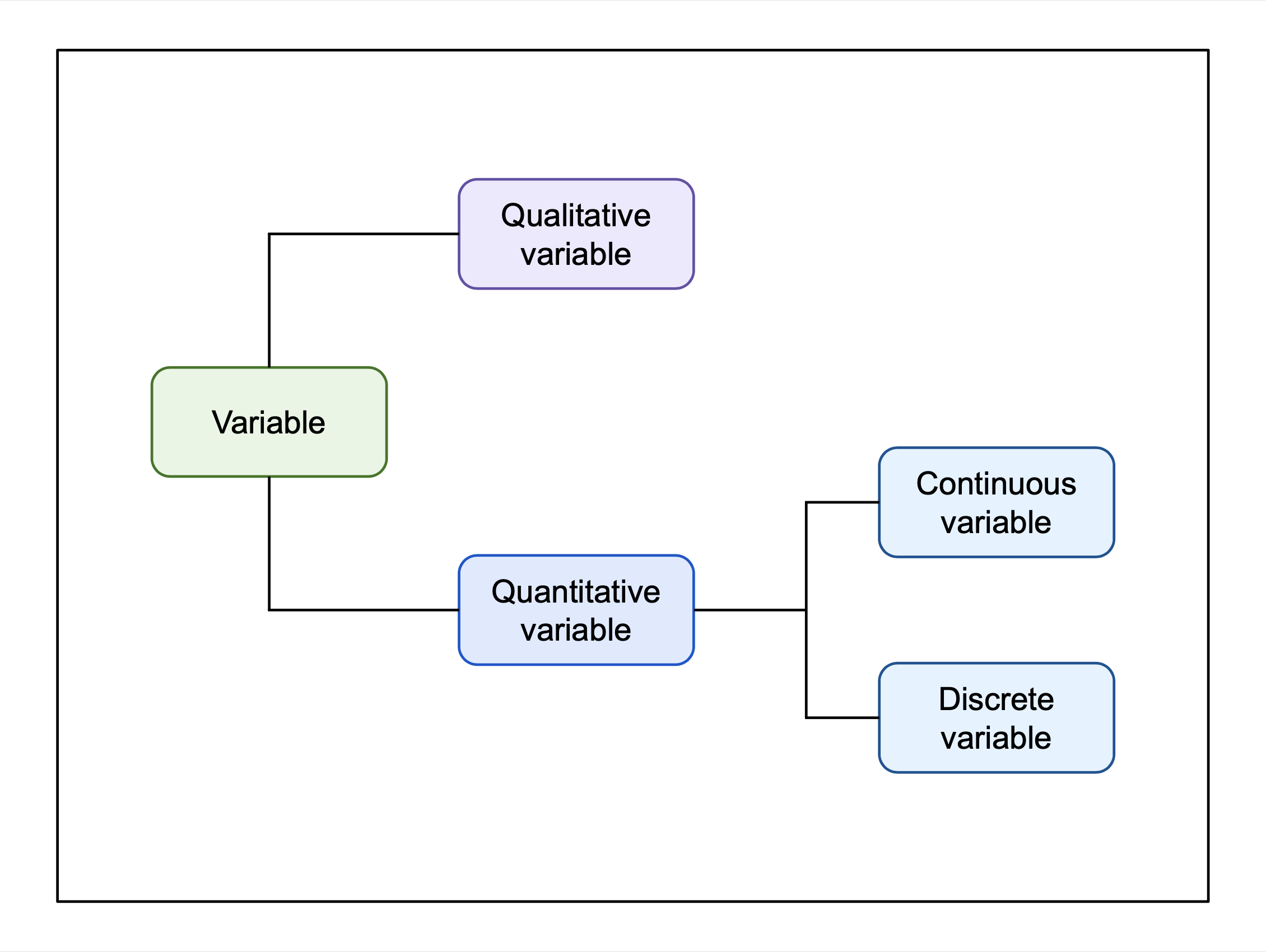

Continuous Or Discrete Variable

In mathematics and statistics, a quantitative variable may be continuous or discrete. If it can take on two real values and all the values between them, the variable is continuous in that interval. If it can take on a value such that there is a non-infinitesimal gap on each side of it containing no values that the variable can take on, then it is discrete around that value. In some contexts, a variable can be discrete in some ranges of the number line and continuous in others. In statistics, continuous and discrete variables are distinct statistical data types which are described with different probability distributions. Continuous variable. A continuous variable is a variable such that between any two of its possible values there are further possible values. For example, a variable over a non-empty range of the real numbers is continuous if it can take on any value in that range. Methods of calculus are often used in problems in which the variables are continuous, for example in continuous opti ...

State Space

In computer science, a state space is a discrete space representing the set of all possible configurations of a system. It is a useful abstraction for reasoning about the behavior of a given system and is widely used in the fields of artificial intelligence and game theory. For instance, the toy problem Vacuum World has a discrete finite state space in which there is a limited set of configurations that the vacuum and dirt can be in. A "counter" system, where states are the natural numbers starting at 1 and are incremented over time, has an infinite discrete state space. The angular position of an undamped pendulum is a continuous (and therefore infinite) state space. Definition. State spaces are useful in computer science as a simple model of machines. Formally, a state space can be defined as a tuple [*N*, *A*, *S*, *G*] where:

* *N* is a set of states
* *A* is a set of arcs connecting the states
* *S* is a nonempty subset of *N* ...
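The tuple definition can be made concrete with the two-cell Vacuum World. In this hypothetical encoding a state is `(agent_location, dirt_left, dirt_right)`, which gives 2 × 2 × 2 = 8 states; arcs are labelled by the actions `Left`, `Right` and `Suck`, and a breadth-first search enumerates the states reachable from a chosen start. The encoding and function names are assumptions for illustration, and the goal set *G* is not modelled here.

```python
from collections import deque
from itertools import product

# Hypothetical encoding: state = (agent_location, dirt_left, dirt_right).
LOCATIONS = ("L", "R")
ACTIONS = ("Left", "Right", "Suck")


def all_states():
    """N: every combination of agent position and dirt status."""
    return list(product(LOCATIONS, (False, True), (False, True)))


def successor(state, action):
    """Follow the arc labelled `action` out of `state`."""
    loc, dirt_left, dirt_right = state
    if action == "Left":
        return ("L", dirt_left, dirt_right)
    if action == "Right":
        return ("R", dirt_left, dirt_right)
    # "Suck" removes the dirt from the agent's current cell only.
    if loc == "L":
        return (loc, False, dirt_right)
    return (loc, dirt_left, False)


def arcs():
    """A: the set of (state, action, next_state) triples."""
    return {(s, a, successor(s, a)) for s in all_states() for a in ACTIONS}


def reachable(start):
    """Breadth-first search over the state space from a start state."""
    seen = {start}
    queue = deque([start])
    while queue:
        s = queue.popleft()
        for a in ACTIONS:
            nxt = successor(s, a)
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


if __name__ == "__main__":
    print(len(all_states()), len(arcs()), len(reachable(("L", True, True))))
```

Because dirt never reappears in this model, starting with both cells dirty reaches all 8 states, while a fully clean start reaches only the 2 clean ones.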

Independence (Probability Theory)

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. M ...
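Formally, events $A$ and $B$ are independent when $P(A \cap B) = P(A)\,P(B)$, and mutual independence of three events additionally requires $P(A \cap B \cap C) = P(A)\,P(B)\,P(C)$. A standard example shows that the pairwise notion is strictly weaker: toss two fair coins and consider the events "first is heads", "second is heads" and "both agree". This short sketch checks both conditions by exact enumeration; the helper and event names are illustrative.

```python
from fractions import Fraction
from itertools import combinations, product

# Sample space for two fair coin tosses; the 4 outcomes are equally likely.
OMEGA = list(product("HT", repeat=2))


def prob(event):
    """Exact probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for w in OMEGA if event(w)), len(OMEGA))


def intersection(*events):
    """Predicate that holds exactly when all of the given events hold."""
    return lambda w: all(e(w) for e in events)


def first_heads(w):
    return w[0] == "H"


def second_heads(w):
    return w[1] == "H"


def flips_agree(w):
    return w[0] == w[1]


EVENTS = (first_heads, second_heads, flips_agree)

pairwise = all(
    prob(intersection(e, f)) == prob(e) * prob(f)
    for e, f in combinations(EVENTS, 2)
)
triple_product = prob(first_heads) * prob(second_heads) * prob(flips_agree)
mutual = pairwise and prob(intersection(*EVENTS)) == triple_product
print(pairwise, mutual)  # True False
```

Each pair intersects only in the outcome HH, with probability 1/4 = 1/2 · 1/2, but all three also intersect only in HH, and 1/4 differs from 1/8.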

Conditional Probability

In probability theory, conditional probability is a measure of the probability of an event occurring, given that another event (by assumption, presumption, assertion or evidence) is already known to have occurred. This method relies on event A having some relationship with another event B. In this situation, the event A can be analyzed by a conditional probability with respect to B. If the event of interest is A and the event B is known or assumed to have occurred, "the conditional probability of A given B", or "the probability of A under the condition B", is usually written as $P(A \mid B)$ or occasionally $P_B(A)$. This can also be understood as the fraction of probability B that intersects with A, or the ratio of the probabilities of both events happening to the "given" one happening (how often A occurs among the cases in which B has occurred): $P(A \mid B) = \frac{P(A \cap B)}{P(B)}$, provided $P(B) > 0$. For example, the probability that any given person has a cough on any given day ma ...
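The ratio definition can be checked by counting outcomes in a finite, equally likely sample space. This sketch (with hypothetical helper names) rolls two fair dice and computes the probability that the sum is 8, first unconditionally and then given that the first die shows an even number; exact `Fraction` arithmetic avoids rounding.

```python
from fractions import Fraction
from itertools import product

# Two fair six-sided dice; all 36 ordered outcomes are equally likely.
OUTCOMES = list(product(range(1, 7), repeat=2))


def prob(event):
    """Exact probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for w in OUTCOMES if event(w)), len(OUTCOMES))


def conditional(a, b):
    """P(A | B) = P(A and B) / P(B), defined only when P(B) > 0."""
    p_b = prob(b)
    if p_b == 0:
        raise ValueError("conditioning event has probability zero")
    return prob(lambda w: a(w) and b(w)) / p_b


def sum_is_8(w):
    return w[0] + w[1] == 8


def first_is_even(w):
    return w[0] % 2 == 0


print(prob(sum_is_8))                        # 5/36
print(conditional(sum_is_8, first_is_even))  # 1/6
```

Among the 18 outcomes with an even first die, exactly 3 ((2, 6), (4, 4), (6, 2)) sum to 8, so the conditional probability is 3/18 = 1/6.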

Memorylessness

In probability and statistics, memorylessness is a property of probability distributions. It describes situations where previous failures or elapsed time do not affect future trials or further wait time. Among discrete distributions on the nonnegative integers, only the geometric distribution is memoryless, and among continuous distributions on the nonnegative reals, only the exponential distribution is. Definition. A random variable $X$ is memoryless if $\Pr(X > t + s \mid X > s) = \Pr(X > t)$, where $\Pr$ denotes probability (computed from the probability mass function or probability density function of $X$ when $X$ is discrete or continuous, respectively) and $t$ and $s$ are nonnegative numbers. In discrete cases, the definition describes the first success in an infinite sequence of independent and identically distributed Bernoulli trials, like the number of coin flips until landing heads. In continuous situations, memorylessness models random phenomena, like the time between two earthquakes. The memorylessness property asserts that the number of previously failed trials or the elapsed time is independent of, or has no effect on, the future trials or lead time. The equality ...
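Memorylessness can be checked directly from survival functions, since $\Pr(X > t + s \mid X > s) = \Pr(X > t + s) / \Pr(X > s)$. For the exponential distribution with rate $\lambda$, $\Pr(X > t) = e^{-\lambda t}$, and for the number of Bernoulli($p$) trials up to the first success, $\Pr(X > k) = (1 - p)^k$; in both cases the ratio collapses to $\Pr(X > t)$. This sketch contrasts both with a uniform distribution, which is not memoryless; the parameter values and function names are arbitrary choices for illustration.

```python
import math


def exp_survival(t, rate):
    """P(X > t) for X ~ Exponential(rate)."""
    return math.exp(-rate * t)


def geom_survival(k, p):
    """P(X > k) for X = number of Bernoulli(p) trials up to the first success."""
    return (1 - p) ** k


def uniform_survival(t, width=10.0):
    """P(X > t) for X ~ Uniform(0, width), which is NOT memoryless."""
    return min(1.0, max(0.0, 1 - t / width))


def conditional_survival(survival, t, s):
    """P(X > t + s | X > s) computed as P(X > t + s) / P(X > s)."""
    return survival(t + s) / survival(s)


if __name__ == "__main__":
    t, s = 2.0, 3.0
    exp_sf = lambda x: exp_survival(x, 0.5)
    geom_sf = lambda k: geom_survival(k, 0.3)
    print("exponential:", conditional_survival(exp_sf, t, s), exp_sf(t))
    print("geometric:  ", conditional_survival(geom_sf, 4, 7), geom_sf(4))
    print("uniform:    ", conditional_survival(uniform_survival, t, s), uniform_survival(t))
```

For the exponential and geometric cases the two printed numbers agree up to floating-point rounding; for the uniform case, having already waited 3 units changes the chance of waiting 2 more.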

Markov Property

In probability theory and statistics, the term Markov property refers to the memoryless property of a stochastic process, which means that, given its present state, its future evolution is independent of its history. It is named after the Russian mathematician Andrey Markov. The term strong Markov property is similar to the Markov property, except that the meaning of "present" is defined in terms of a random variable known as a stopping time. The term Markov assumption is used to describe a model where the Markov property is assumed to hold, such as a hidden Markov model. A Markov random field extends this property to two or more dimensions or to random variables defined for an interconnected network of items. An example of a model for such a field is the Ising model. A discrete-time stochastic process satisfying the Markov property is known as a Markov chain. Introduction. A stochastic process has the Markov property if the conditional probability distribution of future states of the process (cond ...
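One way to see the Markov property in data is to simulate a chain and compare conditional frequencies that do and do not include an extra step of history. This sketch uses a hypothetical two-state transition matrix `P`; if the property holds, the empirical estimate of $P(X_{n+1} = j \mid X_n = i, X_{n-1} = k)$ should be close to `P[i][j]` whatever the earlier state $k$ was. The function names are illustrative.

```python
import random
from collections import Counter

# Hypothetical two-state transition matrix: P[i][j] = P(X_{n+1} = j | X_n = i).
P = [[0.9, 0.1],
     [0.5, 0.5]]


def simulate(n_steps, start=0, seed=0):
    """Simulate a path of the chain; each step looks only at the current state."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        i = path[-1]
        path.append(0 if rng.random() < P[i][0] else 1)
    return path


def estimate_given_history(path, prev, cur, nxt):
    """Empirical P(X_{n+1} = nxt | X_n = cur, X_{n-1} = prev)."""
    triples = Counter(zip(path, path[1:], path[2:]))
    num = triples[(prev, cur, nxt)]
    den = sum(triples[(prev, cur, j)] for j in (0, 1))
    return num / den


if __name__ == "__main__":
    path = simulate(200_000)
    for prev in (0, 1):
        print(prev, estimate_given_history(path, prev, 0, 1), P[0][1])
```

Because `simulate` draws each step from the row of the current state only, the estimates for both values of the previous state should agree up to sampling noise.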